Exploiting Procedural Domain Control Knowledge in State-of-the-Art Planners Jorge A. Baier

advertisement

Exploiting Procedural Domain Control Knowledge in State-of-the-Art Planners

Jorge A. Baier

Christian Fritz

Sheila A. McIlraith

Department of Computer Science, University of Toronto,

Toronto, ON M5S 3G4, CANADA

{jabaier,fritz,sheila}@cs.toronto.edu

reduces the search space but still requires a planner to backtrack to find a valid plan, it should prove beneficial to exploit

better search techniques. In this paper we explore ways in

which SOA planning techniques and existing SOA planners

can be used in conjunction with DCK, with particular focus

on procedural DCK.

As a simple example of DCK, consider the trucks domain of the 5th International Planning Competition, where

the goal is to deliver packages between certain locations using a limited capacity truck. When a package reaches its

destination it must be delivered to the customer. We can

write simple and natural procedural DCK that significantly

improves the efficiency of plan generation for instance: Repeat the following until all packages have been delivered:

Unload everything from the truck, and, if there is any package in the current location whose destination is the current

location, deliver it. After that, if any of the local packages

have destinations elsewhere, load them on the truck while

there is space. Drive to the destination of any of the loaded

packages. If there are no packages loaded on the truck, but

there remain packages at locations other than their destinations, drive to one of these locations.

Procedural DCK (as used in HTN (Nau et al. 1999) or

Golog (Levesque et al. 1997)) is action-centric. It is much

like a programming language, and often times like a plan

skeleton or template. It can (conditionally) constrain the order in which domain actions should appear in a plan. In order to exploit it for planning, we require a procedural DCK

specification language. To this end, we propose a language

based on G OLOG that includes typical programming languages constructs such as conditionals and iteration as well

as nondeterministic choice of actions in places where control

is not germane. We argue that these action-centric constructs

provide a natural language for specifying DCK for planning.

We contrast them with DCK specifications based on linear

temporal logic (LTL) which are state-centric and though still

of tremendous value, arguably provide a less natural way to

specify DCK. We specify the syntax for our language as well

as a PDDL-based semantics following Fox & Long (2003).

With a well-defined procedural DCK language in hand,

we examine how to use SOA planning techniques together

with DCK. Of course, most SOA planners are unable to

exploit DCK. As such, we present an algorithm that translates a PDDL2.1-specified ADL planning instance and as-

Abstract

Domain control knowledge (DCK) has proven effective in

improving the efficiency of plan generation by reducing the

search space for a plan. Procedural DCK is a compelling

type of DCK that supports a natural specification of the skeleton of a plan. Unfortunately, most state-of-the-art planners do

not have the machinery necessary to exploit procedural DCK.

To resolve this deficiency, we propose to compile procedural

DCK directly into PDDL2.1, thus enabling any PDDL2.1compatible planner to exploit it. The contribution of this paper is threefold. First, we propose a PDDL-based semantics for an Algol-like, procedural language that can be used

to specify DCK in planning. Second, we provide a polynomial algorithm that translates an ADL planning instance and

a DCK program, into an equivalent, program-free PDDL2.1

instance whose plans are only those that adhere to the program. Third, we argue that the resulting planning instance

is well-suited to being solved by domain-independent heuristic planners. To this end, we propose three approaches to

computing domain-independent heuristics for our translated

instances, sometimes leveraging properties of our translation

to guide search. In our experiments on familiar PDDL planning benchmarks we show that the proposed compilation of

procedural DCK can significantly speed up the performance

of a heuristic search planner. Our translators are implemented

and available on the web.

Introduction

Domain control knowledge (DCK) imposes domain-specific

constraints on the definition of a valid plan. As such, it can

be used to impose restrictions on the course of action that

achieves the goal. While DCK sometimes reflects a user’s

desire to achieve the goal a particular way, it is most often

constructed to aid in plan generation by reducing the plan

search space. Moreover, if well-crafted, DCK can eliminate those parts of the search space that necessitate backtracking. In such cases, DCK together with blind search

can yield valid plans significantly faster than state-of-theart (SOA) planners that do not exploit DCK. Indeed most

planners that exploit DCK, such as TLP LAN (Bacchus &

Kabanza 1998) or TALP LANNER (Kvarnström & Doherty

2000), do little more than blind depth-first search with cycle

checking in a DCK-pruned search space. Since most DCK

c 2007, Association for the Advancement of Artificial

Copyright Intelligence (www.aaai.org). All rights reserved.

26

Planning problems are tuples (Init , Goal , Objs P , τP ),

where Init is the initial state, Goal is a sentence with quantifiers for the goal, and Objs P and τP are defined analogously as for domains.

Semantics: Fox & Long (2003) have given a formal semantics for PDDL 2.1. In particular, they define when a sentence is true in a state and what state trace is the result of

performing a set of timed actions. A state trace intuitively

corresponds to an execution trace, and the sets of timed actions are ultimately used to refer to plans. In the ADL subset of PDDL2.1, since there are no concurrent or durative

actions, time does not play any role. Hence, state traces reduce to sequences of states and sets of timed actions reduce

to sequences of actions.

Building on Fox and Long’s semantics, we assume that

|= is defined such that s |= ϕ holds when sentence ϕ is

true in state s. Moreover, for a planning instance I, we assume there exists a relation Succ such that Succ(s, a, s ) iff

s results from performing an executable action a in s. Finally, a sequence of actions a1 · · · an is a plan for I if there

exists a sequence of states s0 · · · sn such that s0 = Init,

Succ(si , ai+1 , si+1 ) for i ∈ {0, . . . , n−1}, and sn |= Goal .

sociated procedural DCK into an equivalent, program-free

PDDL2.1 instance whose plans provably adhere to the DCK.

Any PDDL2.1-compliant planner can take such a planning

instance as input to their planner, generating a plan that adheres to the DCK.

Since they were not designed for this purpose, existing

SOA planners may not exploit techniques that optimally

leverage the DCK embedded in the planning instance. As

such, we investigate how SOA planning techniques, rather

than planners, can be used in conjunction with our compiled

DCK planning instances. In particular, we propose domainindependent search heuristics for planning with our newlygenerated planning instances. We examine three different

approaches to generating heuristics, and evaluate them on

three domains of the 5th International Planning Competition. Our results show that procedural DCK improves the

performance of SOA planners, and that our heuristics are

sometimes key to achieving good performance.

Background

A Subset of PDDL 2.1

A planning instance is a pair I = (D, P ), where D is a

domain definition and P is a problem. To simplify notation,

we assume that D and P are described in an ADL subset of

PDDL. The difference between this ADL subset and PDDL

2.1 is that no concurrent or durative actions are allowed.

Following convention, domains are tuples of finite sets

(PF , Ops, Objs D , T, τD ), where PF defines domain predicates and functions, Ops defines operators, Objs D contains

domain objects, T is a set of types, and τD ⊆ Objs D × T is

a type relation associating objects to types. An operator (or

action schema) is also a tuple O(x), t, Prec(x), Eff (x),

where O(x) is the unique operator name and x =

(x1 , . . . , xn ) is a vector of variables. Furthermore, t =

(t1 , . . . , tn ) is a vector of types. Each variable xi ranges

over objects associated with type ti . Moreover, Prec(x) is

a boolean formula with quantifiers (BQF) that specifies the

operator’s preconditions. BFQs are defined inductively as

follows. Atomic BFQs are either of the form t1 = t2 or

R(t1 , . . . , tn ), where ti (i ∈ {1, . . . , n}) is a term (i.e. either a variable, a function literal, or an object), and R is a

predicate symbol. If ϕ is a BFQ, then so is Qx-t ϕ, for a

variable x, a type symbol t, and Q ∈ {∃, ∀}. BFQs are also

formed by applying standard boolean operators over other

BFQs. Finally Eff (x) is a list of conditional effects, each of

which can be in one of the following forms:

∀y1 -t1 · · · ∀yn -tn . ϕ(

x, y ) ⇒ R(

x, y ),

x, y ) ⇒ ¬R(

x, y ),

∀y1 -t1 · · · ∀yn -tn . ϕ(

x, y ) ⇒ f (

x, y ) = obj,

∀y1 -t1 · · · ∀yn -tn . ϕ(

Domain-Independent Heuristics for Planning

In sections to follow, we investigate how procedural DCK

integrates into SOA domain-independent planners. Domainindependent heuristics are key to the performance of these

planners. Among the best known heuristic-search planners

are those that compute their heuristic by solving a relaxed

STRIPS planning instance (e.g., as done in HSP (Bonet &

Geffner 2001) and FF (Hoffmann & Nebel 2001) planners).

Such a relaxation corresponds to solving the same planning

problem but on an instance that ignores deletes (i.e. ignores

negative effects of actions).

For example, the FF heuristics for a state s is computed

by expanding a relaxed planning graph (Hoffmann & Nebel

2001) from s. We can view this graph as composed of relaxed states. A relaxed state at depth n + 1 is generated by

adding all the effects of actions that can be performed in the

relaxed state of depth n, and then by copying all facts that

appear in layer n. The graph is expanded until the goal or a

fixed point is reached. The heuristic value for s corresponds

to the number of actions in a relaxed plan for the goal, which

can be extracted in polynomial time.

Both FF-like heuristics and HSP-like heuristics can be

computed for (more expressive) ADL planning problems.

(1)

(2)

(3)

A Language for Procedural Control

In contrast to state-centric languages, that often use LTLlike logical formulae to specify properties of the states traversed during plan execution, procedural DCK specification

languages are predominantly action-centric, defining a plan

template or skeleton that dictates actions to be used at various stages of the plan.

Procedural control is specified via programs rather than

logical expressions. The specification language for these

programs incorporates desirable elements from imperative

programming languages such as iteration and conditional

where ϕ is a BFQ whose only free variables are among x

and y , R is a predicate, f is a function, and obj is an object

After performing a ground operator – or action – O(c) in a

certain state s, for all tuples of objects that may instantiate y

such that ϕ(c, y ) holds in s, effect (1) (resp. (2)) expresses

that R(c, y) becomes true (resp. false), and effect (3) expresses that f (c, y ) takes the value obj. As usual, states are

represented as finite sets of atoms (ground formulae of the

form R(c) or of the form f (c) = obj).

27

• ( load(C, P ); f ly(P, LA) | load(C, T ); drive(T, LA) ):

Either load C on the plane P or on the truck T , and

perform the right action to move the vehicle to LA.

constructs. However, to make the language more suitable to

planning applications, it also incorporates nondeterministic

constructs. These elements are key to writing flexible control since they allow programs to contain missing or open

program segments, which are filled in by a planner at the

time of plan generation. Finally, our language also incorporates property testing, achieved through so-called test actions. These actions are not real actions, in the sense that

they do not change the state of the world, rather they can

be used to specify properties of the states traversed while

executing the plan. By using test actions, our programs

can also specify properties of executions similarly to statecentric specification languages.

The rest of this section describes the syntax and semantics

of the procedural DCK specification language we propose to

use. We conclude this section by formally defining what it

means to plan under the control of such programs.

Semantics

The problem of planning for an instance I under the control

of program σ corresponds to finding a plan for I that is also

an execution of σ from the initial state. In the rest of this section we define what those legal executions are. Intuitively,

we define a formal device to check whether a sequence of

actions a corresponds to the execution of a program σ. The

device we use is a nondeterministic finite state automaton

with ε-transitions (ε-NFA).

For the sake of readability, we remind the reader that εNFAs are like standard nondeterministic automata except

that they can transition without reading any input symbol,

through the so-called ε-transitions. ε-transitions are usually

defined over a state of the automaton and a special symbol

ε, denoting the empty symbol.

An ε-NFA Aσ,I is defined for each program σ and each

planning instance I. Its alphabet is the set of operator names,

instantiated by objects of I. Its states are program configurations which have the form [σ, s], where σ is a program

and s is a planning state. Intuitively, as it reads a word of actions, it keeps track, within its state [σ, s], of the part of the

program that remains to be executed, σ, as well as the current planning state after performing the actions it has read

already, s.

Formally, Aσ,I = (Q, A, δ, qo , F ), where Q is the set of

program configurations, the alphabet A is a set of domain

actions, the transition function is δ : Q × (A ∪ {ε}) → 2Q ,

q0 = [σ, Init ], and F is the set of final states. The transition

function δ is defined as follows for atomic programs.

Syntax

The language we propose is based on G OLOG (Levesque et

al. 1997), a robot programming language developed by the

cognitive robotics community. In contrast to G OLOG, our

language supports specification of types for program variables, but does not support procedures.

Programs are constructed using the implicit language for

actions and boolean formulae defined by a particular planning instance I. Additionally, a program may refer to variables drawn from a set of program variables V . This set

V will contain variables that are used for nondeterministic

choices of arguments. In what follows, we assume O denotes the set of operator names from Ops, fully instantiated

with objects defined in I or elements of V .

The set of programs over a planning instance I and a set

of program variables V can be defined by induction. In what

follows, assume φ is a boolean formula with quantifiers on

the language of I, possibly including terms in the set of program variables V . Atomic programs are as follows.

1. nil : Represents the empty program.

2. o: Is a single operator instance, where o ∈ O.

3. any: A keyword denoting “any action”.

4. φ?: A test action.

If σ1 , σ2 and σ are programs, so are the following:

1. (σ1 ; σ2 ): A sequence of programs.

2. if φ then σ1 else σ2 : A conditional sentence.

3. while φ do σ: A while-loop.

4. σ ∗ : A nondeterministic iteration.

5. (σ1 |σ2 ): Nondeterministic choice between two programs.

6. π(x-t) σ: Nondeterministic choice of variable x ∈ V of

type t ∈ T .

Before we formally define the semantics of the language,

we show some examples that give a sense of the language’s

expressiveness and semantics.

• while ¬clear(B) do π(b-block ) putOnT able(b): while

B is not clear choose any b of type block and put it on the

table.

• any ∗ ; loaded(A, T ruck)?: Perform any sequence of actions until A is loaded in T ruck. Plans under this control

are such that loaded(A, T ruck) holds in the final state.

δ([a, s], a) = {[nil , s ]} iff Succ(s, a, s ), s.t. a ∈ A,

δ([any, s], a) = {[nil, s ]} iff Succ(s, a, s ), s.t. a ∈ A,

δ([φ?, s], ε) = {[nil , s]} iff s |= φ.

(4)

(5)

(6)

Equations 4 and 5 dictate that actions in programs change

the state according to the Succ relation. Finally, Eq. 6 defines transitions for φ? when φ is a sentence (i.e., a formula

with no program variables). It expresses that a transition can

only be carried out if the plan state so far satisfies φ.

Now we define δ for non-atomic programs. In the definitions below, assume that a ∈ A ∪ {ε}, and that σ1 and σ2 are

subprograms of σ , where occurring elements in V may have

been instantiated by any object in the planning instance I .

δ([(σ1 ; σ2 ), s], a) =

[

{[(σ1 ; σ2 ), s ]} if σ1 = nil ,

(7)

,s ]∈δ([σ ,s],a)

[σ1

1

δ([(nil; σ2 ), s], a) = δ([σ2 , s], a),

(

δ([if φ then σ1 else σ2 , s], a) =

(8)

δ([σ1 , s], a) if s |= φ,

δ([σ2 , s], a) if s |= φ,

δ([(σ1 |σ2 ), s], a) = δ([σ1 , s], a) ∪ δ([σ2 , s], a),

(

{[nil , s]}

if s |= φ and a = ε,

δ([while φ do σ1 , s], a) =

δ([σ1 ; while φ do σ1 , s], a) if s |= φ,

δ([σ1∗ , s], a) = δ([(σ1 ; σ1∗ ), s], a) if a = ε

28

(9)

δ([σ1∗ , s], ε) = δ([(σ1 ; σ1∗ ), s], ε) ∪ {[nil , s]},

[

δ([σ1 |x/o , s], a).

δ([π(x-t) σ1 , s], a) =

(10)

test(¬φ)

(11)

1

(o,t)∈τD ∪τP

test(φ)

test(ψ)

test(¬ψ)

where σ1 |x/o denotes the program resulting from replacing

any occurrence of x in σ1 by o. For space reasons we only

explain two of them. First, a transition on a sequence corresponds to transitioning on its first component first (Eq. 7),

unless the first component is already the empty program, in

which case we transition on the second component (Eq. 8).

On the other hand, a transition of σ1∗ represents two alternatives: executing σ1 at least once, or stopping the execution

of σ1∗ , with the remaining program nil (Eq. 9, 10).

To end the definition of Aσ,I , Q corresponds precisely to

the program configurations [σ , s] where σ is either nil or

a subprogram of σ such that program variables may have

been replaced by objects in I, and s is any possible planning state. Moreover, δ is assumed empty for elements of its

domain not explicitly mentioned above. Finally, the set of

accepting states is F = {[nil , s] | s is any state over I}, i.e.,

those where no program remains in execution. We can now

formally define an execution of a program.

Definition 1 (Execution of a program). A sequence of actions a1 · · · an is an execution of σ in I if a1 · · · an is accepted by Aσ,I .

The following remark illustrates how the automaton transitions in order to accept executions of a program.

Remark 1. Let σ = (if ϕ then a else b; c), and suppose

that Init is the initial state of planning instance I. Assume

furthermore that a, b, and c are always possible. Then Aσ,I

accepts ac if Init |= ϕ.

Proof. Suppose q a q denotes that Aσ,I can transition

from q to q by reading symbol a. Then if Init |= ϕ observe

that [σ, Init ] a [nil ; c, s2 ] c [nil , s3 ], for some planning

states s2 and s3 .

Now that we have defined those sequences of actions corresponding to the execution of our program, we are ready to

define the notion of planning under procedural control.

Definition 2 (Planning under procedural control). A sequence of action a is a plan for instance I under the control

of program σ if a is a plan in I and is an execution of σ in I.

3

2

5

a

4 noop

b

noop

6

noop

8

7

c

9

if

while

sequence

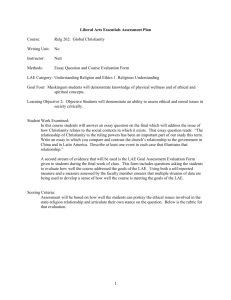

Figure 1: Automaton for while φ do (if ψ then a else b); c.

ple program shown as a finite state automaton. Intuitively,

the operators we generate in the compilation define the transitions of this automaton. Their preconditions and effects

condition on and change the automaton’s state.

The translation is defined inductively by a function

C(σ, n, E) which takes as input a program σ, an integer n, and a list of program variables with types E =

[e1 -t1 , . . . , ek -tk ], and outputs a tuple (L, L , n ) with L a

list of domain-independent operator definitions, L a list of

domain-dependent operator definitions, and n another integer. Intuitively, E contains the program variables whose

scope includes (sub-)program σ. Moreover, L contains restrictions on the applicability of operators defined in D, and

L contains additional control operators needed to enforce

the search control defined in σ. Integers n and n abstractly

denote the program state before and after execution of σ.

We use two auxiliary functions. Cnoop(n1 , n2 ) produces

an operator definition that allows a transition from state n1

to n2 . Similarly Ctest(φ, n1 , n2 , E) defines the same transition, but conditioned on φ. They are defined as:1

Cnoop(n1 , n2 ) = noop n1 n2 (), [ ], state = sn1 , [state = sn2 ]

x), t, Prec(

x), Eff (

x) with

Ctest(φ, n1 , n2 , E) = test n1 n2 (

= e1 -t1 , . . . , em -tm ,

(e-t,

x) = mentions(φ, E), e-t

`

Prec(

x) = state = sn1 ∧ φ[ei /xi ]m

i=1 ∧

^m

´

bound(ei ) → map(ei , xi ) ,

i=1

Eff (

x) = [state = sn2 ] · [bound(ei ), map(ei , xi )]m

i=1 .

of program

Function mentions(φ, E) returns a vector e-t

variables and types that occur in φ, and a vector x of new

variables of the same length. Bookkeeping predicates serve

the following purposes: state denotes the state of the automaton; bound(e) expresses that the program variable e

has been bound to an object of the domain; map(e, o) states

that this object is o. Thus, the implication bound(ei ) →

map(ei , xi ) forces parameter xi to take the value to which

ei is bound, but has no effect if ei is not bound.

Consider the inner box of Figure 1, depicting the compilation of the if statement. It is defined as:

Compiling Control into the Action Theory

This section describes a translation function that, given a

program σ in the DCK language defined above together with

a PDDL2.1 domain specification D, outputs a new PDDL2.1

domain specification Dσ and problem specification Pσ . The

two resulting specifications can then be combined with any

problem P defined over D, creating a new planning instance

that embeds the control given by σ, i.e. that is such that only

action sequences that are executions of σ are possible. This

enables any PDDL2.1-compliant planner to exploit search

control specified by any program.

To account for the state of execution of program σ and

to describe legal transitions in that program, we introduce

a few bookkeeping predicates and a few additional actions.

Figure 1 graphically illustrates the translation of an exam-

C(if φ then σ1 else σ2 , n, E) = (L1 · L2 · X, L1 · L2 , n3 )

with (L1 , L1 , n1 ) = C(σ1 , n + 1, E),

(L2 , L2 , n2 ) = C(σ2 , n1 + 1, E), n3 = n2 + 1,

X = [ Ctest(φ, n, n + 1, E), Ctest(¬φ, n, n1 + 1, E),

Cnoop(n1 , n3 ), Cnoop(n2 , n3 ) ]

and in the example we have φ = ψ, n = 2, n1 = 4, n2 =

6, n3 = 7, σ1 = a, and σ2 = b.

1

29

We use A · B to denote the concatenation of lists A and B.

The inductive definitions for other programs σ are:

As is shown below, planning in the generated instance

Iσ = (Dσ , Pσ ) is equivalent to planning for the original

instance I = (D, P ) under the control of program σ, except that plans on Iσ contain actions that were not part of

the original domain definition (test, noop, and free).

Theorem 1 (Correctness). Let Filter(a, D) denote the sequence that remains when removing from a any action not

defined in D. If a is a plan for instance Iσ = (Dσ , Pσ ) then

Filter(a, D) is a plan for I = (D, P ) under the control of σ.

Conversely, if a is a plan for I under the control of σ, there

exists a plan a for Iσ , such that a = Filter(a , D).

Proof. See (Baier, Fritz, & McIlraith 2007).

Now we turn our attention to analyzing the succinctness

of the output planning instance relative to the original instance and control program. Assume we define the size of a

program as the number of programming constructs and actions it contains. Then we obtain the following result.

Theorem 2 (Succinctness). If σ is a program of size m,

and k is the maximal nesting depth of π(x-t) statements in

σ, then |Iσ | (the overall size of Iσ ) is O(km).

Proof. See (Baier, Fritz, & McIlraith 2007).

The encoding of programs in PDDL2.1 is, hence, in worst

case O(k) times bigger than the program itself. It is also

easy to show that the translation is done in time linear in the

size of the program, since, by definition, every occurrence

of a program construct is only dealt with once.

C(nil , n, E) = ([ ], [ ], n)

x), Eff (

x)], n + 1) with

C(O(r), n, E) = ([ ], [O(

x), t, Prec (

O(

x), t, Prec(

x), Eff (

x) ∈ Ops, r = r1 , . . . , rm ,

x) = (state = sn ∧

Prec (

^

bound(ri ) → map(ri , xi ) ∧

i s.t. ri ∈E

^

xi = ri ),

i s.t. ri ∈E

Eff (

x) = [ state = sn ⇒ state = sn+1 ] ·

[state = sn ⇒ bound(ri ) ∧ map(ri , xi )]i s.t. ri ∈E

C(φ?, n, E) = ( [Ctest(φ, n, n + 1, E)], [ ], n + 1)

C((σ1 ; σ2 ), n, E) = (L1 · L2 , L1 · L2 , n2 ) with

(L1 , L1 , n1 ) = C(σ1 , n, E), (L2 , L2 , n2 ) = C(σ2 , n1 , E)

C((σ1 |σ2 ), n, E) = (L1 · L2 · X, L1 · L2 , n2 + 1) with

(L1 , L1 , n1 ) = C(σ1 , n + 1, E),

(L2 , L2 , n2 ) = C(σ2 , n1 + 1, E),

X = [ Cnoop(n, n + 1), Cnoop(n, n1 + 1),

Cnoop(n1 , n2 + 1), Cnoop(n2 , n2 + 1) ]

C(while φ do σ, n, E) = (L · X, L , n1 + 1) with

(L, L , n1 ) = C(σ, n + 1, E), X = [Ctest(φ, n, n + 1, E),

Ctest(¬φ, n, n1 + 1, E), Cnoop(n1 , n)]

C(σ ∗ , n, E) = (L · [Cnoop(n, n2 ), Cnoop(n1 , n)], L , n2 )

with (L, L , n1 ) = C(σ, n, E), n2 = n1 + 1

C(π(x-t, σ), n, E) = (L · X, L , n1 + 1) with

(L, L , n1 ) = C(σ, n, E · [x-t]),

X = [f ree n1 (x), t, state = sn1 ,

[state = sn1 +1 , ¬bound(x), ∀y.¬map(x, y)] ]

Exploiting DCK in SOA Heuristic Planners

Our objective in translating procedural DCK to PDDL2.1

was to enable any PDDL2.1-compliant SOA planner to

seamlessly exploit our DCK. In this section, we investigate

ways to best leverage our translated domains using domainindependent heuristic search planners.

There are several compelling reasons for wanting to apply domain-independent heuristic search to these problems.

Procedural DCK can take many forms. Often, it will provide explicit actions for some parts of a sequential plan, but

not for others. In such cases, it will contain unconstrained

fragments (i.e., fragments with nondeterministic choices of

actions) where the designer expects the planner to figure

out the best choice of actions to realize a sub-task. In

the absence of domain-specific guidance for these unconstrained fragments, it is natural to consider using a domainindependent heuristic to guide the search.

In other cases, it is the choice of action arguments that

must be optimized. In particular, fragments of DCK may

collectively impose global constraints on action argument

choices that need to be enforced by the planner. As such,

the planner needs to be aware of the procedural control in

order to avoid backtracking. By way of illustration, consider a travel planning domain comprising two tasks “buy

air ticket” followed by “book hotel”. Each DCK fragment

restricts the actions that can be used, but leaves the choice

of arguments to the planner. Further suppose that budget is

limited. We would like our planner to realize that actions

used to complete the first part should save enough money to

complete the second task. The ability to do such lookahead

can be achieved via domain-independent heuristic search.

The atomic program any is handled by macro expansion to

above defined constructs.

As mentioned above, given program σ, the return value

(L, L , nfinal ) of C(σ, 0, [ ]) is such that L contains new operators for encoding transitions in the automaton, whereas L

contains restrictions on the applicability of the original operators of the domain. Now we are ready to integrate these new

operators and restrictions with the original domain specification D to produce the new domain specification Dσ .

Dσ contains a constrained version of each operator O(x) of the original domain D.

Let

n

[O(x), t, Prec i (x), Eff i (x)]i=1 be the sublist of L

that contains additional conditions for operator O(x). The

operator replacing O(x) in Dσ is defined as:

O (

x), t, Prec(

x) ∧

_n

Prec i (

x), Eff (

x) ∪

i=1

[n

Eff i (

x)

i=1

Additionally, Dσ contains all operator definitions in L. Objects in Dσ are the same as those in D, plus a few new ones

to represent the program variables and the automaton’s states

si ( 0 ≤ i ≤ nfinal ). Finally Dσ inherits all predicates in D

plus bound(x), map(x, y), and function state.

The translation, up to this point, is problem-independent;

the problem specification Pσ is defined as follows. Given

any predefined problem P over D, Pσ is like P except that

its initial state contains condition state = s0 , and its goal

contains state = snfinal . Those conditions ensure that the

program must be executed to completion.

30

test(not φ)

In the rest of the section we propose three ways in which

one can leverage our translated domains using a domainindependent heuristic planner. These three techniques differ

predominantly in the operands they consider in computing

heuristics.

Direct Use of Translation (Simple) As the name suggests,

a simple way to provide heuristic guidance while enforcing

program awareness is to use our translated domain directly

with a domain-independent heuristic planner. In short, take

the original domain instance I and control σ, and use the

resulting instance Iσ with any heuristic planner.

Unfortunately, when exploiting a relaxed graph to compute heuristics, two issues arise. First, since both the

map and bound predicates are relaxed, whatever value

is already assigned to a variable, will remain assigned

to that variable. This can cause a problem with itdef

erative control. For example, assume program σL =

while φ do π(c-crate) unload(c, T ), is intended for a domain where crates can be only unloaded sequentially from a

truck. While expanding the relaxed plan, as soon as variable

c is bound to some value, action unload can only take that

value as argument. This leads the heuristic to regard most

instances as unsolvable, returning misleading estimates.

The second issue is one of efficiency. Since fluent state

is also relaxed, the benefits of the reduced branching factor

induced by the programs is lost. This could slow down the

computation of the heuristic significantly.

Modified Program Structure (H-ops) The H-ops approach

addresses the two issues potentially affecting the computation of the Simple heuristic. It is designed to be used with

planners that employ relaxed planning graphs for heuristic computation. The input to the planner in this case is a

pair (Iσ , HOps), where Iσ = (Dσ , Pσ ) is the translated instance, and HOps is an additional set of planning operators.

The planner uses the operators in Dσ to generate successor states while searching. However, when computing the

heuristic for a state s it uses the operators in HOps.

Additionally, function state and predicates bound and

map are not relaxed. This means that when computing the

relaxed graph we actually delete their instances from the relaxed states. As usual, deletes are processed before adds.

The expansion of the graph is stopped if the goal or a fixed

point is reached. Finally, a relaxed plan is extracted in the

usual way, and its length is reported as the heuristic value.

The un-relaxing of state, bound and map addresses the

problem of reflecting the reduced branching factor provided by the control program while computing the heuristics. However, it introduces other problems. Returning to the

σL program defined above, since state is now un-relaxed,

the relaxed graph expansion cannot escape from the loop,

because under the relaxed planning semantics, as soon as φ

is true, it remains true forever. A similar issue occurs with

the nondeterministic iteration.

This issue is addressed by the HOps operators. Due to

lack of space we only explain how these operators work in

the case of the while loop. To avoid staying in the loop

forever, the loop will be exited when actions in it are no

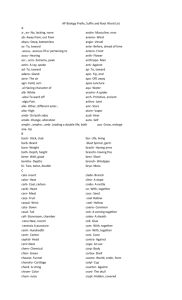

longer adding effects. Figure 2 provides a graphical repre-

1

test(φ)

2

test(fp ≤ 5)

7

test(fp > 5)

8

Figure 2: H-ops translation of while loops. While computing the heuristics, pseudo-fluent fp is increased each time

no new effect is added into the relaxed state, and it is set

to 0 otherwise. The loop can be exited if the last five (7-2)

actions performed didn’t add any new effect.

sentation. An important detail to note is that the loop is not

entered when φ is not found true in the relaxed state. (The

expression not φ should be understood as negation as failure.) Moreover, the pseudo-fluent f p is an internal variable

of the planner that acts as a real fluent for the HOps.

Since loops are guaranteed to be exited, the computation

of H-ops is guaranteed to finish because at some relaxed

state the final state of the automaton will be reached. At this

point, if the goal is not true, no operators will be possible

and a fixed point will be produced immediately.

A Program-Unaware Approach (Basic) Our programunaware approach (Basic) completely ignores the program

when computing heuristics. Here, the input to the planner

is a pair (Iσ , Ops), where Iσ is the translated instance, and

Ops are the original domain operators. The Ops operators

are used exclusively to compute the heuristic. Hence, Basic’s output is not at all influenced by the control program.

Although Basic is program unaware, it can sometimes

provide good estimates, as we see in the following section.

This is especially true when the DCK characterizes a solution that would be naturally found by the planner if no control were used. It is also relatively fast to compute.

Implementation and Experiments

Our implementation2 takes a PDDL planning instance and

a DCK program and generates a new PDDL planning instance. It will also generate appropriate output for the Basic

and H-ops heuristics, which require a different set of operators. Thus, the resulting PDDL instance may contain definitions for operators that are used only for heuristic computation using the :h-action keyword, whose syntax is

analogous to the PDDL keyword :action.

Our planner is a modified version of TLP LAN, which

does a best-first search using an FF-style heuristic. It is capable of reading the PDDL with extended operators.

We performed our experiments on the trucks, storage and

rovers domains (30 instances each). We wrote DCK for

these domains. For lack of space, we do not show the DCK

in detail, however for trucks we used the control shown as

an example in the Introduction. We ran our three heuristic approaches (Basic, H-ops, and Simple) and cycle-free,

depth-first search on the translated instance (blind). Additionally, we ran the original instance of the program (DCKfree) using the domain-independent heuristics provided by

the planner (original). Table 1 shows various statistics on

the performance of the approaches. Furthermore, Fig. 3

shows times for the different heuristic approaches.

2

31

Available at www.cs.toronto.edu/kr/systems

Trucks

Rovers

Storage

#n

#s

min

avg

max

#n

#s

min

avg

max

#n

#s

min

avg

max

original

1

9

1

1.1

1.2

1

10

1

2.13

4.59

1

18

1

4.4

21.11

Simple

0.31

9

1

1.03

1.2

0.74

19

1

1.03

1.2

1.2

18

1

1.05

1.29

Basic

0.41

15

1

1.02

1.07

1.06

28

1

1.05

1.3

1.13

20

1

1.01

1.16

H-ops

0.26

14

1

1.04

1.2

1.06

22

1

1.21

1.7

0.76

21

1

1.07

1.48

blind

19.85

3

1

1.04

1.07

1.62

30

1

1.53

2.14

1.45

20

1

1.62

2.11

ning instances. Each contribution is of value in and of itself.

The language can be used without the compilation, and the

compiled PDDL2.1 instance can be input to any PDDL2.1compliant SOA planner, not just the domain-independent

heuristic search planner that we propose. Our experiments

show that procedural DCK improves the performance of

SOA planners, and that our heuristics are sometimes key to

achieving good performance.

Much of the previous work on DCK in planning has exploited state-centric specification languages. In particular,

TLP LAN (Bacchus & Kabanza 1998) and TALP LANNER

(Kvarnström & Doherty 2000) employ declarative, statecentric, temporal languages based on LTL to specify DCK.

Such languages define necessary properties of states over

fragments of a valid plan. We argue that they could be less

natural than our procedural specification language.

Though not described as DCK specification languages

there are a number of languages from the agent programming and/or model-based programming communities that

are related to procedural control. Among these are E AG LE,

a goal language designed to also express intentionality (dal

Lago, Pistore, & Traverso 2002). Moreover, G OLOG is a

procedural language proposed as an alternative to planning

by the cognitive robotics community. It essentially constrains the possible space of actions that could be performed

by the programmed agent allowing non-determinism. Our

DCK language can be viewed as a version of G OLOG.

Further, languages such as the Reactive Model-Based Programming Language (RMPL) (Kim, Williams, & Abramson 2001) – a procedural language that combines ideas from

constraint-based modeling with reactive programming constructs – also share expressive power and goals with procedural DCK. Finally, Hierarchical Task Network (HTN) specification languages such as those used in SHOP (Nau et al.

1999) provide domain-dependent hierarchical task decompositions together with partial order constraints, not easily

describable in our language.

A focus of our work was to exploit SOA planners and

planning techniques with our procedural DCK. In contrast,

well-known DCK-enabled planners such as TLP LAN and

TALP LANNER use DCK to prune the search space at each

step of the plan and then employ blind depth-first cycle-free

search to try to reach the goal. Unfortunately, pruning is

only possible for maintenance-style DCK and there is no

way to plan towards achieving other types of DCK as there

is with the heuristic search techniques proposed here.

Similarly, G OLOG interpreters, while exploiting procedural DCK, have traditionally employed blind search to instantiate nondeterministic fragments of a G OLOG program.

Most recently, Claßen et al. (2007) have proposed to integrate an incremental G OLOG interpreter with a SOA planner. Their motivation is similar to ours, but there is a subtle difference: they are interested in combining agent programming and efficient planning. The integration works

by allowing a G OLOG program to make explicit calls to a

SOA planner to achieve particular conditions identified by

the user. The actual planning, however, is not controlled in

any way. Also, since the G OLOG interpreter executes the

returned plan immediately without further lookahead, back-

Table 1: Comparison between different approaches to planning

(with DCK). #n is the average factor of expanded nodes to the

number of nodes expanded by original (i.e., #n=0.26 means the

approach expanded 0.26 times the number of nodes expanded by

original). #s is the number of problems solved by each approach.

avg denotes the average ratio of the plan length to the shortest plan

found by any of the approaches (i.e., avg =1.50 means that on average, on each instance, plans where 50% longer than the shortest

plan found for that instance). min and max are defined analogously.

Not surprisingly, our data confirms that DCK helps to

improve the performance of the planner, solving more instances across all domains. In some domains (i.e. storage

and rovers) blind depth-first cycle-free search is sufficient

for solving most of the instances. However, quality of solutions (plan length) is poor compared to the heuristic approaches. In trucks, DCK is only effective in conjunction

with heuristics; blind search can solve very few instances.

We observe that H-ops is the most informative (expands

fewer nodes). This fact does not pay off in time in the experiments shown in the table. Nevertheless, it is easy to construct instances where the H-ops performs better than Basic.

This happens when the DCK control restricts the space of

valid plans (i.e., prunes out valid plans). We have experimented with various instances of the storage domain, where

we restrict the plan to use only one hoist. In some of these

cases H-ops outperforms Basic by orders of magnitude.

Summary and Related Work

DCK can be used to constrain the set of valid plans and

has proven an effective tool in reducing the time required

to generate a plan. Nevertheless, many of the planners that

exploit it use arguably less natural state-centric DCK specification languages, and their planners use blind search. In

this paper we examined the problem of exploiting procedural DCK with SOA planners. Our goal was to specify rich

DCK naturally in the form of a program template and to

exploit SOA planning techniques to actively plan towards

the achievement of this DCK. To this end we made three

contributions: provision of a procedural DCK language syntax and semantics; a polynomial-time algorithm to compile

DCK and a planning instance into a PDDL2.1 planning instance that could be input to any PDDL2.1-compliant planner; and finally a set of techniques for exploiting domainindependent heuristic search with our translated DCK plan-

32

100

100

100

10

original

Basic

1

10

original

Basic

1

H-ops

0.1

0.1

Simple

0

5

10

15

problem

20

25

(a) rovers

10

original

Basic

1

H-ops

H-ops

0.1

Simple

blind

0.01

seconds

1000

seconds

1000

seconds

1000

Simple

blind

30

0.01

0

5

10

15

problem

20

(b) storage

25

blind

30

0.01

0

5

10

15

problem

20

25

(c) trucks

Figure 3: Running times of the three heuristics and the original instance; logarithmic scale; run on an Intel Xeon, 3.6GHz, 2GB RAM

tracking does not extend over the boundary between G OLOG

and the planner. As such, each fragment of nondeterminism

within a program is treated independently, so that actions

selected locally are not informed by the constraints of later

fragments as they are with the approach that we propose.

Their work, which focuses on the semantics of ADL in the

situation calculus, is hence orthogonal to ours.

Finally, there is related work that compiles DCK into standard planning domains. Baier & McIlraith (2006), Cresswell & Coddington (2004), Edelkamp (2006), and Rintanen (2000), propose to compile different versions of LTLbased DCK into PDDL/ADL planning domains. The main

drawback of these approaches is that translating full LTL

into ADL/PDDL is worst-case exponential in the size of the

control formula whereas our compilation produces an addition to the original PDDL instance that is linear in the size

of the DCK program. Son et al. (2006) further show how

HTN, LTL, and G OLOG-like DCK can be encoded into planning instances that can be solved using answer set solvers.

Nevertheless, they do not provide translations that can be integrated with PDDL-compliant SOA planners, nor do they

propose any heuristic approaches to planning with them.

Acknowledgments We are grateful to Yves Lespérance

and the ICAPS anonymous reviewers for their feedback.

This research was funded by Natural Sciences and Engineering Research Council of Canada (NSERC) and the Ontario

Ministry of Research and Innovation (MRI).

of the 20th Int’l Joint Conference on Artificial Intelligence

(IJCAI-07), 1846–1851.

Cresswell, S., and Coddington, A. M. 2004. Compilation

of LTL goal formulas into PDDL. In Proc. of the 16th

European Conference on Artificial Intelligence (ECAI-04),

985–986.

dal Lago, U.; Pistore, M.; and Traverso, P. 2002. Planning

with a language for extended goals. In Proc. of AAAI/IAAI,

447–454.

Edelkamp, S. 2006. On the compilation of plan constraints and preferences. In Proc. of the 16th Int’l Conference on Automated Planning and Scheduling (ICAPS-06),

374–377.

Fox, M., and Long, D. 2003. PDDL2.1: An extension to

PDDL for expressing temporal planning domains. Journal

of Artificial Intelligence Research 20:61–124.

Hoffmann, J., and Nebel, B. 2001. The FF planning system: Fast plan generation through heuristic search. Journal

of Artificial Intelligence Research 14:253–302.

Kim, P.; Williams, B. C.; and Abramson, M. 2001. Executing reactive, model-based programs through graph-based

temporal planning. In Proc. of the 17th Int’l Joint Conference on Artificial Intelligence (IJCAI-01), 487–493.

Kvarnström, J., and Doherty, P. 2000. TALPlanner: A

temporal logic based forward chaining planner. Annals of

Mathematics and Artificial Intelligence 30(1-4):119–169.

Levesque, H.; Reiter, R.; Lespérance, Y.; Lin, F.; and

Scherl, R. B. 1997. GOLOG: A logic programming language for dynamic domains. Journal of Logic Programming 31(1-3):59–83.

Nau, D. S.; Cao, Y.; Lotem, A.; and Muñoz-Avila, H. 1999.

SHOP: Simple hierarchical ordered planner. In Proc. of

the 16th Int’l Joint Conference on Artificial Intelligence

(IJCAI-99), 968–975.

Rintanen, J. 2000. Incorporation of temporal logic control

into plan operators. In Horn, W., ed., Proc. of the 14th

European Conference on Artificial Intelligence (ECAI-00),

526–530. Berlin, Germany: IOS Press.

Son, T. C.; Baral, C.; Nam, T. H.; and McIlraith,

S. A. 2006. Domain-dependent knowledge in answer

set planning. ACM Transactions on Computational Logic

7(4):613–657.

References

Bacchus, F., and Kabanza, F. 1998. Planning for temporally extended goals. Annals of Mathematics and Artificial

Intelligence 22(1-2):5–27.

Baier, J. A., and McIlraith, S. A. 2006. Planning with firstorder temporally extended goals using heuristic search. In

Proc. of the 21st National Conference on Artificial Intelligence (AAAI-06), 788–795.

Baier, J.; Fritz, C.; and McIlraith, S. 2007. Exploiting procedural domain control knowledge in state-of-the-art planners (extended version). Technical Report CSRG-565, University of Toronto.

Bonet, B., and Geffner, H. 2001. Planning as heuristic

search. Artificial Intelligence 129(1-2):5–33.

Claßen, J.; Eyerich, P.; Lakemeyer, G.; and Nebel, B. 2007.

Towards an integration of Golog and planning. In Proc.

33