From: AAAI Technical Report WS-97-03. Compilation copyright © 1997, AAAI (www.aaai.org). All rights reserved.

Learning Problem Solving Control in Cooperative Multi-Agent Systems*

M V Nagendra Prasad and Victor R Lesser

Department of Computer Science

University of Massachusetts, Amherst, MA 01003.

{nagendra,lesser}@cs.umass.edu

Abstract

The work presented here deals with ways to improve problem solving control skills of cooperative agents. We propose situation-specific learning as a way to learn cooperative control knowledge in complex multi-agent systems. We demonstrate the power of situation-specific learning in the context of dynamic choice of a coordination strategy based on the problem solving situation. We present a learning system, called COLLAGE, that uses meta-level information in the form of abstract characterization of the coordination problem instance to learn to choose the appropriate coordination strategy from among a class of strategies.

*This material is based upon work supported by the National Science Foundation under Grant Nos. IRI-9523419. The content of this paper does not necessarily reflect the position or the policy of the Government, and no official endorsement should be inferred.

Introduction

The work presented here deals with ways to improve problem solving control skills of cooperative agents. Problem solving control is the process by which an agent organizes its computation. An agent in a MAS faces control uncertainties due to its partial local view of the problem solving states of the other agents in the system. Uncertainties about progress of problem solving in other agents, characteristics of non-local interacting sub-problems, expectations of non-local problem-solving activity, and generated partial results can lead to global incoherence and degradation in system performance if they are not managed effectively through cooperation. Effective cooperation in such systems requires that the global problem-solving state influence the local control decisions made by an agent. We call such an influence cooperative control (Lesser 1991).

Cooperation among agents can be explicit or implicit. In explicitly cooperative systems, the agents interact and exchange information or perform actions so as to benefit other agents. On the other hand, implicitly cooperative agents perform actions that are a part of their own goal-seeking process but these actions affect the other agents in beneficial ways (Mataric 1993).

Agents exchange information through communication. An agent in a MAS needs to communicate with other agents to acquire a view of the non-local problem solving so as to make local decisions that are influenced by more global considerations. The agents perform direct communication or indirect communication as a part of their process of interaction with other agents. Direct communication involves intentionality entailing a sender and one or more receivers. The communication is purposeful and is aimed at particular members of the agent set. Much of the explicitly cooperative activity involves direct communication. In contrast, indirect communication is achieved through observation of the behavior of the other agents and their effects on the environment (Mataric 1993). Indirect communication involves cues that are "simply by-products of behaviors performed for reasons other than communication" (Seeley 1989).

In this paper, we deal with an explicitly cooperative, directly communicating system. In such systems, the local problem solving control of an agent can interact in complex and intricate ways with the problem solving control of other agents. In these systems, an agent cannot make effective control decisions based on purely local problem solving state. A given local problem solving state can map to a multitude of global problem solving states. An agent with a purely local view of the problem solving situation cannot learn effective control decisions that may have global implications, due to the uncertainty about the overall state of the system. This gives rise to the need for learning more globally situated control knowledge. On the other hand, an agent cannot utilize the entire global problem solving state even if other agents are willing to communicate all the necessary information, because of the limitations on its computational resources, representational heterogeneity and phenomena like distraction. If an agent A provides another agent B with an enormous amount of problem solving state information, then agent B has to divert its computational resources away from local problem solving towards the task of assimilating this information. This can have drastic consequences in time constrained or resource bounded domains. Moreover, the phenomenon of distraction can become a serious problem. The incorrect or irrelevant information provided by another agent with weakly constrained knowledge could lead the receiving agent's computations along unproductive directions (Lesser 1991).

This brings us to the importance of communicating only relevant information at suitable abstractions to other agents. We call such information a situation. An agent needs to associate appropriate views of the global situation with the knowledge learned about effective control decisions. We call this form of knowledge situation-specific control. In complex multi-agent systems, the knowledge engineering involved in providing the agents with effective control strategies is a formidable and perhaps even impossible task due to the dynamic nature of the interactions among agents and between the environment and agents. Thus, we appeal to machine learning techniques to learn situation-specific problem solving control in such systems. Learning can involve acquiring strategies for negotiation or coordination, detecting coordination relationships, and incrementally constructing models of other agents and the task environment.

In this paper, we will be dealing with learning coordination as an illustration of learning situation-specific control. Coordination is the act of managing interdependencies in a multi-agent system (Decker & Lesser 1995). The agents learn to dynamically choose the appropriate coordination strategy in different coordination problem instances. We empirically demonstrate that even for a narrow class of agent activities, learning to choose an appropriate coordination strategy based on meta-level characterization of the global problem solving state outperforms using any single coordination strategy across all problem instances. The broad insights presented here are more generally applicable to learning problem solving control in complex cooperative multi-agent systems. Our past work has dealt with learning situation-specific organizational roles in a multi-agent parametric design system (Nagendra Prasad, Lesser, & Lander 1996b) and learning non-local requirements in a negotiated search process (Nagendra Prasad, Lesser, & Lander 1996a).

Related Work

Much of the literature in multi-agent learning relies on reinforcement learning and classifier systems as learning algorithms. In Sen, Sekaran and Hale (Sen, Sekaran, & Hale 1994) and Crites and Barto (Crites & Barto 1996), the agents do not communicate with one another and an agent treats the other agents as a part of the environment. Weiss (Weiss 1994) uses classifier systems for learning appropriate multi-agent hierarchical organization structuring relationships. In Tan (Tan 1993), the agents share perception information to overcome perceptual limitations or communicate policy functions. In Sandholm and Crites (Sandholm & Crites 1995) the agents are self-interested and an agent is not free to ask for any kind of information from the other agents. The MAS that this paper deals with contains complex cooperative agents (each agent is a sophisticated problem solver) and an agent's local problem solving control interacts with that of the other agents in intricate ways. In our work, rather than treating other agents as a part of the environment and learning in the presence of increased uncertainty, an agent tries to resolve the uncertainty about other agents' activities, to the extent possible, by communicating meta-level information (or situations). There is still the environmental uncertainty that the agents cannot do much about. In cooperative systems, an agent can "ask" other agents for any information that it deems relevant to appropriately situate its learned local coordination knowledge. Agents sharing perceptual information as in Tan (Tan 1993) or bidding information as in Weiss (Weiss 1994) do not make explicit the notion of situating the local control knowledge in a more global abstract situation. The information shared is weak and they are studied in domains such as predator-prey (Tan 1993) or blocks world (Weiss 1994) where the need for sharing meta-level information and situating learning in it is not apparent.


Environment-Specific Coordination Mechanisms

In this paper, we show the effectiveness of learning situation-specific coordination by extending a domain-independent coordination framework called Generalized Partial Global Planning (GPGP) (Decker & Lesser 1995). GPGP is a flexible modular method that recognizes the need for creating tailored coordination strategies in response to the characteristics of a particular task environment. It is structured as an extensible set of modular coordination mechanisms so that any subset of mechanisms can be used. Each mechanism can be invoked in response to the detection of certain features of the environment and interdependencies between problem solving activities of agents. The coordination mechanisms can be configured (or parameterized) in different ways to derive different coordination strategies. In GPGP (without the learning extensions), once a set of mechanisms is configured (by a human expert) to derive a coordination strategy, that strategy is used across all problem instances in the environment. Experimental results have already verified that for some environments a subset of the mechanisms is more effective than using the entire set of mechanisms (Decker & Lesser 1995; Nagendra Prasad et al. 1996). In this work, we present a learning extension, called COLLAGE, that endows the agents with the capability to choose a suitable subset of the coordination mechanisms based on the present problem solving situation, instead of having a fixed subset across all problem instances in an environment.

GPGP coordination mechanisms rely on the coordination problem instance being represented in a framework called TÆMS (Decker & Lesser 1993). The TÆMS framework (Task Analysis, Environment Modeling, and Simulation) (Decker & Lesser 1993) represents coordination problems in a formal, domain-independent way. It captures the essence of the coordination problem instances, independent of the details of the domain, in terms of trade-offs between multiple ways of accomplishing a goal, progress towards the achievement of goals, various interactions between the activities in the problem instance and the effect of these activities on the performance of the system as a whole. There are deadlines associated with the tasks and some of the subtasks may be interdependent, that is, cannot be solved independently in isolation. There may be multiple ways to accomplish a task and these trade off the quality of a result produced for the time to produce that result. Quality is used as a catch-all term representing acceptability characteristics like certainty, precision, and completeness, other than temporal characteristics. A set of related tasks is called a task group or a task structure. A task structure is the elucidation of the structure of the problem an agent is trying to solve. The quality of a task is a function of the quality of its subtasks. The leaf tasks are denoted as methods with base level quality and duration. Methods represent domain actions, like executing a blackboard knowledge source, running an instantiated plan, or executing a piece of code with its data. A task may have multiple ways to accomplish it, represented by multiple methods, that trade off the time to produce a result for the quality of the result. Besides task/subtask relationships, there can be other interdependencies, called coordination interrelationships, between tasks in a task group (Decker & Lesser 1993). In this paper, we will be dealing with two such interrelationships:

• facilitates relationship or soft interrelationship: Task A facilitates Task B if the execution of Task A produces a result that affects an optional parameter of Task B. Task B could have been executed without the result from Task A, but the availability of the result provides constraints on the execution of Task B, leading to improved quality and reduced time requirements.

• enables relationship or hard interrelationship: If Task A enables Task B then the execution of Task A produces a result that is a required input parameter for Task B. The results of execution of Task A must be communicated to Task B before it can be executed.
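As a rough illustration of the difference between the two interrelationships, the following Python sketch models them as data. The names `Interrelationship` and `ready_to_execute` are hypothetical and are not part of TÆMS or GPGP. The sketch assumes only what the text states: an enables relationship blocks the target task until the source result has been communicated, whereas a facilitates relationship never blocks it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interrelationship:
    kind: str    # "enables" (hard) or "facilitates" (soft)
    source: str  # task whose result is produced
    target: str  # task that consumes the result


def ready_to_execute(task, relationships, results_received):
    """Return True if every task that enables `task` has delivered its result.

    Facilitates relationships are ignored here because the target task
    can run without the facilitating result (at lower quality or higher cost).
    """
    return all(
        rel.source in results_received
        for rel in relationships
        if rel.kind == "enables" and rel.target == task
    )


# Hypothetical task names, for illustration only.
relationships = [
    Interrelationship("enables", "A", "B"),
    Interrelationship("facilitates", "C", "B"),
]
blocked = ready_to_execute("B", relationships, set())
unblocked = ready_to_execute("B", relationships, {"A"})
```

In this sketch Task B stays blocked until the result of Task A arrives, while the missing result of Task C does not prevent execution.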

In GPGP, a coordination strategy can be derived by activating a subset of the coordination mechanisms. Specifically, we will be investigating the effect of three coordination strategies:

Balanced (or dynamic-scheduling): Agents coordinate their actions by dynamically forming commitments. A commitment represents a contract to generate results of a particular quality by a specified deadline. Relevant results are generated by specific times and communicated to the agents to whom corresponding commitments are made. Agents schedule their local tasks trying to maximize the accrual of quality based on the commitments made to them by the other agents, while ensuring that commitments to other agents are satisfied. The agents have the relevant non-local view of the coordination problem, detect coordination relationships, form commitments and communicate the committed results.

Data Flow Strategy: An agent communicates the result of performing a task to all the agents and the other agents can exploit these results if they still can. This represents the other extreme where there are no commitments from any agent to any other agent.

Rough coordination: This is similar to balanced, but commitments do not arise out of communication between agents; they are known a priori. Each agent has an approximate idea, based on its past experience, of when the other agents complete their tasks and communicate results. "Rough commitments" are a form of tacit social contract between agents about the completion times of their tasks.

COLLAGE: Learning Coordination

Learning Coordination

Our learning algorithm, called COLLAGE, uses abstract meta-level information about coordination problem instances to learn to choose, for the given problem instance, the appropriate coordination strategy from the three strategies described previously. Learning in COLLAGE (COordination Learner for muLtiple AGEnt systems) falls into the category of Instance-Based Learning algorithms (Aha, Kibler, & Albert 1991) originally proposed for supervised classification learning. We, however, use the IBL paradigm for unsupervised learning of decision-theoretic choice.

Learning involves running the multi-agent system on a large number of training coordination problem instances and observing the performance of different coordination strategies on these instances. When a new task group arises in the environment, each of the agents has its own partial local view of the task group. Based on its local view, each agent forms a local situation vector. A local situation represents an agent's assessment of the utility of reacting to various characteristics of the environment. Such an assessment can potentially indicate how to activate the various GPGP mechanisms and consequently has a direct bearing on the type of coordination strategy that is best for the given coordination episode. The agents then exchange their local situation vectors and each of the agents composes all the local situation vectors into a global situation vector. All agents agree on a choice of the coordination strategy and the choice depends on the learning mode of the agents:

Mode 1: In this mode, the agents run all the available coordination strategies and note their relative performances for each of the coordination problem instances. Thus, for example, agents run each of data-flow, rough, and balanced for a coordination episode and store their performances for each strategy.

Mode 2: In this mode, the agents choose one of the coordination strategies for a given coordination episode and observe and store the performance only for that coordination strategy. They choose the coordination strategy that is represented the least number of times in the neighborhood of a small radius around the present global situation. This is done to obtain a balanced representation for all the coordination strategies across the space of possible global situations.
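The Mode 2 selection rule can be sketched as follows. This is a minimal illustration under stated assumptions: the paper does not give the radius, the distance measure used for the neighborhood, or a tie-breaking rule, so the Euclidean distance, the default `radius=0.1`, and breaking ties by the order of `STRATEGIES` are all choices made here for the example. The function name `least_represented_strategy` is hypothetical.

```python
import math

STRATEGIES = ("balanced", "data-flow", "rough")


def least_represented_strategy(instance_base, situation, radius=0.1):
    """Pick the strategy stored least often within `radius` of `situation`.

    instance_base: iterable of (situation_vector, strategy, performance).
    Ties are broken by the order of STRATEGIES (an assumption).
    Requires Python 3.8+ for math.dist.
    """
    counts = {strategy: 0 for strategy in STRATEGIES}
    for past_situation, strategy, _performance in instance_base:
        if math.dist(past_situation, situation) <= radius:
            counts[strategy] += 1
    return min(STRATEGIES, key=lambda s: counts[s])


# Hypothetical instance base with six-component global situation vectors.
instance_base = [
    ((0.5,) * 6, "balanced", None),
    ((0.5,) * 6, "data-flow", None),
    ((0.9,) * 6, "rough", None),  # outside the neighborhood
]
choice = least_represented_strategy(instance_base, (0.5,) * 6)
```

Here rough has no stored instance near the present situation, so it is the strategy chosen for the next training episode.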

At the end of each run of the coordination episode with a selected coordination strategy, the performance of the system is registered. This is represented as a vector of four performance measures: total quality, number of methods executed, number of communications, and termination time. Learning involves simply adding the new instance formed by the performance of the coordination strategy along with the associated problem solving situation to the "instance-base". Thus, the training phase builds a set of {situation, coordination_strategy, performance} triplets for each of the agents. Here the global situation vector is the abstraction of the global problem solving state associated with the choice of a coordination strategy. Note that at the beginning of a problem solving episode, all agents communicate their local problem solving situations to other agents. Thus, each agent aggregates the local problem solving situations to form a common global situation. All agents form identical instance-bases because they build the same global situation vectors through communication.
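One possible in-memory layout for the instance-base is sketched below in Python. The class and field names (`Performance`, `Instance`, `InstanceBase`) are assumptions made for illustration; the paper specifies only that each stored instance is a {situation, coordination_strategy, performance} triplet and that performance has four measures.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class Performance:
    total_quality: float
    methods_executed: int
    communications: int
    termination_time: float


@dataclass(frozen=True)
class Instance:
    situation: Tuple[float, ...]   # global situation vector
    coordination_strategy: str     # "balanced", "data-flow" or "rough"
    performance: Performance


@dataclass
class InstanceBase:
    instances: List[Instance] = field(default_factory=list)

    def add(self, situation, strategy, performance):
        """Learning step: append the newly observed triplet."""
        self.instances.append(Instance(tuple(situation), strategy, performance))


# Hypothetical numbers, not taken from the experiments.
base = InstanceBase()
base.add([0.8, 0.8, 0.5, 0.5, 0.75, 0.7], "rough", Performance(80.0, 12, 5, 130.0))
```

Because every agent builds the same global situation vector, each agent could maintain an identical copy of such a structure.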

Forming a Local Situation Vector

The situation vector is an abstraction of the coordination problem and the effects of the coordination mechanisms in GPGP. It is composed of six components:

(a) The first component represents an approximation of the effect of detecting soft coordination relationships on the quality component of the overall performance. An agent creates virtual task structures from the locally available task structures by letting each of the facilitates coordination relationships potentially affecting a local task actually take effect, and calls the scheduler on these task structures. In order to achieve this, the agent detects all the facilitates interrelationships that affect its tasks. An agent can be expected to know the interrelationships affecting its tasks, though it may not know the exact tasks in other agents that affect it without communicating with them. The agent then produces another set of virtual task structures, but this time with the assumption that the facilitates relationships are not detected and hence the tasks that can potentially be affected by them are not affected in these task structures. The scheduler is again called with this task structure. The first component, representing the effect of detecting facilitates, is obtained as the ratio of the quality produced by the schedule without facilitates relationships and the quality produced by the schedule with facilitates relationships.

(b) The second component represents an approximation of the effect of detecting soft coordination relationships on the duration component of the overall performance. It is formed using the same techniques discussed above for quality but using the duration of the schedules formed with the virtual task structures.

(c) The third and fourth components represent an approximation of the effect of detecting hard coordination interrelationships on the quality and duration of the local task structures at an agent. They are obtained in a manner similar to that described for facilitates.

(e) The fifth component represents the time pressure on the agent. In a design-to-time scheduler, increased time pressure on an agent will lead to schedules that will still adhere to the deadline requirements as far as possible but with a sacrifice in quality. Under time pressure, lower quality, lower duration methods are preferred over higher quality, higher duration methods for achieving a particular task. In order to get an estimate of the time pressure, an agent generates virtual task structures from its local task structures by setting the deadlines of the task groups, tasks and methods to ∞ (a large number) and scheduling these virtual task structures. The agents schedule again with local task structures set to the actual deadline. Time pressure is obtained as the ratio of the schedule quality with the actual deadlines and the schedule quality with large deadlines.

(f) The sixth component represents the load. It is obtained as the ratio of execution time under actual deadline and the execution time under no time pressure. It is formed using methods similar to that discussed above for time pressure but using the duration of the schedules formed with the virtual task structures.
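The six ratios described above can be collected in a small helper. The Python sketch below assumes the scheduler has already been run on each pair of virtual task structures and has reported a quality and a duration for each schedule; the scheduler itself is not modeled. The names `Schedule`, `ratio` and `local_situation_vector` are hypothetical, and returning 0.0 when a denominator is zero is an assumption made here, not something the paper specifies.

```python
from typing import NamedTuple


class Schedule(NamedTuple):
    quality: float
    duration: float


def ratio(numerator, denominator):
    # Guard against an empty schedule; the 0.0 fallback is an assumption.
    return numerator / denominator if denominator else 0.0


def local_situation_vector(without_fac, with_fac, without_en, with_en,
                           actual_deadline, relaxed_deadline):
    """Build the six-component local situation vector from schedule results."""
    return (
        ratio(without_fac.quality, with_fac.quality),              # (a) soft, quality
        ratio(without_fac.duration, with_fac.duration),            # (b) soft, duration
        ratio(without_en.quality, with_en.quality),                # third: hard, quality
        ratio(without_en.duration, with_en.duration),              # fourth: hard, duration
        ratio(actual_deadline.quality, relaxed_deadline.quality),  # (e) time pressure
        ratio(actual_deadline.duration, relaxed_deadline.duration) # (f) load
    )


# Hypothetical schedule results, for illustration only.
vector = local_situation_vector(
    Schedule(8, 40), Schedule(10, 50),
    Schedule(5, 30), Schedule(10, 60),
    Schedule(9, 140), Schedule(12, 200),
)
```

Each component is a dimensionless ratio, which is what makes the later component-wise averaging across agents meaningful.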

In the work presented here, the cost of scheduling is ignored and the time for scheduling is considered negligible compared to the execution time of the methods. However, more sophisticated models would need to take these factors into consideration too. We view this as one of our future directions of research.

Forming a Global Situation Vector

Each agent communicates its local situation vector to all other agents. An agent composes all the local situation vectors, its own and those it received from others, to form a global situation vector. We can have a number of composition functions but the one we used in the experiments reported here is simple: component-wise average of the local situation vectors. Thus the global situation vector has six components where each component is the average of all the corresponding local situation vector components.
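The component-wise average is straightforward to express in code. The following Python sketch is illustrative; the function name `global_situation_vector` and the sample vectors are assumptions, not values from the experiments.

```python
def global_situation_vector(local_vectors):
    """Component-wise average of the agents' local situation vectors."""
    if not local_vectors:
        raise ValueError("at least one local situation vector is required")
    n = len(local_vectors)
    # zip(*...) groups the i-th components of all agents together.
    return tuple(sum(component) / n for component in zip(*local_vectors))


# Three agents, six components each (hypothetical values).
local_vectors = [
    (0.8, 0.8, 0.5, 0.5, 0.75, 0.7),
    (0.4, 1.0, 0.5, 0.7, 0.75, 0.9),
    (0.6, 0.6, 0.5, 0.9, 0.75, 0.8),
]
global_vector = global_situation_vector(local_vectors)
```

Since every agent receives the same set of local vectors, every agent computes the same global vector, which is why the agents end up with identical instance-bases.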

Choosing a Coordination Strategy

COLLAGE chooses a coordination strategy based on how the set of available strategies performed in similar past cases. We adopt the notation from Gilboa and Schmeidler (Gilboa & Schmeidler 1995). Each case $c$ is a triplet

$$\langle p, a, r \rangle \in C \subseteq P \times A \times R$$

where $p \in P$ and $P$ is the set of situations representing abstract characterization of coordination problems, $a \in A$ and $A$ is the set of coordination choices available, $r \in R$ and $R$ is the set of results from running the coordination strategies.

Decisions about coordination strategy choice are made based on similar past cases. Outcomes decide the desirability of the strategies. We define a similarity function and a utility function as follows:

$$s : P^2 \rightarrow [0, 1]$$

$$u : R \rightarrow \mathbb{R}$$

In the experiments presented later, we use the Euclidean metric for similarity.

The desirability of a coordination strategy is determined by a similarity-weighted sum of the utility it yielded in the similar past cases in a small neighborhood around the present situation vector. We observed that such an averaging process in a neighborhood around the present situation vector was more robust than taking the nearest neighbor. Let $M$ be the set of past cases similar to problem $p_{new} \in P$ (greater than a threshold similarity):

$$m \in M \Leftrightarrow s(p_{new}, m) > s_{threshold}$$

For $a \in A$, let $M_a = \{ m = (p, \alpha, r) \in M \mid \alpha = a \}$. The utility of $a$ is defined as

$$U(p_{new}, a) = \frac{1}{|M_a|} \sum_{(q, a, r) \in M_a} s(p_{new}, q)\, u(r)$$
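The selection rule defined above can be sketched in Python as follows. Several details are assumptions made for this illustration and are labeled as such in the code: the paper states that the Euclidean metric is used for similarity but does not say how a distance is mapped into [0, 1], so the mapping 1 / (1 + distance) is a stand-in; the threshold value 0.8 and the names `similarity` and `choose_strategy` are likewise hypothetical.

```python
import math


def similarity(p, q):
    # Assumption: map Euclidean distance into (0, 1]; identical vectors give 1.
    return 1.0 / (1.0 + math.dist(p, q))


def choose_strategy(cases, p_new, utility, s_threshold=0.8):
    """Return the strategy a maximizing U(p_new, a), or None if M is empty.

    cases: list of (situation, strategy, result) triplets.
    utility: function u mapping a result to a real number.
    """
    totals, counts = {}, {}
    for q, a, r in cases:
        s = similarity(p_new, q)
        if s > s_threshold:  # case belongs to M
            totals[a] = totals.get(a, 0.0) + s * utility(r)
            counts[a] = counts.get(a, 0) + 1
    if not totals:
        return None
    # U(p_new, a) = (1 / |M_a|) * sum of s(p_new, q) * u(r) over M_a
    scores = {a: totals[a] / counts[a] for a in totals}
    return max(scores, key=scores.get)


# Hypothetical cases; results are already utilities here, so u is the identity.
cases = [
    ((0.5, 0.5), "balanced", 80.0),
    ((0.5, 0.5), "rough", 70.0),
    ((0.0, 0.0), "data-flow", 100.0),  # too dissimilar, excluded from M
]
best = choose_strategy(cases, (0.5, 0.5), utility=lambda r: r)
```

The data-flow case has the highest raw utility but lies outside the neighborhood of the present situation, so it does not influence the choice.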

Experiments

Experiments in the DDP domain

Our experiments on learning coordination were conducted in the domain of distributed data processing (Nagendra Prasad et al. 1996). Some of the early insights into learning coordination in this domain were presented in (Nagendra Prasad & Lesser 1996) and the details of the modeling of the distributed data processing domain can be found in (Nagendra Prasad et al. 1996). This domain consists of a number of geographically dispersed data processing centers (agents). Each center is responsible for conducting certain types of analysis tasks on streams of satellite data arriving at its site: "routine analysis" that needs to be performed on data coming in at regular intervals during the day, "crisis analysis" that needs to be performed on the incoming data but with a certain probability and "low priority analysis", the need for which arises at the beginning of the day with a certain probability. Low priority analysis involves performing specialized analysis on specific archival data. Different types of analysis tasks have different priorities. A center should first attend to the "crisis analysis tasks" and then perform "routine tasks" on the data. Time permitting, it can handle the low-priority tasks. The processing centers have limited resources to conduct their analysis on the incoming data and they have to do this within certain deadlines. Results of processing data at a center may need to be communicated to other centers due to the interrelationships between the tasks at these centers. (Nagendra Prasad et al. 1996) developed a graph-grammar-based stochastic task structure description language and generation tool for modeling task structures arising in a domain such as this. They present the results of empirical explorations of the effects of varying deadlines and crisis task group arrival probability. Based on the experiments, they noted the need for different coordination strategies in different situations to achieve good performance.

In this section, we intend to demonstrate the power of COLLAGE in choosing the most appropriate coordination strategy in a given situation. We performed two sets of experiments varying the probability of the centers seeing crisis tasks. In the first set of experiments, the crisis task group arrival probability was 0.25 and in the second set it was 1.0. For both sets of experiments, low priority tasks arrived with a probability of 0.5 and the routine tasks were always seen at the time of new arrivals. A day consisted of a time slice of 140 time units and hence the deadline for the task structures was fixed at 140 time units. In the experiments described here, utility is the primary performance measure. Each message an agent communicates to another agent penalizes the overall utility by a factor called comm_cost. However, achieving a better non-local view can potentially lead to higher quality that adds to the system-wide utility. Thus, utility = quality - total_communication × comm_cost. The system consisted of three agents (or data processing centers) and they had to learn to choose the best coordination strategy from among balanced, rough, and data-flow.
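The utility measure is simple enough to state as a one-line function. The Python sketch below is illustrative only: the function name `system_utility` and the sample numbers are assumptions and are not taken from the experiments. It also shows how a fixed number of extra messages lowers utility in proportion to comm_cost.

```python
def system_utility(quality, total_communication, comm_cost):
    """utility = quality - total_communication * comm_cost"""
    return quality - total_communication * comm_cost


# Hypothetical episode: quality 90 achieved with 10 messages.
base_utility = system_utility(90.0, 10, 0.5)

# The same episode with three additional meta-level messages
# (one local situation vector per agent in a three-agent system).
with_meta_messages = system_utility(90.0, 13, 0.5)
penalty = base_utility - with_meta_messages  # equals 3 * comm_cost
```

The penalty for exchanging situation vectors grows linearly with comm_cost, so a learner must gain enough quality from a better strategy choice to offset it.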

ExperimentsFor the experiments where crisis task group

arrival probability was 0.25, COLLAGE

was trained on 4500

instances in Mode1 and on 10000instances in Mode2. For the

case wherecrisis task grouparrival probability was 1.0, it was

trained on 2500 instances in Mode1 and on 12500instances

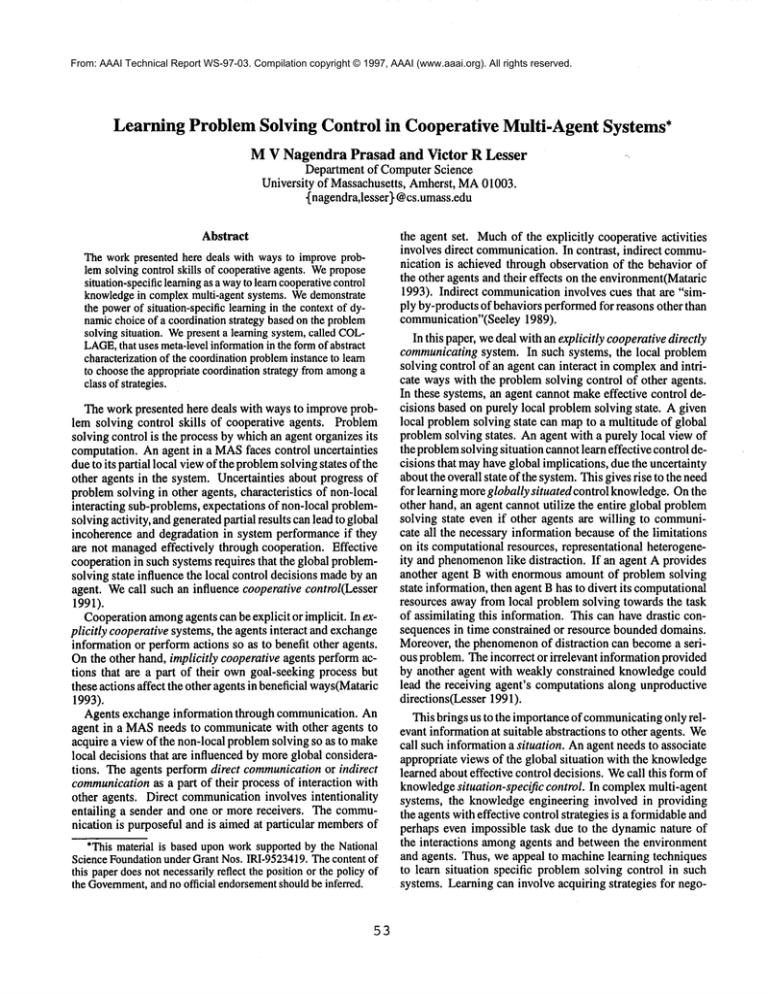

in Mode2. Figure 1 showsthe average quality over 100 runs

for different coordination strategies at various communication

costs. The curves for both Mode1 and Mode2 learning algorithms lie abovethose for all the other coordinationstrategies

for the most part in both the experiments. Weperformed

a Wilcoxonmatched-pair signed ranks analysis to test for

significant differences (at significance level 0.05) betweenaverage performances of the strategies across communications

costs (as versus, pairwise tests at each communication

cost).

This test revealed significant differences betweenthe learning

algorithms (both Mode1 and ModeII) and the other three

coordination strategies indicating that we can assert with a

high degree of confidence that the performanceof the learning algorithms across various communicationcosts is better

57

than statically using any one of the family of coordination

strategies t. As the communication

costs go up, the meanperformanceof the coordination strategies go down.For crisis

task group arrival probability of 0.25, the balanced coordination strategy performsbetter than the learning algorithmsat

very high communicationcosts because, learning algorithms

use additional units of communication

to form the global situation vectors. At very high communicationcosts, even the

three additional meta-level messagesfor local situation communication(one for each agent) led to large penalties on utility of system. At communicationcost of 1.0, Mode1 learner

and Mode2 learner average at 77.72 and 79.98 respectively,

whereas, choosing balanced

always produces an average

performanceof 80.48¯ Similar behavior was exhibited at very

high communicationcosts whenthe crisis task group arrival

probability was 1.0. Figure 2 gives an exampleof situationspecific choice of coordination strategies for Mode1 learner

in 100 test runs whenthe crisis task group probability was

1.0. The Z-axis showsthe numberof times a particular coordination strategy waschosenin the 100runs at a particular

communication cost. X-axis shows the communication cost

and the Y-axis showsthe coordination strategy.

-8O

’60

-40

¯ 30

10

Figure 2: Strategies chosen by COLLAGE

M1for Crisis TG

Probability 1.0 at various communication

costs
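For readers unfamiliar with the Wilcoxon matched-pair signed-ranks test used in the experiments, the Python sketch below computes its rank sums for two paired samples, for example the average performance of two strategies at each communication cost. It is a generic textbook computation, not the authors' analysis code: it returns only the rank sums W+ and W-, does not compute a p-value, and the sample numbers are made up for illustration.

```python
def wilcoxon_signed_rank(xs, ys):
    """Return (W_plus, W_minus) for paired samples, dropping zero differences.

    Tied absolute differences receive the average of the ranks they span.
    """
    diffs = [x - y for x, y in zip(xs, ys) if x != y]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and abs(ordered[j]) == abs(ordered[i]):
            j += 1
        average_rank = (i + 1 + j) / 2.0  # mean of ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = average_rank
        i = j
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


# Made-up paired scores (one pair per communication cost), for illustration.
learner = [5, 7, 9, 4]
static = [3, 8, 6, 4]
w_plus, w_minus = wilcoxon_signed_rank(learner, static)
```

The smaller of the two rank sums is the usual test statistic, which is then compared against a critical value for the chosen significance level.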

Conclusion

Many researchers have shown that no single coordination mechanism is good for all situations. However, there is little in the literature that deals with how to dynamically choose a coordination strategy based on the problem solving situation. In this paper, we presented the COLLAGE system, which uses meta-level information in the form of abstract characterization of the coordination problem instance to learn to choose the appropriate coordination strategy from among a class of strategies.

However, the scope of situation-specific learning goes beyond learning coordination. Effective cooperation in a multi-agent system requires that the global problem-solving state influence the local learning process of an agent. Agents need to associate appropriate views of the global problem solving state with the learned control knowledge in order to develop more socially situated control knowledge.

This paper presents some of the empirical evidence that we developed to validate these ideas. Further experimental evidence for the effectiveness of learning situation-specific coordination can be found in (Nagendra Prasad & Lesser 1997). (Nagendra Prasad, Lesser, & Lander 1996b) and (Nagendra Prasad, Lesser, & Lander 1996a) deal with situation-specific learning of other aspects of cooperative control.

Figure 1: Average Quality versus Communication Cost. Panels: a) Crisis TG 0.25; b) Crisis TG 1.0. X-axis: Communication Cost (0 to 1).

†Testing across communication costs is justified because in reality, the cost may vary during the course of the day.

References

Aha, D. W.; Kibler, D.; and Albert, M. K. 1991. Instance-based Learning Algorithms. Machine Learning 6:37-66.

Crites, R. H., and Barto, A. G. 1996. Improving elevator performance using reinforcement learning. In Touretzky, D.; Mozer, M.; and Hasselmo, M., eds., Advances in Neural Information Processing Systems 8. Cambridge, MA: MIT Press.

Decker, K. S., and Lesser, V. R. 1993. Quantitative modeling of complex computational task environments. In Proceedings of the Eleventh National Conference on Artificial Intelligence, 217-224.

Decker, K. S., and Lesser, V. R. 1995. Designing a family of coordination algorithms. In Proceedings of the First International Conference on Multi-Agent Systems, 73-80. San Francisco, CA: AAAI Press.

Gilboa, I., and Schmeidler, D. 1995. Case-based Decision Theory. The Quarterly Journal of Economics 605-639.

Lesser, V. R. 1991. A retrospective view of FA/C distributed problem solving. IEEE Transactions on Systems, Man, and Cybernetics 21(6):1347-1362.

Mataric, M. J. 1993. Kin recognition, similarity, and group behavior. In Proceedings of the Fifteenth Annual Cognitive Science Society Conference, 705-710.

Nagendra Prasad, M. V., and Lesser, V. R. 1996. Off-line Learning of Coordination in Functionally Structured Agents for Distributed Data Processing. In ICMAS96 Workshop on Learning, Interaction and Organizations in Multiagent Environments.

Nagendra Prasad, M. V., and Lesser, V. R. 1997. Learning situation-specific coordination in cooperative multi-agent systems. Computer Science Technical Report 97-12, University of Massachusetts.

Nagendra Prasad, M. V.; Decker, K. S.; Garvey, A.; and Lesser, V. R. 1996. Exploring Organizational Designs with TAEMS: A Case Study of Distributed Data Processing. In Proceedings of the Second International Conference on Multi-Agent Systems. Kyoto, Japan: AAAI Press.

Nagendra Prasad, M. V.; Lesser, V. R.; and Lander, S. E. 1996a. Cooperative learning over composite search spaces: Experiences with a multi-agent design system. In Thirteenth National Conference on Artificial Intelligence (AAAI-96). Portland, Oregon: AAAI Press.

Nagendra Prasad, M. V.; Lesser, V. R.; and Lander, S. E. 1996b. Learning organizational roles in a heterogeneous multi-agent system. In Proceedings of the Second International Conference on Multi-Agent Systems. Kyoto, Japan: AAAI Press.

Sandholm, T., and Crites, R. 1995. Multi-agent reinforcement learning in the repeated prisoner's dilemma. To appear in Biosystems.

Seeley, T. D. 1989. The Honey Bee Colony as a Superorganism. American Scientist 77:546-553.

Sen, S.; Sekaran, M.; and Hale, J. 1994. Learning to coordinate without sharing information. In Proceedings of the Twelfth National Conference on Artificial Intelligence, 426-431. Seattle, WA: AAAI.

Tan, M. 1993. Multi-agent reinforcement learning: Independent vs. cooperative agents. In Proceedings of the Tenth International Conference on Machine Learning, 330-337.

Weiss, G. 1994. Some studies in distributed machine learning and organizational design. Technical Report FKI-189-94, Institut für Informatik, TU München.