From: ISMB-99 Proceedings. Copyright ' 1999, AAAI (www.aaai.org). All rights reserved.

Using Sequence Motifs for Enhanced Neural Network

Prediction of Protein Distance Constraints

Jan Gorodkin1 2, Ole Lund1, Claus A. Andersen1 , and Søren Brunak1

;

1 Center for

Biological Sequence Analysis, Department of Biotechnology,

The Technical University of Denmark, Building 208, DK-2800 Lyngby, Denmark

gorodkin@cbs.dtu.dk, olund@strubix.dk, ca2@cbs.dtu.dk, brunak@cbs.dtu.dk

Phone: +45 45 25 24 77, Fax: +45 45 93 15 85

2 Department of

Genetics and Ecology, The Institute of Biological Sciences

University of Aarhus, Building 540, Ny Munkegade, DK-8000 Aarhus C, Denmark

Abstract

Correlations between sequence separation (in residues) and

distance (in Angstrom) of any pair of amino acids in polypeptide chains are investigated. For each sequence separation we

define a distance threshold. For pairs of amino acids where

the distance between Cα atoms is smaller than the threshold,

a characteristic sequence (logo) motif, is found. The motifs change as the sequence separation increases: for small

separations they consist of one peak located in between the

two residues, then additional peaks at these residues appear,

and finally the center peak smears out for very large separations. We also find correlations between the residues in the

center of the motif. This and other statistical analyses are

used to design neural networks with enhanced performance

compared to earlier work. Importantly, the statistical analysis explains why neural networks perform better than simple statistical data-driven approaches such as pair probability

density functions. The statistical results also explain characteristics of the network performance for increasing sequence

separation. The improvement of the new network design is

significant in the sequence separation range 10–30 residues.

Finally, we find that the performance curve for increasing sequence separation is directly correlated to the corresponding

information content. A WWW server, distanceP, is available

at http://www.cbs.dtu.dk/services/distanceP/.

Keywords: Distance prediction; sequence motifs; distance

constraints; neural network; protein structure.

Introduction

Much work have over the years been put into approaches

which either analyze or predict features of the threedimensional structure using distributions of distances, correlated mutations, and more lately neural networks, or combinations of these e.g. (Tanaka & Scheraga 1976; Miyazawa

& Jernigan 1985; Bohr et al. 1990; Sippl 1990; Maiorov

& Crippen 1992; Göbel et al. 1994; Mirny & Shaknovich

1996; Thomas, Casari, & Sander 1996; Lund et al. 1997;

Olmea & Valencia 1997; Skolnick, Kolinski, & Ortiz 1997;

Present address: Structural Bioinformatics Advanced Technologies A/S, Agern Alle 3, DK-2970 Hørsholm, Denmark

Copyright c 1999, American Association for Artificial Intelligence (www.aaai.org). All rights reserved.

Fariselli & Casadio 1999). The ability to adopt structure

from sequences depends on constructing an appropriate cost

function for the native structure. In search of such a function

we here concentrate on finding a method to predict distance

constraints that correlate well with the observed distances

in proteins. As the neural network approach is the only approach so far which includes sequence context for the considered pair of amino acids, these are expected not only to

perform better, but also to capture more features relating distance constraints and sequence composition.

The analysis include investigation of the distances between amino acid as well as sequence motifs and correlations for separated residues. We construct a prediction

scheme which significantly improve on an earlier approach

(Lund et al. 1997).

For each particular sequence separation, the corresponding distance threshold is computed as the average of all

physical distances in a large data set between any two amino

acids separated by that amount of residues (Lund et al.

1997). Here, we include an analysis of the distance distributions relative to these thresholds and use them to explain qualitative behavior of the neural network prediction

scheme, thus extending earlier studies (Reese et al. 1996).

For the prediction scheme used here it is essential to relate

the distributions to their means. Analysis of the network

weight composition reveal intriguing properties of the distance constraints: the sequence motifs can be decomposed

into sub-motifs associated with each of the hidden units in

the neural network.

Further, as the sequence separation increases there is a

clear correspondence in the change of the mean value, distance distributions, and the sequence motifs describing the

distance constraints of the separated amino acids, respectively. The predicted distance constraints may be used as

inputs to threading, ab initio, or loop modeling algorithms.

Materials and Method

Data extraction

The data set was extracted from the Brookhaven Protein

Data Bank (Bernstein et al. 1977), release 82 containing

5762 proteins. In brief entries were excluded if: (1) the sec-

ondary structure of the proteins could not be assigned by the

program DSSP (Kabsch & Sander 1983), as the DSSP assignment is used to quantify the secondary structure identity

in the pairwise alignments, (2) the proteins had any physical chain breaks (defined as neighboring amino acids in the

sequence having Cα -distances exceeding 4:0Å) or entries

where the DSSP program detected chain breaks or incomplete backbones, (3) they had a resolution value greater than

2:5Å, since proteins with worse resolution, are less reliable

as templates for homology modeling of the C α trace (unpublished results).

Individual chains of entries were discarded if (1) they had

a length of less than 30 amino acids, (2) they had less than

50% secondary structure assignment as defined by the program DSSP, (3) they had more than 85% non-amino acids

(nucleotides) in the sequence, and (4) they had more than

10% of non-standard amino acids (B, X, Z) in the sequence.

A representative set with low pairwise sequence similarity was selected by running algorithm #1 of Hobohm et al.

(1992) implemented in the program RedHom (Lund et al.

1997).

In brief the sequences were sorted according to resolution

(all NMR structures were assigned resolution 100). The sequences with the same resolution were sorted so that higher

priority was given to longer proteins.

The sequences were aligned utilizing the local alignment

program, ssearch (Myers & Miller 1988; Pearson 1990) using the pam120 amino acid substitution matrix (Dayhoff &

Orcutt 1978), with gap penalties ,12, ,4. As a cutoffpfor

sequence similarity we applied the threshold T = 290= L,

T is the percentage of identity in the alignment and L the

length of the alignment. Starting from the top of the list

each sequence was used as a probe to exclude all sequence

similar proteins further down the list.

By visual inspection seven proteins were removed from

the list, since their structure is either ’sustained’ by DNA or

predominantly buried in the membrane. The resulting 744

protein chains are composed of the residues where the Cα atom position is specified in the PDB entry.

Ten cross-validation sets were selected such that they all

contain approximately the same number of residues, and all

have the same length distribution of the chains. All the data

are made publicly available through the world wide web

page http://www.cbs.dtu.dk/services/distanceP/.

Information Content / Relative entropy measure

Here we use the relative entropy to measure the information content (Kullback & Leibler 1951) of aligned regions

between sequence separated residues. The information content is obtained by summing for the respective position in

the alignment, I = ∑Li=1 Ii , where Ii is the information content of position i in the alignment. The information content

at each position will sometimes be displayed as a sequence

logo (Schneider & Stephens 1990). The position-dependent

information content is given by

qik

Ii = ∑ qik log2

;

(1)

pk

k

where k refers to the symbols of the alphabet considered

(here amino acids). The observed fraction of symbol k at

position i is qik , and pk is the background probability of finding symbol k by chance in the sequences. pk will sometimes

be replaced by a position-dependent background probability, that is the probability of observing letter k at some position in the alignment in another data set one wishes to compare to. Symbols in logos turned 180 degrees indicate that

qik < pk .

Neural networks

As in the previous work, we apply two-layer feed-forward

neural networks, trained by standard back-propagation, see

e.g. (Brunak, Engelbrecht, & Knudsen 1991; Bishop 1996;

Baldi & Brunak 1998), to predict whether two residues are

below or above a given distance threshold in space. The

sequence input is sparsely encoded. In (Lund et al. 1997)

the inputs were processed as two windows centered around

each of the separated amino acids. However, here we extend

that scheme by allowing the windows to grow towards each

other, and even merge to a single large window covering the

complete sequence between the separated amino acids. Even

though such a scheme increases the computational requirements, it allow us to search for optimal covering between the

separated amino acids.

As there can be a large difference in the number of positive (contact) and negative (no contact) sequence windows,

for a given separation, we apply the balanced learning approach (Rost & Sander 1993). Training is done by a 10 set

cross-validation approach (Bishop 1996), and the result is

reported as the average performance over the partitions. The

performance on each partition is evaluated by the Mathews

correlation coefficient (Mathews 1975)

C=

pN

Pt Nt , Pf N f

( t + N f )(Nt + Pf )(Pt + N f )(Pt + Pf )

;

(2)

where Pt is the number of true positives (contact, predicted

contact), Nt the number of true negatives (no contact, no

contact predicted), Pf the number of false positives (no contact, contact predicted), and N f is the number of false negatives (contact, no contact predicted).

The analysis of the patterns stored in the weights of the

networks is done through the saliency of the weights, that

is the cost of removing a single weight while keeping the

remaining ones. Due to the sparse encoding each weight

connected to a hidden unit corresponds exactly to a particular amino acid at a given position in the sequence windows

used as inputs. We can then obtain a ranking of symbols on

each position in the input field. To compute the saliencies

we use the approximation for two-layer one-output networks

(Gorodkin et al. 1997), who showed that the saliencies for

the weights between input and hidden layer can be written

as

skji = s ji = w2jiW j2 K ;

(3)

where w ji is the weight between input i and hidden unit j,

and W j the weight between hidden unit j and the output. K

is a constant. The kth symbol is implicitly given due to the

Sequence separation 2

Sequence separation 11

250

2000

Sequence separation 16

120

200

100

1000

100

50

-6

60

0

-4

-2

0

2

Physical distance (Angstrom)

20

-20 -15 -10 -5 0

5 10 15 20

Physical distance (Angstrom)

Sequence separation 3

1000

0

25

-20

Sequence separation 12

-10

0

10

20

30

Physical distance (Angstrom)

40

Sequence separation 20

90

200

80

800

70

Number

600

400

Number

150

Number

80

40

500

0

150

Number

Number

Number

1500

100

60

50

40

30

200

50

0

0

20

10

-6

-4

-2

0

2

4

Physical distance (Angstrom)

-20

Sequence separation 4

800

0

-10

0

10

20

Physical distance (Angstrom)

Sequence separation 13

70

200

700

Number

Number

Number

300

Sequence separation 60

100

40

30

20

200

50

10

100

0

-8

50

50

150

400

-10

0

10 20 30 40

Physical distance (Angstrom)

60

600

500

-20

-6

-4

-2

0

2

4

6

Physical distance (Angstrom)

0

-20

-10

0

10

20

Physical distance (Angstrom)

30

0

-40

-20

0

20

40

60

80

Physical distance (Angstrom)

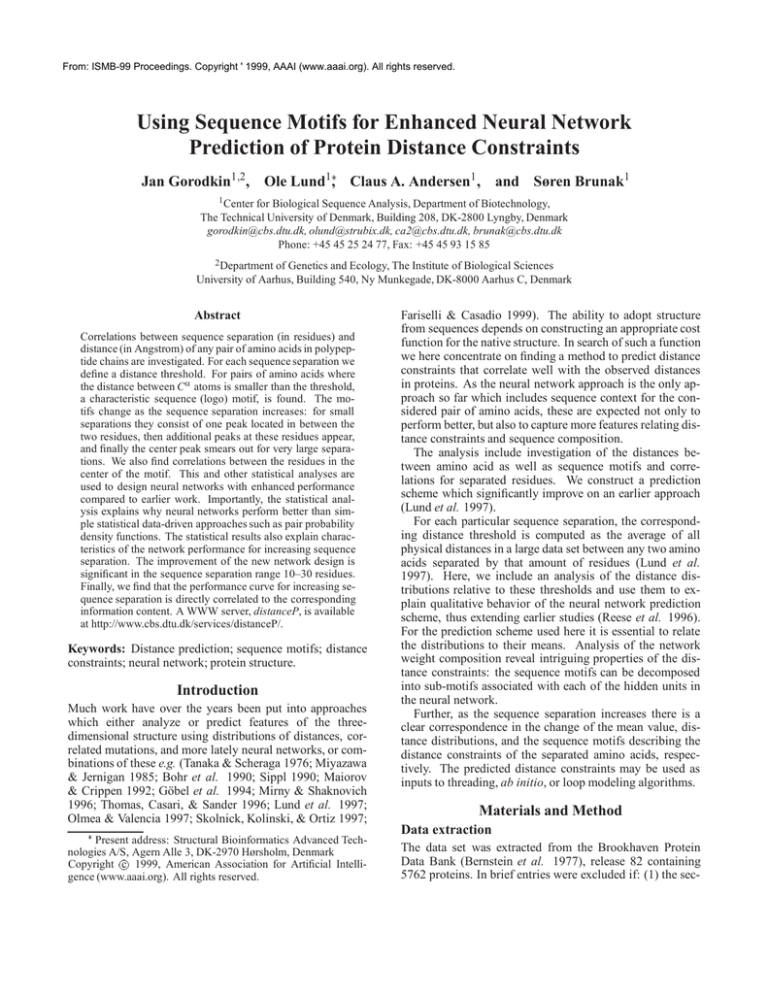

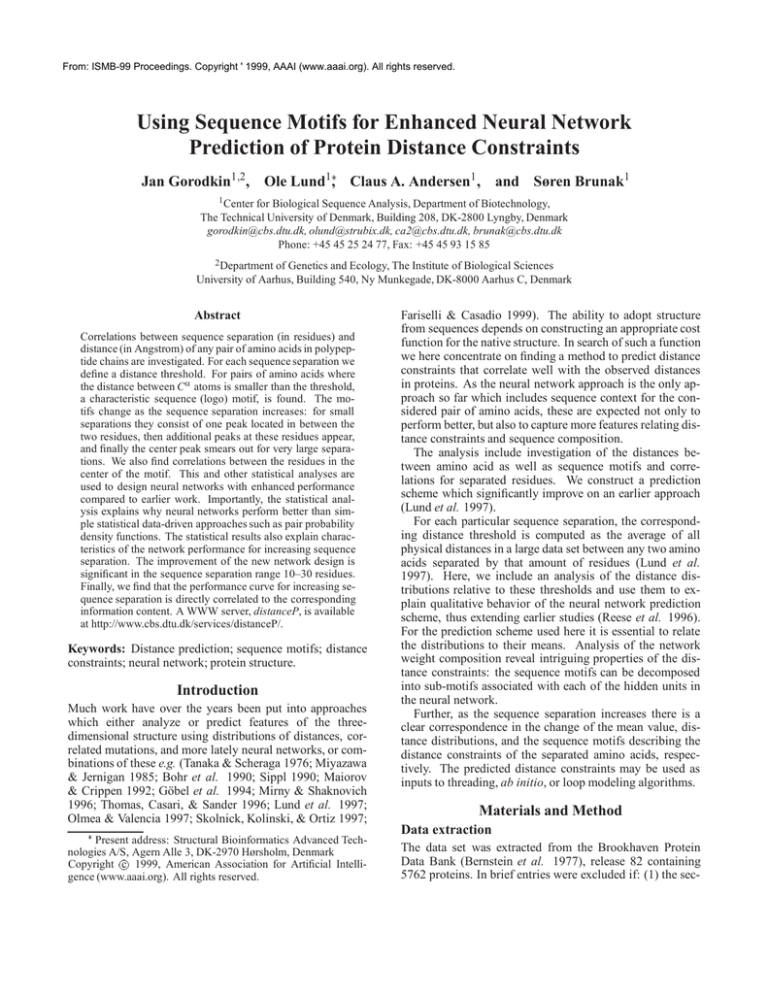

Figure 1: Length distributions of residue segments for corresponding sequence separations, relative the respective mean values. Sequence

separations 2, 3, 4, 11, 12, 13, 16, 20, and 60 are shown. These show the representative shapes of the distance distributions. The vertical line

through zero indicates the displacement with respect to the mean distance.

sparse encoding. If the signs of w ji and W j are opposite,

we display the corresponding symbol upside down in the

weight-saliency logo.

Results

We conduct statistical analysis of the data and the distance

constraints between amino acids, and subsequent use the results to design and explain the behavior of a neural network

prediction scheme with enhanced performance.

Statistical analysis

For each sequence separation (in residues), we derive the

mean of all the distances between pairs of Cα atoms. We use

these means as distance constraint thresholds, and in a prediction scheme we wish to predict whether the distance between any pair of amino acids is above or below the threshold corresponding to a particular separation in residues. To

analyze which pairs are above and below the threshold, it

is relevant to compare: (1) the distribution of distances between amino acid pairs below and above the threshold, and

(2) the sequence composition of segments where the pairs of

amino acids are below and above the threshold.

First we investigate the length distribution of the distances

as function of the sequence separation. A complete investigation of physical distances for increasing sequence separation is given by (Reese et al. 1996). In particular it

was found that the α-helices caused a distinct peak up to

sequence separation 20, whereas β-strands are seen up to

separations 5 only. However, when we perform a simple

translation of these distributions relative to their respective

means, the same plots provide essentially new qualitative information, which is anticipated to be strongly correlated to

the performance of a predictor (above/below the threshold).

In particular we focus on the distributions shown in Figure 1,

but we also use the remaining distributions to make some

important observations.

When inspecting the distance distributions relative to their

mean, two main observations are made. First, the distance

distribution for sequence separation 3, is the one distance

distribution where the data is most bimodal. Thus sequence

separation 3 provides the most distinct partition of the data

points. Hence, in agreement with the results in (Lund et

al. 1997) we anticipate that the best prediction of distance

constraints can be obtained for sequence separation 3. Furthermore, we observe that the α-helix peak shifts relative to

the mean when going from sequence separation 11 to 13.

The length of the helices becomes longer than the mean distances. This shift interestingly involve the peak to be placed

at the mean value itself for sequence separation 12. Due to

this phenomenon, we anticipate, that, for an optimized predictor, it can be slightly harder to predict distance constraints

for separation 12 than for separations 11 and 13.

The peak present at the mean value for sequence separation 12 does indeed reflect the length of helices as demonstrated clearly in Figure 2. Rather than using the simple rule

that each residue in a helix increases the physical distance

with 1.5 Angstrom (Branden & Tooze 1999), we computed

the actual physical lengths for each size helix to obtain a

more accurate picture. The physical length of the α-helices

was calculated by finding the helical axis and measuring the

translation per Cα -atom along this axis. The helical axis

was determined by the mass center of four consecutive Cα atoms. Helices of length four are therefore not included,

since only one center of mass was present. We see that helices at 12 residues coincide with sequence separation 12.

Again we use this as an indication that at sequence separation 12 it may be slightly harder to predict distance constraints than at separation 13.

Separation and helix length

Physical distance (Angstrom)

35

Average sequence separation

Average helix length

30

25

20

15

10

5

0

0

5

10

15

20

25

30

Separation/Length (Residues)

Figure 2: Mean distances for increasing sequence separation and

computed average physical helix lengths for increasing number of

residues.

As the sequence separation increases the distance distribution approaches a universal shape, presumably independent of structural elements, which are governed by more local distance constraints. The traits from the local elements

do as mentioned by (Reese et al. 1996) (who merge large

separations) vanish for separations 20–25. (Here we considered separations up to 100 residues.) In Figure 1 we see

that the transition from bimodal distributions to unimodal

distributions centered around their means, indicates that prediction of distance constraints must become harder with increasing sequence separation. In particular when the universal shape has been reached (sequence separation larger than

30), we should not expect a change in the prediction ability

as the sequence separation increases. The universal distribution has its mode value approximately at ,3:5 Angstrom,

indicating that the most probable physical distance for large

sequence separations corresponds to the distance between

two Cα atoms.

Notice that the universality only appears when the distribution is displaced by its mean distance. This is interesting

since the mean of the physical distances grows as the sequence separation increases. From a predictability perspective, it therefore makes good sense to use the mean value as

a threshold to decide the distance constraint for an arbitrary

sequence separation.

A useful prediction scheme must rely on the information

available in the sequences. To investigate if there exists a

detectable signal between the sequence separated residues,

for each sequence separation, we constructed sequence logos (Schneider & Stephens 1990) as follows: The sequence

segments for which the physical distance between the separated amino acids was above the threshold were used to generate a position-dependent background distribution of amino

acids. The segments with corresponding physical distance

below the threshold were all aligned and displayed in sequence logos using the computed background distribution

of amino acids. The pure information content curves are

shown for increasing sequence in Figure 3. The corresponding sequence logos are displayed in Figure 4. We used a

“margin” of 4 residues to the left and right of the logos, e.g.,

for sequence separation 2, the physical distance is measured

between position 5 and 7 in the logo.

The change in the sequence patterns is consistent with the

change in the distribution of physical distances. Up to separations 6–7, the distribution of distances (Figure 1) contains

two peaks, with the β-strand peak vanishing completely for

separation 7–8 residues. For the same separations, the sequence motif changes from containing one to three peaks.

For larger sequence separations, the motif consists of three

characteristic peaks, the two located at positions exactly corresponding to the separated amino acids. The third peak appear in the center. This peak tells us that for physical distances within the threshold, it indeed matters whether the

residues in the center can bend or turn the chain. We see

that exactly such amino acids are located in the center peak:

glycine, apartic acid, glutamic acid, and lysine, all these are

medium size residues (except glycine), being hydrophilic,

thereby having affinity for the solvent, but not being on the

outer most surface of the protein (see e.g., (Creighton 1993)

Separation: 13

Separation: 2

0.12

0.06

0.10

Bits

Bits

0.08

0.06

0.04

0.04

0.02

0.02

0.00

1

2

3

4

5

6

7

8

9

10

11

4

6

8

10

12

14

Position

Separation: 7

Separation: 20

0.06

0.03

0.04

0.02

0.02

16

18

20

22

0.01

0.00

2

4

6

8

10

12

14

0.00

16

2

4

6

8

10 12 14 16 18 20 22 24 26 28

Position

Position

Separation: 9

Separation: 30

0.06

0.02

0.04

Bits

Bits

2

Position

Bits

Bits

0.00

0.01

0.02

0.00

2

4

6

8

10

12

14

16

0.00

18

10

15

20

25

Position

Separation: 12

Separation: 60

0.06

30

35

0.02

0.04

Bits

Bits

5

Position

0.01

0.02

0.00

2

4

6

8

10

12

14

16

18

20

Position

0.00

5

10 15 20 25 30 35 40 45 50 55 60 65

Position

Figure 3: Sequence information profiles for increasing sequence separation.

for amino acid properties). As the sequence separation increases from about 20 to 30 the center peak smears out, in

agreement with the transition of the distance distributionthat

shifts to the universal distribution in this range of sequence

separation. The sequence motif likewise becomes “universal”. Only the peaks located at the separated residues are

left.

The composition of single peak motifs resemble to a large

degree the composition of the center for motifs having three

peaks. However, the slightly increased amount of leucine

moves from the center of the one peak motifs to the separated amino acid positions in the three peak motifs. The

reverse happens for glycine. Interestingly, as the sequence

separation increases valine (and isoleucine) become present

at the outermost peaks. In agreement with the helix length

shift from below to above the threshold at separations 12

Separation: 13

I

A

L

V

I

N

PQ

R

PQ

K

T

N

D

S

F

YR

FHHF

I

YFY

R

S

N

MTY

F

H

S

R

I

R

R

P

M

Q

Q

F

P

C

W

M

C

W

F

Y

I

M

C

W

I

T

I

G

KG

V

TV

S

YY

bits

CW

P

H

C

WW

H

H

H

W

C

C

W

C

C

KA

D

T

P

Q

S

N

Q

P

N

Q

K

E

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

W

D

T

P

Q

E

N

S

Q

K

H

T

K

W

WWWW

M

W

M

H

Q

F

W

M

N

H

W

V

E

MYV

T

P

H

M

L

D

Q

M

Q

H

H

P

Q

T

K

K

S

QP

DA

NE

N

P

P

H

C

C

H

C

H

Y

C

A

V

V

S

S

I

I

N

N

N

P

P

P

R

R

R

S

I

I

YYY

Q

F

FFQR

RQQQR

RRR

I

I

I

GR

V

D

QW

Q

Q

H

M

C

H

M

C

W

H

M

C

Q

W

H

Y

M

H

M

MW

H

M

F

H

Q

Q

Y

P

MWWWWWW

M

Q

Q

R

Q

M

H

H

Y

F

I

F

F

H

WW

bits

T

S

ND

R

W

W

H

M

G

H

H

YH

HYY

P

AAA

PK

NE

D

N

P

L

QPSD

R

H

H

H

C

S

G

K

S

N

D

P

R

G

T

PT

D

K

S

N

P

R

N

K

H

H

H

CC

W

W

W

C

C

CWWW

C

W

P

YY

H

D

K

K

E

E

E

K

N

N

P

P

Q

R

R

R

P

N

F

F

Q

Q

Y

W

W

W

M

M

M

Q

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

W

ET

S

S

G

P

R

K

N

K

E

E

K

D

E

DT

TS

S

D

L

I

FY

MY

P

M

P

P

M

R

QQ

P

E

H

M

M

M

F

R

F

P

R

N

H

HQ

F

H

F

WCYY

C

C

C

C

W

C

WW

W

I

G

V

I

D

K

R

HYQ

N

K

PQ

Q

H

N

H

H

K

I

R

I

R

E

Y

V

RY

F

P

K

K

N

LT

P

P

E

F

E

S

N

FQ

C

Y

L

A

V

A

E

A

NK

DD

L

L

YY

F

V

A

L

DE

FT

I

CM

M

F

CCCC

C

C

A

LA

LGG

N

QPR

S

Y

HQ

T

F

PPY

P

PN

Y

Y

F

N

F

FF

P

PNRT

D

F

N

S

P

KD

N

T

PPP

I

I KSG

I

TTD

I

I

VS

NN I

DS

G

T

DD

T

E

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

NS

REG

A

T

C

W

C

I

RT

PK

SA

Y

F

MY

FY

N

S

N

Y

W

M

C

W

C

W

M

N

H

C

C

R

E

NTTT

I

P

I

P

N

K

D

K

D

E

T

S

WC

G

N

I

V

F

D

VGS

Y

HY

C

M

H

H

M

W

L

A

V

L I

T

Q

K

T

K

M

H

M

F

YY

C

H

C

H

C

H

C

P

TN

K

P

D

E

A

SAT

F

Y

H

F

Y

F

I

GS

L

A

G

V

YR

S

T

T

I

I

F

I

I

V

ETNNNT

L I

K

D

PPPK

NEG

GG

S

V

TV

PK

DV

DTN

I

S

ET

K

E

E

D

D

K

L

VLA

L

L

A

A

FTV

V

G

K

DG

TDS

SS

G

G

T

EA

D

S

I

VV

V

0.0

L

T

L

G

G

LG

C

S

T

Separation: 30

A

E

bits

T

I

Y

E

I

D

L

LA

LA

A

PS

D

K

A

L

LAA

E

I

I

R

P

P

P

P

P

R

R

R

R

I

I

K

I

E

E

F

F

F

V

I

K

Q

P

P

P

N

R

N

E

N

A

K

Q

Q

M

M

H

F

F

F

R

N

AA

Q

Q

Q

H

M

M

Y

M

Y

P

Y

H

H

N

F

Y

P

N

P

E

D

Q

Q

Q

H

H

M

F

Y

Y

F

Y

Y

F

Y

F

Y

P

R

N

N

K

E

D

Q

Q

Q

Q

F

Y

F

P

R

F

P

P

P

K

Q

R

F

F

F

R

P

R

P

R

K

E

K

I

P

R

P

Q

Y

Y

P

P

P

R

I

E

K

I

A

V

Q

A

Q

Y

Q

Q

Q

Y

R

R

R

K

K

P

N

I

K

PYY

K

L

Q

A

F

R

F

A

TG

I

I

V

S

C

W

Q

W

W

M

H

M

W

Q

Y

W

M

W

H

M

W

W

I

I

I

KD

TR

I

NRT

RG

D

N

H

Y

H

H

Y

F

FY

P

HYY

H

H

H

W

W

H

PS

T

G

K

WWW

WWC

C

H

C

H

C

H

WWW

C

C

C

R

K

K

E

K

E

E

R

N

QN

N

P

P

R

P

F

Q

Q

Y

W

W

W

M

M

M

Q

T

P

E

D

N

S

Y

P

N

K

Y

Separation: 60

G

A

| |

bits

E

D

L

A

GL

VG

V

AT

L I

T

G

G

I

A

L

FV

I

T

Y

L

K

L

L

V

L

L

L

L

V

I

L

I

L

A

A

GG

D

S

V

T

G

D

G

V

G

V

D

S

V

D

V

T

GG

D

V

Q

Q

F

H

M

H

M

H

M

H

M

Q

F

W

H

M

W

H

M

C

W

Y

H

W

C

C

C

W

C

W

C

C

C

C

C

C

C

C

W

C

W

C

W

C

C

W

C

W

C

W

C

A

L

CM

F

Y

C

W

H

M

W

H

M

C

W

F

H

N

M

Y

C

C

W

A

I

I

D

E

D

A

E

D

E

V

L

D

L

AA

L

A

A

L

E

E

P

L

G

L

A

E

W

WW

W

M

W

M

W

M

M

M

M

W

M

Q

M

Q

Q

F

P

R

Y

Q

Q

P

R

M

E

I

V

I

F

L

L

F

V

F

P

R

P

R

P

K

E

QQ

I

QQ

I

V

K

K

M

W

H

H

M

M

M

W

M

Q

M

C

W

M

M

W

H

H

P

Q

Q

G

M

P

Q

N

K

T

H

E

D

S

H

R

R

N

QPP

P

R

QQQ

Y

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

C

W

C

W

H

M

H

MW

C

W

H

H

M

H

C

C

C

M

G

D

S

T

D

S

E

D

S

K

E

D

S

G

N

Y

K

E

D

S

G

T

K

E

D

S

T

E

D

S

E

N

S

I

K

E

D

S

K

D

N

S

N

I

N

S

Y

I

D

N

S

T

Y

I

H

M

Y

H

M

C

H

M

C

W

C

W

W

M

Q

Q

H

Q

Q

H

M

H

M

H

H

M

F

F

P

R

I

P

R

E

P

R

F

E

T

F

Y

F

Y

P

R

R

F

R

F

P

R

P

R

P

R

P

R

E

I

E

D

K

I

R

P

R

K

T

I

W

W

W

W

W

W

H

M

M

W

M

M

M

H

M

H

M

M

H

M

Q

D

V

F

D

V

Q

F

P

R

I

P

R

Q

L

F

P

R

P

R

F

P

E

D

V

E

T

D

V

V

L

A

Q

Q

Q

W

M

M

H

M

M

H

M

Q

H

M

H

M

H

Q

Q

Q

Q

H

H

Q

F

Q

Q

Y

F

Q

Q

F

Q

Y

F

Q

Q

Y

P

R

Q

P

I

P

F

P

R

Y

E

E

I

K

E

I

F

E

D

V

P

R

F

P

R

E

D

I

P

R

N

P

R

P

R

P

R

L

P

R

T

E

T

I

P

E

L

A

L

A

K

E

T

I

E

I

V

E

D

D

K

E

I

I

E

L

A

L

L

S

Q

I

T

G

PK

ND

S

E

G

M

H

F

W

P

N

M

V

NT

R

QPD

V

D

H

R

M

H

MC

HW

S

M

TY

W

RE

G

I

Y

Y

I

K

N

HM

TT

F

FYHH

K

K

C

M

F

D

KG

G

P

F

M

P

P

WWWWY

Q

F

W

W

S

G

E

K

T

D

Q

N

S

P

K

P

N

K

E

D

S

T

P

N

F

A

R

K

N

N

KE

QPK

E

K

P

P

R

L

A

L

F

R

F

F

P

R

F

F

R

N

P

R

I

K

K

E

K

E

T

I

E

T

I

Q

Q

Q

Q

W

F

Y

R

R

F

P

R

P

P

R

P

R

P

R

P

R

I

E

T

K

E

T

E

T

E

T

V

P

R

P

R

T

L

A

V

P

V

R

F

F

F

Q

Q

Y

P

R

I

I

L

L

A

L

Q

I

A

I

K

I

K

I

K

E

A

A

K

E

K

I

L

A

L

A

L

A

L

A

L

P

R

P

R

F

I

I

P

R

F

P

R

K

E

I

E

T

K

T

E

I

T

I

E

T

L

K

L

L

A

V

V

V

V

A

A

E

T

V

K

E

T

E

K

T

V

L

A

F

K

L

L

G

L

L

A

G

A

P

V

Q

A

L

Q

G

H

AG

LV

NVS

T

L

F

YHF

A

G

I G

TN

T

V

V

I

DA

T

YN

ASGG

FS

I F

A

H

F

YYMW

H

I

Y

E

N

LV

L

A

G

G

EAAA

GA

G

GA

DAGT

DV

G

R

T

N

RS

GG

G

E

QP

P

R

K

N

C

W

C

W

H

C

W

H

M

Y

Q

G

H

H

Y

H

W

C

H

H

Y

N

K

D

N

S

V

I

W

H

M

M

C

C

H

C

W

C

C

C

C

C

H

C

H

C

W

H

W

H

Y

H

H

C

W

C

W

C

P

S

C

C

C

M

Q

Y

H

Q

Q

Q

Y

Y

F

K

N

I

F

N

Y

K

N

D

G

G

G

V

V

A

G

T

G

G

D

D

NSS

K

VV

ER

R

L

E

K

DN

KTK

E

E

D

A

SAA

N

I

R

D

R

K

D

PNDDL

D

D

I

V

V

S

N

C

W

H

C

W

C

W

H

M

C

W

H

W

H

M

Q

Y

F

R

N

S

T

K

D

N

S

Q

T

Y

N

S

G

A

T

Y

K

R

N

S

G

A

T

Y

K

R

N

S

T

K

N

S

G

A

T

I

S

G

T

K

S

G

A

T

I

F

K

N

S

G

T

Y

F

K

N

S

T

Y

F

K

N

S

V

T

Y

F

K

N

S

T

Y

F

L

K

N

S

G

V

T

K

N

S

V

T

Y

K

D

S

G

V

T

K

D

S

G

V

G

K

P

D

N

S

Q

V

I

K

D

N

S

G

V

T

I

D

N

S

Q

V

T

I

D

N

S

V

I

F

N

Y

I

F

P

D

N

S

V

T

F

P

S

T

Y

F

K

D

S

Q

T

Y

F

P

N

Y

P

H

N

S

Y

F

D

N

S

V

Y

F

K

D

N

S

V

Y

K

N

S

K

D

N

S

G

V

Y

K

E

D

N

S

G

V

Y

I

D

N

S

G

V

D

N

S

Y

N

Y

N

Q

Y

N

Y

N

YW

N

Y

N

Y

N

YY

N

Y

P

E

L

REL

A

NDA

G

V

L

G

L

M

D

I

YH

G

F

TTA

Y

M

L

WC

H

M

F

Y

H

M

F

Y

R

N

Q

Y

N

Q

Y

D

N

S

TG

AG

A

Q

QQ

P

R

K

K

L

G

V

FS

SA

TATL

NDS

I

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

A

A

A

L

I

FY

D

I

F

H

M

C

W

H

M

C

W

H

M

C

W

C

PK

E

KD

S

VST

F

F

HW

F

YY

Y

QRSE

GG

G

VV

ST

T I

I

0.0

T

I

E

PK

RS

C

M

W

V

GN

A

L

Y

C

C

W

M

W

F

R

V

Y

I

G

R

I

T

C

C

Y

F

CM

WC

W

H

I

Q

C

R

YW

I

T

A

L

V

S

T

D

V

I

Y

FYFP

R

I

F

I V

T

Q

K

C

V

V

S

L I

G

E

S

L

PD

N

H

PQ

T

P

K

N

P

S

QN

S

N

ET

DS

I

K

K

G

V

S

L

A

DG

I

F

R

P

E

E

D

V

YR

R

NA

F

L

R

F

MY

G

R

P

VY

E

TA

VL

V

T

V

F

V

E

I

K

E

QPD

L

A

A

L

E

A

L

D

T

T

K

ES

G

DN

K

G

E

C

Q

Q

C

H

C

C

C

M

W

H

M

Y

H

M

I

K

LTVT

W

L

AR

G

A

Q

Q

N

R

CYN

M

MW

Q

Q

C

C

R

H

M

V

Y

I

H

M

W

W

Q

S

D

E

D

S

I

H

F

H

Q

L

G

FY

C

H

AY

G

R

D

V

R

N

QRR

A

N

L

A

S

L

E

T

S

R

YM

0.02

L

A

P

NS

RP

D

S

G

D

S

E

T

A

V

Y

F

R

K

E

T

E

SP

K

FL

G

L

T

V

E

I

I

D

E

K

DN

I

F

L

A

V

L

A

A

V

ESD

S EG

K

GKNSA

L

A

V

L

A

DK

I

G

A

G

GD

AEG

V

L

A

0.04

L

L

|

|

T

S

L

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

C

C

C

C

C

C

C

C

W

VG

V

C

C

C

H

M

H

M

W

H

N

M

T

I

W

W

WW

W

W

W

W

M

M

M

Q

F

Y

F

P

R

W

W

WWW

W

M

H

M

H

P

R

N

I

H

M

M

H

M

H

M

H

H

M

M

M

F

Q

Y

E

D

WW

M

M

H

H

H

H

F

Q

Y

P

M

C

C

S

Y

MY

C

H

W

M

H

I

C

C

C

C

C

C

C

C

N

N

N

I

N

I

N

I

N

N

Y

F

M

Y

F

H

M

Y

M

W

K

C

C

C

C

C

C

C

C

W

H

H

H

N

H

L

L

H

F

F

E

K

E

S

S

D

L

I

N

R

K

Q

N

K

P

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

V

F

GG

V

S

Q

E

L

G

G

L

W

Q

Y

F

Q

P

R

F

Y

Q

F

YW

K

R

A

PDSS

NSDD

R

A

W

C

C

C

H

H

H

W

L

T

I

G

D

D

K

K

D

D

K

S

K

TVVVVV

DT

N

N

N

V

N

E

D

D

D

E

D

E

N

DTT

G

S

ET

E

S

I

K

S

S

S

SS

N

LT

I

T

K

DTTTT

N

T

E

K

A

PK

D

K

D

E

K

E

E

V

S

I

E

G

ESTT

V

T

ES

V

DGGGGG

ES

ST

T

VT

G

G

L

L

V

LA

I

I

Y

G

V

Q

Q

S

S

G

S

S

C

S

G

G

C

C

S

S

H

M

W

H

W

H

W

H

Y

R

D

T

I

D

R

V

T

I

V

T

I

L

K

R

V

T

I

L

K

E

D

V

T

I

L

K

V

T

E

D

T

N

C

D

D

ES

G

D

A

D

Y

R

Y

R

H

M

H

N

R

E

P

N

Q

D

P

K

G

A

L

V

AV

V

L

VV

LA

0.0

A

V

SGV

V

SS

FT

TTG

I

F

E

L

QR

LA

NA

A

I

F

F

F

F

F

MC

R

Y

Y

Y

Y

E

V

TT

I

I

K

L

I

ST

P

T

T

P

S

N

H

P

C

H

M

S

G

GDG

P

PFPFYYFFP

K

P

PN

NN

NS

PFFP

N

GS

D

N

K

C

GGG

G

P

D

N

D

R

RD

A

L

A

LG

E

A

D

T

N

KS

E

D

D

SA

KS

E

V

L

GNEAL

L

GG

L

VVT

I

I

T

L

L

L

LA

A

AA

T

I

G

PS

ND

I

R

V

AG

K

G

C

C

WW

Q

C

C

W

Y

Q

H

M

M

C

M

M

M

F

M

C

Y

Q

WC

W

W

Q

C

F

R

H

M

H

M

W

W

G

M

M

M

M

G

E

D

S

G

V

K

PP

KS

TG

T

I

TD

I TVVS

Y

LT

I

KG

A

V

DNTS

S

M

Q

M

H

F

M

C

A

Y

QY

F

R

V

V

V

A

TV

ST

DD

VT

V

S

I

I L

RQ

FR

A

V

QQQYQFRE

F

YQ

MH

M

Y

Y

L

L

GR

TD

V

T

ESG

QQ

FRR

Q

F

QQQ

I

G

E

A

L

A

L

D

G

I

V

I

I

KE

I EK

V

RR

EL

KR

KA

D

N

I V

R

S

NR

NR

FR

E

V

A

KV

I K

A

E

A

KLL

G

DE

LDSN

S

N

V

E

G

E

LV

D

AEAEK

A

L

V

L

G

L

L

E

ADEA

GKDG

ALSKL

L

L

A

0.04

L

A

G

A

GA

I

AAA

V

I

H

LLLA

G

K

L

A

A

C

C

M

WWW

W

DDDG

VVL

V

A

LVV

R

R

YQ

M

YQ

F

Y

H

Q

MMCM

H

M

MY

M

M

M

M

M

WW

G

Q

QY

C

C

I

G

GDSE

SESE

SD

DEKTTE

G

G

SKTKKS

L

V

Q

FFFR

YQ

F

Q

F

M

Y

M

I

KA

I L

V

GGG

V

E

S

T

TD

VV

VT

G

V

GV

SV

TT

G

S

G

G

G

I

FY

L

A

A

G

L

I

R

Q

|

|

A

L

MY

G

C

C

H

E

D

T

S

N

G

F

H

YHMM

I

H

PA

R

MY

F

CC

WW

K

C

P

R

Y

I

W

P

Q

R

N

H

T

V

K

E

D

S

Q

I

1

2

3

4

5

6

7

8

9

10

11

V

F

F

bits

L

A

D

E

S

K

YP

bits

V

K

E

T

S

VT

F

DT

I

V

S

G

S

P

K

N

Y

H

Y

F

P

H

C

T

F

N

S

N

F

P

D

K

P

P

T

T

P

N

S

G

NTS

K

N

DT

N

SV

S

I

G

bits

L

A

MY

F

F

PG

NVY

R

E

L

0.02

KV

QNQ

R

R

Q

F

E

A

L

I

L

K

EEKA

K I EVL

I EA

RRR

I

N

N

RQQ

RRR

I

I

V

C

W

D

E

CCCCC

C

C

W

F

H

M

Y

H

Y

C

W

C

R

YF

G

N

D

E

R

PK

G

A

ND

S

K

VD

E

A

L

A

L

|

G

0.0

YF I V

T

R

0.02

I

D

K

A

SN

LED

N

S

Separation: 12

0.04

M

I

L

Separation: 9

0.06

R I

AEE

V

0.0

I

N

H

N

W

W

I

I

T

K

Q

T

Q

D

S

S

E

C

C

F

H

Y

M

I

L

G

D

F

M

Y

F

H

Y

M

V

R

W

LA

F

V

0.04

L

LDDL

EAAA

KKK

LVV

A

ALES

LED

Q

Q

C

|

I

G

F

R

H

M

C

Y

H

M

C

W

C

W

Q

P

A

YH

R

V

V

AA

GGA

L G

C

C

W

H

M

W

H

M

W

0.02

Y

V

QQ

NQ

I

KP

E

N

S

G

T

A

ER

V

D

V

DT

G

Y

M

W

0.0

V

F

L

A

L

T

PG

N

R

G

D

V

I

K

TSG

V

GG

G

H

W

P

S

K

Separation: 20

E

0.04

I

L

L

F

F

0.06

RT

M

Y

M

FL

A

V

R

Q

F

YY

M

M

Q

M

M

W

W

W

AV

L

E

0.0

D

0.02

KG

F

T

M

H

M

RRVL

R

Q

I

R

Q

F

M

F

FY

Q

MW

Q

I

R

G

A

I

L

V

I

V

E

I

A

|

0.04

A

V

K

E

R

QQ

LTVV

I

R

I

K

S

E

T

RQ

A

V

GD

S

V

G

D

A

E

L

D

L

ENNS

K

D

PPPK

E

DN

NS

E

L

KA

L

E

R

Separation: 7

0.06

E

S

T

Q

PQ

R

G

L

A

E

L

I

0.02

L

NEE

I

A

E

GSE

EKD

KSE

DT K

G

S

L

RAL

S

N

LA

A

R

L

KA

K

DDG

A

V

T

E

DD

EK

D

N

A

L

0.04

L

G

AE

LK

D

L

A

GG

G

| |

| |

| |

L

A

|

0.06

A

AA

0.12

0.1

0.08

0.06

0.04

0.02

0.0

LL

bits

Separation: 2

Figure 4: Sequence logos for increasing sequence separation. A margin of 4 residues on both ends of the logo is used. Symbols displayed upside down indicate that their amount is lower than the background amount. This figure is available in full color at

http://www.cbs.dtu.dk/services/distanceP/.

and 13, the center peak shifts from slight over to slight underrepresentation of alanine: helices are no longer dominant

for distances below the threshold.

The smearing out of the center peak for large sequence

separations does not indicate lack of bending/turn properties, but reflects that such amino acids can be placed in a

large region in between the two separated amino acids. What

we see for small separations, is that the signal in addition

must be located in a very specific position relative to the

separated amino acids.

Neural networks: predictions and behavior

As found above, optimized prediction of distance constraints

for varying sequence separation, should be performed by a

non-linear predictor. Here we use neural networks. Clearly,

far most of the information is found in the sequence logos,

so we expect only a few hidden neurons (units) to be necessary in the design of the neural network architecture. As

earlier, we only use two-layer networks with one output unit

(above/below threshold). We fix the number of hidden units

to 5, but the size of the input field may vary.

We start out by quantitatively investigating the relation between the sequence motifs in the logos above, and

the amount of sequence context needed in the prediction

scheme. First, we choose the amount of sequence context,

by starting with local windows around the separated amino

acids and the extending the sequence region, r, to be included. We only include additional residues in the direction

towards the center. The number of residues used in the direction away from the center is fixed to 4, except in the cases of

r = 0 and r = 2, where that number is zero and two respectively. At some point the two sequence regions may reach

each other. Whether they overlap, or just connect does not

affect the performance. For each r = 0; 2; 4; 6; 8; 10; 12; 14,

we trained networks for sequence separations 2 to 99, resulting in the training of 8000 networks, since we used 10 crossvalidation sets. The performances in “fraction correct” and

“correlation coefficient” are shown in Figure 5.

Figure 5(b) shows the performance in terms of correlation coefficient. We see that each time the sequence separation becomes so large that the two context regions do not

connect the performance drops when compared to contexts

that merge into a single window. This behavior is generic, it

happens consistently for increasing size of sequence context

region, though the phenomenon is asymptotically decreasing. An effect can be found up to a region size of about 30,

see Figure 6. Several features appear on the performance

curve, and they can all be explained by the statistical observations made earlier. First, the curve with no context (r = 0)

corresponds to the curve one obtains using probability pair

density functions (Lund et al. 1997). The bad performance

of such a predictor is to be expected due to the lack of motif

that was not included in the model. The green curve (r = 4)

is the curve that corresponds to the neural network predictor in (Lund et al. 1997). As observed therein a significant

improvement is obtained when including just a little bit of

sequence context. As the size of the context region is increased, so is the performance up to about sequence separation 30. Then the performance remains the same indepen-

dent of the sequence separation. We even note the drop in

performance for sequence separation 12, as predicted from

the statistical analysis above. It is also characteristic that,

as the distribution of physical distances approaches the universal shape, and as the center peak of the sequence logos

vanishes, the performance drops and becomes constant with

approximately 0.15 in correlation coefficient. The change

in prediction performance takes place at sequence separations for which changes in the distribution of physical distance and sequence motifs change. Due to the not vanishing signal in the logos for large separations, it is possible

to predict distance constraints significantly better than random, and better than with a no-context approach. The conclusion is not surprising: the best performing network is that

which uses as much context as possible. However, for large

sequence separations more than 30–35 residues, the same

performance is obtained by just using a small amount of

sequence context around the separated amino acids, as in

(Lund et al. 1997). We found that the performance between

the worst and best cross-validation set is about 0.1 for separations up to 12 residues, then about 0.07 for separations up

to 30 residues, and then 0.1–0.2 for separations larger than

30. Hence, at sequence separation 31 the performance start

to fluctuate indicating that the sequence motif becomes less

defined throughout the sequences in the respective crossvalidation sets. Hence we can use the networks as an indicator for when a sequence motif is well defined.

As an independent test, we predicted on nine CASP3

(http://predictioncenter.llnl.gov/casp3/) targets (and submitted the predictions). None of these targets (T0053 T0067

T0071 T0077 T0081 T0082 T0083 T0084 T0085) have sequence similarity to any sequence with known structure. The

previous method (Lund et al. 1997) had on the average

64.5% correct predictions and an average correlation coefficient of 0.224. The method presented here has on average

70.3% correct predictions and an average correlation coefficient of 0.249. The performance of the original method corresponds to the performance previously published (Lund et

al. 1997). However, the average measure is a less convenient

way to report the performance, as the good performance on

small separations are biased due to the many large separation

networks that have essentially the same performance. Therefore we have also constructed performance curves similar to

the one in Figure 5 (not shown). The main features of the

prediction curve was present. In Figure 8 we show a prediction example of the distance constraints for T0067. The

server (http://www.cbs.dtu.dk/services/distanceP/) provides

predictions up a sequence separation of 100. Notice that

predictions up to a sequence separation of 30 clearly capture

the main part of the distance constraints.

We also investigated the qualitative relation between the

network performance and information content in the sequence logos. Interestingly, and in agreement with the observations made earlier, we found that the two curves have

the same qualitative behavior as the sequence separation increase (Figure 7). Both curves peak at separation 3, both

curves drop at separation 12, and both curves reaches the

plateau for sequence separation 30. We note that the relative

entropy curve increases slightly as the separation increases.

Mean performances

(a)

0.75

r=0

r=2

r=4

r=6

r=8

r=10

r=12

r=14

Fraction correct

0.70

0.65

0.60

0.55

0.50

0.45

0

20

40

60

80

Sequence Separation (residues)

100

Mean performances

(b)

0.50

r=0

r=2

r=4

r=6

r=8

r=10

r=12

r=14

Correlation coefficient

0.40

0.30

0.20

0.10

0.00

0

20

40

60

80

Sequence separation (residues)

100

Figure 5: The performance as function of increasing sequence context when predicting distance constraints. The sequence context is the

number of additional residues r = 0; 2; 4; 6; 8; 10; 12; 14 from the amino acid and toward the other amino acid. The performance is showed in

percentage (a), and in correlation coefficient (b). This figure is available in full color at http://www.cbs.dtu.dk/services/distanceP/.

180

160

140

120

100

80

60

40

CASP3 target T0067

20

This is due to a well known artifact for decreasing sampling

size, and entropy measures. The correlation between the

curves also indicates that the most information used by the

predictor stem from the motifs in the sequence logos.

180

Single window curves

0.50

160

Plain

Correlation coefficient

0.40

140

Profile

120

0.30

100

80

0.20

60

0.10

40

0.00

20

0

20

40

60

80

Sequence Separation (residues)

100

Figure 6: The performance curve using a single windows only.

We also show the performance when including homologue in the

respective tests. This complete a profile.

Relative entropy and NN performance

Arbitrary scale

20

40

60

80

]0.4 ; 0.5]

]0.7 ; 0.8]

]0.2 ; 0.3]

]0.5 ; 0.6]

]0.8 ; 0.9]

]0.3 ; 0.4]

]0.6 ; 0.7]

]0.9 ; 1.0]

Figure 8: Prediction of distance constraints for the CASP3 target T0067. The upper triangle is the distance constraints of published coordinates, where dark points indicate distances above

the thresholds (mean values) and light points distances below the

threshold. The lower triangle shows the actual neural network

predictions. The scale indicates the predicted probability for sequence separations having distances below the thresholds. Thus

light points represent prediction for distances below the thresholds and dark points prediction for distances above the threshold. The numbers along the edges of the plot show the position in the sequence. This figure is available in full color at

http://www.cbs.dtu.dk/services/distanceP/.

NN-performance

Relative entropy

0

] - ; 0.2]

100

Sequence separation (residues)

Figure 7: Information content and network performance for increasing sequence separation.

Finally, we conclude by an analysis of the neural network

weight composition with the aim of revealing significant

motifs used in the learning process. In general, we found the

same motifs appearing for the each of the 10 cross-validation

sets for a given sequence separation. As described in the

Methods section, we compute saliency logos of the network

parameters, that is we display the amino acids for which

the corresponding weights, if removed, cause the largest increase in network error. We made saliency logos for each of

the 5 hidden neurons. For the short sequence separations the

network strongly punish having a proline in the center of the

input field, that is a proline in between the separated amino

acids. This makes sense as proline is not located within secondary structure elements, and the helix lengths for small

separations are smaller than the mean distances. (The network has learnt that proline is not present in helices.) In

contrast, the presence of aspartic acid can have a positive or

negative effect depending on the position in the input field.

For larger sequence separations, some of the neurons display a three peak motif resembling what was found in the sequence logos. The center of such logos can be very glycine

rich, but also show a strong punishment for valine or tryptophan. Sometimes two neurons can share the motif of what is

present for one neuron in another cross-validation set. Other

details can be found as well. Here we just outlined the main

features: the networks not only look for favorable amino

acids, they also detects those that are not favorable at all. In

Figure 9 we show an example of the motifs for 2 of the 5

hidden neurons for a network with sequence separation 13.

D

NN

DHD

H

N

A

Q

K

H

G

G

G

R

R

G

E

V

E

V

GHK

G

XH

P

N

P

F

G

KC

C

D

N

G

N

DY

N

N

P

N

X

W

F

K

D

V

Y

F

D

V

V

K

W

K

N

N

Y

H

W

I

W

X

X

E

T

H

N

W

V

E

F

H

H

Y

N

V

Y

V

Y

R

S

S

V

D

S

Y

W

T

F

T

T

H

D

R

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

C

M

M

W

G

Q

F

H

M

M

T

L LI

P

G

V

DG

E

G

GP

D

NP

FMYLF

I

D

YWPPE

TR

FHN

E

CSCN

E

NS

I

V

I

V

V F

I

C

L

MNL I R

EN

E

FAL

SE

K

GDQRL

M

DG

GN

R

CVC

QVL

Y

C

I

NK

G

D K

DKNNP

GP

KX

W Y FV

Y FWV

WV

C

SS

PN

W

L

FV

P

T

N

P

N

G

W

KG

D

C

I

FML

G

N

F

G E

XXL

NPR

N

RP

G

D

IF

W

WW

W

I

X

G

TX

DAX

S

HH

S

GW

C

I

F

KG

N

W

E

W

L

W

Q

EE

LWC

RC

S

TW

FWV

CF

Q

C

HA

QT

V

C

CH

R

I

M

Y

I

M

E

V

C

H

H

Y

K

V

A

C

T

W

H

A

Q

D

R

Y

T

P

P

E

R

D

E

E

X

D

X

EA

Q

SY

V

GV

I

Q

H

R

T

Q

P

M

Q

Y

N

L

A

R

Y

P

R

A

R

H

W

A

S

F

Y

T

T

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

Y

K

M

Y

L

G

S

M

M

V

R

E

D

M

S

K

KW

H

F

M

D

M

T

Q

H

M

V

Q

C

Y

Q

P

A

D

T

T

X

T

Q

H

X

X

X

R

TA

P

I

Q

V

I

A

Y

A

X

P

H

E

H

L

X

T

S

S

P

S

D

K

F

K

H

S

DS

T

Y

D

A

E

NX

K

K

A

P

H

X

A

XX

X

T

W

F

Q

A

F

A

H

I

S

M

K

N

S

K

T

V

S

Q

D

C

T

W

R

N

GX

K

L

F

K

E

M

Q

R

E

YYA

G

C

N

E

L

K

R

M

C

G

GGG

G

FML

M

S

C

FAW

N

S

DW

N

I

T

Y

T

Q

P

A

N

S

A

Q

L

A

K

L

F

Y

T

XX

E

Q

I

X

G

E

EQS

E

X

S

K

NW

K

G

E

K

V

E

M

R

Q

K

A

L

X

R

S

G

X

P

I

R

X

W

X

K

Y

H

A

T

Q

Q

A

P

K

A

D

K

T

R

N

T

N

T

H

F

H

M

Y

M

N

S

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

M

C

S

R

E

L

Q

MAY

A

C

A

R

F

A

X

Y

X

X

D

H

H

X

E

C

A

H

X

P

P

C

L