Information-Geometric Studies on Neuronal Spike Trains Shun-ichi Amari

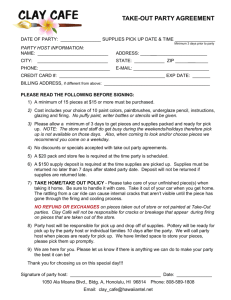


Computational Neuroscience ESPRC Workshop, Warwick

Information-Geometric Studies on Neuronal Spike Trains

Shun-ichi Amari

RIKEN Brain Science Institute

Mathematical Neuroscience Unit

Neural Firing

Neurons $x_1, x_2, x_3, \ldots, x_n$, with $x_i = 1, 0$

$p(\mathbf{x}) = p(x_1, x_2, \ldots, x_n)$: joint probability

$S = \{p(x_1, x_2, \ldots, x_n)\}$

$r_i = E[x_i]$: firing rate

$v_{ij} = \mathrm{Cov}[x_i, x_j]$: covariance (correlation)

higher-order correlations

orthogonal decomposition

Multiple spike sequence:

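$\{x_i(t),\ i = 1, \ldots, n;\ t = 1, \ldots, T\}$, $\quad x_i(t) = 0, 1$

[Figure: spike raster, neuron $i$ (1 to $n$) against time $t$ (1 to $T$), with entries $x_1(1), \ldots, x_1(T)$; $x_2(1), \ldots, x_2(T)$; ...; $x_n(1), \ldots, x_n(T)$]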

Information Geometry ?

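$S = \{p(x; \mu, \sigma)\}$

$p(x; \mu, \sigma) = \dfrac{1}{\sqrt{2\pi}\,\sigma} \exp\left\{-\dfrac{(x - \mu)^2}{2\sigma^2}\right\}$

$S = \{p(x; \theta)\}$, $\quad \theta = (\mu, \sigma)$

[Figure: the space of distributions $\{p(x)\}$ containing $S$, with coordinate axes $\mu$ and $\sigma$]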

Riemannian metric

Dual affine connections

Information Geometry

[Diagram: Information Geometry (Riemannian manifold, dual affine connections, manifold of probability distributions) linked to Systems Theory, Statistics, Combinatorics, Information Theory, Neural Networks, Information Sciences, Physics, Math., AI]

Manifold of Probability Distributions

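$x = 1, 2, 3$

$\{p(x)\}$, $\quad \mathbf{p} = (p_1, p_2, p_3)$, $\quad p_1 + p_2 + p_3 = 1$

$M = \{p(x; \theta)\}$

[Figure: probability simplex with axes $p_1$, $p_2$, $p_3$ and a submanifold $M$]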

Manifold of Probability Distributions

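$M = \{P(x, \mathbf{p})\}$, $\quad x = 1, 2, 3$

$P(x, \mathbf{p}) = \sum_{i=1}^{3} p_i\, \delta_i(x)$ $\quad (p_3 = 1 - p_1 - p_2)$

$\mathbf{p} = (p_1, p_2, p_3)$, $\quad p_1 + p_2 + p_3 = 1$

$\xi_i = \sqrt{p_i}$, $\quad \xi_1^2 + \xi_2^2 + \xi_3^2 = 1$

$\theta_1 = \log \dfrac{p_1}{p_3}$, $\quad \theta_2 = \log \dfrac{p_2}{p_3}$, $\quad \theta = (\theta_1, \theta_2)$

[Figure: the manifold $M$ drawn in these coordinate systems]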

Invariance

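$S = \{p(x, \theta)\}$

1. Invariant under reparameterization: $p(x, \theta) = \bar{p}(x, \xi)$

$D = \sum_i (\theta_i - \theta_i')^2$

2. Invariant under different representation: $y = y(x)$, $\quad \{\bar{p}(y, \theta)\} = \{p(x, \theta)\}$

$\int |p(x, \theta_1) - p(x, \theta_2)|^2\, dx \ne \int |\bar{p}(y, \theta_1) - \bar{p}(y, \theta_2)|^2\, dy$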

Two Structures

Riemannian metric

affine connection --- geodesic

$g_{ij} = E\left[\partial_i \log p \;\, \partial_j \log p\right]$: Fisher information

$ds^2 = \sum g_{ij}(\theta)\, d\theta^i d\theta^j = \langle d\theta, d\theta \rangle$

Affine Connection

covariant derivative; parallel transport

$\nabla_X Y$, $\quad \Pi_c X = Y$

geodesic: $\nabla_{\dot{x}} \dot{x} = 0$, $\quad x = x(t)$

$s = \int \sqrt{g_{ij}(\theta)\, d\theta^i d\theta^j}$

minimal distance: straight line

$\langle X, Y \rangle = \langle \Pi X, \Pi Y \rangle$

$\langle X, Y \rangle = g_{ij} X^i Y^j$

Duality: two affine connections

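$\langle X, Y \rangle = \langle \Pi X, \Pi^* Y \rangle$

$\{S, g, \nabla, \nabla^*\}$

$\langle X, Y \rangle = g_{ij} X^i Y^j$

[Figure: vectors $X$ and $Y$ carried to $\Pi X$ and $\Pi^* Y$ by the two parallel transports]

Riemannian geometry: $\nabla = \nabla^*$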

Dual Affine Connections

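$(\nabla, \nabla^*)$

e-geodesic: $\log r(x, t) = t \log p(x) + (1 - t) \log q(x) + c(t)$, $\quad \nabla_{\dot{x}} \dot{x}(t) = 0$

m-geodesic: $r(x, t) = t\, p(x) + (1 - t)\, q(x)$, $\quad \nabla^*_{\dot{x}} \dot{x}(t) = 0$

[Figure: e-geodesic and m-geodesic joining $q(x)$ and $p(x)$]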
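As a minimal sketch of the two geodesics for a discrete distribution, the Python below evaluates the m-geodesic $r = t\,p + (1-t)\,q$ and the e-geodesic $\log r = t \log p + (1-t) \log q + c(t)$. The function names and the three-state example distributions are illustrative assumptions, not taken from the talk.

```python
import math

def m_geodesic(p, q, t):
    """Mixture geodesic: r(x, t) = t p(x) + (1 - t) q(x)."""
    return [t * pi + (1 - t) * qi for pi, qi in zip(p, q)]

def e_geodesic(p, q, t):
    """Exponential geodesic: log r = t log p + (1 - t) log q + c(t)."""
    unnorm = [math.exp(t * math.log(pi) + (1 - t) * math.log(qi))
              for pi, qi in zip(p, q)]
    z = sum(unnorm)  # normalizer, equal to exp(-c(t))
    return [w / z for w in unnorm]

# Hypothetical example distributions on x = 1, 2, 3 (all entries positive).
p = [0.7, 0.2, 0.1]
q = [0.1, 0.3, 0.6]
for t in (0.0, 0.5, 1.0):
    print(t, m_geodesic(p, q, t), e_geodesic(p, q, t))
```

Both curves start at $q$ ($t = 0$) and end at $p$ ($t = 1$), but they pass through different interior points: one is straight in the probabilities, the other in the log-probabilities.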

Information Geometry

-- Dually Flat Manifold

Convex Analysis

Legendre transformation

Divergence

Pythagorean theorem

I-projection

Dually Flat Manifold

1. Potential functions: convex

2. Divergence $D[p : q]$ (Bregman, Legendre transformation)

3. Pythagoras theorem: $D[p : q] + D[q : r] = D[p : r]$

4. Projection theorem

5. Dual foliation

[Figure: triangle with vertices $p$, $q$, $r$]

Pythagoras Theorem

$D[p : r] = D[p : q] + D[q : r]$

$p, q$: same marginals $\eta_1, \eta_2$

$r, q$: same correlations

$D[p : q] = \sum_x p(x) \log \dfrac{p(x)}{q(x)}$

[Figure: triangle with vertices $p$, $q$, $r$; labels: correlations, independent]

estimation of correlation

testing

invariant under firing rates
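The decomposition $D[p:r] = D[p:q] + D[q:r]$ can be checked numerically for two neurons. The sketch below assumes that $q$ is the independent distribution with the same marginals as $p$, and that $r$ is another independent distribution; the numbers and helper names are hypothetical.

```python
import math
from itertools import product

def kl(p, q):
    """KL divergence D[p : q] = sum_x p(x) log(p(x) / q(x))."""
    return sum(p[x] * math.log(p[x] / q[x]) for x in p if p[x] > 0)

def independent(r1, r2):
    """Product distribution of two binary neurons with firing rates r1, r2."""
    return {(a, b): (r1 if a else 1 - r1) * (r2 if b else 1 - r2)
            for a, b in product((0, 1), repeat=2)}

# Hypothetical correlated distribution p(x1, x2), keyed by (x1, x2).
p = {(0, 0): 0.5, (0, 1): 0.2, (1, 0): 0.1, (1, 1): 0.2}
r1 = p[(1, 0)] + p[(1, 1)]
r2 = p[(0, 1)] + p[(1, 1)]

q = independent(r1, r2)    # same marginals as p, no correlation
r = independent(0.6, 0.5)  # any other independent distribution

lhs = kl(p, r)
rhs = kl(p, q) + kl(q, r)
print(lhs, rhs)
```

The first term $D[p:q]$ depends only on how far $p$ is from independence, and the second only on the difference in firing rates.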

Projection Theorem

$\min_{Q \in M} D[P : Q]$: $\quad Q$ = m-geodesic projection of $P$ to $M$

$\min_{Q \in M} D[Q : P]$: $\quad Q$ = e-geodesic projection of $P$ to $M$


spatial correlations

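$\mathbf{x} = (x_1, \ldots, x_n)$

$p(\mathbf{x}) = \exp\left\{\sum_i \theta_i x_i + \sum_{i<j} \theta_{ij} x_i x_j + \cdots + \theta_{1 \cdots n}\, x_1 \cdots x_n - \psi(\theta)\right\}$

$r_i = E[x_i] = \mathrm{Prob}\{x_i = 1\}$

$r_{ij} = E[x_i x_j] = \mathrm{Prob}\{x_i = x_j = 1\}$

$\cdots$

$\theta = (\theta_i, \theta_{ij}, \ldots, \theta_{1 \cdots n})$

$r = (r_i, r_{ij}, \ldots, r_{1 \cdots n})$

orthogonal structure

$S = \{p(\mathbf{x})\}$: coordinates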

Spatio-temporal correlations

correlated Poisson: $p(\mathbf{x})$, temporally independent

correlated renewal process: $p(\mathbf{x}_t)$, firing rate $r_i(t)$ modified, spatial correlations fixed

Two neurons:

$\{p_{00}, p_{01}, p_{10}, p_{11}\}$

[Figure: neurons $x_1$, $x_2$, $x_3$]

firing rates: $r_1, r_2$; $r_{12}$

correlation: covariance?

Correlations of Neural Firing

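$\{p(x_1, x_2)\}$: $\quad \{p_{00}, p_{10}, p_{01}, p_{11}\}$

[Figure: two neurons $x_1$, $x_2$]

$r_1 = p_{1\cdot} = p_{10} + p_{11}$

$r_2 = p_{\cdot 1} = p_{01} + p_{11}$

$\theta = \log \dfrac{p_{11}\, p_{00}}{p_{10}\, p_{01}}$

$\{(r_1, r_2), \theta\}$: orthogonal coordinates (firing rates; correlations)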

Independent Distributions

$x_1, x_2 = 0, 1$

$S = \{p(x_1, x_2)\}$

$M = \{q(x_1)\, q(x_2)\}$

Orthogonal coordinates:

[Figure: orthogonal coordinates of $S$; labels: correlation fixed, scale of correlation, firing rates $r$, $r'$]

two neuron case

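$r_1, r_2, r_{12}$; $\quad \theta_1, \theta_2, \theta_{12}$

$\theta_{12} = \log \dfrac{p_{00}\, p_{11}}{p_{01}\, p_{10}} = \log \dfrac{r_{12}\,(1 + r_{12} - r_1 - r_2)}{(r_1 - r_{12})(r_2 - r_{12})}$

$r_{12} = f(r_1, r_2, \theta)$

$r_{12}(t) = f(r_1(t), r_2(t), \theta)$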
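The map $r_{12} = f(r_1, r_2, \theta)$ is not written out on the slide. One way to obtain it, sketched here as an assumption, is to solve the defining relation $e^{\theta} = r_{12}(1 + r_{12} - r_1 - r_2) / \{(r_1 - r_{12})(r_2 - r_{12})\}$ as a quadratic in $r_{12}$. The function names are hypothetical.

```python
import math

def theta_from_table(p00, p01, p10, p11):
    """Log odds ratio theta = log(p00 p11 / (p01 p10))."""
    return math.log(p00 * p11 / (p01 * p10))

def r12_from_theta(r1, r2, theta):
    """Joint firing probability r12 = f(r1, r2, theta), for 0 < r1, r2 < 1.

    Rearranging the definition of theta gives
        (k - 1) r12^2 - [1 + (k - 1)(r1 + r2)] r12 + k r1 r2 = 0,  k = exp(theta).
    The admissible root is written in a form that stays finite at k = 1.
    """
    k = math.exp(theta)
    a = k - 1.0
    b = 1.0 + a * (r1 + r2)
    c = k * r1 * r2
    disc = b * b - 4.0 * a * c
    return 2.0 * c / (b + math.sqrt(disc))

# Hypothetical table: p00 = 0.5, p01 = 0.2, p10 = 0.1, p11 = 0.2.
theta = theta_from_table(0.5, 0.2, 0.1, 0.2)
r12 = r12_from_theta(0.3, 0.4, theta)
print(theta, r12)
```

With $\theta$ held fixed, the same function gives $r_{12}(t)$ from time-varying rates $r_1(t)$, $r_2(t)$; at $\theta = 0$ it reduces to $r_{12} = r_1 r_2$.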

Decomposition of KL-divergence

$D[p : r] = D[p : q] + D[q : r]$

$p, q$: same marginals $\eta_1, \eta_2$

$r, q$: same correlations

$D[p : q] = \sum_x p(x) \log \dfrac{p(x)}{q(x)}$

[Figure: triangle with vertices $p$, $q$, $r$; labels: correlations, independent]

pairwise correlations

covariance: $c_{ij} = r_{ij} - r_i r_j$

not orthogonal

independent distributions: $r_{ij} = r_i r_j$, $\quad r_{ijk} = r_i r_j r_k$, $\ldots$

How to generate correlated spikes?

(Niebur, Neural Computation [2007])

higher-order correlations

Orthogonal higher-order correlations

$\theta = (\theta_i, \theta_{ij}; \ldots, \theta_{1 \cdots n})$

$r = (r_i, r_{ij}; \ldots, r_{1 \cdots n})$

tractable models of distributions $M = \{p(\mathbf{x})\}$

Full model: $2^n - 1$ parameters

Homogeneous model: $n$ parameters

Boltzmann machine: only pairwise correlations

Mixture model

Models of Correlated Spike Distributions (1)

Reference $y(t)$: 0110001011

$\tilde{x}_1(t)$: 1001011001

$\tilde{x}_2(t)$: 0101101101

$\tilde{x}_3(t)$

Models of Correlated Spike Distributions (2)

$x_i(t) = \tilde{x}_i(t) \circ y(t)$

additive interaction

eliminating interaction

Niebur replacement

$y(t)$: 0110001011

$\tilde{x}_1(t)$: 1001001101

$\tilde{x}_2(t)$: 1100010011

Mixture Model

$y(t)$: 0110001011 (mixing sequence)

$p_1(\mathbf{x}; \mathbf{u}) = \prod_i p(x_i, u_i)$ when $y = 1$

$p_2(\mathbf{x}; \mathbf{v}) = \prod_i p(x_i, v_i)$ when $y = 0$

$p(x, u)$: $x = 1$ with probability $u$

$p(\mathbf{x}) = \sum_y p(y)\, p(\mathbf{x} \mid y)$

Mixture model

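$p(\mathbf{x}; \mathbf{u}) = \prod_i p(x_i, u_i)$: independent distribution

$p(\mathbf{x}; \mathbf{u}, \mathbf{v}, m) = m\, p(\mathbf{x}; \mathbf{u}) + \bar{m}\, p(\mathbf{x}; \mathbf{v})$, $\quad \bar{m} = 1 - m$

$\mathbf{u} = (u_1, \ldots, u_n)$, $\quad \mathbf{v} = (v_1, \ldots, v_n)$

$M_n = \{p(\mathbf{x}; \mathbf{u}, \mathbf{v}, m)\}$, $\quad S_n = \{p(\mathbf{x})\}$

firing rates:

$r_i = m u_i + \bar{m} v_i$

$r_{ij} = m u_i u_j + \bar{m} v_i v_j$

$r_{ijk} = m u_i u_j u_k + \bar{m} v_i v_j v_k$

$\cdots$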
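The moment formulas of the mixture model can be verified by brute-force enumeration. The sketch below builds $p(\mathbf{x}; \mathbf{u}, \mathbf{v}, m)$ for three neurons and compares its joint firing probabilities with $m\,u_i u_j \cdots + \bar{m}\,v_i v_j \cdots$. The parameter values and function names are illustrative assumptions.

```python
from itertools import product

def product_bernoulli(x, u):
    """Independent distribution: prod_i p(x_i, u_i), x_i = 1 with probability u_i."""
    prob = 1.0
    for xi, ui in zip(x, u):
        prob *= ui if xi else 1.0 - ui
    return prob

def mixture(u, v, m):
    """p(x; u, v, m) = m p(x; u) + (1 - m) p(x; v) over all binary patterns x."""
    n = len(u)
    return {x: m * product_bernoulli(x, u) + (1 - m) * product_bernoulli(x, v)
            for x in product((0, 1), repeat=n)}

def joint_rate(p, idx):
    """E[prod_{i in idx} x_i] = Prob{all neurons in idx fire}."""
    return sum(prob for x, prob in p.items() if all(x[i] for i in idx))

# Hypothetical parameters for n = 3 neurons.
u = (0.8, 0.7, 0.6)
v = (0.1, 0.2, 0.3)
m = 0.4
p = mixture(u, v, m)

r_1 = joint_rate(p, (0,))
r_12 = joint_rate(p, (0, 1))
r_123 = joint_rate(p, (0, 1, 2))
print(r_1, r_12, r_123)
```

Because the same two-component form fixes correlations of every order, the model has only $2n + 1$ parameters while still producing higher-order interactions.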

Conditional distributions and mixture

$y = 1, 2, 3, \ldots$

$p(\mathbf{x} \mid y)$: Hebb assembly $y$

$p(\mathbf{x}) = \sum_y p(y)\, p(\mathbf{x} \mid y)$

State transition of Hebb assemblies: mixture and covariances

$p(\mathbf{x}, t) = (1 - t)\, p_u(\mathbf{x}) + t\, p_v(\mathbf{x})$

$c_{ij}(t) = (1 - t)\, c_{ij}(u) + t\, c_{ij}(v) + t(1 - t)(u_i - v_i)(u_j - v_j)$

Extra increase (decrease) of covariances:

within Hebb assemblies: increase

between Hebb assemblies: decrease
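A small numerical illustration of the extra covariance term $t(1-t)(u_i - v_i)(u_j - v_j)$: the sketch assumes that $p_u$ and $p_v$ are independent (product) distributions, so that $c_{ij}(u) = c_{ij}(v) = 0$ for $i \ne j$, and that two hypothetical Hebb assemblies consist of neurons {0, 1} and {2, 3}.

```python
def mixture_cov(u, v, t, i, j):
    """Covariance of x_i, x_j (i != j) under (1 - t) p_u + t p_v, from moments."""
    r_i = (1 - t) * u[i] + t * v[i]
    r_j = (1 - t) * u[j] + t * v[j]
    r_ij = (1 - t) * u[i] * u[j] + t * v[i] * v[j]
    return r_ij - r_i * r_j

def extra_term(u, v, t, i, j):
    """The term t (1 - t) (u_i - v_i) (u_j - v_j) from the covariance formula."""
    return t * (1 - t) * (u[i] - v[i]) * (u[j] - v[j])

# Assembly A = neurons 0, 1 is active under u; assembly B = neurons 2, 3 under v.
u = (0.8, 0.8, 0.1, 0.1)
v = (0.1, 0.1, 0.8, 0.8)
t = 0.5

within = mixture_cov(u, v, t, 0, 1)   # both neurons in assembly A
between = mixture_cov(u, v, t, 0, 2)  # one neuron in A, one in B
print(within, between)
```

During the transition the covariance within an assembly is positive and the covariance between assemblies is negative, as stated on the slide.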

Temporal correlations

{x(t)}, t =1, 2, …T

Prob{x(1),..., x(T )}

Poisson sequence:

Markov chain

Renewal process

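Orthogonal parameter of correlation: Markov case

$p(x_{t+1} \mid x_t)$, $\quad a_{ij} = \Pr\{x_{t+1} = i \mid x_t = j\}$

$\Pr\{x_1, \ldots, x_T\} = \Pr(x_1) \prod_t p(x_{t+1} \mid x_t)$

$r_1 = \Pr\{x_t = 1\}$

$c = r_{11} - r_1^2$

$\theta = \log \dfrac{a_{11}\, a_{00}}{a_{10}\, a_{01}}$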
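For a stationary two-state Markov chain these quantities follow directly from the transition probabilities. The sketch below assumes stationarity and takes $r_{11}$ to mean $\Pr\{x_t = 1, x_{t+1} = 1\}$; the function name is hypothetical.

```python
import math

def markov_summary(a10, a01):
    """Firing rate, covariance and theta of a stationary binary Markov chain.

    a10 = Pr{x_{t+1} = 1 | x_t = 0}, a01 = Pr{x_{t+1} = 0 | x_t = 1}.
    """
    a00, a11 = 1.0 - a10, 1.0 - a01
    r1 = a10 / (a10 + a01)  # stationary Pr{x_t = 1}
    r11 = a11 * r1          # Pr{x_t = 1, x_{t+1} = 1}
    c = r11 - r1 ** 2       # covariance of successive bins
    theta = math.log(a11 * a00 / (a10 * a01))
    return r1, c, theta

print(markov_summary(0.2, 0.3))  # positively correlated successive bins
print(markov_summary(0.3, 0.7))  # a11 = a10: temporally independent, theta = 0
```

The parameter $\theta$ vanishes exactly when the next state does not depend on the current one, whatever the firing rate $r_1$ is.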

Spatio-temporal correlation

correlated Poisson: $p(\{\mathbf{x}_t\}) = \prod_{t=1}^{T} p(\mathbf{x}_t)$

Correlated renewal process:

$\Pr(x_t) = k(t - t')$, $\quad t'$: last spike time

$\Pr(x_{i,t}) = k(t - t')\, p(x_i \mid \mathbf{x})$

Population and Synfire

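[Figure: pool of neurons $x_1, x_2, \ldots, x_n$ receiving common input $s$]

$x_i = 1[u_i]$, $\quad u_i = \sum_j w_{ij} s_j - h$: Gaussian

$u_i = \sqrt{1 - \alpha}\, \varepsilon_i + \sqrt{\alpha}\, \varepsilon - h$, $\quad \varepsilon_i, \varepsilon \sim N(0, 1)$

$E[u_i u_j] = \alpha$, $\quad E[u_i^2] = 1$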

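$p_i = \mathrm{Prob}\{i \text{ neurons fire at the same time}\}$, $\quad r = \dfrac{i}{n}$

$P_r = \Pr\{nr \text{ neurons fire}\} \approx e^{nH(r)} \int e^{n z(\varepsilon)}\, d\varepsilon$

$z(\varepsilon) = r \log F + (1 - r) \log(1 - F) - \dfrac{\varepsilon^2}{2n}$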

$F = F(a\varepsilon - h) = \dfrac{1}{\sqrt{2\pi}} \int_0^{a\varepsilon - h} e^{-t^2/2}\, dt$

2 1

1

2

q (r , ) = c exp[

{F ( ) h} ]

2(1 )

2 1

$p(\mathbf{x}, \theta) = \exp\left\{\sum \theta_i x_i + \sum \theta_{ij} x_i x_j + \sum \theta_{ijk} x_i x_j x_k + \cdots\right\}$

$\theta_{i_1 i_2 \cdots i_k} = O(1 / n^{k-1})$

Synfiring

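$p(\mathbf{x}) = p(x_1, \ldots, x_n)$

$r = \dfrac{1}{n} \sum x_i$, with distribution $q(r)$

[Figure: $q(r)$ plotted against $r$]

Bifurcation

$x_i$: independent --- single delta peak

pairwise correlated

higher-order correlation

[Figure: $P_r$ plotted against $r$]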
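To see how a shared input widens the distribution $q(r)$ of the population rate, the following sketch simulates a pool of threshold neurons. It assumes the common-input form $u_i = \sqrt{1-\alpha}\,\varepsilon_i + \sqrt{\alpha}\,\varepsilon - h$ with $x_i = 1$ when $u_i > 0$; the pool size, trial count and names are hypothetical choices, not values from the talk.

```python
import math
import random

def pool_rates(n, alpha, h, trials, seed=0):
    """Sample r = (number of firing neurons) / n for a pool with common input."""
    rng = random.Random(seed)
    a, b = math.sqrt(1.0 - alpha), math.sqrt(alpha)
    rates = []
    for _ in range(trials):
        common = rng.gauss(0.0, 1.0)  # epsilon, shared by all neurons
        fired = sum(1 for _ in range(n)
                    if a * rng.gauss(0.0, 1.0) + b * common - h > 0.0)
        rates.append(fired / n)
    return rates

def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

independent = pool_rates(n=100, alpha=0.0, h=0.0, trials=500)
correlated = pool_rates(n=100, alpha=0.5, h=0.0, trials=500)
print(variance(independent), variance(correlated))
```

With $\alpha = 0$ the rate concentrates near a single value (the delta peak for large $n$), while $\alpha > 0$ spreads $r$ across the whole interval, which is the regime where synchronous firing appears.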

Semiparametric Statistics

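$M = \{p(x, \theta, r)\}$

$p(x, \theta) = r(x - \theta)$

[Figure: line $y = \theta x$ in the $(x, y)$ plane]

$x_i = \xi_i + \varepsilon_i$

$y_i = \theta \xi_i + \varepsilon_i'$

$p(x, y; \theta) = \int p(x, y; \theta, \xi)\, r(\xi)\, d\xi$

mle, least squares, total least squares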

Linear Regression: Semiparametrics

$(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$

$y = \theta x$

$x_i = \xi_i + \varepsilon_i$

$y_i = \theta \xi_i + \varepsilon_i'$

$\varepsilon_i, \varepsilon_i' \sim N(0, \sigma^2)$

Statistical Model

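$p(x, y; \theta, \xi) = c \exp\left\{-\dfrac{1}{2}(x - \xi)^2 - \dfrac{1}{2}(y - \theta \xi)^2\right\}$

$p(x_i, y_i; \theta, \xi_i)$: $\quad \theta, \xi_1, \ldots, \xi_n$

$p(x, y; \theta) = \int p(x, y; \theta, \xi)\, Z(\xi)\, d\xi$: semiparametric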

Least squares?

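$L(\theta) = \sum_i (y_i - \theta x_i)^2 \to \min$: $\quad \hat{\theta} = \dfrac{\sum_i x_i y_i}{\sum_i x_i^2}$

[Figure: data points $(x_i, y_i)$ and the fitted line in the $(x, y)$ plane]

mle, TLS: $\quad \sum_i (y_i - \theta x_i)(\theta y_i + x_i) = 0$

Neyman-Scott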
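The difference between the two estimators can be seen on simulated errors-in-variables data. This is a sketch under stated assumptions: unit noise variance in both $x$ and $y$, latent $\xi_i$ drawn from $N(0, 1)$, and hypothetical function names. The TLS estimate is the root of $\sum (y_i - \theta x_i)(\theta y_i + x_i) = 0$.

```python
import math
import random

def least_squares(xs, ys):
    """Minimize sum (y_i - theta x_i)^2: theta = sum x_i y_i / sum x_i^2."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def total_least_squares(xs, ys):
    """Solve sum (y_i - theta x_i)(theta y_i + x_i) = 0 for theta."""
    sxx = sum(x * x for x in xs)
    syy = sum(y * y for y in ys)
    sxy = sum(x * y for x, y in zip(xs, ys))  # assumed nonzero
    d = syy - sxx
    # Quadratic sxy*theta^2 + (sxx - syy)*theta - sxy = 0;
    # the root with the same sign as sxy minimizes the orthogonal distance.
    return (d + math.sqrt(d * d + 4.0 * sxy * sxy)) / (2.0 * sxy)

rng = random.Random(0)
theta_true = 2.0
xis = [rng.gauss(0.0, 1.0) for _ in range(20000)]           # latent xi_i
xs = [xi + rng.gauss(0.0, 1.0) for xi in xis]               # x_i = xi_i + eps_i
ys = [theta_true * xi + rng.gauss(0.0, 1.0) for xi in xis]  # y_i = theta xi_i + eps_i'
print(least_squares(xs, ys), total_least_squares(xs, ys))
```

Under these assumptions ordinary least squares is pulled toward zero by the noise in $x$, while the TLS estimating equation stays close to the true slope without any knowledge of the distribution of $\xi$.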

Semiparametric statistical model

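$x_1, x_2, \ldots$: $\quad p(x; \theta, Z)$

Estimating function $y(x, \theta)$:

$E_{\theta, Z}[y(x, \theta)] = 0$

$E_{\theta', Z}[y(x, \theta)] \ne 0$

Estimating equation: $\quad \sum_i y(x_i, \theta) = 0 \;\Rightarrow\; \hat{\theta}$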

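$u(x, y; \theta, Z) = \dfrac{\partial}{\partial \theta} \log p = \partial_\theta s\; E[\xi \mid s] - \cdots$

$v(x, y; \theta, Z) = E[f(\xi) \mid s] = k\{s(x, y; \theta)\}$

$u^I(x, y; \theta) = u - E[u \mid s] = k(x + \theta y)\,(y - \theta x)$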

Fibre bundle

function space

$Z(\xi) \sim N(\mu, \sigma^2)$:

$u^I(x, \theta, Z) = (x + \theta y + c)(y - \theta x)$, $\quad c = \dfrac{\mu}{\sigma^2}$

$f(x, \theta; c) = (x + \theta y + c)(y - \theta x)$

$c = \dfrac{\mu}{\sigma^2}$, $\quad \mu = \dfrac{1}{n} \sum x_i$, $\quad \sigma^2 = \dfrac{1}{n} \sum x_i^2 - \mu^2$

Poisson process

Poisson process: the instantaneous firing rate $\xi$ is constant over time.

For every small time window $dt$, generate a spike with probability $\xi\, dt$.

[Figure: interspike interval $T$ for a cortical neuron (Softky & Koch, 1993) and for a Poisson process]

$p(T) = \xi e^{-\xi T}$

A Poisson process cannot explain interspike interval distributions.

Gamma distribution

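Gamma distribution: every $\kappa$-th spike of the Poisson process is left.

$q(T; \xi, \kappa) = \dfrac{(\xi \kappa)^{\kappa}}{\Gamma(\kappa)}\, T^{\kappa - 1} e^{-\xi \kappa T}$

[Figure: $q(T)$ against $T$ for $\kappa = 1$ (Poisson) and $\kappa = 3$]

Two parameters: $\xi$: firing rate; $\kappa$: irregularity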

Gamma distribution

$f(T) = \dfrac{(r \kappa)^{\kappa}}{\Gamma(\kappa)}\, T^{\kappa - 1} \exp\{-r \kappa T\}$

$\kappa = 1$: Poisson

$\kappa = \infty$: regular

Integrate-and-fire

Markov model

Irregularity is unique to individual neurons.

[Figure: spike trains over time $t$; regular: large $\kappa$; irregular: small $\kappa$]

Irregularity varies among neurons.

We assume that $\kappa$ is independent of time.

Information geometry of estimating function

• Estimating function $y(T, \kappa)$:

$\sum_{l=1}^{N} y(T_l; \kappa) = 0$, $\quad E[y(T, \kappa)] = 0$

• Maximum likelihood method:

$\dfrac{d}{d\kappa} \log p(T_1) \cdots p(T_N) = \sum_{l=1}^{N} u(T_l; \kappa) = 0$

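How to obtain an estimating function $y$:

Score functions:

$u(T; \kappa, k) = \dfrac{d \log p(T; \kappa, k)}{d\kappa}$

$v(T; \kappa, k) = \dfrac{\delta \log p(T; \kappa, k)}{\delta k(\xi)}$

$y = u - \dfrac{\langle u v \rangle}{\langle v v \rangle}\, v$

(See poster for details)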

temporal correlations

$x(1)\, x(2) \cdots x(N)$

independent with firing probability $r(t)$: spike counts: Poisson; ISI: exponential

renewal: $r(t) = k(t - t_i)$, $\quad t_i$: last spike

ISI distribution $f(T)$:

$f(T) = c\, k(T) \exp\left\{-\int_0^T k(t)\, dt\right\}$

Estimation of $\kappa$ by estimating functions

No estimating function exists if the neighboring firing rates are different.

$m\,(\ge 2)$ consecutive observations must have the same firing rate.

Example: $m = 2$, with $l$-th set $(T_1^l, T_2^l)$

Model: $\quad p(T_1, T_2; \kappa, k) = \int_0^\infty q(T_1; \xi, \kappa)\, q(T_2; \xi, \kappa)\, k(\xi)\, d\xi$

Estimating function ($E[y] = 0$):

$y = \log \dfrac{T_1 T_2}{(T_1 + T_2)^2} + 2\phi(2\kappa) - 2\phi(\kappa)$

$\bar{y} = \dfrac{1}{N} \sum_{l=1}^{N} \log \dfrac{T_1^l T_2^l}{(T_1^l + T_2^l)^2} + 2\phi(2\kappa) - 2\phi(\kappa) = 0$
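The estimating equation for $m = 2$ can be tried on synthetic data. The sketch below assumes that $\phi$ denotes the digamma function, draws a different firing rate $\xi$ for every pair of gamma-distributed intervals, and solves the equation for $\kappa$ by bisection. The digamma approximation, the rate range and all names are illustrative choices.

```python
import math
import random

def digamma(x):
    """Digamma function for x > 0: upward recurrence plus an asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    result += math.log(x) - 0.5 / x - inv2 * (1.0 / 12 - inv2 * (1.0 / 120 - inv2 / 252))
    return result

def estimate_kappa(pairs, lo=1e-3, hi=1e3):
    """Solve mean(log(T1 T2 / (T1 + T2)^2)) + 2 phi(2k) - 2 phi(k) = 0 for k."""
    target = -sum(math.log(t1 * t2 / (t1 + t2) ** 2) for t1, t2 in pairs) / len(pairs)
    for _ in range(200):
        mid = math.sqrt(lo * hi)  # bisection on a log scale
        # 2 phi(2k) - 2 phi(k) is decreasing in k
        if 2.0 * digamma(2.0 * mid) - 2.0 * digamma(mid) > target:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)

rng = random.Random(1)
kappa_true = 3.0
pairs = []
for _ in range(20000):
    xi = rng.uniform(5.0, 50.0)      # firing rate, different for every pair
    scale = 1.0 / (xi * kappa_true)  # gamma scale giving mean ISI 1 / xi
    pairs.append((rng.gammavariate(kappa_true, scale),
                  rng.gammavariate(kappa_true, scale)))

kappa_hat = estimate_kappa(pairs)
print(kappa_hat)
```

The ratio $T_1 / (T_1 + T_2)$ does not depend on $\xi$ when both intervals share the same rate, which is why the estimate of $\kappa$ is unaffected by how the firing rate varies from pair to pair.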

Case of m=1

(spontaneous discharge)

Firing rate continuously changes and is driven by Gaussian noise.

: Correlation

can be approximated if the firing rate changes slowly.

Estimating function ($m = 2$):

$\bar{y} = \dfrac{1}{N} \sum_{l=1}^{N} \log \dfrac{T_1^l T_2^l}{(T_1^l + T_2^l)^2} + 2\phi(2\kappa) - 2\phi(\kappa) = 0$