Parameter estimation in diffusion processes from observations of first hitting-times Susanne Ditlevsen



Department of Mathematical Sciences

University of Copenhagen, Denmark

Email: susanne@math.ku.dk

Joint work with

Petr Lansky, Academy of Sciences of the Czech Republic, Prague

EPSRC Symposium Workshop on Computational Neuroscience,

Warwick, December 8–12, 2008

All characteristics of the neuron are collapsed into a single point in space.

Data: spike trains

[Figure: spike train on a time axis; the interspike intervals are marked $T$.]

Underlying process

[Figure: the underlying process.]

The model

$$dX_t = \mu(X_t)\,dt + \sigma(X_t)\,dW(t), \qquad X_0 = x_0$$

$X_t$: membrane potential at time $t$ after a spike

$x_0$: initial voltage (the reset value following a spike)

An action potential (a spike) is produced when the membrane voltage $X_t$ exceeds a firing threshold

$$S(t) = S > X(0) = x_0 .$$

After firing, the process is reset to $x_0$. The interspike interval $T$ is identified with the first-passage time of the threshold,

$$T = \inf\{t > 0 : X_t \ge S\}.$$
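To make the link between the diffusion and the interspike intervals concrete, here is a minimal sketch that simulates one first-passage time with an Euler-Maruyama scheme. The function name `simulate_fpt`, the step size `dt` and the cut-off `t_max` are illustrative assumptions and do not come from the talk. The discretisation can miss crossings between grid points, so simulated times tend to be slightly too long.

```python
import math
import random


def simulate_fpt(mu, sigma, x0, S, dt=1e-3, t_max=1e3, rng=None):
    """Simulate one first-passage time of dX = mu(X) dt + sigma(X) dW through S.

    mu and sigma are callables of the current state. Returns None if the
    threshold is not reached before t_max (a hypothetical cut-off).
    """
    if rng is None:
        rng = random.Random()
    x, t = x0, 0.0
    sqrt_dt = math.sqrt(dt)
    while t < t_max:
        # Euler-Maruyama step: drift * dt + diffusion * sqrt(dt) * N(0, 1)
        x += mu(x) * dt + sigma(x) * sqrt_dt * rng.gauss(0.0, 1.0)
        t += dt
        if x >= S:
            return t
    return None


# Illustrative use with a linear drift (values chosen for the example only).
rng = random.Random(1)
sample = [
    simulate_fpt(lambda x: -x / 10.0 + 2.0, lambda x: 1.0, 0.0, 10.0,
                 dt=1e-2, rng=rng)
    for _ in range(5)
]
```

After each crossing the process is restarted at `x0`, so repeated calls give i.i.d. draws of $T$ under the renewal assumption used in the talk.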

Data

We observe the spikes, that is, the first-passage times of $X_t$ through $S$.

Data: $\{t_1, t_2, \ldots, t_n\}$, i.i.d. realizations of the random variable $T$.

Note: There is only information on the time scale, nothing on the scale of $X_t$. Thus something in the model is not identifiable from these data, and something has to be assumed known.

Estimation

$$dX_t = \mu(X_t, \theta)\,dt + \sigma(X_t, \theta)\,dW(t), \qquad \theta \in \Theta \subseteq \mathbb{R}^p$$

Transition density: $y \mapsto f_\theta(t - s, x, y)$

Corresponding distribution function: $F_\theta(t - s, x, y) = \int_{-\infty}^{y} f_\theta(t - s, x, u)\,du$

$$T = \inf\{t > 0 : X_t \ge S\}.$$

Data: $\{t_1, t_2, \ldots, t_n\}$, i.i.d. realizations of the random variable $T$.

How do we estimate $\theta$?

Maximum likelihood estimation

... is possible if we know the distribution of $T$.

Let $p_\theta(t)$ be the probability density function of $T$.

Recall:

Likelihood function: $L_n(\theta) = \prod_{i=1}^{n} p_\theta(t_i)$

Log-likelihood function: $\log L_n(\theta) = \sum_{i=1}^{n} \log p_\theta(t_i)$

Score function(s): $\partial_\theta \log L_n(\theta) = \sum_{i=1}^{n} \partial_\theta \log p_\theta(t_i)$

The estimator $\hat\theta$ is such that $\partial_\theta \log L_n(\hat\theta) = 0$.

Example: Brownian motion with drift

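$$dX_t = \mu\,dt + \sigma\,dW(t), \qquad \mu > 0,\ \sigma > 0, \qquad X_0 = 0 < S$$

Then

$$p_\theta(t) = \frac{S}{\sqrt{2\pi\sigma^2 t^3}} \exp\left(-\frac{(S - \mu t)^2}{2\sigma^2 t}\right)$$

Thus

$$L_n(\theta) = \prod_{i=1}^{n} p_\theta(t_i) = \prod_{i=1}^{n} \frac{S}{\sqrt{2\pi\sigma^2 t_i^3}} \exp\left(-\frac{(S - \mu t_i)^2}{2\sigma^2 t_i}\right)$$

$$\log L_n(\theta) = \sum_{i=1}^{n} \log p_\theta(t_i) = \sum_{i=1}^{n} \log\left(\frac{S}{\sqrt{2\pi\sigma^2 t_i^3}}\right) - \sum_{i=1}^{n} \frac{(S - \mu t_i)^2}{2\sigma^2 t_i}$$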

[Figure: probability density of $T$ against $T$ (from 0 to 2) for $\mu = 1$ and $\sigma = 1$, $0.5$ and $0.1$.]

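Score functions:

$$\partial_\mu \log L_n(\theta) = \sum_{i=1}^{n} \frac{S - \mu t_i}{\sigma^2}$$

$$\partial_{\sigma^2} \log L_n(\theta) = -\frac{n}{2\sigma^2} + \sum_{i=1}^{n} \frac{(S - \mu t_i)^2}{2(\sigma^2)^2 t_i}$$

Maximum likelihood estimators:

$$\hat\mu = \frac{S}{\bar t}, \qquad \hat\sigma^2 = S^2\,\frac{1}{n}\sum_{i=1}^{n}\left(\frac{1}{t_i} - \frac{1}{\bar t}\right), \qquad \text{where } \bar t = \frac{1}{n}\sum_{i=1}^{n} t_i .$$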
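The closed-form estimators for Brownian motion with drift translate directly into code. The following is a minimal sketch; the function names `fpt_density_bm` and `mle_bm` are illustrative, and the threshold `S` is assumed known, as in the talk.

```python
import math


def fpt_density_bm(t, mu, sigma, S):
    """Density of T for Brownian motion with drift mu > 0 started at 0, threshold S > 0."""
    return S / math.sqrt(2.0 * math.pi * sigma**2 * t**3) * math.exp(
        -(S - mu * t) ** 2 / (2.0 * sigma**2 * t)
    )


def mle_bm(times, S):
    """Closed-form maximum likelihood estimates (mu_hat, sigma2_hat)."""
    n = len(times)
    t_bar = sum(times) / n
    mu_hat = S / t_bar
    # sigma2_hat = S^2 * (mean of 1/t_i minus 1/t_bar)
    sigma2_hat = S**2 * (sum(1.0 / t for t in times) / n - 1.0 / t_bar)
    return mu_hat, sigma2_hat


def log_likelihood_bm(times, mu, sigma, S):
    """Log-likelihood of an i.i.d. sample of first-passage times."""
    return sum(math.log(fpt_density_bm(t, mu, sigma, S)) for t in times)
```

By Jensen's inequality the mean of $1/t_i$ is at least $1/\bar t$, so `sigma2_hat` is never negative.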

Example: The Ornstein-Uhlenbeck model

Consider the Ornstein-Uhlenbeck process as a model for the

membrane potential of a neuron:

$$dX_t = \left(-\frac{X_t}{\tau} + \mu\right)dt + \sigma\,dW_t, \qquad X_0 = x_0 = 0,$$

where

$X_t$: membrane potential at time $t$ after a spike

$\tau$: membrane time constant, reflects spontaneous voltage decay ($> 0$)

$\mu$: characterizes constant neuronal input

$\sigma$: characterizes erratic neuronal input

$x_0$: initial voltage (the reset value following a spike)

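The conditional expectation is

$$E[X_t \mid X_0 = 0] = \mu\tau\left(1 - e^{-t/\tau}\right)$$

The conditional variance is

$$\mathrm{Var}[X_t \mid X_0 = x_0] = \frac{\tau\sigma^2}{2}\left(1 - e^{-2t/\tau}\right)$$

Thus $(X_t \mid X_0 = 0) \sim N\left(\mu\tau(1 - e^{-t/\tau}),\ \frac{\tau\sigma^2}{2}(1 - e^{-2t/\tau})\right)$.

Asymptotically (in absence of a threshold) $X_t \sim N(\mu\tau, \tau\sigma^2/2)$.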
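As a small numerical companion to these formulas, the sketch below evaluates the conditional mean and variance and shows them approaching the asymptotic values $\mu\tau$ and $\tau\sigma^2/2$. The function name `ou_mean_var` and the parameter values are illustrative assumptions.

```python
import math


def ou_mean_var(t, mu, sigma, tau):
    """Mean and variance of X_t given X_0 = 0 for dX = (-X/tau + mu) dt + sigma dW."""
    mean = mu * tau * (1.0 - math.exp(-t / tau))
    var = 0.5 * tau * sigma**2 * (1.0 - math.exp(-2.0 * t / tau))
    return mean, var


# Illustrative values only: tau = 10, mu = 2, sigma = 1.
for t in (1.0, 10.0, 100.0):
    mean, var = ou_mean_var(t, mu=2.0, sigma=1.0, tau=10.0)
    print(f"t = {t:6.1f}  mean = {mean:.4f}  variance = {var:.4f}")
```

Comparing the asymptotic mean with the threshold $S$ is what separates the two firing regimes discussed next.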

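Two firing regimes:

Suprathreshold: $\mu\tau \gg S$ (deterministic firing; the neuron is active also in the absence of noise)

Subthreshold: $\mu\tau \ll S$ (firing is caused only by random fluctuations; stochastic or Poissonian firing)

[Figure: sample paths of $X_t$ against time with the levels $S$ and $\mu\tau$ marked. Suprathreshold panel ($\mu\tau$ above $S$): many interspike intervals $T$. Subthreshold panel ($S$ above $\mu\tau$): few interspike intervals $T$.]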

Model parameters: $\mu, \sigma, \tau, x_0, S$

Assumed known: intrinsic or characteristic parameters of the neuron: $\tau, x_0, S$

$\tau \approx 5$ to $50$ msec, $S - x_0 \approx 10$ mV (we set $x_0 = 0$)

To be estimated: input parameters: $\mu$ (in [mV/msec]) and $\sigma$ (in [mV/$\sqrt{\text{msec}}$])

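$$dX_t = \left(-\frac{X_t}{\tau} + \mu\right)dt + \sigma\,dW(t), \qquad \tau > 0,\ \mu \in \mathbb{R},\ \sigma > 0, \qquad X_0 = 0 < S$$

The distribution of $T = \inf\{t > 0 : X_t \ge S\}$ is only known if $S = \mu\tau$ (the asymptotic mean of $X_t$ in absence of a threshold):

$$p_\theta(t) = \frac{2S\exp(2t/\tau)}{\sqrt{\pi\tau^3\sigma^2}\,\left(\exp(2t/\tau) - 1\right)^{3/2}} \exp\left(-\frac{S^2}{\sigma^2\tau\left(\exp(2t/\tau) - 1\right)}\right)$$

Maximum likelihood estimator ($\mu = S/\tau$ by assumption):

$$\hat\sigma^2 = \frac{1}{n}\sum_{i=1}^{n} \frac{2S^2}{\tau\left(\exp(2t_i/\tau) - 1\right)}$$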
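The threshold-regime estimator is a plain average and can be sketched in a few lines. The function name `sigma2_mle_threshold` is illustrative, and the code assumes, as on the slide, that $\mu = S/\tau$ and that $S$ and $\tau$ are known.

```python
import math


def sigma2_mle_threshold(times, S, tau):
    """MLE of sigma^2 for the OU model under the assumption mu = S / tau."""
    n = len(times)
    # math.expm1(x) = exp(x) - 1, accurate for short intervals
    return sum(2.0 * S**2 / (tau * math.expm1(2.0 * t / tau)) for t in times) / n
```

Short interspike intervals make $\exp(2t_i/\tau) - 1$ small and therefore dominate the estimate, which is why `math.expm1` is used rather than `math.exp(x) - 1`.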

[Figure 1, panels A to D; horizontal axes: interspike intervals (ms).]

Figure 1: Empirical densities from simulated data; each density corresponds to 10,000 ISIs measured in ms. A: Subthreshold regime, $\mu = 2$ V/s and $\sigma = 1$, $5$ or $10$ mV/$\sqrt{\text{ms}}$. B: Subthreshold regime, $\mu = 2$ V/s and $\sigma = 1$ mV/$\sqrt{\text{ms}}$, enlarged from panel A. C: Threshold regime, $\mu = 3$ V/s and $\sigma = 1$, $5$ or $10$ mV/$\sqrt{\text{ms}}$. D: Suprathreshold regime, $\mu = 5$ V/s and $\sigma = 1$, $5$ or $10$ mV/$\sqrt{\text{ms}}$.

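We reformulate to the equivalent dimensionless form

$$d\left(\frac{X_t}{S}\right) = \left(-\frac{X_t}{S} + \frac{\mu\tau}{S}\right) d\left(\frac{t}{\tau}\right) + \frac{\sigma\sqrt{\tau}}{S}\, d\left(\frac{W_t}{\sqrt{\tau}}\right)$$

or

$$dY_s = (-Y_s + \alpha)\,ds + \beta\,dW_s, \qquad Y_0 = 0$$

where

$$s = \frac{t}{\tau}, \quad Y_s = \frac{X_t}{S}, \quad W_s = \frac{W_t}{\sqrt{\tau}}, \quad \alpha = \frac{\mu\tau}{S}, \quad \beta = \frac{\sigma\sqrt{\tau}}{S}$$

and $T/\tau = \inf\{s > 0 : Y_s \ge 1\}$.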
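The change of variables is a simple rescaling, sketched below together with its inverse. The function names `to_dimensionless` and `to_physical` are illustrative; $\tau$ and $S$ are treated as known.

```python
import math


def to_dimensionless(mu, sigma, tau, S):
    """Return (alpha, beta) = (mu * tau / S, sigma * sqrt(tau) / S)."""
    return mu * tau / S, sigma * math.sqrt(tau) / S


def to_physical(alpha, beta, tau, S):
    """Inverse map: recover (mu, sigma) from (alpha, beta) for known tau and S."""
    return alpha * S / tau, beta * S / math.sqrt(tau)
```

In these units the threshold sits at 1, so $\alpha > 1$, $\alpha = 1$ and $\alpha < 1$ correspond to asymptotic mean above, at and below the threshold. Estimates of $(\alpha, \beta)$ can be mapped back to $(\mu, \sigma)$ with `to_physical`.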

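$$dY_s = (-Y_s + \alpha)\,ds + \beta\,dW_s, \qquad Y_0 = 0$$

$$E[Y_s \mid Y_0 = 0] = \alpha(1 - e^{-s})$$

$$\mathrm{Var}[Y_s \mid Y_0 = 0] = \frac{1}{2}\beta^2(1 - e^{-2s})$$

Let $f_{T/\tau}(s)$ be the density of $T/\tau$.

An exact expression is only known for $\alpha = 1$:

$$f_{T/\tau}(s)\big|_{\alpha=1} = \frac{2e^{2s}}{\sqrt{\pi}\,\beta\,(e^{2s} - 1)^{3/2}} \exp\left(-\frac{1}{\beta^2(e^{2s} - 1)}\right)$$

The maximum likelihood estimator for $\alpha = 1$:

$$\check\beta^2 = \frac{2}{N}\sum_{i=1}^{N} \frac{1}{e^{2s_i} - 1}$$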

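The Laplace transform of $T$:

$$E\left[e^{kT/\tau}\right] = \frac{\exp\left\{\frac{\alpha^2}{2\beta^2}\right\} D_k\left(\frac{\sqrt{2}\,\alpha}{\beta}\right)}{\exp\left\{\frac{(\alpha-1)^2}{2\beta^2}\right\} D_k\left(\frac{\sqrt{2}\,(\alpha-1)}{\beta}\right)} = \frac{H_k\left(\frac{\alpha}{\beta}\right)}{H_k\left(\frac{\alpha-1}{\beta}\right)}$$

for $k < 0$, where $D_k(\cdot)$ and $H_k(\cdot)$ are parabolic cylinder and Hermite functions, respectively.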

Ricciardi & Sato, 1988 derived series expressions for the moments of $T$. In particular

$$E[T/\tau] = \frac{1}{2}\sum_{n=1}^{\infty} \frac{2^n}{n!}\,\frac{(1-\alpha)^n - (-\alpha)^n}{\beta^n}\,\Gamma\left(\frac{n}{2}\right)$$

The expression is difficult to work with, especially if $|\alpha| \gg 1$ (strongly sub- or suprathreshold), because of the cancelling effects in the alternating series. The expression for the variance also includes the digamma function.

Inoue, Sato & Ricciardi, 1995 proposed computer-intensive methods of estimation using the empirical moments of $T$.
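The cancellation problem can be seen numerically. The sketch below evaluates the series for $E[T/\tau]$ by partial sums and cross-checks it against a quadrature of $\sqrt{\pi}\int_{-\alpha/\beta}^{(1-\alpha)/\beta} e^{z^2}(1 + \mathrm{erf}\,z)\,dz$. That integral representation is not on the slides: it is obtained here by differentiating the series term by term and is included as an assumption-labelled check. Function names and the number of terms are illustrative.

```python
import math


def mean_fpt_series(alpha, beta, n_terms=100):
    """Partial sum of the series for E[T/tau]."""
    a = (1.0 - alpha) / beta
    b = -alpha / beta
    total = 0.0
    for n in range(1, n_terms + 1):
        # 2^n * Gamma(n/2) / n!, computed on the log scale
        log_coef = n * math.log(2.0) + math.lgamma(0.5 * n) - math.lgamma(n + 1.0)
        total += math.exp(log_coef) * (a**n - b**n)
    return 0.5 * total


def mean_fpt_integral(alpha, beta, n_steps=2000):
    """Composite Simpson rule for sqrt(pi) * int exp(z^2) * (1 + erf z) dz."""
    lo, hi = -alpha / beta, (1.0 - alpha) / beta

    def g(z):
        # 1 + erf(z) = erfc(-z), which stays accurate for negative z
        return math.sqrt(math.pi) * math.exp(z * z) * math.erfc(-z)

    h = (hi - lo) / n_steps  # n_steps must be even for Simpson's rule
    s = g(lo) + g(hi)
    for i in range(1, n_steps):
        s += (4.0 if i % 2 else 2.0) * g(lo + i * h)
    return s * h / 3.0


# Moderate parameters: the two evaluations agree closely.
print(mean_fpt_series(1.5, 1.0), mean_fpt_integral(1.5, 1.0))
```

For moderate $\alpha/\beta$ the two agree. As $|\alpha|/\beta$ grows, individual series terms become enormous with alternating signs, so the partial sums lose precision in floating point, which is the difficulty noted above.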

Another approach: Martingales (Laplace transform)

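$$dX_t = \left(-\frac{X_t}{\tau} + \mu\right)dt + \sigma\,dW_t, \qquad X_0 = x_0 = 0,$$

with solution

$$X_t = \mu\tau\left(1 - e^{-t/\tau}\right) + \sigma\int_0^t e^{-(t-s)/\tau}\,dW_s$$

Define the martingale:

$$Y_t = (\mu\tau - X_t)\,e^{t/\tau} = \mu\tau - \sigma\int_0^t e^{s/\tau}\,dW_s$$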

If $M(t)$ is a martingale, then $E[M(T \wedge t)] = E[M(0)]$.

We need more than that:

Doob's Optional-Stopping Theorem

Let $T$ be a stopping time and let $M(t)$ be a uniformly integrable martingale. Then $E[M(T)] = E[M(0)]$.

$Y_t$ is obviously not uniformly integrable (UI) (it is equivalent to a Brownian motion). CLAIM:

$$Y^T(t) := Y(T \wedge t),$$

the process stopped at $T$, is UI in a certain part of the parameter region. We show that

$$E[|Y_t^T|^p] < K$$

for all $t$, some $p > 1$ and some positive $K < \infty$.

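First observe that

$$Y_{T \wedge t} = (\mu\tau - X_{T \wedge t})\,e^{(T \wedge t)/\tau} \ge (\mu\tau - S)\,e^{(T \wedge t)/\tau} > 0$$

for all $t$ if $\mu\tau > S$ (suprathreshold regime).

Set $p = 2$. We have

$$E[|Y_t^T|^2] = E[(Y_t^T)^2] = E\left[\left(\mu\tau - \sigma\int_0^{T \wedge t} e^{s/\tau}\,dW_s\right)^2\right] = (\mu\tau)^2 - 0 + \sigma^2 E\left[\left(\int_0^{T \wedge t} e^{s/\tau}\,dW_s\right)^2\right]$$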

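$$M(t) = \left(\int_0^t e^{s/\tau}\,dW_s\right)^2 - \int_0^t e^{2s/\tau}\,ds$$

is a martingale due to Itô's isometry:

$$E\left(\int_0^t f(s, \omega)\,dW_s\right)^2 = \int_0^t E[f(s, \omega)^2]\,ds$$

such that $E[M(T \wedge t)] = E[M(0)] = 0$. This yields

$$E\left[\left(\int_0^{T \wedge t} e^{s/\tau}\,dW_s\right)^2\right] = E\left[\int_0^{T \wedge t} e^{2s/\tau}\,ds\right] = E\left[\frac{\tau}{2}\left(e^{2(T \wedge t)/\tau} - 1\right)\right] \le \frac{\tau}{2}\,E\left[e^{2T/\tau}\right]$$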

Thus, we have:

$$E[|Y_t^T|^2] \le (\mu\tau)^2 + \sigma^2\,\frac{\tau}{2}\,E\left[e^{2T/\tau}\right]$$

We need to show that this is finite.

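Define the martingale (to be trusted):

$$Y_2(t) = (\mu\tau - X(t))^2\,e^{2t/\tau} + \frac{\tau\sigma^2}{2}\left(1 - e^{2t/\tau}\right)$$

such that

$$E[Y_2(T \wedge t)] = E[Y_2(0)] = (\mu\tau)^2$$

which yields

$$(\mu\tau)^2 = E\left[(\mu\tau - X(T \wedge t))^2\,e^{2(T \wedge t)/\tau} + \frac{\tau\sigma^2}{2}\left(1 - e^{2(T \wedge t)/\tau}\right)\right] \ge \left((\mu\tau - S)^2 - \frac{\tau\sigma^2}{2}\right) E\left[e^{2(T \wedge t)/\tau}\right] + \frac{\tau\sigma^2}{2}$$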

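If $(\mu\tau - S)^2 > \frac{\tau\sigma^2}{2}$ then

$$\frac{(\mu\tau)^2 - \frac{\tau\sigma^2}{2}}{(\mu\tau - S)^2 - \frac{\tau\sigma^2}{2}} \ge E\left[e^{2(T \wedge t)/\tau}\right].$$

Taking limits on both sides we obtain

$$\frac{(\mu\tau)^2 - \frac{\tau\sigma^2}{2}}{(\mu\tau - S)^2 - \frac{\tau\sigma^2}{2}} \ge \lim_{t \to \infty} E\left[e^{2(T \wedge t)/\tau}\right] = E\left[e^{2T/\tau}\right]$$

since $T$ is almost surely finite.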

BINGO! Doob is good.

If $S < \mu\tau$ (suprathreshold regime) and $(\mu\tau - S)^2 > \frac{\tau\sigma^2}{2}$, then

$$E[Y^T(0)] = E[Y^T(T)]$$

such that

$$\mu\tau = E[Y^T(0)] = E[Y^T(T)] = E\left[(\mu\tau - X(T))\,e^{T/\tau}\right] = (\mu\tau - S)\,E\left[e^{T/\tau}\right].$$

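Beautiful result:

$$E\left[e^{T/\tau}\right] = \frac{\mu\tau}{\mu\tau - S}$$

With a little more work:

$$E\left[e^{2T/\tau}\right] = \frac{(\mu\tau)^2 - \frac{\tau\sigma^2}{2}}{(\mu\tau - S)^2 - \frac{\tau\sigma^2}{2}}$$

Explicit expressions for the parameters:

$$\mu = \frac{S\,E\left[e^{T_S/\tau}\right]}{\tau\left(E\left[e^{T_S/\tau}\right] - 1\right)}$$

$$\sigma^2 = \frac{2S^2\,\mathrm{Var}\left[e^{T_S/\tau}\right]}{\tau\left(E\left[e^{2T_S/\tau}\right] - 1\right)\left(E\left[e^{T_S/\tau}\right] - 1\right)^2}$$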

Straightforward estimators (with $Z = e^{T/\tau}$):

$$\hat E[Z] = \frac{1}{n}\sum_{i=1}^{n} e^{t_i/\tau} = Z_1$$

$$\hat E[Z^2] = \frac{1}{n}\sum_{i=1}^{n} e^{2t_i/\tau} = Z_2$$

where $t_i$, $i = 1, \ldots, n$, are the i.i.d. observations of the FPTs.

Moment estimators of the parameters are then

$$\hat\mu = \frac{S Z_1}{\tau(Z_1 - 1)}, \qquad \hat\sigma^2 = \frac{2S^2(Z_2 - Z_1^2)}{\tau(Z_2 - 1)(Z_1 - 1)^2}.$$
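The moment estimators need only two sample averages. A minimal sketch follows; the function names are illustrative, and the formulas are the ones stated above, which rely on the suprathreshold conditions $\mu\tau > S$ and $(\mu\tau - S)^2 > \tau\sigma^2/2$.

```python
import math


def exp_moments(times, tau):
    """Empirical Z1 = mean(exp(t/tau)) and Z2 = mean(exp(2t/tau))."""
    n = len(times)
    z1 = sum(math.exp(t / tau) for t in times) / n
    z2 = sum(math.exp(2.0 * t / tau) for t in times) / n
    return z1, z2


def ou_moment_estimators(times, S, tau):
    """Moment estimators (mu_hat, sigma2_hat) for the suprathreshold OU model."""
    z1, z2 = exp_moments(times, tau)
    mu_hat = S * z1 / (tau * (z1 - 1.0))
    sigma2_hat = 2.0 * S**2 * (z2 - z1**2) / (tau * (z2 - 1.0) * (z1 - 1.0) ** 2)
    return mu_hat, sigma2_hat
```

A sanity check in the noise-free limit: if every interval equals the deterministic crossing time, then $Z_2 = Z_1^2$, so `sigma2_hat` is zero and `mu_hat` recovers the drift exactly.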

Another approach: The Fortet integral equation

Set $S = 1$. The probability

$$P[X_t > 1 \mid X_0 = x_0] = \int_{y > 1} f_\theta(t, x_0, y)\,dy = 1 - F_\theta(t, x_0, 1) = \mathrm{LHS}(t)$$

can alternatively be calculated by the transition integral

$$P[X_t > 1 \mid X_0 = x_0] = \int_0^t p_\theta(u)\left(1 - F_\theta(t - u, 1, 1)\right)du = \mathrm{RHS}(t)$$

Parameter estimation

Sample $t_1, \ldots, t_n$ of independent observations of $T$. Fix $\theta$.

RHS can be estimated at $t$ from the sample by the average

$$\mathrm{RHS}(t; \theta) = \int_0^t p_\theta(u)\left(1 - F_\theta(t - u, 1, 1)\right)du \approx \mathrm{RHS}_{emp}(t; \theta) = \frac{1}{n}\sum_{i=1}^{n}\left(1 - F_\theta(t - t_i, 1, 1)\right)1_{\{t_i \le t\}}$$

since for fixed $t$ it is the expected value of

$$1_{T \in [0, t]}\left(1 - F_\theta(t - T, 1, 1)\right)$$

with respect to the distribution of $T$.

Parameter estimation

Error measure:

$$L(\theta) = \sup_{t > 0}\left|\frac{\mathrm{RHS}_{emp}(t) - \mathrm{LHS}(t)}{\omega}\right|$$

Estimator:

$$\tilde\theta = \arg\min_\theta L(\theta)$$

Fortet integral equation

Let $f(s)$ be the density function for the time $t/\tau$ from zero to the first crossing of the level 1 by $Y$. The probability

$$P[Y(s) > 1] = \Phi\left(\frac{\alpha(1 - e^{-s}) - 1}{\sqrt{1 - e^{-2s}}\;\beta/\sqrt{2}}\right)$$

can alternatively be calculated by the transition integral

$$P[Y(s) > 1] = \int_0^s f(u)\,\Phi\left(\frac{\alpha - 1}{\beta/\sqrt{2}}\,\frac{1 - e^{-(s-u)}}{\sqrt{1 - e^{-2(s-u)}}}\right)du$$

Parameter estimation

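$$\mathrm{LHS}(s) = \Phi\left(\frac{\alpha(1 - e^{-s}) - 1}{\sqrt{1 - e^{-2s}}\;\beta/\sqrt{2}}\right) = \int_0^s f(u)\,\Phi\left(\frac{\alpha - 1}{\beta/\sqrt{2}}\sqrt{\frac{1 - e^{-(s-u)}}{1 + e^{-(s-u)}}}\right)du = \mathrm{RHS}(s)$$

Sample: $s_1 \le s_2 \le \ldots \le s_n$, i.i.d. observations of $T/\tau$.

$$\mathrm{RHS}(s) \approx \frac{1}{n}\sum_{i=1}^{n} \Phi\left(\frac{\alpha - 1}{\beta/\sqrt{2}}\sqrt{\frac{1 - e^{-(s - s_i)}}{1 + e^{-(s - s_i)}}}\right)1_{\{s_i \le s\}}$$

since it is the expected value of

$$1_{U \in [0, s]}\,\Phi\left(\frac{\alpha - 1}{\beta/\sqrt{2}}\sqrt{\frac{1 - e^{-(s - U)}}{1 + e^{-(s - U)}}}\right)$$

with respect to the distribution of $U = T/\tau$ for given $\alpha$ and $\beta$.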
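The two sides of the integral equation and a sup-distance between them can be sketched as follows for the dimensionless OU model. All function names are illustrative. The weight $\omega$ from the error measure is not specified on the slides, so this sketch uses the unweighted distance, and the supremum is approximated over a finite, user-supplied grid of $s > 0$.

```python
import math


def norm_cdf(x):
    """Standard normal distribution function Phi."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def lhs(s, alpha, beta):
    """P[Y(s) > 1 | Y(0) = 0] from the Gaussian transition distribution (s > 0)."""
    num = alpha * (1.0 - math.exp(-s)) - 1.0
    den = math.sqrt(1.0 - math.exp(-2.0 * s)) * beta / math.sqrt(2.0)
    return norm_cdf(num / den)


def rhs_emp(s, sample, alpha, beta):
    """Empirical right-hand side: average over the observed s_i <= s."""
    total = 0.0
    for si in sample:
        if si <= s:
            e = math.exp(-(s - si))
            arg = (alpha - 1.0) / (beta / math.sqrt(2.0)) * math.sqrt((1.0 - e) / (1.0 + e))
            total += norm_cdf(arg)
    return total / len(sample)


def fortet_loss(sample, alpha, beta, grid):
    """Unweighted sup-distance between the two sides over a finite grid."""
    return max(abs(rhs_emp(s, sample, alpha, beta) - lhs(s, alpha, beta)) for s in grid)
```

An estimate of $(\alpha, \beta)$ is then any minimiser of `fortet_loss` over the parameter space, found for example by a grid search or a derivative-free optimiser. The choice of optimiser is left open here.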

interpretable quantities of µ and σ through (11).

Simulated data. Trajectories of the OU process were simulated according to the Euler scheme

C

0.8

1.3

0.9

α̂

1.0

1.1

1.9

α̂

2.0

2.1

F

β̂

1.5

0.10

0.15

β̂

5

0

0

0.05

3

H

50

1.0

2

α̂

G

0

0

0.5

1

α̂

5

50

E

D

0

0

0

0

0.3

5

B

50

A

50

5

for four different combinations of parameter values: (α, β) = (0.8,1), (1,0.1), (2,0.1), and

0.05

0.10

β̂

0.15

0.5

1.0

1.5

β̂

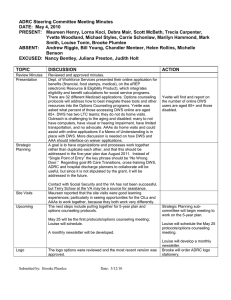

FIG. 1: Densities of estimates for simulated data based on 1000 estimates. Upper panels: Estimates

of α. Lower panels: Estimates of β. Solid black line: sample sizes of 500. Dashed black line: sample

sizes of 100. Solid grey line: sample sizes of 50. Dashed grey line: sample sizes of 10. Vertical line:

37

Figure 1: Auditory neurons. A: Spontaneous record; $\hat\alpha = 0.85$; $\hat\beta = 0.09$. B: Stimulated record; $\hat\alpha = 4.8$; $\hat\beta = 0.63$. Note different time axes. Horizontal axes: ISIs (s); the vertical axes can be interpreted as a cumulative probability.

Feller process

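$$dY_s = (-Y_s + \alpha)\,ds + \frac{\beta}{\sqrt{\alpha}}\sqrt{Y_s}\,dW_s$$

$$E[Y_s \mid Y_0 = y_0] = \alpha + (y_0 - \alpha)e^{-s}$$

$$\mathrm{Var}[Y_s \mid Y_0 = y_0] = \frac{\beta^2}{2}\left(1 - e^{-s}\right)\left[1 + \left(\frac{2y_0}{\alpha} - 1\right)e^{-s}\right]$$

[Figure: sample path against time with the levels $S_2$, $S_1$, $\mu\tau$ and $V_I$ marked.]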

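Ditlevsen & Lansky, 2006 give the moments

$$E\left[e^{T/\tau}\right] = \frac{\alpha - y_0}{\alpha - 1} \qquad \text{if } \alpha > 1$$

$$E\left[e^{2T/\tau}\right] = \frac{2\alpha(\alpha - y_0)^2 + \beta^2(\alpha - 2y_0)}{2\alpha(\alpha - 1)^2 + \beta^2(\alpha - 2)} \qquad \text{if } \sqrt{1 + 2(\alpha/\beta)^2} < 1 + \frac{2\alpha(\alpha - 1)}{\beta^2}$$

Moment estimators:

$$\hat\alpha = \frac{Z_1 - y_0}{Z_1 - 1}$$

and

$$\hat\beta^2 = \hat\alpha\,\frac{2(1 - y_0)^2(Z_2 - Z_1^2)}{(Z_1 - 1)\left[2(Z_1 - 1)(Z_2 - y_0) - (Z_1 - y_0)(Z_2 - 1)\right]}$$

[Figure: regions of validity of the estimators in the plane with axes $k = 2\mu/\sigma^2$ and $\mu\tau/S$, separated by a curve $g(k)$. One region is labelled "$\hat\alpha$ and $\hat\beta^2$ are valid", the other "only $\hat\alpha$ is valid". Marked values: $1$ and $1/(2 - \sqrt{2})$.]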
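The Feller moment estimators can be checked against the moment formulas by a round trip: compute the theoretical moments for given $(\alpha, \beta)$, then invert them. In this sketch the function names are illustrative, and the expression for $\hat\beta^2$ is the one obtained by solving the two moment equations for $\alpha$ and $\beta^2$.

```python
def feller_exp_moments(alpha, beta, y0=0.0):
    """Theoretical E[exp(T/tau)] and E[exp(2T/tau)] from the moment formulas."""
    m1 = (alpha - y0) / (alpha - 1.0)
    m2 = (2.0 * alpha * (alpha - y0) ** 2 + beta**2 * (alpha - 2.0 * y0)) / (
        2.0 * alpha * (alpha - 1.0) ** 2 + beta**2 * (alpha - 2.0)
    )
    return m1, m2


def feller_moment_estimators(z1, z2, y0=0.0):
    """Solve the two moment equations for (alpha_hat, beta2_hat).

    z1 and z2 are the sample means of exp(t_i/tau) and exp(2 t_i/tau).
    """
    alpha_hat = (z1 - y0) / (z1 - 1.0)
    denom = (z1 - 1.0) * (2.0 * (z1 - 1.0) * (z2 - y0) - (z1 - y0) * (z2 - 1.0))
    beta2_hat = alpha_hat * 2.0 * (1.0 - y0) ** 2 * (z2 - z1**2) / denom
    return alpha_hat, beta2_hat


# Round trip with illustrative values alpha = 3, beta = 1, y0 = 0.
z1, z2 = feller_exp_moments(3.0, 1.0)
print(feller_moment_estimators(z1, z2))
```

With real data, `z1` and `z2` are replaced by the empirical averages, and the estimators are meaningful only where the validity conditions on the moments hold.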

Feller process

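$$dY_s = (-Y_s + \alpha)\,ds + \frac{\beta}{\sqrt{\alpha}}\sqrt{Y_s}\,dW_s$$

$$E[Y_s \mid Y_0 = y_0] = \alpha + (y_0 - \alpha)e^{-s}$$

$$\mathrm{Var}[Y_s \mid Y_0 = y_0] = \frac{\beta^2}{2}\left(1 - e^{-s}\right)\left[1 + \left(\frac{2y_0}{\alpha} - 1\right)e^{-s}\right]$$

Chapman-Kolmogorov integral equation:

$$1 - F_{\chi^2}[a(s), \nu, \delta(s, y_0)] = \int_0^s f(u)\left\{1 - F_{\chi^2}[a(s - u), \nu, \delta(s - u, 1)]\right\}du$$

where

$$a(s) = \frac{4\alpha}{\beta^2(1 - e^{-s})}, \qquad \delta(s, y_0) = \frac{4\alpha y_0}{\beta^2}\,\frac{e^{-s}}{1 - e^{-s}}, \qquad \nu = 4(\alpha/\beta)^2 .$$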

[Figure, panels A to F. Horizontal axes: ISIs (s) in panels A, B, D, E; time (s) in panels C, F.]

FIG. 3: Olfactory neurons. A, B, C: Neuron that fits the models. D, E, F: Neuron that did not fit the models. A, D: OU model, normalized comparison of the right hand side of Eq. (12) (sol…

S. Ditlevsen, O. Ditlevsen / Probabilistic Engineering Mechanics (article in press)

Estimates:

α̃              β̃
0.83 ± 0.14    0.97 ± 0.10
1.01 ± 0.15    0.98 ± 0.11
1.97 ± 0.15    0.98 ± 0.09
2.99 ± 0.17    0.98 ± 0.09
3.94 ± 0.21    1.00 ± 0.09
10.76 ± 0.37   0.99 ± 0.09

Fig. 2. Normalized empirical distribution functions of the sample of 100 joint estimates of α and β compared to the standardized normal distribution function.

Fig. 3. Scatterplots of the 996 pairs of estimates of (α, β), each estimated from a sample of 10 simulated first-passage times corresponding to the true values α = 2 and β = 1.

… separated into 1000 samples with only 10 observations in each sample and (α, β) is estimated for each of these. For four of …

Fig. 5. Comparison of the (normalized) left-hand side of the integral equation (25) (smooth curves) with the empirical (normalized) right-hand side given by (26) for five simulated samples of 100 first-passage times of the OU process of the level 1 corresponding to the true α-values 1, 2, 3, 4, 11, respectively, and the true β = 1 (right to left). For these samples the estimates of (α, β) according to (29) are (1.212, 0.926), (1.677, 0.996), (2.657, 1.039), (4.055, 1.029), (10.801, 0.956), respectively.