From: FLAIRS-01 Proceedings. Copyright © 2001, AAAI (www.aaai.org). All rights reserved.

Hierarchical Representatives Clustering with Hybrid Approach

Byung-Joo

An and Eun-Ju Kim and Yill-Byung

Department of Computer Science,

College of Engineering,

Yonsei University, Korea

{joo,outframe,yblee} @csai.yonsei.ac.kr

Abstract

Clustering is a discovering process of meaningfulintbrmation by grouping similar data into compactclusters. Most of traditional clustering methodsare in

favor of small datasets and have difficulties handling

very large datasets. Theyare not adequate clustering

methodsfor partitioning huge datasets in data mining

perspective. Wepropose a newclustering technique,

HRC(hierarchicalrepresentatives clustering), that can

be applied to large datasets andfind clusters with good

quality. HRC

is a two phase algorithmthat take advantage of a hybrid approachthat combineSOM

and hierarchical clustering. Experimentalresults showthat HRC

can discover better clusters efficiently in comparison

to

traditional clustering methods.

Introduction

Data clustering is an important technique for exploratory

data analysis. It has been used practically in real world application of data classification, imageprocessing, and information retrieval. Clustering is a basic technique to find useful information hidden in databases by grouping data with

similar characteristics (Zhang, Ramakrishnan, & Miron June

1996; 1997). It is a process to maximize within-clusters

similarity and minimize between-clusters similarity (Duda

& Hart 1973). Discovered clusters explain distributions of

dataset and give a foundation for further analysis (Berry

Linoff 1997). So clustering is a starting point in data mining

process. Clustering technique is applicable to group customers according to buying pattern, categorize web documents by subject, and extract interesting spatial pattern in

GIS databases. In recent, there have been manystudies of

data mining or knowledgediscovery in databases that is defined as the discovery of interesting, implicit, and previously unknown knowledge from large databases (Fayyad,

Piatetsky-Shapiro, & Smyth 1996). As data mining emerges,

manyresearchers are interested in efficient and effective

clustering algorithm that can reduce the numberof scanning

raw data and computational complexity.

In this paper, we propose a new clustering technique,

HRC(hierarchical representatives clustering), that can be efficiently applied to large datasets and find clusters with good

Copyright @2000, AmericanAssociation for Artificial Intelligence(www.aaai.org).All rights reserved.

124

FLAIRS-2001

Lee

OOOO0

OOOOO

ooooo

O00OO

?2222,’

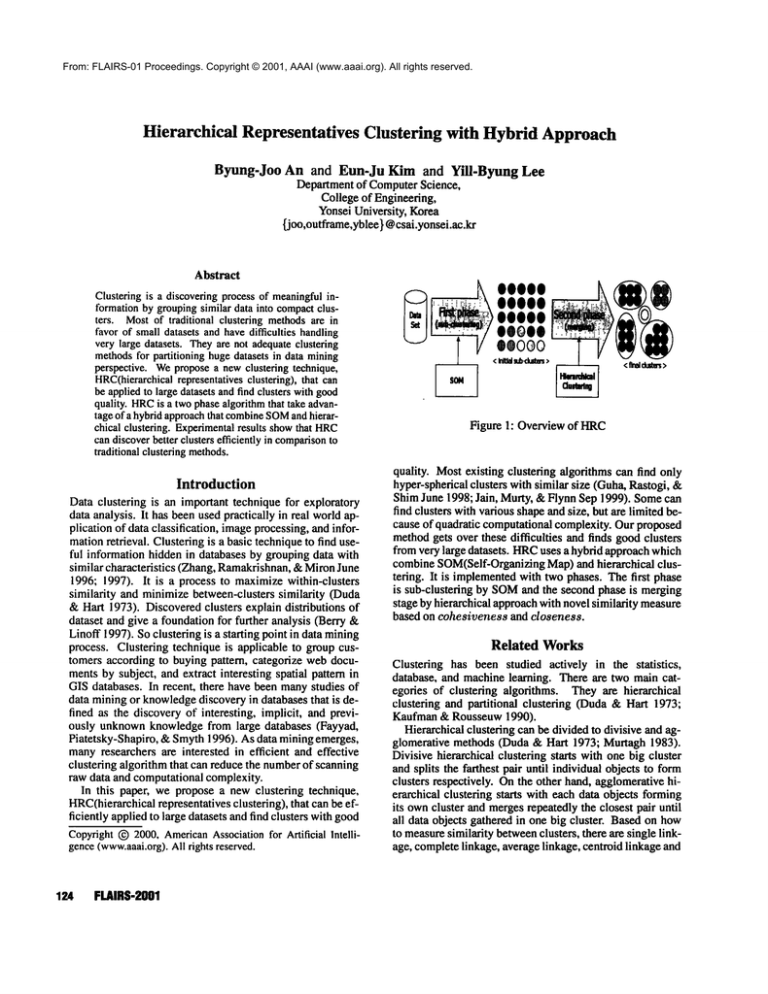

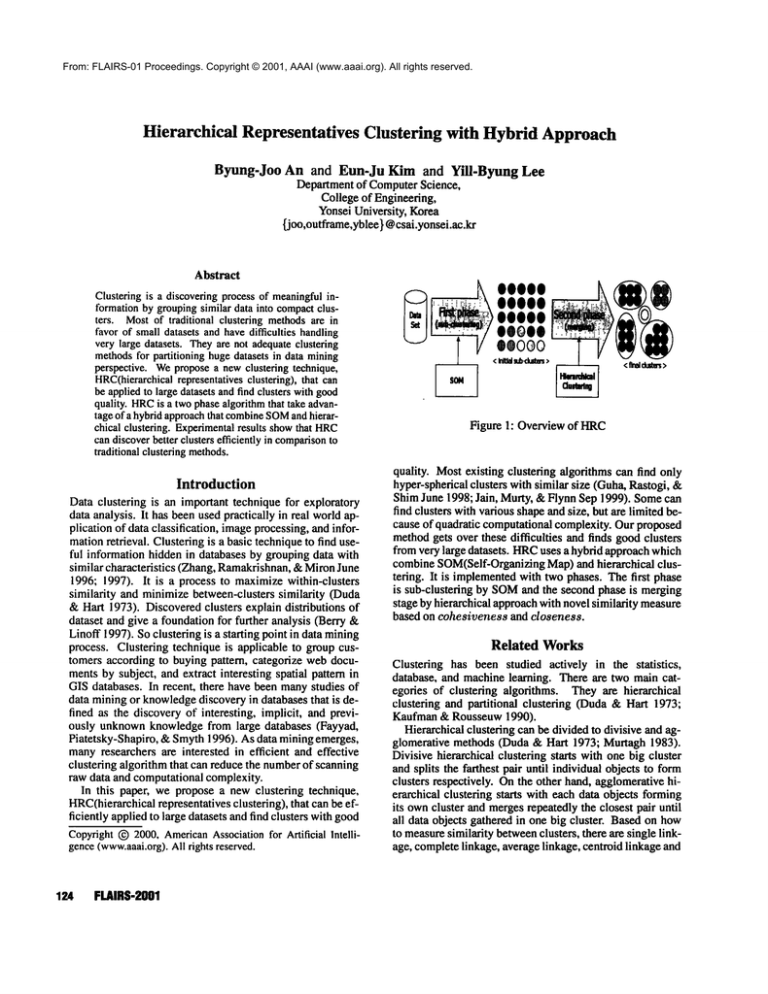

Figure 1: Overview of HRC

quality. Most existing clustering algorithms can find only

hyper-spherical clusters with similar size (Guha, Rastogi,

Shim June 1998; Jain, Murty, & Flynn Sep 1999). Some can

find clusters with various shape and size, but are limited because of quadratic computational complexity. Our proposed

methodgets over these difficulties and finds good clusters

from very large datasets. HRCuses a hybrid approach which

combine SOM(Self-Organizing Map) and hierarchical clustering. It is implemented with two phases. The first phase

is sub-clustering by SOMand the second phase is merging

stage by hierarchical approach with novel similarity measure

based on cohesiveness and closeness.

Related

Works

Clustering has been studied actively in the statistics,

database, and machine learning. There are two main categories of clustering algorithms. They are hierarchical

clustering and partitional clustering (Duda & Hart 1973;

Kaufman & Rousseuw 1990).

Hierarchical clustering can be divided to divisive and agglomerative methods (Duda & Hart 1973; Murtagh 1983).

Divisive hierarchical clustering starts with one big cluster

and splits the farthest pair until individual objects to form

clusters respectively. Onthe other hand, agglomerative hierarchical clustering starts with each data objects forming

its owncluster and merges repeatedly the closest pair until

all data objects gathered in one big cluster. Based on how

to measuresimilarity betweenclusters, there are single linkage, complete linkage, average linkage, centroid linkage and

ward’s method(Duda &Hart 1973).

Partitional clustering optimizesobjective function to partition datasets into k clusters. K-Means,K-Medoid,and

PAMare belong to this category and K-Meansis the most

common

algorithm because of its simplicity. In K-Means,

weselect randominitial centroids and assign data objects to

cluster whosecentroid is nearest, then update cluster centroids. This processof assigningdata objects to cluster and

re-calculating centroids is continuesuntil stoppingcondition

is satisfied.

Somerecent researches targeted on handling large

datasets by using a summarized

cluster representation or a

special data structure. BIRCH(Balanced

Iterative Reducing

and Clustering using Hierarchies) (Zhang, Ramakrishnan,

MironJune 1996; 1997) defined a cluster feature that is

summarizedcluster representation. A cluster feature consists of the numberof data objects, the linear sumof data

objects, and the square sumof data objects. This method

utilizes a balancedtree of cluster features without dealing

with all data objects. Whena newdata object is inserted

to a cluster, the newcluster feature can be calculated from

the previouscluster feature withoutrequiring all data objects

in the cluster, The incremental BIRCH

algorithm is a fast

clustering algorithmwith limited resources, but can’t find

diverse shapeof clusters becauseit is basedon the centroidbased approach.

Chameleon(Karypis, Hun, & KumarAugust 1999) suggested a dynamicmodelingof cluster similarity. Through

two phases, chameleonconstructs graph and partitions the

graph, then mergesthese partitions. It yielded goodresults

for finding highly variable clusters. Thusit is moreapplicable to spatial data mining.

Hierarchical

Representatives

Clustering

HRC

is a hybrid methodthat can be applicable to very large

datasets. Becauseit exploits representatives of cluster to

reduce computationalcomplexity,it is scalable and robust

to outliers andnoises.

HRCis a two phase algorithm that take advantage of

a hybrid approach which combine SOMand hierarchical

clustering. SOMhas advantagesin applying large datasets

due to its on-line learning process. Hierarchical clustering is knownto be better than SOM

in point of clustering

quality, although slower. HRCadopted good features of

two methods,SOM’sefficiency of processing large datasets

and hierarchical clustering’s cluster quality. In the first

phase, HRC

uses SOM

to partition dataset into initial small

sub-clusters that have two representatives. In the second

phase, these sub-clusters foundin the first phaseare merged

by agglomerative hierarchical manner. At this time, to

measure betweenclusters similarity we use cohesiveness

and closeness. Similarity based on cohesiveness and

closeness can take account of contextual relation between

clusters.

The first phase: sub-clustering

Weuse SOM

to divide dataset into initial small sub-clusters.

Neuronsin the mapform sub-clusters respectively. The two

Figure 2: Flow of HRCalgorithm

Figure3: Tworepresentativesof initial sub-cluster

representativesof initial sub-cluster are determinedas following. Wecomputeeigenvector having the largest eigenvalue of data objects fallen on a sub-clusterand project data

objects on the eigenvector.Asin figure 3, twomiddlepoints

on eigenvectorare determinedto represent sub-cluster.

After finding representativesof sub-clusters, in the second

phase only these representatives are used to comparesubclusters similarity. Thereforeweget a data reductioneffect,

in additionto, reduceinfluenceof outliers andnoises.

The second phase: merging

In this phase, we mergerepeatedly sub-clusters foundin the

first phase by wayof agglomerativehierarchical approach

andfind final clusters. It is efficient to compare

clusters similarity becauseit is requiredonlysub-clusterrepresentatives

not rawdata objects.

Howto measuresimilarity of twoclusters is critical to

discovering good results (Karypis, Hun, & KumarAugust

1999). Tofind better clusters, weused a similarity measure

based on cohesiveness and closeness that considers contextual relation betweenclusters, cohesivenessis measured

by cohesiveDistance in equation (2) and closeness is by

closeDistancein equation (3).

1

cohcsiveDistance q x closeDistance ij 2

(1)

CohesiveDistance shows a degree of compactness for

internal cluster and closeDistanceshowsa degree of external nearnessfor between-clusters.

similarity ij =

link ij

cohesiveDistance~ - link i-I-link j

(2)

2

DATAMINING& THEWEB 125

clustering

method

SOM

K-Means

hierarchical clustering

HRC

time

space

complexity

complexity

O(nkl)

O(k + n)

O(nkl)

O(k + n)

2)

2)

O(n

O(n

O(nkl + m2) O(k + n + 2)

dataset

I

dataset

Z

dataset

3

Table !: Complexityof clustering methods

Figure 4: Twodimensionalspatial datasets

closeDistanceij = y~n, ~-~j w~. × w~b × zlira -rbll

ni

x nj

(3)

Wedefinedthe degreeof linking. It is the sumof weighted

distances that are betweenpairs of representatives. The

degree of linking betweencluster i and j is the sumof

weighteddistances betweenrepresentatives in cluster i and

representatives cluster j. Thedegreeof linking in cluster i

is the sumof weighteddistances which are betweenrepresentatives in cluster i. In equation(3)(4)(5), ra rb are

representativevectors, w,., is the numberof rawdata objects

that representative ra represent, ni is the numberof representatives of sub-clusteri.

nl

linkij

nj

= EEwr, x wrb x lira

a

-rbll 2 (4)

b

link,= E2’ E~," w~. xw~x Ilra--rbll 2 (5)

2

coheaiveDista~zeeijis represented by linkij normalized

by average of linki and linkj. This measures compactness,if cluster i and cluster j were merged,whether the

mergedcluster is more compactthan average compactness

of cluster i and j. eloseDistanceij is the averageweighted

distance betweenrepresentatives in cluster i and representatives in cluster j.

Complexity Analysis

Whenthe numberof data objects is n, the numberof cluster is k, the iteration frequency is l, and the numberof

sub-clusters is m, time and space complexity of SOM,KMEANS,

hierarchical clustering and HRCare in table 1.

Timeand space complexity of SOMand K-Meansare linearly proportional to the numberof data objects, but hierarchical clustering is quadratic. HRC’scomplexityis linearly proportional to n, althoughit needsmorecomputation

than SOMand K-Meansfor the second phase. Because the

numberof sub-clusters, m, is very small comparedto n, it

is negligible. ThusHRCis moreefficient than hierarchical

clustering of quadratic complexityand havegoodclustering

results with linear complexity.

Experiments

In experiments, we comparedour methodwith K-Means,hierarchical clustering, and a simple hybrid method.Thesimple hybrid methodis a merecombinationof SOM

and hierarchical clustering with single, completeand averagelinkage.

126

FLAIRS-2O01

Wetested with three spatial datasets and seven UCImachinelearningrepositorydatasets(australian,diabetes, heart,

iris, soybean,wineand zoo) (UC12000).

To confirmclustering results visually, spatial datasets are expressedas points

in two-dimensionaleuclidean space.

Q

=

~

(6)

i=1

Di

= E:£1

Eb=~

ll~a

- ~bll

nl

(7)

n~(n~- 1)

Goodness

of clustering results is evaluated by Q in equation(6). Equation(7)showsthat Di is the average pairwise

distancewithin cluster i. Q is the averageof Dis.

Experimental Results

Experimentalresults with three spatial datasets are shown

and compared

by clustering quality(Q) in table 2. In dataset

1, Wehave observedthat K-Meanscan find two elliptical

clusters with similar size on the upperside, but failed to one

big circular cluster and twoadjacent small clusters on the

middle. The big circular cluster is divided and two small

clusters are merged. BecauseK-Meansalgorithm implicitly assumesthat clusters are hyper-ellipsoidalandof similar

size, it can’t find clusters variedin size as dataset 1. Hierarchical clustering showsdifferent results accordingto how

to measurecluster similarity. Single linkage and complete

linkage mergemostdifferent clusters becausethey are very

sensitive to noise and outlier. Averagelinkage, althoughit

is less sensitive to noise, splits big cluster and mergessmall

clusters due to its centroid-based nature. The simple hybrid methodhas similar results without regard to simple,

complete, or average linkage in mergingphase because it

reducesinfluenceof noise by sub-clustering.But it failed to

find correct clusters. HRC

finds twoelliptical clusters that

are connectedby line and of similar size on the upperside,

and separates one big circular cluster and twoadjacentsmall

clusters on the middle.Results with datasets 2 and dataset 3

are also similarto the result of dataset1. In table 2, clustering quality of HRC

is better than other methodsfor dataset 1,

dataset 2, and dataset 3. Fromthese experimentalresults we

confirmedthat HRC

could find variable sized clusters with

noises.

To verify with real world datasets, seven UCImachine

learningrepositorydatasets (australian, diabetes,heart, iris,

soybean,wine, and zoo) are tested and evaluated by Q, the

K-Means

dataset 1

dataset 2

dataset 3

5.609

6.217

2.708

hierarchical clustering

single complete average

8.063

6.012

5.673

5.854

9.700

8.042

2.495

2.620

2.577

HRC

dataset 1

dataset 2

dataset 3

4.369

5.274

1.960

single

5.917

5.884

2.613

simple hybrid

complete average

5.699

5.602

6.523

5.646

2.517

2.616

Table2: Comparisonof clustering quality(Q) with spatial

datasets

K-Means

australian

diabetes

heart

iris

soybean

wine

zoo

1.322

0.540

1.475

0.918

3.399

0.715

I. 185

HRC

australian

diabetes

heart

iris

soybean

wine

zoo

1.535

0.521

1.284

0.786

2.933

0.663

1.090

hierarchical clustering

single complete average

1.538

1.334

1.538

0.625

0.604

0.604

1.673

1.624

1.378

0.890

0.844

0.917

3.095

3.095

3.095

0.988

0.719

0.988

1.354

i .361

! .306

simple hybrid

single complete average

i .048

1.322

1.322

0.534

0.534

0.534

1.358

i.488

1.487

0.938

0.956

0.956

3.127

3.081

3.095

0.839

0.732

0.707

1.223

1.317

1.380

Table 3: Comparisonof clustering quality(Q) with UCI

datasets

measureof clustering quality, in table 3. In table 3, wehave

obtained the fact that HRCis superior to other methodswith

six datasets exceptfor one, australian dataset.

In the experimentalresults with spatial datasets and real

world datasets, weconfirm that HRCcan find goodclusters

andpartition efficiently large datasets.

on cohesiveness and closeness.

By experimental results we confirm that HRCcan discoverbetter clusters efficiently in comparison

to traditional

clustering methodsand the simple hybrid method.

References

Berry, M. J. A., and Linoff, G. 1997. Data MiningTechniques for Marketing, Sales, and CustomerSupport. Jone

Wiley & Sons.

Duda, R. O., and Hart, P.E. 1973. Pattern Classification andSceneAnalysis. A Wiley-IntersciencePublication,

NewYork.

Fayyad; Piatetsky-Shapiro; and Smyth. 1996. Advances

in knowledgediscovery and data mining. AAAIPress/The

MITPress.

Guha, S.; Rastogi, R.; and Shim, K. June 1998. Cure:

Anefficient clustering algorithmfor large databases, the

ACMSIGMOD

Conference on Managementof Data, Seattle, Washington.

Jain, A. K.; Murty,M.N.; and Flynn,P. J. Sep. 1999. Data

clustering: a review, the ACM

Comput.Surv. 31:264- 323.

Karypis, G.; Han, E.-H.; and Kumar, V. August 1999.

Chameleon:Hierarchical clustering using dynamicmodeling. IEEEComputer32.

Kaufman,L., and Rousseuw,P.J. 1990. Finding Groups

in Data- AnIntroductionto Cluster Analysis. WileySeries

in Probability and Mathematical

Statistics.

Murtagh,F. 1983. A survey of rescent advancesin hierarchical clustering algorithms. The ComputerJournal.

UCI.

2000.

Maching Learning Repository,

http:l/www.ics,uci.edulrelearn.

Zhang, T.; Ramakrishnan,R.; and Miron. 1997. Birch: A

newdata clustering algorithm and its applications. Data

Minningand KnowledgeDiscover), 1:141-182.

Zhang, T.; Ramakrishnan, R.; and Miron. June 1996.

Birch: Anefficient data clustering methodfor very large

databases, the ACMSIGMOD

Conference on Management

of Data, Montreal, Canada.

Conclusions

In this paper we presented HRC,the newclustering algorithm, whichcan be applicable to very large datasets. Because it exploits representatives of cluster to reduce computational complexity,it is scalable and robust to outliers

and noises. HRCis a two phases algorithm that take advantage of a hybrid approachthat combineSOM

and hierarchical clustering. HRCadopted goodfeatures of two methods, SOM’s

efficiency of processinglarge datasets and hierarchical clustering’s cluster quality. In addition, to measure

betweenclusters similarity we use a similarity, whichcan

take accountof contextual relation betweenclusters, based

DATAMINING& THEWEB 127