Online Semantic Extraction by Backpropagation Neural Network with Various Syntactic Structure Representations

Heidi H. T. Yeung

City University of Hong Kong

Tat Chee Road, Kowloon Tong,

Kowloon, Hong Kong, China

Summary of Demonstration

The sub-symbolic approach to Natural Language Processing (NLP) is one of the mainstreams of Artificial Intelligence. Many algorithms exist, at least in theory, for individual NLP tasks such as syntactic structure representation and lexicon classification. The goal of this research is to develop a hybrid architecture that can process natural language as humans do. We therefore propose an online intelligent system that extracts semantics (utterance interpretation) by applying a 3-layer backpropagation neural network to classify encoded syntactic structures into their corresponding semantic frame types (e.g. AGENT_ACTION_PATIENT). The results are generated dynamically from the training sets and from user inputs submitted through web page forms. The system reduces the time spent operating extra tools and lets users share the statistical results with colleagues in clear, standard forms.

Functionalities

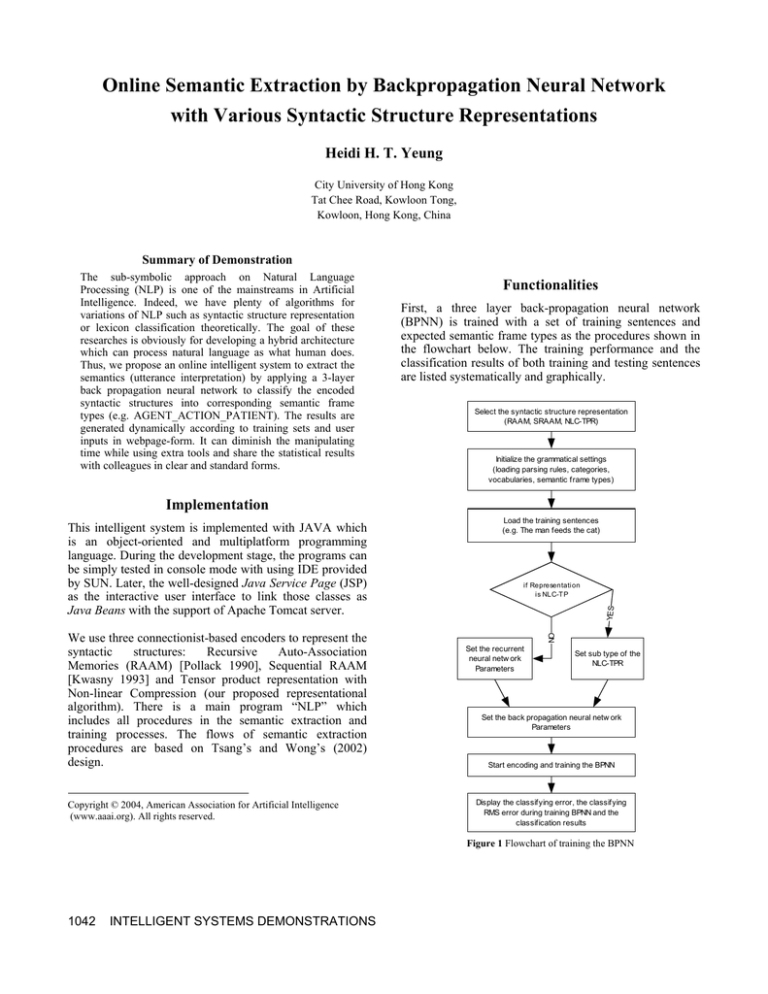

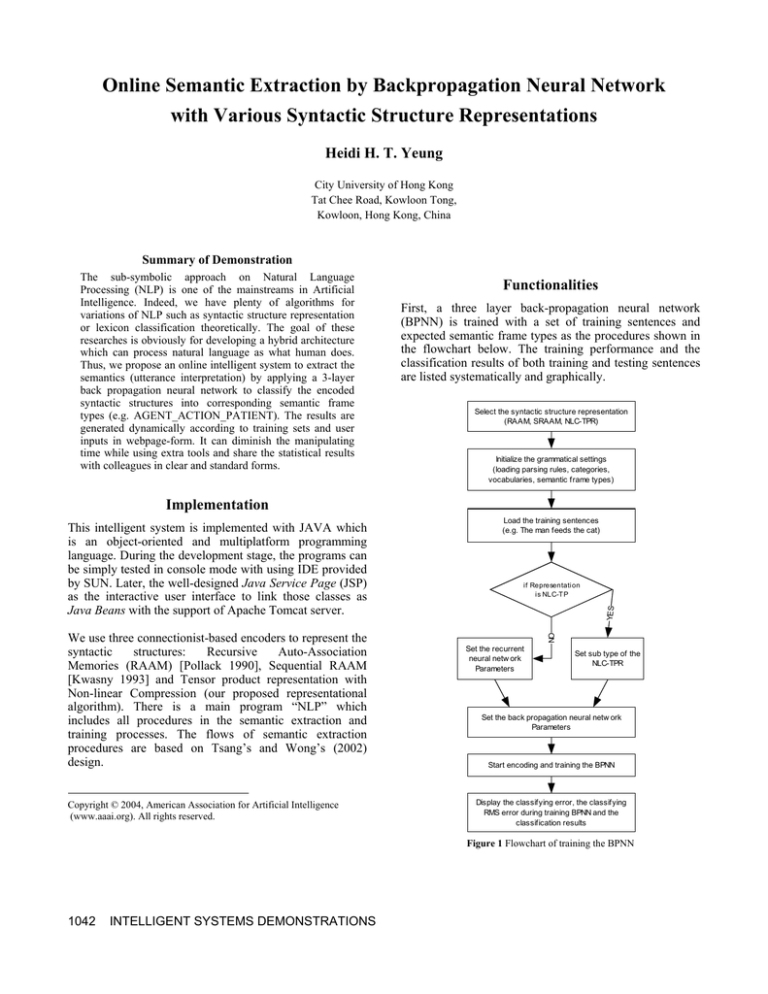

First, a three-layer backpropagation neural network (BPNN) is trained with a set of training sentences and their expected semantic frame types, following the procedure shown in the flowchart in Figure 1. The training performance and the classification results for both training and testing sentences are then listed systematically and plotted graphically.
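As an illustration of the training step described above, the following is a minimal sketch of a three-layer network trained by backpropagation. It is not the authors' code: the class name `TinyBpnn`, the sigmoid activation, the per-example update rule, the weight initialization and the learning rate are all assumptions made for the example. The input vector stands in for an encoded syntactic structure and the target vector for a one-hot semantic frame type.

```java
import java.util.Random;

/** Minimal three-layer (input, hidden, output) network trained by backpropagation. */
final class TinyBpnn {
    final double[][] w1; // hidden x (input + 1); the last column holds the bias
    final double[][] w2; // output x (hidden + 1); the last column holds the bias
    final double rate;   // learning rate (assumed value, chosen by the caller)

    TinyBpnn(int in, int hidden, int out, double rate, long seed) {
        Random rng = new Random(seed);
        this.w1 = randomMatrix(hidden, in + 1, rng);
        this.w2 = randomMatrix(out, hidden + 1, rng);
        this.rate = rate;
    }

    static double[][] randomMatrix(int rows, int cols, Random rng) {
        double[][] m = new double[rows][cols];
        for (double[] row : m)
            for (int j = 0; j < cols; j++) row[j] = rng.nextDouble() - 0.5;
        return m;
    }

    static double sigmoid(double x) { return 1.0 / (1.0 + Math.exp(-x)); }

    /** Computes sigmoid(W * [x; 1]) for one layer. */
    static double[] layer(double[][] w, double[] x) {
        double[] y = new double[w.length];
        for (int i = 0; i < w.length; i++) {
            double sum = w[i][x.length]; // bias term
            for (int j = 0; j < x.length; j++) sum += w[i][j] * x[j];
            y[i] = sigmoid(sum);
        }
        return y;
    }

    double[] forward(double[] x) { return layer(w2, layer(w1, x)); }

    /** One gradient step on one example; returns the squared error before the update. */
    double train(double[] x, double[] target) {
        double[] h = layer(w1, x);
        double[] o = layer(w2, h);
        double[] dOut = new double[o.length];
        double err = 0;
        for (int k = 0; k < o.length; k++) {
            double e = target[k] - o[k];
            err += e * e;
            dOut[k] = e * o[k] * (1 - o[k]); // error times sigmoid derivative
        }
        // Propagate the output deltas back through w2 before w2 is changed.
        double[] dHid = new double[h.length];
        for (int j = 0; j < h.length; j++) {
            double s = 0;
            for (int k = 0; k < o.length; k++) s += dOut[k] * w2[k][j];
            dHid[j] = s * h[j] * (1 - h[j]);
        }
        for (int k = 0; k < o.length; k++) {
            for (int j = 0; j < h.length; j++) w2[k][j] += rate * dOut[k] * h[j];
            w2[k][h.length] += rate * dOut[k];
        }
        for (int j = 0; j < h.length; j++) {
            for (int i = 0; i < x.length; i++) w1[j][i] += rate * dHid[j] * x[i];
            w1[j][x.length] += rate * dHid[j];
        }
        return err;
    }

    public static void main(String[] args) {
        // Toy data only: two made-up "encoded structures" and two frame types.
        double[][] xs = {{1, 0}, {0, 1}};
        double[][] ts = {{1, 0}, {0, 1}};
        TinyBpnn net = new TinyBpnn(2, 3, 2, 0.5, 1L);
        for (int epoch = 0; epoch < 2000; epoch++)
            for (int n = 0; n < xs.length; n++) net.train(xs[n], ts[n]);
        System.out.println(java.util.Arrays.toString(net.forward(xs[0])));
    }
}
```

In a real run the inputs would be the vectors produced by the chosen encoder, and the number of output units would equal the number of semantic frame types.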

Select the syntactic structure representation (RAAM, SRAAM, NLC-TPR)

Initialize the grammatical settings (loading parsing rules, categories, vocabularies, semantic frame types)

Implementation

We use three connectionist encoders to represent the syntactic structures: Recursive Auto-Associative Memory (RAAM) [Pollack 1990], Sequential RAAM (SRAAM) [Kwasny 1993], and Tensor Product Representation with Non-linear Compression (NLC-TPR), our proposed representational algorithm. A main program, “NLP”, includes all procedures of the semantic extraction and training processes. The flow of the semantic extraction procedure is based on the design of Tsang and Wong (2002).
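The details of NLC-TPR are not given here, so the following sketch shows only the plain tensor product binding that such a representation builds on: each filler (word) vector is bound to a role vector by an outer product, and the bindings are summed. The non-linear compression step is not shown, and the class name, the method names and the toy vectors are hypothetical.

```java
/** Plain tensor product binding of fillers to roles (no compression step). */
final class TensorProductSketch {

    /** Outer product of a filler vector and a role vector, flattened row by row. */
    static double[] bind(double[] filler, double[] role) {
        double[] out = new double[filler.length * role.length];
        for (int i = 0; i < filler.length; i++)
            for (int j = 0; j < role.length; j++)
                out[i * role.length + j] = filler[i] * role[j];
        return out;
    }

    /** Superimposes two bindings by element-wise addition. */
    static double[] add(double[] a, double[] b) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = a[i] + b[i];
        return out;
    }

    /** Recovers the filler bound to a role; exact only for orthonormal role vectors. */
    static double[] unbind(double[] structure, double[] role, int fillerSize) {
        double[] filler = new double[fillerSize];
        for (int i = 0; i < fillerSize; i++)
            for (int j = 0; j < role.length; j++)
                filler[i] += structure[i * role.length + j] * role[j];
        return filler;
    }
}
```

For instance, binding a vector for "man" to a subject role and a vector for "cat" to an object role, then adding the two bindings, gives a single fixed-width vector from which either word can be recovered with `unbind`.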

Copyright © 2004, American Association for Artificial Intelligence

(www.aaai.org). All rights reserved.

Load the training sentences (e.g. The man feeds the cat)

If the representation is NLC-TPR: YES / NO

This intelligent system is implemented in Java, an object-oriented, multiplatform programming language. During the development stage, the programs can be tested simply in console mode using the IDE provided by Sun. Later, well-designed JavaServer Pages (JSP) serve as the interactive user interface, linking those classes as JavaBeans with the support of the Apache Tomcat server.
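To make the JavaBeans link concrete, here is a minimal sketch of a bean that could hold the settings a user submits through the web form. The class name, the property names and the default values are hypothetical, not taken from the system. A JSP page would typically create and fill such a bean with the standard `jsp:useBean` and `jsp:setProperty` actions.

```java
import java.io.Serializable;

/**
 * Hypothetical bean for the training settings chosen on the web page.
 * Declare it public, in its own file and package, when deploying under Tomcat.
 */
final class BpnnSettingsBean implements Serializable {
    private static final long serialVersionUID = 1L;

    private String representation = "RAAM"; // one of RAAM, SRAAM, NLC-TPR
    private int hiddenUnits = 10;           // assumed default, not from the paper
    private double learningRate = 0.1;      // assumed default, not from the paper

    /** Beans need a no-argument constructor so the container can create them. */
    public BpnnSettingsBean() { }

    public String getRepresentation() { return representation; }

    public void setRepresentation(String representation) {
        boolean known = "RAAM".equals(representation)
                || "SRAAM".equals(representation)
                || "NLC-TPR".equals(representation);
        if (!known) {
            throw new IllegalArgumentException("Unknown representation: " + representation);
        }
        this.representation = representation;
    }

    public int getHiddenUnits() { return hiddenUnits; }

    public void setHiddenUnits(int hiddenUnits) {
        if (hiddenUnits <= 0) {
            throw new IllegalArgumentException("hiddenUnits must be positive");
        }
        this.hiddenUnits = hiddenUnits;
    }

    public double getLearningRate() { return learningRate; }

    public void setLearningRate(double learningRate) { this.learningRate = learningRate; }
}
```

Keeping validation in the setters means a malformed form submission fails before any encoding or training starts.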

Set the recurrent neural network parameters

Set the subtype of the NLC-TPR

Set the backpropagation neural network parameters

Start encoding and training the BPNN

Display the classifying error, the classifying RMS error during BPNN training, and the classification results

Figure 1 Flowchart of training the BPNN

1042

INTELLIGENT SYSTEMS DEMONSTRATIONS

To test the classification performance of the BPNN, the user can enter a grammatical sentence through the interactive interface provided. The interface then plots the output layer activations of the BPNN and reports the semantic frame type it determines. If the user also selects the expected type, the classification error is calculated as a reference.
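The decision rule and the error formula are not spelled out here, so the sketch below makes two assumptions: the determined frame type is the output unit with the highest activation, and the classification error is the RMS difference between the activations and a one-hot target for the expected type. The class name and the second frame label are hypothetical.

```java
/** Picks a frame type from output activations and scores it against the expected type. */
final class FrameDecision {

    /** Index of the most active output unit (first one wins on ties). */
    static int argMax(double[] activations) {
        int best = 0;
        for (int k = 1; k < activations.length; k++)
            if (activations[k] > activations[best]) best = k;
        return best;
    }

    /** RMS error against a one-hot target whose 1 sits at expectedIndex. */
    static double rmsError(double[] activations, int expectedIndex) {
        double sum = 0;
        for (int k = 0; k < activations.length; k++) {
            double target = (k == expectedIndex) ? 1.0 : 0.0;
            double e = target - activations[k];
            sum += e * e;
        }
        return Math.sqrt(sum / activations.length);
    }

    public static void main(String[] args) {
        // The second label is made up for the example.
        String[] frames = {"AGENT_ACTION_PATIENT", "AGENT_ACTION"};
        double[] out = {0.91, 0.12};
        System.out.println(frames[argMax(out)] + " rms=" + rmsError(out, 0));
    }
}
```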

After the BPNN is trained, the latest data are stored for reference, and four types of results can be reviewed from the system.

1) Encoding Progress – Real-time plots of the root-mean-square (RMS) error of the recurrent NN during training for RAAM and SRAAM.

2) Classifying Progress – Real-time plots of the RMS error of the BPNN during training for different data sets and syntactic structure representations.

3) Cluster Results – The syntactic structure representations and the BPNN hidden layer activations of the training sentences are clustered into distance maps, saved as PostScript files, for different data sets and syntactic structure representations.

4) Classifying Results – The output layer activations of the BPNN are tabulated against both training and testing sentences for different data sets and syntactic structure representations.

Figure 3 Output layer activation of BPNN

The tree form is previewed with the last Flash movie, shown in Figure 4.

Special Features

Making use of the dynamic properties of Macromedia Flash movies, we create three SWF documents for graphical display. The first plots the root-mean-square errors at the output layers of the recurrent neural network (for RAAM and SRAAM) and of the BPNN during training, as shown in Figure 2.

Figure 4 Tree display

The cluster results for the memories and the BPNN hidden layer activations are generated and stored on the server side. A Java class then invokes the cluster application and the PostScript-generating program through the operating system.
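How a Java class can hand work to an external program is sketched below with the standard `ProcessBuilder` API. The program name, the file names and the argument order are hypothetical, since the command line of the cluster application is not described here; a system built in 2004 may well have used the older `Runtime.exec` instead.

```java
import java.io.File;
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

/** Launches an external clustering program and waits for it to finish. */
final class ClusterLauncher {

    /** Builds the command line; the argument order is an assumption. */
    static List<String> buildCommand(String program, File input, File output) {
        return Arrays.asList(program, input.getPath(), output.getPath());
    }

    /** Runs the command, forwards its console output, and returns its exit code. */
    static int run(List<String> command) throws IOException, InterruptedException {
        ProcessBuilder builder = new ProcessBuilder(command);
        builder.inheritIO(); // show the program's stdout and stderr on our console
        Process process = builder.start();
        return process.waitFor();
    }
}
```

A caller would first write the activation vectors to the input file, call `run(buildCommand(...))`, and treat a non-zero exit code as a failed clustering step.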

References

Kwasny, S. C., Kalman, B. L., and Chang, N. 1993. Distributed Patterns as Hierarchical Structures. In Proceedings of the World Congress on Neural Networks, Portland, OR, July 1993, vol. II, pp. 198-201.

Pollack, J. B. 1990. Recursive Distributed Representations. Artificial Intelligence, 46, pp. 77-105.

Tsang, W. M., and Wong, C. K. 2002. Extracting Frame Based Semantics from Recursive Distributed Representation - A Connectionist Approach to NLP. IC-AI'02.

This system is powered by Apache Tomcat 4.1 and Macromedia Flash MX.

Figure 2 Classifying progress display

The second Flash movie plots the activations of the BPNN output layer, as shown in Figure 3.
