From: AAAI-87 Proceedings. Copyright ©1987, AAAI (www.aaai.org). All rights reserved.

Learning

and Representation

Ghan

Jeffrey C. ScM.immer*

Department

of Information

and Computer

University of California, Irvine

schlimmer@ics .uci. edu

1986).

Science

92717

First, a new method

tinuously

new method

with

to overcome

guage.

concept

limitations

After

Secondly,

the existing

refine a distributed

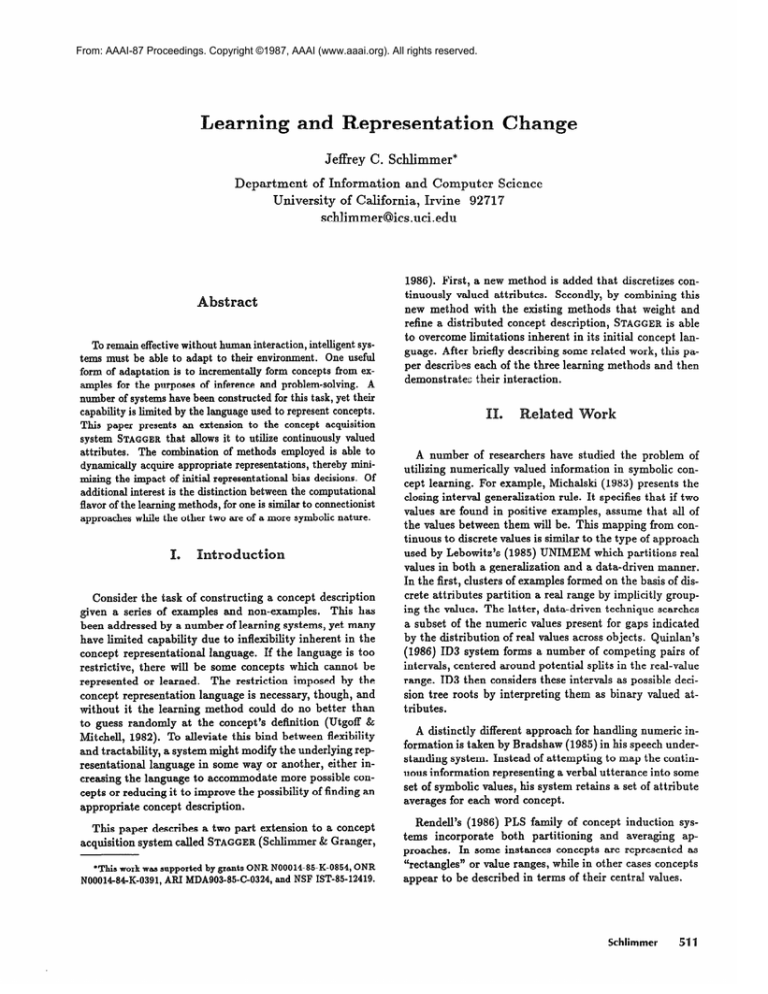

To remain effective without human interaction, intelligent systems must be able to adapt to their environment. One useful

form of adaptation is to incrementally form concepts from examples for the purposes of inference and problem-solving. A

number of systems have been constructed for this task, yet their

capability is limited by the language used to represent concepts.

This paper presents an extension to the concept acquisition

system STAGGER that allows it to utilize continuously valued

attributes. The combination of methods employed is able to

dynamically acquire appropriate representations, thereby minimizing the impact of initial representational bias decisions. Of

additional interest is the distinction between the computational

flavor of the learning methods, for one is similar to connectionist

approaches while the other two are of a more symbolic nature.

is added that discretizes

valued attributes.

methods

inherent

this

weight

and

STAGGER is able

in its initial concept

lan-

some related work, this pa-

per describes each of the three learning

demonstrates

that

description,

briefly describing

con-

by combining

methods

and then

their interaction.

IL

A number

utilizing

of researchers

numerically

have studied

valued information

the problem

in symbolic

of

con-

cept learning. For example, Michalski (1983) presents the

closing interval generalization rule. It specifies that if two

values are found in positive

examples,

assume that all of

the values between them will be. This mapping from continuous to discrete values is similar to the type of approach

used by Lebowitz’s

(1985) UNIMEM

values in both a generalization

which partitions

and a data-driven

real

manner.

In the first, clusters of examples formed on the basis of disConsider

the task of constructing

a concept

description

given a series of examples and non-examples.

This has

been addressed by a number of learning systems, yet many

have limited

concept

capability

language.

a subset of the numeric values present for gaps indicated

inherent

in the

by the distribution

If the language

is too

(1986)

due to inflexibility

representational

crete attributes partition a real range by implicitly grouping the values. The latter, data-driven technique searches

of real values across objects.

there will be some concepts which cannot be

The restriction imposed by the

represented or learned.

intervals,

concept

sion tree roots by interpreting

restrictive,

representation

language is necessary, though,

and

without it the learning method could do no better than

to guess randomly at the concept’s definition (Utgoff 8c

Mitchell, 1982). To all eviate this bind between flexibility

and tractability,

resentational

a system might modify the underlying

language

creasing the language

in some way or another,

to accommodate

cepts ok reducing it to improve

appropriate

concept

more possible con-

the possibility

of finding an

description.

This paper describes

acquisition

rep-

either in-

a two part extension

system called STAGGER (Schlimmer

to a concept

& &anger,

“This work was snpported by grants ONR N0001485-K-0854,

ONR

NOOO1484K-0391,

AM MDA903-85-C-0324,

and NSF ET-85-12419.

range.

Quinlan’s

ID3 sy st em forms a number of competing

centered

around potential

pairs of

splits in the real-value

ID3 then considers these intervals

as possible deci-

them as binary

valued at-

tributes.

A distinctly

different

approach for handling

numeric in-

formation is taken by Bradshaw (1985) in his speech understanding system. Instead of attempting to map the continuous information

representing

a verbal utterance

into some

set of symbolic values, his system retains a set of attribute

averages for each word concept.

Rendell’s

(1986)

PLS

family

of concept

induction

sys-

tems incorporate

both partitioning

and averaging

approaches.

In some instances concepts are represented as

“rectangles”

or value ranges, while in other cases concepts

appear to be described

in terms of their central values.

Schlimmer

511

Along

representational

lines, Utgoff

(1982)

and Mitchell

were perhaps the first to address the issue of constrictive

representational assumptions. In this and subsequent work

1986) they develop a method which explicitly

(Utgoff,

tifies shortcomings

descriptive

language.

STAGGER’S numeric

learning

ing class, for it divides

termined

number

between

of STAGGER alleviates

description

justment

belongs

ones.

Perhaps

language.

This

de-

more impor-

the three learning

the impact

occurs in a continuous

of the methods

in the partition-

real values into a dynamically

of discrete

tantly, interaction

concept

iden-

and triggers procedures for relaxing the

components

of an ill-fitting

initial

representational

ad-

and natural manner; each

assists the others while performing

its own

task.

STAGGER uses three

and represents

concepts

interacting

learning

components

as a set of weighted,

scription

pieces.

One of the learning

weights,

another

adds new Boolean

adds new pieces corresponding

symbolic

methods

deLS

adjusts the

pieces, and the third

to an aggregation

Figurewedium

ments influence

learning,

mechanism

for they exert influence on each other by changing

proceeds.

Given

a new example,

Concept

Concepts

weighted,

representation

are represented

symbolic

combination.

Figure 1 depicts a typical concept description

for size=medium

& color=red.

Each descriptive element is

dually weighted to capture positive and negative implication. One weight formalizes the element’s sufficiency (solid

weights

are based on the logical

ical necessity

Gaschnig,

(LN)

LS

LN

measures

=

=

A weight

(LS)

These

and log-

used in Prospector

(Duda,

.m

(1)

p(Tmatehedlexample)

p(lmatchedl-example)

greater

ness; less than one denotes

of each matching

odds(Ejfeatures)

piece and the LN weight

= odds(E)

x

LS x

Vhf

LN

(2)

V4-f

The resulting

matching

itive example

and reflects the degree of match between

concept

description

score is the odds in favor of a pos-

and the example.

This holistic

the

flavor

of matching differs from many machine learning systems in

which a single characterization

completely influences concept prediction.

.

Modif$ng

element

The weights associated

weights

with each of the concept descrip-

than one indicates

an element

in terms

that predicts

pretation.

For both, a weight of one indicates

non-

=

matched&example

1

matched&examplej/

1 examples

-examples

(31

predictive-

EN has the same range but the opposite

.

LN=

~matched&example

I

Tmatched&-example

I

examples

Texamples

inter-

irrelevance.

lTo convertodds into probability,divideodds by one plus odds.

Machine Learning & Knowledge Acquisition

examples; these counts are used to compute estimates of

the probabilities in Equation 1.

LS

and is interpreted

examples.

512

the

tion elements are easily adjusted by incrementally counting

the number of different matches between an element and

LS ranges from zero to infinity

of 0dds.l

=s- -example.

sufficiency

1979).

& Hart,

ele-

and the other represents its

(dashed line), or lmatched

necessity

concept

Following

et aI., 1979), the prior expec-

of the concept

description may be a single attribute-value pair, a range of

acceptable values for a real-valued attribute, or a Boolean

line), or matched ==sexample,

of its identity.

of each unmatched one.

and matching

Each element

description.

tation of a positive example is metered by multiplying in

in STAGGER as a set of dually-

pieces.

all of the weighted

expectation

used in (Duda

the LS weight

A.

& red concept

of real-

values into a few discrete ones. The interaction between

these methods may be viewed as a form of representational

the substrate from which induction

I.24

.m~.m~.m--.m~

Keeping counts of matchings between elements and examples also allows calculating the prior expectation

example: odds(example)

= lexamplesl/l~examplesl.

for an

By adjusting

scriptive

the weights associated

connection&

with each of the de-

STAGGER is behaving

elements,

model.

Without

hidden

as a single layer

units, these mod-

els suffer from the same representational

STAGGER does without its Boolean

limitations

that

learning method.

Both

are unable to assign weights to a combination

this severely limits the number

to only linearly-separable

ones.

point of view,

of values, and

of discoverable concepts

From a representational

the weight method

can only form concepts

in terms of existing description elements.

Instead of including an element for all possible Boolean combinations

of the attribute-values,

tively

this method

begins with the rela-

strong bias of only single attribute-value

elements.

These end-points

through

Forming

new Boolean

break up the range into discrete

pairs.

search frontier is the set of single attribute-value

The B oo 1ean method uses three search operators,

specialization,

ements

generalization,

to the search frontier.

proposing

a new element

expectation

error.

constructive

by

extends

the descriptive

process while retaining

of a limited

the

representation.

is expected

STAGGER is behaving

to be a

too inclusively, too

and thus a more specific element may be needed.

So, STAGGER expands

a new AND

the search frontier

formed

necessary for the concept.

is unmatched

ements typically

by tentatively

from two elements

The selection

ments is based on two observations:

element

search is limited

when a non-example

positive example,

generally,

adjusting

properties

For instance,

The

only when STAGGER makes an

This cautiously

power of the weight

adding

and inversion to add new el-

which are

of component

ele-

at least one necessary

in a non-example,

and necessary el-

have strong logical necessity weights.

The other type of prediction

error also triggers

Specifically,

A guess that a positive example is negative is

pansion.

overly specific. To correct for this underestimation,

search

is expanded to include a more general element; a new OR

formed from two sufficient elements is tentatively added.

Both predictive

ments.

errors are opportunities

Further details concerning

documented

Though

in (Schlimmer

the space of possible

to invert poor ele-

the Boolean

$t Granger,

method

are

Boolean

point.

is

valued at-

tributes. Therefore, STAGGER has a third learning method

which extends this space by adding discrete values for real

value ranges.

D.

Partitioning

In order

real-valued

to carve

into a set of discrete

ple statistic

intervals,

real-valued

STAGGER retains

for a number of potential’interval

a two by two

and negative

exend-

as the end points

By interpreting

attributes

The utility

these divisions

values, STAGGER maps the

of discrete

of subsequent instances into discrete

measure is similar to Equation

2, for

it involves

the prior odds of each class and a conditional

probability

ratio similar to LS and LN.

IClCZ88@.8l

U( end-point) =

p(cZass; 1 < e-p)

odds( class;) x

These conditional

probabilities

number of positive

may be computed

and negative

examples

and greater than the measured end-point.

tial end-points

is independent

(4)

p(classi 1 > e-p)

i=l

from the

with values less

Since the poten-

are taken from actual examples, the method

of scale considerations

and does not en-

tail any assumptions about the range of values. Furthermore, because partitioning

is driven by a statistic based

on class information,

the method

is able to uncover effec-

tive partitionings even when the values are uniformly distributed across all classes, something a gap finding method

(Lebowitz,

1985) is unable to do.

A straightforward

strategy for fractioning the real-value

range would be to choose the end-point with a maximal

utility

and thereby

ues: greater

divide the range into two discrete val-

and less. Rowever,

for concept learning

tasks

it would be more effective

to divide the range into a number of discrete values. So after applying

a local smoothing

the end-points

function,

STAGGER chooses

that are locally maximal.

These end-points

represent pivotal values, for Equation 4 favors those that

are predictive of concept indentity. This approach has the

advantage

that it naturally

and an effective

continuous

formed

end-points

the real-valued range, attributes

values in successive

into their discrete

sults in example

examples

counterparts.

descriptions

input requirements

ing methods

selects appropriate

number of discrete values.

may

This mapping

that are consistent

above.

Furthermore,

re-

with the

of both the weight and Boolean

described

with

be trans-

learn-

it embodies

a

type of representational

learning, because by partitioning

values into ranges, the concept description languages for

*

the other learning methods is expanded.

attributes

up an attribute’s

end-point,

A measure apphed to these numbers indicates useful

divisions in the value range.

Having aggregated

combinations

and in turn

amples with values less and greater than this potential

1986).

large, it does not include states for numerically

for each potential

which require finer distinctions,

the ex-

Each new exam-

record is kept of the number of positive

adds new elements

by beamSTAGGER selectively

searching through the space of all possible Booleans. The

initial

values.

and,

to naturally

this method transforms successive examples into a palatable form for the weight and Boolean learning methods.

real-valued

combinations

examples

the best are utilized

ple supplies a value to update these statistics,

values.

c.

are taken from processed

a beam-search,

range

a sim-

end-points.

E.

Interactions

between

STAGGER'S three learning

the learning

methods

methods

cooperatively

inter-

Schlimmer

513

BOOLEAN

LEARNING

rz

I

I

I

I

I

I

I

\

/

//

To illustrate the functioning

consider the set of potential

NUMERICAL

LEARNING

,e--

picted

piiqJFigure 2: Interaction

act as Figure

between

2 depicts.

method;

Note

that

identifies

the partitioning

5 and 15 that are used to partition

three discrete

measure

the two local maxima

near

the size attribute

into

values.

U( end-point)

25

Boolean

learning

method

base for the weight adjusting

it is restructuring

method

3.

4) clearly

STAGGER'S three methods.

The

alters the-representational

justing

in Figure

(Equation

of the partitioning

method,

end-points for this task de-

the input

so the latter

to the weight

ad-

is able to capture

the concept’s description.

The numeric learning method has this

administrative role for both the weight and Boolean learn-

ing processes;

it rewrites

the real-valued

attributes

into a

form suitable for induction

by the latter methods.

pendent

also exert influence on the rep-

learning

resentational

methods

processes which counsel them.

The de-

The Boolean

0

method draws its components from the pool of ranked elements maintained by the weight learner. The weighting

method

also provides

a weak form of feedback

meric method:

the similarity

uation function

(Equation

(Equation

attributes

2) implicitly

for the nu-

the numeric

4) and the matching

ensures that

will be amenable

IV.

between

the division

to weighting

Empirical

of real

and matching.

The interaction of the three learning methods is perhaps

best illustrated by examining their behavior on concept

learning tasks. Consider STAGGER'S acquisition of a pair

of simple object concepts.

Each object is describable in

0 and 20), its color

(one of 3 discrete values), and shape (3 discrete values).

For the first concept, an object is a positive example if

red and between 5 and 15 in size. Optimally, the size attribute

should be divided

into three ranges:

size < 5, 5 <

size < 15, and 15 < size. In each of 10 executions, STAGGER'S numeric partitioning method discovers this threeway split, and its Boolean combination

technique forms

a conjunction

combining

the middle

value of size and the

color red. The weight adjusting method further gives this

element more influence over matching than any other. The

following

is typical

of the elements

ative action of the three learning

514

formed

by the cooper-

methods.

Machine Learning & Knowledge Acquisition

10

SIZE

I

20

for 5.0 ssize<

15.0 & red.

equation

Performance

of its size (real value between

I

15

I

5

Figure 3: End-points

eval-

The complete,

denly.

Though

conjunctive

th e methods

element does not appear sudare not explicitly

synchro-

nized, they appear to operate in a staged manner as Figure 2 indicates. First, the numerical partitioning method

begins

to search for

ranges.

terms

1

0

a reasonable

At the same time,

way to partition

the weight

adjusting

real

method

searches for appropriate element strengths. The weight of

color=red

is adjusted at this time, but combination with

the size attribute must wait until the numerical method

settles down.

After processing about 50 examples, the

tripartite division of the size attribute is stable, and the

weight method is able to assign a strong LN weight to the

middle value of the size attribute. After this, the Boolean

method combines the size and color elements to form the

element depicted above. Weight adjusting finishes the job

by giving this element strong LS and LN values.

Regrettably,

these three learning methods are not sufficient for all concept learning tasks; there are some concepts

for which the numerical method is unable to uncover an effective partitioning.

For example, consider the concept of

objects that have a size between 5 and 15 or are red but not

both.

Figure 4 indicates

able to identify

limitation

that the numerical

a reasonable

partitioning

method

is un-

in this case. This

also arises if we consider the capabilities

of the

U ( end-point)

the weight method:

if a concept involves ‘an exclusive-or

of

a real-value range, STAGGER is unable to discover it.

251

Acknowledgements

Thanks to Rick Granger and Michal Young who provided

much of the early foundations

0

5

Figure 4: End-points

for this work; to Ross Quin-

Ian for his assistance in formulating the numeric learning

method; to Doug Fisher for insight on the interactions be-

20

10

15

SIZE

for 5.0 lsize<

15.0 @ red.

tween the weight, Boolean,

and to the machine

and numeric learning methods;

learning

group at UC1 for ready dis-

cussion and suggestions.

weight method used without the other methods; the weight

learner alone is only able to describe linearly-separable concepts (which

method

does not include exclusive-or).

allows it to overcome

its representational

this limitation

language.

direct the numerical method,

able to move beyond

by rewriting

If the Boolean

method

could

then the latter would also be

linearly-separable

eferences

The Boolean

concepts.

This is

an area for future study.

Bradshaw,

G.

sounds:

L. (1985).

Learning

to recognize ’speech

and model. PhD thesis, Department

A theory

of Psychology,

Carnegie-Mellon

Duda, R., Gaschnig,

J., & Hart,

in the Prospector

V.

Conclusions

ration.

and Future

University,

Pittsburgh,

PA.

In D. Michie

cro electronic

P. (1979).

consultant

age,

Model

design

system for mineral exploExpert

(Ed.),

Edinburgh:

systems

in the mi;

Edinburgh

University

Press.

This paper describes

of learning

learning

a three part approach

a concept from examples.

method

modifies

a simple concept

changing the weights associated

A second,

binations

symbolic

learning

of these descriptive

method

to overcome

third learning

set of discrete

elements

ranges,

style

description

with descriptive

method

forms

by

elements.

Boolean

com-

and allows the first

a representational

method

to the task

A connectionist

shortcoming.

divides real-valued

attributes

The

into a

so other methods

are able to conOverall, the

concepts.

struct descriptions of numerical

interaction between these three methods

is a type of co-

operative representational

learning. The numeric method

changes the bias for both the Boolean and weight method.

Similarly,

the Boolean

method

forms new compound

ments and thus increases the representational

of the weight adjusting method.

One drawback

illustrated

in the previous

ele-

capabilities

section arises

because cooperation

between the methods is incomplete.

Dynamic

feedback is lacking for the numeric method.

Though

it attempts

effective

learning

information

to form

about the progress

to alter its course of action.

method

value partitions

by the other

methods,

that

allow

it does not use

of learning

at those levels

Consequently

the numerical

can only uncover partitions

that can be utilized by

Lebowitz,

M. (1985).

generalization.

Michalski,

Categorizing

Cognitive

R. S. (1983).

ductive learning.

& T.

numeric information

Science,

A theory

and methodology

In R. S. Michalski,

M. Mitchell

Machine

(Eds.),

tificiaZ intelligence

approach.

for

9,285-308.

of in-

J. G. Carbonell,

learning:

Los Altos,

An

CA:

ar-

Morgan

Kaufmanu.

Quinlan, J. R. (1986).

Learning,

Rendell,

of decision trees. Machine

Induction

1, N-106.

L. (1986).

A g eneral framework

a study of selective

induction.

for induction

Machine

and

Learning,

I,

317-354.

Schlimmer,

J. C., & Granger,

R. H., Jr.

(1986).

mental learning from noisy data. Machine

317-354.

Utgoff, P. E., & Mitchell, T. M. (1982))

appropriate bias for inductive concept

ceedings

ligence

mann.

Utgoff,

of the National

(pp.

414-41‘7).

P. E. (1986).

Incre-

Learning,

I,

Acquisition

of

learning. Pro-

Conference

on Artificial

Intel-

Pittsburgh,

PA: Morgan

Kauf-

Shift of bias for inductive

c\oncept

learning.

In R. S. Michalski, J. G. Carbonell & T.

An artificial inM. Mitchell (Eds.), 1Mac h ine learning:

telligence

approach

(Vol.

2).

Los Altos,

CA:

Morgan

Kaufmann.

Schlimmer

515