From: AAAI-84 Proceedings. Copyright ©1984, AAAI (www.aaai.org). All rights reserved.

FIVE PARALLEL ALGORITHMS FOR PRODUCTION SYSTEM EXECUTION ON THE DADO MACHINE*

Salvatore J. Stolfo

Computer Science Department
Columbia University
New York City, N.Y. 10027

Abstract

In

this

paper

parallel

inherent

our

conclusions

under

by

algorithm

construction

in

a

abstract

is

empirically

on

the

at Columbia

algorithms

systems

designed

variety

Ongoing

programs.

each

five

production

algorithm

parallelism

system

specify

of

Each

machine.

of

we

execution

of

research

to

different

aims

evaluating

DAD02

on

to

the

prototype,

for

the

while

WM

elements

are

simple

lists

of

equals

sign),

constant

symbols

(corresponding

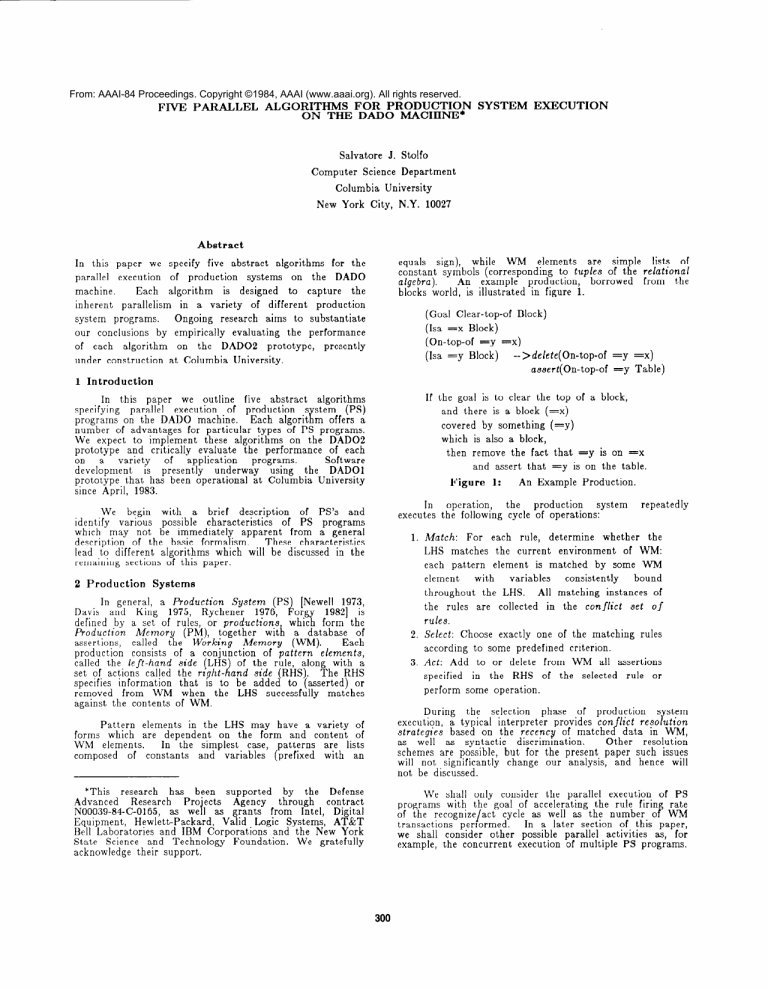

to tuples of the relational

An

example

production,

borrowed

from

the

algebra).

blocks world, is illustrated

in figure 1.

the

DAD0

capture

the

production

(Goal

substantiate

(Isa

performance

Clear-top-of

=x

(On-top-of

presently

(Isa

University.

Block)

Block)

=y

=y

=x)

-- > delete( On-top-of

Block)

assert(On-top-of

=y

=x)

=y

Table)

1 Introduction

In this paper we outline five abstract algorithms specifying the parallel execution of production system (PS) programs on the DADO machine. Each algorithm offers a number of advantages for particular types of PS programs. We expect to implement these algorithms on the DADO2 prototype and critically evaluate the performance of each on a variety of application programs. Software development is presently underway using the DADO1 prototype that has been operational at Columbia University since April, 1983.

We begin with a brief description of PS's and identify various possible characteristics of PS programs which may not be immediately apparent from a general description of the basic formalism. These characteristics lead to different algorithms which will be discussed in the remaining sections of this paper.

2 Production Systems

In general, a Production System (PS) [Newell 1973, Davis and King 1975, Rychener 1976, Forgy 1982] is defined by a set of rules, or productions, which form the Production Memory (PM), together with a database of assertions, called the Working Memory (WM). Each production consists of a conjunction of pattern elements, called the left-hand side (LHS) of the rule, along with a set of actions called the right-hand side (RHS). The RHS specifies information that is to be added to (asserted) or removed from WM when the LHS successfully matches against the contents of WM.

The production system repeatedly executes the following cycle of operations:

Match: For each rule, determine whether the LHS matches the current environment of WM, i.e., whether each pattern element matches some WM element, with variables bound consistently throughout the LHS. All matching instances of the rules are collected in the conflict set of rules.

Select: Choose exactly one of the matching rules according to some predefined criterion.

Act: Add to or delete from WM all assertions specified in the RHS of the selected rule, or perform some operation.

Pattern elements in the LHS may have a variety of forms which are dependent on the form and content of WM elements. In the simplest case, patterns are lists composed of constants and variables (prefixed with an equals sign), while WM elements are simple lists of constant symbols (corresponding to tuples of the relational algebra). An example production, borrowed from the blocks world, is illustrated in figure 1.

    (Goal Clear-top-of Block)
    (Isa =x Block)
    (Isa =y Block)
    (On-top-of =y =x)
    -->
    delete(On-top-of =y =x)
    assert(On-top-of =y Table)

Figure 1: An Example Production.

In English, this rule states that if the goal is to clear the top of a block, and there is a block (=x) covered by something (=y) which is also a block, then remove the fact that =y is on =x and assert that =y is on the table.
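The Match, Select and Act cycle described above can be made concrete with a minimal Python sketch run on the blocks-world rule of figure 1. It rests on assumptions that are not the interpreter discussed in this paper: WM elements are tuples of symbols, symbols beginning with "=" are variables, the match is naive, and Select simply takes the first instance in the conflict set as a stand-in for a real conflict resolution strategy. The names `match_pattern`, `match_lhs`, `run` and `CLEAR_TOP` are hypothetical.

```python
# Minimal sketch of the recognize/act cycle (assumed representation:
# tuples of symbols; symbols beginning with "=" are variables).

def match_pattern(pattern, element, bindings):
    """Extend `bindings` so `pattern` matches WM `element`, or return None."""
    if len(pattern) != len(element):
        return None
    new = dict(bindings)
    for p, e in zip(pattern, element):
        if p.startswith("="):                 # variable: bind it, or check consistency
            if new.setdefault(p, e) != e:
                return None
        elif p != e:                          # constant: must be identical
            return None
    return new

def match_lhs(lhs, wm):
    """All consistent bindings for a conjunction of pattern elements (naive match)."""
    partial = [{}]
    for pattern in lhs:
        partial = [b2 for b in partial for e in wm
                   if (b2 := match_pattern(pattern, e, b)) is not None]
    return partial

def instantiate(pattern, bindings):
    return tuple(bindings.get(s, s) for s in pattern)

def run(rules, wm, max_cycles=100):
    wm = set(wm)
    for _ in range(max_cycles):
        # Match: collect every instance of every rule into the conflict set.
        conflict_set = [(rhs, b) for lhs, rhs in rules for b in match_lhs(lhs, wm)]
        if not conflict_set:
            break
        # Select: exactly one instance (placeholder for a real strategy).
        rhs, bindings = conflict_set[0]
        # Act: apply the instantiated RHS to WM.
        for op, pattern in rhs:
            fact = instantiate(pattern, bindings)
            if op == "delete":
                wm.discard(fact)
            else:
                wm.add(fact)
    return wm

# The production of figure 1 as (LHS, RHS).
CLEAR_TOP = (
    [("Goal", "Clear-top-of", "Block"), ("Isa", "=x", "Block"),
     ("Isa", "=y", "Block"), ("On-top-of", "=y", "=x")],
    [("delete", ("On-top-of", "=y", "=x")), ("assert", ("On-top-of", "=y", "Table"))],
)

final = run([CLEAR_TOP], {("Goal", "Clear-top-of", "Block"), ("Isa", "A", "Block"),
                          ("Isa", "B", "Block"), ("On-top-of", "B", "A")})
print(sorted(final))
```

With block B on block A, one cycle fires the rule and moves B to the table. The next Match finds no instance, because Table is not asserted to be a block, so the cycle stops.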

During the selection phase of production system execution, a typical interpreter provides conflict resolution strategies based on the recency of matched data in WM, as well as syntactic discrimination. Other resolution schemes are possible, but for the present paper such issues will not significantly change our analysis, and hence will not be discussed.

*This research has been supported by the Defense Advanced Research Projects Agency through contract N00039-84-C-0165, as well as grants from Intel, Digital Equipment, Hewlett-Packard, Valid Logic Systems, AT&T Bell Laboratories and IBM Corporations and the New York State Science and Technology Foundation. We gratefully acknowledge their support.

We shall only consider the parallel execution of PS programs with the goal of accelerating the rule firing rate of the recognize/act cycle as well as the number of WM transactions performed. In a later section of this paper, we shall consider other possible parallel activities as, for example, the concurrent execution of multiple PS programs.


Viewed abstractly, a parallel implementation of the PS cycle may proceed as illustrated in figure 2.

    1. Assign some subset of rules to a set of (distinct) processors.
    2. Assign some subset of WM elements to a set of (possibly distinct) processors.
    3. Repeat until no rule is active:
       a. Broadcast an instruction to all processors to begin the match, storing matching instances in their local conflict sets.
       b. Report the maximally rated instance of each local conflict set, and select the maximally rated instance in the system.
       c. Broadcast the instantiated RHS action specifications of the instance selected in step 3.b to all processors, which update their local WM accordingly.
       end Repeat;

Figure 2: Abstract Parallel Production System Algorithm.

This very simple view of the parallel implementation forms the basis of our subsequent analysis.
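The abstract algorithm of figure 2 can be simulated sequentially. The following is a sketch under several assumptions of our own: rules are ground (variable-free) to keep it short, rules are assigned to processors round-robin, every processor receives all of WM, and the rating of an instance is the most recent time tag among its matched elements, a simple recency criterion. Refraction is not modeled, so the example rules delete their own triggering element. `Rule`, `Processor` and `run_parallel` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    """A ground (variable-free) rule, to keep the sketch short."""
    name: str
    lhs: frozenset                       # WM elements that must all be present
    adds: frozenset = frozenset()
    deletes: frozenset = frozenset()

class Processor:
    """One simulated processor: a subset of the rules plus its own copy of WM."""

    def __init__(self, rules):
        self.rules = list(rules)
        self.wm = {}                     # WM element -> time tag of its addition

    def update(self, changes, clock):
        for op, elem in changes:
            if op == "add":
                self.wm[elem] = clock
            else:
                self.wm.pop(elem, None)

    def local_best(self):
        """Local match; return the best (rating, name, rule) or None."""
        best = None
        for rule in self.rules:
            if all(e in self.wm for e in rule.lhs):
                # Recency-based rating: most recent time tag among matched data.
                rating = max((self.wm[e] for e in rule.lhs), default=0)
                if best is None or (rating, rule.name) > best[:2]:
                    best = (rating, rule.name, rule)
        return best

def run_parallel(rules, initial_wm, n_procs=4, max_cycles=50):
    # Step 1: assign rules to processors (round-robin is one arbitrary choice).
    procs = [Processor(rules[i::n_procs]) for i in range(n_procs)]
    # Step 2: in this sketch every processor simply receives all of WM.
    changes = [("add", e) for e in sorted(initial_wm)]
    fired = []
    for clock in range(1, max_cycles + 1):
        for p in procs:                                  # broadcast WM changes
            p.update(changes, clock)
        candidates = [c for c in (p.local_best() for p in procs) if c is not None]
        if not candidates:                               # no rule is active
            break
        _, name, rule = max(candidates, key=lambda c: c[:2])   # global select
        fired.append(name)
        changes = ([("delete", e) for e in rule.deletes]
                   + [("add", e) for e in rule.adds])
    return fired

RULES = [
    Rule("r1", frozenset({"a"}), adds=frozenset({"b"}), deletes=frozenset({"a"})),
    Rule("r2", frozenset({"b"}), adds=frozenset({"c"}), deletes=frozenset({"b"})),
    Rule("r3", frozenset({"c"}), adds=frozenset({"done"}), deletes=frozenset({"c"})),
]
print(run_parallel(RULES, {"a"}))
```

The firing sequence does not depend on how the rules are spread over processors. Only the local match work is divided, while selection remains a single global decision per cycle.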

3 Characteristics of Production System Programs

In this section we enumerate various characteristics of PS programs in general terms. The reader will note that these characteristics are less indicative of a specific PS formalism, but rather are characteristics of various problems whose solutions are encoded in rule form. It should be noted, though, that the “inherent parallelism” of problems may not be represented by the particular PS formalism used for their solution.

1. Temporal Redundancy. Relatively few changes are made to WM on each cycle [Forgy 1982]. Thus, rules need not be matched against all of WM on each cycle. The PS interpreter may save the state of the match between cycles, incorporating only the changes made to WM.

2. Few Affected Rules. On each cycle, only a few rules are affected by the changes to WM. Thus, only a small number of rules need be matched on each cycle.

3. Many Affected Rules. Conversely, on each cycle many rules may be affected by the changes to WM.

4. Massive Changes. Many changes may be made to WM on each cycle, i.e., the system is not temporally redundant.

5. Restricted Scope Rules. The match of a rule may be restricted to a small subset of WM.

6. Global Tests. The match of a rule may require tests against all of WM, rather than a small subset.

7. Multiple Rule Firings. Several rule instances may be fired on each cycle, rather than only the single, maximally rated instance.

8. Small PM. A PS program may consist of only a few hundred rules.

9. Small WM. Similarly, WM may consist of only a few hundred data elements.

10. Large PM. A PS program may consist of several thousands of rules in PM.

11. Large WM. Similarly, WM may consist of thousands of data elements.

4 Five Algorithms

In this section we outline five different algorithms suitable for direct execution on the DADO machine. Each will be independently discussed, leading to various conclusions about which characteristics they are most appropriate for capturing. Ongoing research aims to verify our conclusions by empirically evaluating their performance for different classes of PS programs.

The reader is assumed to be knowledgeable about the Rete match algorithm (see [Forgy 1979] and [Forgy 1982]). We will thus freely discuss the details of the Rete match when needed without prior explication.

We begin with a brief description of the DADO architecture. (The reader is encouraged to see [Stolfo 1983] and [Stolfo and Miranker 1984] for complete details of the system.)


4.1 The DADO Machine

DADO is a fine-grain, parallel machine where processing and memory are extensively intermingled. A full-scale production version of the DADO machine would comprise a very large (on the order of a hundred thousand) set of processing elements (PE's), each containing its own processor, a small amount (16K bytes, in the current design of the prototype version) of local random access memory (RAM), and a specialized I/O switch. The PE's are interconnected to form a complete binary tree.
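A complete binary tree of 1023 PE's, the size of the DADO2 prototype, has ten levels. The sketch below assumes the conventional heap numbering: the root is PE 1, and the children of PE i are 2i and 2i+1. This is an illustrative choice, not DADO's own addressing scheme. The code computes how cutting the tree at a chosen level yields an upper tree plus a set of disjoint subtrees, the kind of logical division the algorithms below rely on. The function names are hypothetical.

```python
def level_of(pe):
    """Depth of PE `pe` under heap numbering (root = PE 1 at depth 0)."""
    return pe.bit_length() - 1

def is_proper_descendant(pe, ancestor):
    """True if `pe` lies strictly below `ancestor` in the tree."""
    if pe <= ancestor:
        return False
    while pe > ancestor:
        pe //= 2                         # climb to the parent
    return pe == ancestor

def split_at_level(levels, depth):
    """Split a complete binary tree of `levels` levels at `depth`.

    Returns (upper tree, PE's at `depth`, {PE at `depth`: its proper descendants}).
    """
    pes = range(1, 2 ** levels)          # 2**levels - 1 PE's in total
    upper = [p for p in pes if level_of(p) < depth]
    cut = [p for p in pes if level_of(p) == depth]
    below = {r: [p for p in pes if is_proper_descendant(p, r)] for r in cut}
    return upper, cut, below

upper, cut, below = split_at_level(levels=10, depth=5)
print(len(upper), len(cut), len(below[cut[0]]))
```

Cutting the 1023-PE tree at depth 5 gives an upper tree of 31 PE's and 32 PE's at the cut level. Each of those 32 PE's has 30 descendants, so the subtrees hold 960 PE's in all.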

Within the DADO machine, each PE is capable of executing in either of two modes under the control of run-time software. In the first, which we will call SIMD mode (for single instruction stream, multiple data stream), the PE executes instructions broadcast by some ancestor PE within the tree. (SIMD typically refers to a single stream of “machine-level” instructions. In DADO, on the other hand, SIMD is generalized to mean a single stream of remote procedure invocation instructions. Thus, DADO makes more effective use of its communication bus by broadcasting more “meaningful” instructions.) In the second, which will be referred to as MIMD mode (for multiple instruction stream, multiple data stream), each PE executes instructions stored in its own local RAM, independently of the other PE's. A single conventional coprocessor, adjacent to the root of the DADO tree, controls the operation of the entire ensemble of PE's.

When a DADO PE enters MIMD mode, its logical state is changed in such a way as to effectively “disconnect” it and its descendants from all higher-level PE's in the tree. In particular, a PE in MIMD mode does not receive any instructions that might be placed on the tree-structured communication bus by one of its ancestors. Such a PE may, however, broadcast instructions to be executed by its own descendants, providing all of these descendants have themselves been switched to SIMD mode. The DADO machine can thus be configured in such a way that an arbitrary internal node in the tree acts as the root of a tree-structured SIMD device in which all PE's execute a single instruction (on different data) at a given point in time. This flexible architectural design supports multiple-SIMD execution (MSIMD). Thus, the machine may be logically divided into distinct partitions, each executing a distinct task. This capability is the primary source of DADO's speed in executing a large number of primitive pattern matching operations concurrently.

Our comments will be directed towards the DADO2 prototype, consisting of 1023 PE's constructed from commercially available chips. Each PE contains an 8-bit Intel 8751 processor, 16K bytes of local RAM, 4K bytes of local ROM and a semi-custom I/O switch. The DADO2 I/O switch, which is being implemented in semi-custom gate array technology, has been designed to support rapid communication. In addition, a specialized global combinational circuit incorporated within the I/O switch will allow for the very rapid selection of a single distinguished PE from a set of candidate PE's in the tree, a process we call max-resolving. (The max-resolve instruction computes the maximum of a specified register in all PE's in one instruction cycle, which can then be used to select a distinct PE from the entire set of PE's taking part in the operation.) Currently, the 15 processing element version of DADO performs these operations in firmware embodied in its off-the-shelf components.

4.2 Algorithm 1: Full Distribution of PM

In this case, a very small number of distinct rules are distributed to each of the 1023 DADO2 PE's, as well as all WM elements relevant to the rules in question, i.e., only those data elements which match some pattern in the LHS of the rules.

Algorithm 1 alternates the entire DADO tree between MIMD and SIMD modes of operation. The match phase is implemented as an MIMD process, whereas selection and act execute as SIMD operations. In simplest terms, each PE executes the match phase for its own small PS. Only one such PS is allowed to “fire” a rule, however, and the firing is communicated to all other PE's. The algorithm is illustrated in figure 3.

    1. Initialize: Distribute a simple matcher and a few distinct rules to each PE. Distribute to each PE the WM elements relevant to its specific rules. Set CHANGES to initial WM elements.
    2. Repeat the following:
    3. Act: For each WM-change in CHANGES do:
       a. Broadcast WM-change (add or delete element) to all PE's.
       b. Broadcast a command to locally match. [Each PE operates independently in MIMD mode and modifies its local WM. If this is a deletion, it checks its local conflict set and removes rule instances as appropriate. If this is an addition, it matches its rules and modifies its local conflict set accordingly.]
       c. end do;
    4. Find local maxima: Broadcast an instruction to each PE to rate its local matching instances according to some predefined conflict resolution strategy (see [McDermott and Forgy 1978]).
    5. Select: Using the high-speed max-RESOLVE circuit of DADO2, identify a single rule for execution from among all PE's with active rules.
    6. Instantiate: Report the instantiated RHS actions. Set CHANGES to the reported WM-changes.
    7. end Repeat;

Figure 3: Full Distribution of Production Memory.
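The max-resolve operation described above, which could serve the Select step of figure 3, is performed in DADO2 by a combinational circuit in one instruction cycle. The Python sketch below only reproduces its result, as a reduction up the binary tree. It assumes heap numbering of the PE's and breaks ties in favour of the lower-numbered PE. Both are assumptions of this sketch, and its running time says nothing about the hardware.

```python
def max_resolve(registers):
    """Return (winning PE, value) for the maximum register over all PE's.

    registers[i] holds the register of PE i + 1 under heap numbering
    (root = PE 1, children of PE i are 2i and 2i + 1). Each PE combines its
    own value with the winners reported by its children, so the overall
    winner is known at the root after one pass up the tree. Ties go to the
    lower-numbered PE (an assumption of this sketch).
    """
    n = len(registers)

    def winner(pe):
        best = (registers[pe - 1], -pe)       # negated PE number: lower wins ties
        for child in (2 * pe, 2 * pe + 1):
            if child <= n:
                best = max(best, winner(child))
        return best

    value, neg_pe = winner(1)
    return -neg_pe, value

# 1023 PE's, two of which hold the highest rating (PE's 701 and 901).
regs = [0] * 1023
regs[700] = regs[900] = 9
print(max_resolve(regs))
```

Because each PE compares only three values (its own and its two children's winners), the depth of the reduction is the depth of the tree, ten levels for DADO2.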

4.2.1 Discussion of Algorithm 1

We have left the details of the local match routine unspecified at step 3.b. For example, a simple precompiled Rete match network and interpreter may be distributed to each processor. However, a simple naive matching algorithm may well be more appropriate, since only a very small number of rules is present in each PE. Memory considerations may decide this issue: the overhead associated with linking and manipulating intermediate partial matches in a Rete network may be more expensive than direct pattern matching against the local WM on each cycle.


Performance of this algorithm varies with the complexity of the local match. In the best case, the time to match the rule set is bounded by the time to match only a few rules. The worst case is dependent on the maximum number of WM elements accessed during the match of the rules. If a simple naive match is used at each PE, this may require a considerable amount of computation even though the size of the local WM's is limited. Simple hashing of WM may dramatically improve a local naive matching operation, however.
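The closing remark about hashing can be illustrated with a small sketch of a single PE's naive local match. We assume here that WM elements are hashed on their first symbol. This is one simple key choice, not a scheme specified in this paper. The sketch counts element comparisons with and without the hash index. `LocalWM`, `bind` and `naive_match` are hypothetical names.

```python
from collections import defaultdict

def bind(pattern, element, bindings):
    """Extend `bindings` so `pattern` matches `element`, or return None."""
    if len(pattern) != len(element):
        return None
    new = dict(bindings)
    for p, e in zip(pattern, element):
        if p.startswith("="):
            if new.setdefault(p, e) != e:
                return None
        elif p != e:
            return None
    return new

class LocalWM:
    """A PE's local WM, hashed on the first symbol of each element."""

    def __init__(self, elements=()):
        self.buckets = defaultdict(set)
        for e in elements:
            self.add(e)

    def add(self, element):
        self.buckets[element[0]].add(element)

    def delete(self, element):
        self.buckets[element[0]].discard(element)

    def all_elements(self):
        return set().union(*self.buckets.values())

    def candidates(self, pattern):
        # A constant first symbol selects one bucket; a variable needs a full scan.
        if pattern[0].startswith("="):
            return self.all_elements()
        return self.buckets.get(pattern[0], set())

def naive_match(lhs, wm, use_hash=True):
    """Naive conjunctive match; returns (bindings, number of element comparisons)."""
    partial, comparisons = [{}], 0
    for pattern in lhs:
        pool = wm.candidates(pattern) if use_hash else wm.all_elements()
        nxt = []
        for b in partial:
            for e in pool:
                comparisons += 1
                b2 = bind(pattern, e, b)
                if b2 is not None:
                    nxt.append(b2)
        partial = nxt
    return partial, comparisons

LHS = [("Goal", "Clear-top-of", "Block"), ("Isa", "=x", "Block"),
       ("Isa", "=y", "Block"), ("On-top-of", "=y", "=x")]
wm = LocalWM([("Goal", "Clear-top-of", "Block"), ("Isa", "A", "Block"),
              ("Isa", "B", "Block"), ("On-top-of", "B", "A")]
             + [("Color", f"b{i}", "Red") for i in range(20)])
print(naive_match(LHS, wm)[1], naive_match(LHS, wm, use_hash=False)[1])
```

Both variants find the same single instance. The hashed match skips the 20 irrelevant "Color" elements entirely, which is the kind of saving the text anticipates for a naive local matcher.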

We conclude that this algorithm is probably best suited to implementing PS programs characterized by:

1. Temporal redundancy.
3. Many affected rules.
6. Global tests of WM.
8. Small PM.

Note, also, that a WM element may be replicated in a number of PE's, depending on the average number of pattern elements common to the rules stored in different PE's. The total storage required for WM may therefore be quite large.

The most serious drawback of this algorithm is the case where a local WM is too large to be conveniently stored in a PE. Clearly, characteristic 5 is appropriate for this algorithm only in the presence of characteristic 9, small WM. Multiple rule firings (characteristic 7) may also be possible. A discussion of this case is deferred to a later section.

4.3 Algorithm 2: Original DADO Algorithm

The original DADO algorithm detailed in [Stolfo 1983] makes direct use of the machine's ability to execute in both MIMD and SIMD modes of operation at the same point in time. The machine is logically divided into three conceptually distinct components: a PM-level, an upper tree and a number of WM-subtrees. The PM-level consists of MIMD-mode PE's executing the match phase at one appropriately chosen level of the tree. A number of distinct rules are stored in each PM-level PE. The WM-subtrees rooted by the PM-level PE's consist of a number of SIMD mode PE's collectively operating as a hardware content-addressable memory. WM elements relevant to the rules stored at the PM-level root PE are fully distributed throughout the WM-subtree. The upper tree consists of SIMD mode PE's lying above the PM-level, which implement synchronization and selection operations.

It is probably best to view WM as a distributed relation. Each WM-subtree PE thus stores relational tuples. The PM-level PE's match the LHS's of rules in a manner similar to processing relational queries. In terms of the Rete match, intracondition tests of pattern elements in the LHS of a rule are executed as relational selection, while intercondition tests correspond to equi-join operations. Each PM-level PE thus stores a set of relational tests compiled from the LHS of the rule set assigned to it. Concurrency is achieved between PM-level PE's as well as in accessing PE's of the WM-subtrees. The algorithm is illustrated in figure 4.

4.3.1 Discussion of Algorithm 2

This algorithm was specifically designed for PS programs characterized as:

4. Non-temporally redundant (massive changes to WM on each cycle).
6. Global tests of WM.
11. Large WM.

Since the WM-subtrees operate as content-addressable memories, massive changes to WM can be processed in parallel on each cycle, and tests of global scope over WM are handled efficiently. For example, a PM-level of 32 PE's in the 1023-PE DADO2 leaves nearly 1000 PE's in the WM-subtrees, so that nearly 1000 WM elements may be accessed in parallel at a given point in time. Conversely, when WM is small, the storage of the WM-subtrees is underutilized.

While attempting to implement temporally redundant systems, Algorithm 2 may recompute much of its match results calculated on previous cycles. This need not be the case, however, if we modify Algorithm 2 to incorporate many of the capabilities of the Rete match.
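The relational reading of Algorithm 2 given above can be made concrete in a sequential sketch. Each pattern element becomes a relational selection over the WM relation (its intracondition tests), and variables shared between pattern elements become equi-joins (the intercondition tests). In Algorithm 2 the selections would be executed associatively by the WM-subtree PE's and the joins by the PM-level PE. Here both run in one Python process, and the WM representation and the names `select`, `equi_join` and `match_lhs` are our own assumptions.

```python
from collections import defaultdict
from functools import reduce

def select(pattern, relation):
    """Relational selection: one binding row per WM tuple that passes the
    intracondition tests (constants, and repeated variables within a pattern)."""
    rows = []
    for t in relation:
        if len(t) != len(pattern):
            continue
        row = {}
        for p, v in zip(pattern, t):
            if p.startswith("="):
                if row.setdefault(p, v) != v:
                    break
            elif p != v:
                break
        else:                                  # no test failed
            rows.append(row)
    return rows

def equi_join(left, right):
    """Intercondition tests: hash equi-join on the variables both sides share."""
    if not left or not right:
        return []
    shared = sorted(left[0].keys() & right[0].keys())
    index = defaultdict(list)
    for r in right:
        index[tuple(r[v] for v in shared)].append(r)
    return [{**l, **r} for l in left
            for r in index.get(tuple(l[v] for v in shared), [])]

def match_lhs(lhs, relation):
    """Evaluate an LHS as a chain of selections joined left to right."""
    return reduce(equi_join, [select(p, relation) for p in lhs])

WM = {("Goal", "Clear-top-of", "Block"), ("Isa", "A", "Block"),
      ("Isa", "B", "Block"), ("On-top-of", "B", "A")}
LHS = [("Goal", "Clear-top-of", "Block"), ("Isa", "=x", "Block"),
       ("Isa", "=y", "Block"), ("On-top-of", "=y", "=x")]
print(match_lhs(LHS, WM))
```

A join with no shared variables degenerates into a cross product, as with the two Isa patterns here. This is one reason the order in which pattern elements are joined matters.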


    1. Initialize: Distribute a match routine and a partitioned subset of rules to each PM-level PE. Set CHANGES to initial WM elements.
    2. Repeat the following:
    3. Act: For each WM-change in CHANGES do:
       a. Broadcast WM-change to the PM-level PE's and an instruction to match.
       b. The match phase is initiated by each PM-level PE:
          i. If the WM-change is an addition, the PM-level PE determines whether the element is relevant to its rules (i.e., matches some pattern element) and, if so, stores it in a free PE of its WM-subtree. If it is a deletion, the element is deleted from the WM-subtree.
          ii. Each pattern element of the PM-level PE's rules is broadcast to its WM-subtree for matching (relational selection). Variable bindings reported to the PM-level PE are used sequentially to match subsequent pattern elements (relational equi-join).
          iii. A local conflict set of rule instances is formed, each with a priority rating, and stored in a distributed manner within the WM-subtree.
       c. end do;
    4. Upon termination of the match phase, the PM-level PE's synchronize with the upper tree.
    5. Select: The max-RESOLVE circuit is used to identify the maximally rated instance.
    6. Report the instantiated RHS of the winning instance to the root PE.
    7. Set CHANGES to the reported WM-changes.
    8. end Repeat;

Figure 4: Original DADO Algorithm.

Simple changes may dramatically improve the algorithm. For example, rather than iterating over each pattern element in each rule as in step 3.b.ii, we may execute the match only for those rules affected by new WM changes. The selection of affected rules can be achieved quickly using the WM-subtree as an associative memory. By distributing pattern elements as relational tuples in a manner similar to WM, associative probing (relational selection), which is faster than hashing, can be used to identify the affected rules. Consideration of these techniques led us to investigate Rete for direct implementation on DADO2. Algorithms 3 and 4 detail this approach.

4.4 Algorithm 3: Miranker's TREAT Algorithm

Daniel Miranker has invented an algorithm which modifies Algorithm 2 to include several of the features of the Rete match for saving state. The TREe Associative Temporally redundant (TREAT) algorithm [Miranker 1984] makes use of the same logical division of the DADO tree as in Algorithm 2. However, the state of the previous match operation is saved in distributed data structures within the WM-subtrees.

TREAT views the pattern elements in the LHS of rules as relational algebra terms, as in Algorithm 2. Thus, the evaluation of such relational algebra tests is also executed within the WM-subtrees. State is saved in the form of distributed Rete alpha memories in each WM-subtree, corresponding to partial selections of tuples matching the various pattern elements. Rule instances in the conflict set computed on previous cycles are also stored in a distributed manner within the WM-subtrees. These two additions substantially improve the performance of the algorithm. (The implementation, study and detailed analysis of TREAT forms a major part of Daniel Miranker's Ph.D. thesis.)

Compared to Algorithm 2, TREAT should perform substantially better for temporally redundant systems. (We note that Anoop Gupta of Carnegie-Mellon University independently analyzed a similar algorithm in [Gupta 1983]. Gupta's analysis of Algorithm 2, however, depends on certain assumptions that lead to misleading results.)

Another aspect of TREAT is the clever manner in which relevancy is computed. Pattern elements are first distributed to the WM-subtrees. When a new WM element is added to the system, a simple match at each WM-subtree PE determines the set of rules at the PM-level which are affected by the change. The rules so identified are subsequently matched by the PM-level PE, restricting the scope of the match to a smaller set of rules than would otherwise be possible with Algorithm 2. The algorithm is outlined in figure 5.

    1. Initialize: Distribute a simple matcher to each PE. Distribute a compiled set of rules to each PM-level PE. Distribute the pattern elements appearing in the LHS of the rules of each PM-level PE to the appropriate PE's of its WM-subtree. Set CHANGES to initial WM elements.
    2. Repeat the following:
    3. Act: For each WM-change in CHANGES do:
       a. Broadcast WM-change to the WM-subtree PE's.
       b. Broadcast an instruction to update WM accordingly. [If this is a deletion, the element is deleted; otherwise the element is added.]
       c. If this WM-change is a deletion, broadcast an instruction to delete any affected conflict set instances calculated on previous cycles.
       d. At each PM-level PE do:
          i. Broadcast to the WM-subtree PE's an instruction to match the WM-change against their stored pattern elements (relational selection). [Each WM-subtree PE determines whether the element is relevant to its pattern element, and the alpha memories are updated accordingly.]
          ii. Report the affected rules to the PM-level PE, forming the list L.
          iii. Order the pattern elements of the rules in L appropriately.
          iv. For each rule in L do:
              1. Match the remaining pattern elements of the rule (relational equi-join).
              2. For each instance found, store it in the WM-subtree with a priority rating.
              3. end;
          v. end;
       e. end do;
    4. Select: Use max-RESOLVE to find the maximally rated instance in the tree.
    5. Report the winning instance.
    6. Set CHANGES to the instantiated RHS of the winning instance.
    7. end Repeat;

Figure 5: The TREAT Algorithm.

4.4.1 Discussion of Algorithm 3

The TREAT algorithm is a refinement of Algorithm 2 incorporating temporal redundancy. Hence, TREAT is best suited for PS programs characterized as:

1. Temporally redundant.
3. Many rules affected on each cycle.
6. Global tests of WM (these are also efficiently handled).
8. Small PM.
11. Large WM.

We note, though, that minor changes allow TREAT to implement Algorithm 2 directly (by setting L to all of the rules at the PM-level in step 3.d.ii and ignoring step 3.d.i). Thus, TREAT may also efficiently execute:

4. Non-temporally redundant systems.

In step 3.d.iii, TREAT also implements a simple but useful strategy. When iterating over each of the rules in L affected by recent changes in WM, those pattern elements with the smallest alpha memories are processed first. This technique tends to process the join operations quickly by filtering out many potentially failing partial joins.

As noted above, Gupta's analysis of a TREAT-like algorithm, as well as subsequent analysis performed by Miranker [1984], show TREAT to be highly efficient compared to Algorithm 2 executing temporally redundant systems.
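The TREAT ideas described in this section can be sketched sequentially in Python. Three features are modeled: alpha memories per pattern element, relevancy (only rules whose pattern elements accept a new WM element are rematched), and processing the remaining pattern elements smallest alpha memory first. Stored conflict set instances are also dropped when a WM element they use is deleted. In TREAT these structures are distributed across the WM-subtrees; here they are plain Python sets. The class `TreatSketch` and its methods are hypothetical names, and the sketch is not Miranker's implementation.

```python
def bind(pattern, element, bindings):
    """Extend `bindings` so `pattern` matches `element`, or return None."""
    if len(pattern) != len(element):
        return None
    new = dict(bindings)
    for p, e in zip(pattern, element):
        if p.startswith("="):
            if new.setdefault(p, e) != e:
                return None
        elif p != e:
            return None
    return new

class TreatSketch:
    """Sequential sketch of TREAT-style state saving (hypothetical names)."""

    def __init__(self, rules):
        self.rules = rules                 # rule name -> list of pattern elements
        # One alpha memory per (rule, pattern position): WM elements passing its selection.
        self.alpha = {(r, i): set() for r, lhs in rules.items() for i in range(len(lhs))}
        self.conflict_set = set()          # (rule name, tuple of matched WM elements)

    def add(self, element):
        # Relevancy: which pattern elements (hence which rules) does the element affect?
        affected = [k for k in self.alpha
                    if bind(self.rules[k[0]][k[1]], element, {}) is not None]
        for k in affected:
            self.alpha[k].add(element)
        for rule, pos in affected:         # match only the affected rules
            self._seeded_join(rule, pos, element)

    def delete(self, element):
        for memory in self.alpha.values():
            memory.discard(element)
        # Drop stored instances computed on previous cycles that used the element.
        self.conflict_set = {c for c in self.conflict_set if element not in c[1]}

    def _seeded_join(self, rule, seed, element):
        lhs = self.rules[rule]
        # Remaining pattern elements, smallest alpha memory first.
        order = sorted((i for i in range(len(lhs)) if i != seed),
                       key=lambda i: len(self.alpha[(rule, i)]))
        partial = [(bind(lhs[seed], element, {}), {seed: element})]
        for i in order:
            partial = [(b2, {**used, i: e})
                       for b, used in partial
                       for e in self.alpha[(rule, i)]
                       if (b2 := bind(lhs[i], e, b)) is not None]
            if not partial:                # an empty join ends the work early
                break
        for _, used in partial:
            self.conflict_set.add((rule, tuple(used[i] for i in range(len(lhs)))))

CLEAR_TOP = [("Goal", "Clear-top-of", "Block"), ("Isa", "=x", "Block"),
             ("Isa", "=y", "Block"), ("On-top-of", "=y", "=x")]
t = TreatSketch({"clear-top": CLEAR_TOP})
for e in [("Goal", "Clear-top-of", "Block"), ("Isa", "A", "Block"),
          ("Isa", "B", "Block"), ("On-top-of", "B", "A")]:
    t.add(e)
print(t.conflict_set)
t.delete(("On-top-of", "B", "A"))
print(t.conflict_set)
```

Each addition triggers a join seeded with the new element alone, so instances found on earlier cycles are never recomputed. When an alpha memory is empty, the smallest-first ordering lets the join stop immediately.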

4.5 Algorithm 4: Fine-grain Rete

A Rete network compiled from the LHS's of a rule set consists of a number of simple nodes encoding match operations. Tokens, representing WM modifications, flow through the network in one direction and are processed by each node lying on their traversed paths. Fortunately, the maximum fan-in of any node in a Rete network is two. Hence, a Rete network can be represented as a binary tree (with some minimal amount of node splitting).

This observation leads to Algorithm 4, whereby a logical Rete network is embedded on the physical DADO binary tree structure. In the simplest case, leaf nodes of the DADO tree store and execute the initial linear chains of one-input test nodes, whereas internal DADO PE's execute two-input node operations. The physical connections between processors correspond to the logical data flow links in the Rete network. The entire DADO machine operates in MIMD mode while executing this algorithm, behaving much like a pipelined data flow architecture. Algorithm 4 is illustrated in figure 6.

4.5.1 Discussion of Algorithm 4

Since this algorithm is a direct implementation of the Rete match, it is most suitable for PS programs characterized as:

1. Temporally redundant.
2. Few affected rules.
9. Small WM.
10. Large PM.

We believe that Rete networks with more than 1023 nodes can be processed on DADO2 by overlaying several network nodes on each PE, the overlayed nodes being processed in turn. However, since each Rete node requires storage for intermediate partial matches (stored in alpha and beta memories), the limited storage capacity of a DADO2 PE may restrict the size of WM.

Although overlayed Rete networks would be processed sequentially on DADO2, significant performance improvements can be achieved by a natural pipelining effect. Immediately following a successful match and communication at a node, the next two-input test from the overlayed network is initiated. Thus, while the parent node is processing the first network node, its children are proceeding with their tests of the second overlayed network node.
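The benefit of the pipelining effect described above can be estimated with an idealized model. The model is an assumption of this sketch, not a measurement: every level of the tree takes one time step per item, and a level accepts the next item as soon as it passes the previous one upward. An item may be an overlayed network node or a WM token. Under that model, k items crossing a tree of depth d need k·d steps when processed one after another, but only d + k − 1 steps when pipelined. The simulation below checks this. Variable two-input node costs and communication delays are ignored, and the function names are hypothetical.

```python
def sequential_steps(num_items, depth):
    """Each item must clear all `depth` levels before the next one starts."""
    return num_items * depth

def pipelined_steps(num_items, depth):
    """Simulate items entering at the leaves one step apart; on every step each
    in-flight item advances one level. Returns the step at which the last
    item leaves the root."""
    remaining, entered, steps = [], 0, 0
    while entered < num_items or remaining:
        if entered < num_items:            # a new item starts at the leaves
            remaining.append(depth)
            entered += 1
        steps += 1
        # Advance every in-flight item one level; items with one level left finish.
        remaining = [r - 1 for r in remaining if r > 1]
    return steps

# DADO2's 1023-PE tree has 10 levels; e.g. 8 overlayed networks or WM tokens.
print(sequential_steps(8, 10), pipelined_steps(8, 10))
```

In this model the pipelined cost grows by one step per additional item rather than by a full tree depth.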

A

second

In this cker

the &tire

RHS action

performance

as well.

specification

is broadcast

at once to the DAD0

leaf PE’s

Immediately

following

the conclusion

of the

at step 3.a.

first match

operation

and communication

of the first WM

noted above, Gupta's analysis of a TREAT-like as well as subsequent analysis performed by [1984], show TREAT to be highly efficient


1. Initialize:

Map

network

on

provided

with

and

the

load

DAD0

the

the

compiled

Each

tree.

appropriate

network

information

details).

Set

match

code

[Forgy

(see

CHANGES

Rete

node

is

and

19821

to

for

initial

WM

1. Initialize.

2. Repeat

the

For

in CHANGES

WM-change

to

Distribute

do;

of the

a.

b.

Broadcast

WM-change

the

leaf PE’s.

DAD0

Broadcast

an

one-input

The

token.

for

root

of

are

tests,

the

The

to

the

to the

PE

is provided

the

with

control

the

the

chosen

Set

processor.

instantiated

completion

SIMD

of

PE

of

PS

(PS-level),

Algorithm

program

each

PS-level.

instruction

in

their

to each

PS-level

PE

MIMD

mode.

(Upon

each

respective

reconnects

to

programs,

the

tree

above

PE’s

are

in

to

in

mode.)

3. Repeat

a.

2.

to

the

following.

Test

if

all

PS-level

SIMD

mode.

End Repeat;
4. Execution Complete. Halt.

Figure 7: Simple Multiple PS Program Execution.

RHS.

In the cases where various PS-level PE's need to communicate results with each other, step 3 is replaced with appropriate code sequences to report and broadcast values from the PS-level in the proper manner. Each of the programs executed by PS-level PE's is first modified to synchronize as necessary with the root PE to coordinate the communication acts, for example at termination of the Act phase.
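This synchronization can be sketched with a barrier. Each PS-level PE runs its own program, posts its results at the end of every Act phase, and then waits. The barrier action, which Python runs in exactly one thread once all parties have arrived and before any is released, stands in for the root PE collecting and redistributing those results. Modeling PEs as threads with `threading.Barrier`, and the names `N_PES`, `N_CYCLES`, and `root_exchange`, are assumptions for illustration only; DADO PEs are separate processors.

```python
import threading
from typing import Dict, List

N_PES = 3       # hypothetical number of PS-level PEs
N_CYCLES = 2    # hypothetical number of recognize-act cycles per program

mailbox: Dict[int, List[str]] = {pe: [] for pe in range(N_PES)}  # results posted per cycle
exchanged: List[List[str]] = []  # what the "root" collected after each Act phase
lock = threading.Lock()


def root_exchange() -> None:
    """Runs once per barrier trip: the stand-in root gathers reported results
    (a real root would then broadcast them back down) and clears the mailboxes."""
    exchanged.append(sorted(msg for msgs in mailbox.values() for msg in msgs))
    for msgs in mailbox.values():
        msgs.clear()


barrier = threading.Barrier(N_PES, action=root_exchange)


def ps_level_pe(pe: int) -> None:
    for cycle in range(N_CYCLES):
        # ... match / select / act on this PE's own rule set would go here ...
        with lock:
            mailbox[pe].append(f"pe{pe}-cycle{cycle}")
        barrier.wait()  # synchronize with the root at termination of the Act phase


threads = [threading.Thread(target=ps_level_pe, args=(pe,)) for pe in range(N_PES)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(exchanged)
```

Because the barrier action completes before any thread is released, no PE can post results for the next cycle while the root is still gathering the current one.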

Repeat;

Figure 6: Fine-grain Rete Algorithm.

token, the leaf PE's initiate processing of the second WM token. Hence, as a WM token flows up the DADO tree, subsequent WM tokens flow close behind at lower levels of the tree in pipeline fashion.
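A back-of-the-envelope sketch of why this pipelining helps. It relies on an idealized assumption that is ours, not the paper's: every tree level takes one time step per token and holds one token at a time. Token k then enters the leaf level at step k and rises one level per step, so n tokens clear a tree of d levels in d + n - 1 steps rather than n * d.

```python
from typing import List


def pipeline_schedule(n_tokens: int, levels: int) -> List[List[int]]:
    """Level occupied by each token at each step (0 = leaves, -1 = not in tree).

    Idealized: token k enters the leaf level at step k and moves up one
    level per step, so later tokens follow close behind earlier ones.
    """
    total_steps = levels + n_tokens - 1
    return [[t - k if 0 <= t - k < levels else -1 for k in range(n_tokens)]
            for t in range(total_steps)]


def unpipelined_steps(n_tokens: int, levels: int) -> int:
    """Steps if each token must reach the root before the next one starts."""
    return n_tokens * levels


sched = pipeline_schedule(n_tokens=3, levels=4)
for t, row in enumerate(sched):
    print(f"step {t}: token levels {row}")
print(len(sched), "steps pipelined vs", unpipelined_steps(3, 4), "unpipelined")
```

In a real Rete network a two-input node may emit zero or many tokens per input, so actual timings will be less regular than this model.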

4.0

execution

incorporate

DAD0

processor).

root

begin

to

physical

changes

in

at the

an

the

immediately

until

reports

the

to

which

PE’s

appropriate

2. Broadcast

PS-level

PM-level

the

the

DAD0

System-level

their

passing

two-input

maintained

new

waiting

by

tokens

repeated

DAD0

execute

the

communicated

their

set

from

CHANGES

4. end

idle

to

do;

The

instance

processors

computed

Those

is then

control

Select:

nodes

ancestors

conflict

end

on

processing

process

C.

sequences

lay

to

PE’s

leaf

tests

immediate

begin

all

the

results

descendants.

to

token)

test

interior

match

one-input

Rete

instruction

(First,

Match.

their

(a

divide

Production

similar

following:

each

Logically

static

a

elements.

3. Act:

algorithmic

process

executed

in the

controlled

by some

upper part of the tree. The simplest case is represented by the procedure illustrated in figure 7, which is similar in some respects to Algorithm 2.
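The legible parts of figure 7 suggest a loop of roughly this shape. The root starts every PS-level PE on its own program in MIMD mode. Each PE switches back to SIMD mode (reconnecting to the tree above) when its program finishes, and the root repeatedly tests whether all PS-level PEs are in SIMD mode before halting. This sketch models PEs as threads and modes as strings; `run_ps_level` and its parameters are hypothetical.

```python
import threading
import time
from typing import Callable, Dict, List


def run_ps_level(programs: List[Callable[[], str]],
                 poll_interval: float = 0.01) -> Dict[int, str]:
    """Run one program per PS-level PE in MIMD mode; halt when all are back in SIMD."""
    mode: Dict[int, str] = {}
    results: Dict[int, str] = {}
    lock = threading.Lock()

    def pe_body(pe: int, program: Callable[[], str]) -> None:
        out = program()
        with lock:
            results[pe] = out
            mode[pe] = "SIMD"  # reconnect to the tree above on completion

    threads = []
    for pe, program in enumerate(programs):
        mode[pe] = "MIMD"  # broadcast: execute your program independently
        threads.append(threading.Thread(target=pe_body, args=(pe, program)))
    for t in threads:
        t.start()

    # Repeat: test if all PS-level PEs are in SIMD mode.
    while True:
        with lock:
            if all(m == "SIMD" for m in mode.values()):
                break
        time.sleep(poll_interval)
    for t in threads:
        t.join()
    return results  # execution complete; halt


programs = [lambda i=i: f"program {i} done" for i in range(3)]
results = run_ps_level(programs)
print(results)
```

Polling mirrors the "test if all ... SIMD mode" step; a real root would read the PEs' mode status through the tree rather than shared memory.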

Algorithm 5: Multiple Asynchronous Execution

In our discussion so far, no mention was made about multiple rule firings. We may view this characteristic as:
- multiple, independently executing PS programs, or
- executing multiple conflict set rules of the same PS program concurrently.

In this regard we offer not a single algorithm, but rather an observation that may be put to practical use in each of the above-mentioned algorithms. We note that any DADO PE may be viewed as a root of a DADO machine. Thus, any algorithm operating at the physical root of DADO may also be executed by some descendant node. Hence, any of the aforementioned algorithms can be executed at various sites in the machine concurrently! (This was noted in [Stolfo and Shaw 1982].) This coarse level of parallelism, however, will need to be

In addition to concurrent execution of multiple PS programs, methods may be employed to concurrently execute portions of a single PS program. These methods are intimately tied to the way rules are partitioned in the tree. Subsets of rules may be constructed by a static analysis of PM, separating those rules which do not directly interact with each other. In terms of the match problem-solving paradigm, for example, it may be convenient to think of independent subproblems and the methods implementing their solution (see [Newell 1973]). Each such method may be viewed as a high-level subroutine represented as an independent rule set rooted by some internal node of DADO. Algorithm 1, for example, may be applied in parallel for each rule set in question.

Asynchronous execution of these subroutines proceeds in a straightforward manner. The complexity arises when one subset of rules infers data required by other rule sets. The coordination of these communication acts is the focus of our ongoing research. Space does not permit a complete specification of this approach, and thus the reader is encouraged to see [Ishida 1984] for details of our initial thinking in this direction.
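One way to picture the static analysis and the subsequent placement is sketched below. It rests on a simplifying assumption of ours, since the paper does not specify the analysis: two rules interact whenever they mention a common WM element class, directly or through a chain of other rules. The sketch groups rules with a union-find pass, then roots each independent rule set at a distinct internal PE whose subtree acts as a smaller DADO machine. Rule names, class names, and the heap numbering of PEs are illustrative.

```python
from typing import Dict, List, Set


def partition_rules(rules: Dict[str, Set[str]]) -> List[Set[str]]:
    """Group rules into subsets that share no WM element class.

    `rules` maps each rule name to the WM classes its LHS tests or its RHS
    modifies. Rules connected through shared classes land in the same subset.
    """
    parent = {r: r for r in rules}

    def find(r: str) -> str:
        while parent[r] != r:
            parent[r] = parent[parent[r]]  # path halving
            r = parent[r]
        return r

    first_user: Dict[str, str] = {}  # WM class -> first rule seen using it
    for rule, classes in rules.items():
        for c in classes:
            if c in first_user:
                parent[find(rule)] = find(first_user[c])
            else:
                first_user[c] = rule

    groups: Dict[str, Set[str]] = {}
    for rule in rules:
        groups.setdefault(find(rule), set()).add(rule)
    return list(groups.values())


def subtree(root: int, n_pes: int) -> List[int]:
    """PEs in the subtree rooted at `root` of a heap-numbered tree of n_pes PEs."""
    members, frontier = [], [root]
    while frontier:
        pe = frontier.pop()
        if pe <= n_pes:
            members.append(pe)
            frontier += [2 * pe, 2 * pe + 1]
    return sorted(members)


def assign_to_subtrees(groups: List[Set[str]], level: int) -> Dict[int, Set[str]]:
    """Root each independent rule set at a distinct PE on the given tree level."""
    roots = list(range(2 ** level, 2 ** (level + 1)))
    if len(groups) > len(roots):
        raise ValueError("more independent rule sets than subtrees at this level")
    return dict(zip(roots, groups))


rules = {
    "r1": {"Goal", "On-top-of"},
    "r2": {"On-top-of", "Isa"},
    "r3": {"Task", "Schedule"},
    "r4": {"Task"},
}
placement = assign_to_subtrees(partition_rules(rules), level=1)
for root, rule_set in placement.items():
    print(f"PE {root} roots {sorted(rule_set)} on PEs {subtree(root, 15)}")
```

Under this conservative test, rule sets that pass inferred data to one another always share a class and are merged into one group, so the sketch sidesteps rather than solves the coordination problem described above.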

5 Conclusion

References

We have outlined five abstract algorithms for the parallel execution of PS programs on the DADO machine and indicated the PS program characteristics for which each is best suited. We summarize our results in tabular form as follows:

Algorithm
1. Fully Distributed PM
2. Original DADO
3. Miranker's TREAT
4. Fine-grain Rete
5. Multiple Asynchronous

Davis,

and

J.

Forgy,

1, 3, 5, 7, 9, 11

C.

L.

Mellon

1, 3, 4, 6, 7, 8, 11

1, 2, 5, 7, 9, 10

Forgy

to all cases.

the

On

Ph.D.

C.

L.

Gupta,

A

Science,

Carnegie-Mellon

and

University,

and

Report,

Columbia

University,

University,

J.,

and

J.,

and

Report,

Department

University,

Computers).


D. P.

Maclaine:

1984.

a

Shaw.

Computer.

Science,

DADO:

of

Technical

Columbia

A

Tree-structured

Production

Computer

Artificial

August,

The DAD0

System-level

Systems.

on

University,

(Submitted

Technical

to AI Journal).

Conference

of

Programming

Thesis.

for

Miranker.

Control

Department

(Submitted

Carnegie-Mellon

System

as

Computer

National

Intelligence,

S.

E.

of

Intelligence.

Parallel

1983.

RETE.

Science,

1973.

Ph.D.

of

DAD0

Information

Vi’aual

University,

Architecture

Proceedings

Stolfo

D.

Computer

Models

(Ed.),

Systems

DAD0

the

and

1984.

Press,

1976.

August

Machine

of

Artificial

Department

for

TREAT

of

Systems:

Academic

The

J.

Systems,

Estimates

Chase

Science,

Report,

Conflict

Hayes-Roth

Inference

Carnegie-Mellon

Computer

S.

System

and

April

W.

for

Report,

Science,

Production

Department

Production

M.

Computer

Waterman

Comparison

In

Language

S.

In

Production

A.

Rychener,

of

of

Machines.

1978.

Technical

Processing,

Stolfo

Forgy.

A

Structures.

Firing

1984.

Machine.

Newell,

on

Computer

1983.

Simultaneous

Performance

P.

Systems

of

Tree-structured

on

Many

Problem.

Production

Department

Pattern-directed

D.

the

19, 17-37.

Stolfo.

C.

Press,

for

University,

Strategies.

(EW,

Academic

Science,

Matching

Department

Report,

Columbia

Resolution

Stolfo

J.

Technical

Miranker

1982,

Rules

J.

Carnegie-

Computer

Algorithm

Report,

S.

Production

McDermott,

of

OPS5

Technical

T.,

Report,

Pattern

Intelligence,

DA-DO.

Ishida

In this paper, we have outlined our expectations concerning the suitability of each of the algorithms for a variety of possible PS programs. We expect our findings to substantiate our claims, and we intend to demonstrate this with working examples in the near future.

Fast

Object

Implementing

A.

of

Implementation

Technical

Department

Rete:

Artificial

Computer

Thesis.

Pattern/Many

Of the five reported algorithms, only the original DADO algorithm (number 2) has been carefully studied analytically. The performance statistics of the remaining four algorithms have yet to be analyzed in detail. However, many of these performance statistics cannot be analyzed without specific examples and detailed implementations. Working in close collaboration with researchers at AT&T Bell Laboratories, we intend over the next year of our research to implement each of the stated algorithms on a working prototype of DADO.

of

1975.

Efficient

Systems.

Production

of

Department

University,

University,

1979.

Overview

Report,

Stanford

Production

3, 4, 6, 7, 8, 11

An

King.

Technical

Science,

Characteristics

Applies

R.

Systems.

1982.

Production

Details.

Technical

Science,

Columbia

to IEEE

Transactions

on