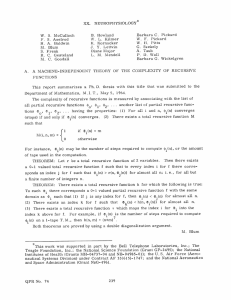

Lecture Notes No. 2


2.160 Identification, Estimation, and Learning


February 13, 2006

2. Parameter Estimation for Deterministic Systems

2.1 Least Squares Estimation

[Figure: block diagram. Inputs u1, u2, …, um enter a deterministic system with parameter θ, which produces the output y.]

Linearly parameterized model (input-output):

$$y = b_1 u_1 + b_2 u_2 + \cdots + b_m u_m$$

Parameters to estimate: $\theta = [b_1 \; \cdots \; b_m]^T \in R^m$

Observations: $\varphi = [u_1 \; \cdots \; u_m]^T \in R^m$

$$y = \varphi^T \theta \tag{1}$$

The problem is to find the parameters $\theta = [b_1 \; \cdots \; b_m]^T$ from observation data:

$$\{\varphi(1), y(1)\},\ \{\varphi(2), y(2)\},\ \ldots,\ \{\varphi(N), y(N)\} \;\longrightarrow\; \theta$$

The system may be a linear dynamic system, e.g.

$$y(t) = b_1 u(t-1) + b_2 u(t-2) + \cdots + b_m u(t-m)$$

$$\varphi(t) = [u(t-1),\ u(t-2),\ \ldots,\ u(t-m)]^T \in R^m$$

or a nonlinear dynamic system, e.g.

$$y(t) = b_1 u(t-1) + b_2 u(t-2)\,u(t-1)$$

$$\varphi(t) = [u(t-1),\ u(t-2)\,u(t-1)]^T$$

Note that the parameters $b_1, b_2$ enter the input-output equation linearly, even though the second model is nonlinear in the input.
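The two regressor definitions above can be sketched in a few lines of Python. This is only an illustration: the function names, the input samples in `u`, and the parameter values in `theta` are hypothetical and not part of the notes. The list `u` is indexed so that `u[t]` holds u(t).

```python
def fir_regressor(u, t, m):
    """phi(t) = [u(t-1), ..., u(t-m)]^T for the linear dynamic example."""
    return [u[t - k] for k in range(1, m + 1)]


def nonlinear_regressor(u, t):
    """phi(t) = [u(t-1), u(t-2)*u(t-1)]^T for the nonlinear example."""
    return [u[t - 1], u[t - 2] * u[t - 1]]


def model_output(phi, theta):
    """y = phi^T theta, Eq. (1)."""
    return sum(p * b for p, b in zip(phi, theta))


u = [1.0, 2.0, 3.0, 4.0]   # hypothetical input samples u(0), ..., u(3)
theta = [0.5, -1.0]        # hypothetical parameters [b1, b2]

phi_lin = fir_regressor(u, 3, 2)      # [u(2), u(1)]
phi_nl = nonlinear_regressor(u, 3)    # [u(2), u(1)*u(2)]
y_lin = model_output(phi_lin, theta)
y_nl = model_output(phi_nl, theta)
```

In both cases the output is the same inner product of a regressor with the parameter vector; only the construction of the regressor differs.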

Using an estimated parameter vector $\hat{\theta}$, we can write a predictor that predicts the output from the inputs:

$$\hat{y}(t \mid \hat{\theta}) = \varphi^T(t)\,\hat{\theta} \tag{2}$$


We evaluate the predictor's performance by the mean squared error

$$V_N(\theta) = \frac{1}{N} \sum_{t=1}^{N} \left( \hat{y}(t \mid \theta) - y(t) \right)^2 \tag{3}$$

Problem: Find the parameter vector $\hat{\theta}$ that minimizes the squared error:

$$\hat{\theta} = \arg\min_{\theta} V_N(\theta) \tag{4}$$
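As a small illustration of the criterion, the following sketch evaluates the squared-error cost for two candidate parameter vectors. The data and the function names are made up for the example; the outputs in `ys` were generated from the parameter vector [2, 3], so the cost vanishes there.

```python
def predict(phi, theta):
    """Predictor y_hat(t | theta) = phi(t)^T theta."""
    return sum(p * b for p, b in zip(phi, theta))


def squared_error(phis, ys, theta):
    """V_N(theta) = (1/N) * sum_t (y_hat(t | theta) - y(t))^2."""
    n = len(ys)
    return sum((predict(phi, theta) - y) ** 2 for phi, y in zip(phis, ys)) / n


# Hypothetical data generated from theta = [2, 3] without noise.
phis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys = [2.0, 3.0, 5.0]

v_true = squared_error(phis, ys, [2.0, 3.0])  # cost at the generating parameters
v_off = squared_error(phis, ys, [1.0, 3.0])   # cost when b1 is off by one
```

With `b1` off by one, the first and third predictions each miss by one, so the cost is 2/3; at the generating parameters it is zero.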

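Differentiating $V_N(\theta)$ and setting the derivative to zero,

$$\frac{dV_N(\theta)}{d\theta} = 0$$

$$\frac{2}{N} \sum_{t=1}^{N} \left( \varphi^T(t)\,\theta - y(t) \right) \varphi(t) = 0 \tag{5}$$

$$\left( \sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) \right) \theta = \sum_{t=1}^{N} y(t)\,\varphi(t) \tag{6}$$

where $\sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) = \Phi \Phi^T$.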

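Consider the $m \times N$ matrix

$$\Phi = [\varphi(1) \;\; \varphi(2) \;\; \cdots \;\; \varphi(N)], \qquad m \le N \tag{7}$$

If the vectors $\varphi(1), \varphi(2), \ldots, \varphi(N)$ span the whole m-dimensional vector space, that is, if there are m linearly independent column vectors in $\Phi$, then $\Phi$ is full rank:

$$\operatorname{rank} \Phi = \operatorname{rank} \Phi \Phi^T = m$$

so the $m \times m$ matrix $\Phi \Phi^T$ is full rank and hence invertible.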
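To make the rank condition concrete, here is a minimal sketch for m = 2 that compares two hypothetical data sets. With a constant input every regressor is the same vector, so the regressors do not span the plane and the matrix Φ Φ^T is singular. The helper names and data are assumptions for illustration only.

```python
def gram(phis):
    """Return Phi Phi^T = sum_t phi(t) phi(t)^T for a list of regressors."""
    m = len(phis[0])
    return [[sum(phi[i] * phi[j] for phi in phis) for j in range(m)]
            for i in range(m)]


def det2(a):
    """Determinant of a 2 x 2 matrix."""
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


# Constant input u = 1: every phi(t) = [u(t-1), u(t-2)] is the same vector.
constant = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
# A varying input gives regressors that span R^2.
varied = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

det_constant = det2(gram(constant))  # zero: Phi Phi^T is not invertible
det_varied = det2(gram(varied))      # nonzero: Phi Phi^T is invertible
```

The first data set cannot distinguish b1 from b2, which is why no unique minimizer exists in that case.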

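Under this condition, the optimal parameter vector is given by

$$\hat{\theta} = P B \tag{8}$$

where

$$P = \left( \sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) \right)^{-1} = \left( \Phi \Phi^T \right)^{-1} \tag{9}$$

$$B = \sum_{t=1}^{N} y(t)\,\varphi(t) \tag{10}$$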
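The batch estimate can be sketched in plain Python as follows. Instead of forming P explicitly, this sketch solves the normal equations (6) by Gaussian elimination with partial pivoting, which is a substitution made here for convenience and not something the notes prescribe. The function names, the singularity tolerance `1e-12`, and the data are all hypothetical.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:  # arbitrary tolerance (assumption)
            raise ValueError("Phi Phi^T is singular: regressors do not span R^m")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def batch_least_squares(phis, ys):
    """Solve (sum phi phi^T) theta = sum y phi, i.e. P^{-1} theta = B."""
    m = len(phis[0])
    p_inv = [[sum(phi[i] * phi[j] for phi in phis) for j in range(m)]
             for i in range(m)]
    b = [sum(y * phi[i] for phi, y in zip(phis, ys)) for i in range(m)]
    return solve(p_inv, b)


# Hypothetical noise-free data generated from theta_true.
theta_true = [2.0, -3.0]
phis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
ys = [sum(p * b for p, b in zip(phi, theta_true)) for phi in phis]

theta_hat = batch_least_squares(phis, ys)
```

Because the data are noise-free and the regressors span the plane, `theta_hat` recovers `theta_true` up to rounding error.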

2.2 The Recursive Least-Squares Algorithm

While the above algorithm processes the whole data set as a batch, we often need to estimate parameters in real time, as data arrive from a dynamical system. A recursive algorithm for computing the parameters is a powerful tool in such applications.

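[Figure: on-line estimation. The input u(t) drives the actual system, which produces the output y(t). Based on the latest data {y(t), ϕ(t)}, we update the estimate θˆ of the model y(t) = ϕ(t)ᵀθ.]

Batch processing: $\hat{\theta} = P B$

Recursive formula:

• Simple enough to complete within a given sampling period

• No need to store the whole observed data

[Figure: going from time t−1 to time t, the quantities $B_{t-1}$, $P_{t-1}$, $\hat{\theta}(t-1)$ are updated to $B_t$, $P_t$, $\hat{\theta}(t)$, where $\hat{\theta}(t) = P_t B_t$.]

Recall the batch solution $\hat{\theta} = P B$, with

$$P^{-1} = \sum_{t=1}^{N} \varphi(t)\,\varphi^T(t) = \Phi \Phi^T, \qquad B = \sum_{t=1}^{N} y(t)\,\varphi(t)$$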

Three steps for obtaining a recursive computation algorithm

a) Splitting $B_t$ and $P_t$

From (10),

$$B_t = \sum_{i=1}^{t} y(i)\,\varphi(i) = \sum_{i=1}^{t-1} y(i)\,\varphi(i) + y(t)\,\varphi(t)$$

$$B_t = B_{t-1} + y(t)\,\varphi(t) \tag{11}$$

From (9),

$$P_t^{-1} = \sum_{i=1}^{t} \varphi(i)\,\varphi^T(i)$$

$$P_t^{-1} = P_{t-1}^{-1} + \varphi(t)\,\varphi^T(t) \tag{12}$$

b) The Matrix Inversion Lemma (An Intuitive Method)

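Premultiplying (12) by $P_t$ and postmultiplying it by $P_{t-1}$ yields

$$P_t P_t^{-1} P_{t-1} = P_t P_{t-1}^{-1} P_{t-1} + P_t\,\varphi(t)\,\varphi^T(t)\,P_{t-1}$$

$$P_{t-1} = P_t + P_t\,\varphi(t)\,\varphi^T(t)\,P_{t-1} \tag{13}$$

Postmultiplying (13) by $\varphi(t)$,

$$P_{t-1}\varphi(t) = P_t\,\varphi(t) + P_t\,\varphi(t)\,\varphi^T(t)\,P_{t-1}\varphi(t)$$

$$P_{t-1}\varphi(t) = P_t\,\varphi(t)\left( 1 + \varphi^T(t)\,P_{t-1}\varphi(t) \right)$$

Since $1 + \varphi^T(t)\,P_{t-1}\varphi(t)$ is a scalar,

$$P_t\,\varphi(t) = \frac{P_{t-1}\varphi(t)}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)}$$

Postmultiplying by $\varphi^T(t)\,P_{t-1}$,

$$P_t\,\varphi(t)\,\varphi^T(t)\,P_{t-1} = \frac{P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1}}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)}$$

By (13), the left-hand side equals $P_{t-1} - P_t$. Therefore,

$$P_t = P_{t-1} - \frac{P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1}}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)} \tag{14}$$

Note that no matrix inversion is needed for updating $P_t$!

This is a special case of the Matrix Inversion Lemma:

$$[A + BCD]^{-1} = A^{-1} - A^{-1} B \left[ D A^{-1} B + C^{-1} \right]^{-1} D A^{-1} \tag{15}$$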
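The inversion-free update can be checked numerically. The sketch below, for m = 2, compares the recursive update of Eq. (14) with a direct inversion of Eq. (12). The matrix `p_prev`, the regressor `phi`, and the helper names are hypothetical values chosen for the check; the closed-form 2 x 2 inverse is used only as a reference.

```python
def inv2(a):
    """Closed-form inverse of a 2 x 2 matrix (reference computation only)."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [[a[1][1] / det, -a[0][1] / det],
            [-a[1][0] / det, a[0][0] / det]]


def update_p(p_prev, phi):
    """Eq. (14): P_t = P_{t-1} - P_{t-1} phi phi^T P_{t-1} / (1 + phi^T P_{t-1} phi)."""
    m = len(phi)
    p_phi = [sum(p_prev[i][j] * phi[j] for j in range(m)) for i in range(m)]  # P_{t-1} phi
    phi_p = [sum(phi[i] * p_prev[i][j] for i in range(m)) for j in range(m)]  # phi^T P_{t-1}
    denom = 1.0 + sum(phi[i] * p_phi[i] for i in range(m))                    # scalar
    return [[p_prev[i][j] - p_phi[i] * phi_p[j] / denom for j in range(m)]
            for i in range(m)]


p_prev = [[2.0, 0.5], [0.5, 1.0]]  # hypothetical positive definite P_{t-1}
phi = [1.0, -2.0]                  # hypothetical regressor phi(t)

# Recursive update, no matrix inversion.
p_rec = update_p(p_prev, phi)

# Direct route via Eq. (12): invert P_{t-1}^{-1} + phi phi^T.
p_inv_prev = inv2(p_prev)
p_inv_t = [[p_inv_prev[i][j] + phi[i] * phi[j] for j in range(2)] for i in range(2)]
p_direct = inv2(p_inv_t)

max_diff = max(abs(p_rec[i][j] - p_direct[i][j]) for i in range(2) for j in range(2))
```

For these values both routes give the matrix with rows [1.8, 0.8] and [0.8, 0.55] up to rounding, so `max_diff` is at the level of floating-point error.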

Exercise 1: Prove (15), and use (15) to obtain (14) from (12).

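c) Reducing

$$\hat{\theta}(t) = P_t B_t \tag{16}$$

to the following recursive form:

$$\hat{\theta}(t) = \hat{\theta}(t-1) + K_t \underbrace{\left[ y(t) - \varphi^T(t)\,\hat{\theta}(t-1) \right]}_{\text{prediction error}} \tag{17}$$

where $K_t$ is a type of gain for correcting the error.

From (16), $\hat{\theta}(t) = P_t B_t$ and $\hat{\theta}(t-1) = P_{t-1} B_{t-1}$. Using (14) and (11),

$$\hat{\theta}(t) - \hat{\theta}(t-1) = P_t B_t - P_{t-1} B_{t-1}$$

$$= \left( P_{t-1} - \frac{P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1}}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)} \right) \left( B_{t-1} + y(t)\,\varphi(t) \right) - P_{t-1} B_{t-1}$$

$$= P_{t-1}\,y(t)\,\varphi(t) - \frac{P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1}}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)} \left( B_{t-1} + y(t)\,\varphi(t) \right)$$

$$= \frac{P_{t-1}\,y(t)\,\varphi(t)\left( 1 + \varphi^T(t)\,P_{t-1}\varphi(t) \right) - P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1}\varphi(t)\,y(t) - P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1} B_{t-1}}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)}$$

$$= \frac{P_{t-1}\,y(t)\,\varphi(t) - P_{t-1}\varphi(t)\,\varphi^T(t)\,\hat{\theta}(t-1)}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)}$$

$$= \frac{P_{t-1}\varphi(t)}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)} \left[ y(t) - \varphi^T(t)\,\hat{\theta}(t-1) \right]$$

Replacing the factor $\dfrac{P_{t-1}\varphi(t)}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)}$ by $K_t \in R^{m \times 1}$, we obtain (17).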

The Recursive Least Squares (RLS) Algorithm

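$$\hat{\theta}(t) = \hat{\theta}(t-1) + \frac{P_{t-1}\varphi(t)}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)} \left( y(t) - \varphi^T(t)\,\hat{\theta}(t-1) \right) \tag{18}$$

$$P_t = P_{t-1} - \frac{P_{t-1}\varphi(t)\,\varphi^T(t)\,P_{t-1}}{1 + \varphi^T(t)\,P_{t-1}\varphi(t)} \tag{14}$$

for t = 1, 2, …, with initial conditions

$\hat{\theta}(0)$: arbitrary

$P_0$: positive definite matrix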
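A minimal sketch of one RLS step, following (18) and (14), is given below in plain Python. The function name `rls_step`, the data, and the initial values are hypothetical. The sketch assumes that $P_0$ is symmetric, so that ϕᵀP equals the transpose of Pϕ, and it uses the large initial value $P_0 = 10^6 I$ so that the effect of the arbitrary $\hat{\theta}(0)$ on the estimate is small; that choice is an assumption of this example, not a recommendation from the notes.

```python
def rls_step(theta, p, phi, y):
    """One RLS update: returns (theta(t), P_t) from (theta(t-1), P_{t-1})."""
    m = len(phi)
    p_phi = [sum(p[i][j] * phi[j] for j in range(m)) for i in range(m)]  # P_{t-1} phi(t)
    denom = 1.0 + sum(phi[i] * p_phi[i] for i in range(m))              # 1 + phi^T P_{t-1} phi
    gain = [v / denom for v in p_phi]                                    # K_t
    error = y - sum(phi[i] * theta[i] for i in range(m))                 # prediction error
    theta_new = [theta[i] + gain[i] * error for i in range(m)]           # Eq. (18)
    # Eq. (14); uses symmetry of P: phi^T P_{t-1} = (P_{t-1} phi)^T (assumption).
    p_new = [[p[i][j] - gain[i] * p_phi[j] for j in range(m)] for i in range(m)]
    return theta_new, p_new


# Hypothetical noise-free data generated from theta_true.
theta_true = [2.0, -3.0]
phis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
ys = [sum(a * b for a, b in zip(phi, theta_true)) for phi in phis]

theta = [0.0, 0.0]                 # theta(0): arbitrary
p = [[1e6, 0.0], [0.0, 1e6]]       # P_0: positive definite (assumed large)

for phi, y in zip(phis, ys):       # process one sample at a time
    theta, p = rls_step(theta, p, phi, y)
```

Each step touches only the current sample and the stored pair (θˆ, P), so past data need not be kept. With a finite $P_0$ the result differs slightly from the batch estimate; in this example the difference is on the order of $10^{-6}$.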

This Recursive Least Squares Algorithm was originally developed by Gauss (1777 – 1855).
