LECTURE 19 Limit theorems – I


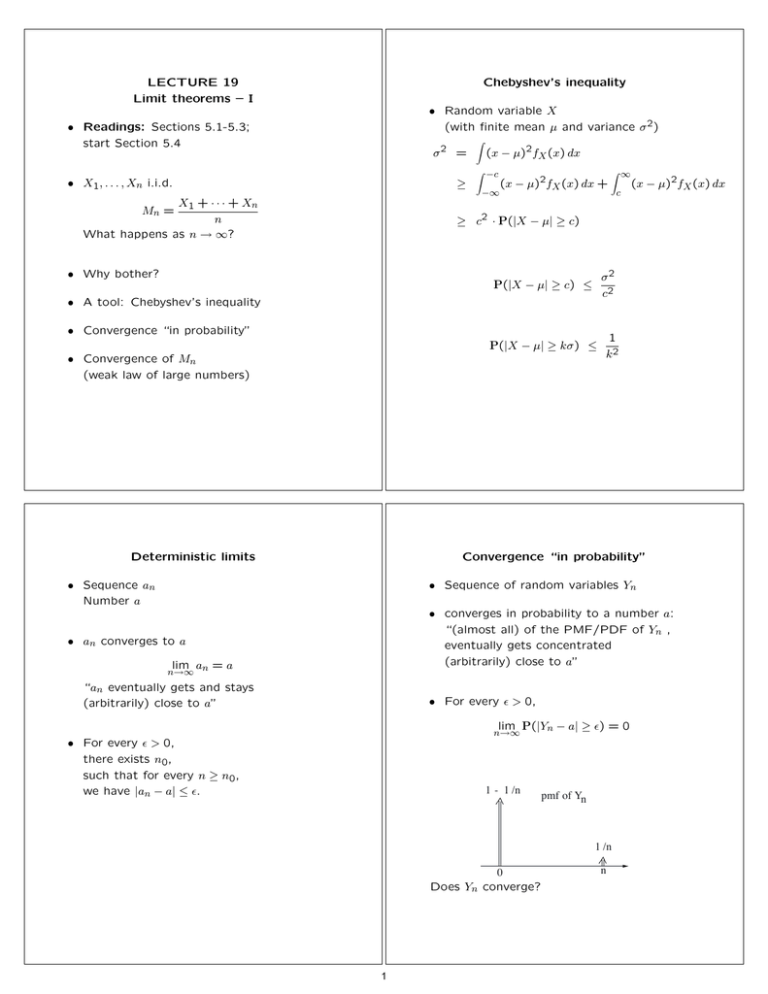

• Readings: Sections 5.1-5.3; start Section 5.4
• $X_1, \ldots, X_n$ i.i.d.

$$M_n = \frac{X_1 + \cdots + X_n}{n}$$

What happens as $n \to \infty$?
• Why bother?
• A tool: Chebyshev’s inequality
• Convergence “in probability”
• Convergence of $M_n$ (weak law of large numbers)

Chebyshev’s inequality

• Random variable $X$ (with finite mean $\mu$ and variance $\sigma^2$)

$$\sigma^2 = \int_{-\infty}^{\infty} (x-\mu)^2 f_X(x)\,dx \;\ge\; \int_{-\infty}^{-c} (x-\mu)^2 f_X(x)\,dx + \int_{c}^{\infty} (x-\mu)^2 f_X(x)\,dx \;\ge\; c^2 \cdot P(|X-\mu| \ge c)$$

$$P(|X-\mu| \ge c) \le \frac{\sigma^2}{c^2}$$

$$P(|X-\mu| \ge k\sigma) \le \frac{1}{k^2}$$

Deterministic limits

• Sequence $a_n$, number $a$
• $a_n$ converges to $a$: $\lim_{n\to\infty} a_n = a$
“$a_n$ eventually gets and stays (arbitrarily) close to $a$”
• For every $\epsilon > 0$, there exists $n_0$, such that for every $n \ge n_0$, we have $|a_n - a| \le \epsilon$.

Convergence “in probability”

• Sequence of random variables $Y_n$
• converges in probability to a number $a$: “(almost all) of the PMF/PDF of $Y_n$, eventually gets concentrated (arbitrarily) close to $a$”
• For every $\epsilon > 0$, $\lim_{n\to\infty} P(|Y_n - a| \ge \epsilon) = 0$

[Figure: pmf of $Y_n$, with probability $1 - 1/n$ at $0$ and probability $1/n$ at $n$. Does $Y_n$ converge?]

Convergence of the sample mean (Weak law of large numbers)

• $X_1, X_2, \ldots$ i.i.d., finite mean $\mu$ and variance $\sigma^2$

$$M_n = \frac{X_1 + \cdots + X_n}{n}$$

• $E[M_n] = \mu$
• $\mathrm{Var}(M_n) = \sigma^2 / n$

$$P(|M_n - \mu| \ge \epsilon) \le \frac{\mathrm{Var}(M_n)}{\epsilon^2} = \frac{\sigma^2}{n\epsilon^2}$$

• $M_n$ converges in probability to $\mu$

The pollster’s problem

• $f$: fraction of population that “. . . ”
• $i$th (randomly selected) person polled: $X_i = 1$ if yes, $X_i = 0$ if no.
• $M_n = (X_1 + \cdots + X_n)/n$: fraction of “yes” in our sample
• Goal: 95% confidence of ≤1% error

$$P(|M_n - f| \ge .01) \le .05$$

• Use Chebyshev’s inequality (the last step uses $\sigma_x^2 = f(1-f) \le 1/4$):

$$P(|M_n - f| \ge .01) \le \frac{\sigma_{M_n}^2}{(0.01)^2} = \frac{\sigma_x^2}{n(0.01)^2} \le \frac{1}{4n(0.01)^2}$$

• If $n = 50{,}000$, then $P(|M_n - f| \ge .01) \le .05$ (conservative)

Different scalings of $M_n$

• $X_1, \ldots, X_n$ i.i.d., finite variance $\sigma^2$
• Look at three variants of their sum:
• $S_n = X_1 + \cdots + X_n$: variance $n\sigma^2$
• $M_n = S_n / n$: variance $\sigma^2/n$; converges “in probability” to $E[X]$ (WLLN)
• $S_n / \sqrt{n}$: constant variance $\sigma^2$
  – Asymptotic shape?

The central limit theorem

• “Standardized” $S_n = X_1 + \cdots + X_n$:

$$Z_n = \frac{S_n - E[S_n]}{\sigma_{S_n}} = \frac{S_n - nE[X]}{\sqrt{n}\,\sigma}$$

  – zero mean
  – unit variance
• Let $Z$ be a standard normal r.v. (zero mean, unit variance)
• Theorem: For every $c$:

$$P(Z_n \le c) \to P(Z \le c)$$

• $P(Z \le c)$ is the standard normal CDF, $\Phi(c)$, available from the normal tables

MIT OpenCourseWare http://ocw.mit.edu
6.041SC Probabilistic Systems Analysis and Applied Probability, Fall 2013
For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.
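As a companion to the question "Does $Y_n$ converge?", the following minimal sketch evaluates the definition of convergence in probability for one reading of the pmf figure. It assumes (this is an interpretation of the figure, not something the slides state in words) that $Y_n = n$ with probability $1/n$ and $Y_n = 0$ otherwise. The function names are hypothetical.

```python
from fractions import Fraction

def tail_probability(n, eps):
    """P(|Y_n - 0| >= eps) under the assumed pmf: Y_n = n w.p. 1/n, else 0."""
    if eps <= 0:
        raise ValueError("eps must be positive")
    # The value 0 never deviates from 0 by eps > 0;
    # the value n does so exactly when n >= eps.
    return Fraction(1, n) if n >= eps else Fraction(0)

def expected_value(n):
    """E[Y_n] = 0 * (1 - 1/n) + n * (1/n)."""
    return 0 * (1 - Fraction(1, n)) + n * Fraction(1, n)

for n in (10, 100, 1000):
    # The tail probability shrinks like 1/n while the mean stays fixed.
    print(n, tail_probability(n, Fraction(1, 100)), expected_value(n))
```

Under this assumed pmf the tail probability is $1/n$, which goes to zero for every fixed $\epsilon > 0$, so $Y_n$ converges to 0 in probability even though $E[Y_n] = 1$ for every $n$. Convergence in probability therefore says nothing about convergence of expectations.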
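The arithmetic in the pollster's problem can be checked with a short sketch. It applies the bound $P(|M_n - f| \ge \epsilon) \le 1/(4n\epsilon^2)$ from the slides and solves for the smallest $n$ that pushes the bound below a target $\delta$. The function names are hypothetical, and exact rational arithmetic is a choice made here to avoid floating-point rounding at the boundary.

```python
import math
from fractions import Fraction

def chebyshev_bound(n, eps, var_bound=Fraction(1, 4)):
    """Chebyshev upper bound on P(|M_n - f| >= eps): var_bound / (n * eps^2).

    var_bound = 1/4 is the worst-case Bernoulli variance, f(1 - f) <= 1/4.
    Pass eps as a string or Fraction (e.g. "0.01") so it is represented exactly.
    """
    eps = Fraction(eps)
    return Fraction(var_bound) / (n * eps ** 2)

def chebyshev_sample_size(eps, delta, var_bound=Fraction(1, 4)):
    """Smallest n for which the Chebyshev bound is at most delta."""
    eps, delta = Fraction(eps), Fraction(delta)
    return math.ceil(Fraction(var_bound) / (delta * eps ** 2))

n_required = chebyshev_sample_size("0.01", "0.05")
print(n_required)                              # 50000
print(float(chebyshev_bound(50_000, "0.01")))  # 0.05
```

The result matches the slide's $n = 50{,}000$. The slide calls this value conservative because Chebyshev's inequality holds for any distribution with the given variance, so it is typically far from tight.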
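The central limit theorem statement $P(Z_n \le c) \to \Phi(c)$ can be illustrated numerically. The sketch below assumes Bernoulli($p$) summands (an illustrative choice, as in the polling setting), so that $S_n$ is binomial and $P(Z_n \le c)$ can be computed exactly and compared with $\Phi(c)$. The function names are hypothetical.

```python
import math
from fractions import Fraction

def std_normal_cdf(c):
    """Phi(c), the standard normal CDF, via the error function."""
    return 0.5 * (1.0 + math.erf(c / math.sqrt(2.0)))

def standardized_binomial_cdf(n, p, c):
    """Exact P(Z_n <= c) for S_n ~ Binomial(n, p), Z_n = (S_n - n p) / sqrt(n p (1 - p))."""
    p = Fraction(p)
    mean = float(n * p)
    std = math.sqrt(float(n * p * (1 - p)))
    # Z_n <= c is the same event as S_n <= mean + c * std
    # (the threshold itself is computed in floating point).
    k_max = math.floor(mean + c * std)
    if k_max < 0:
        return 0.0
    k_max = min(k_max, n)
    # Sum the binomial pmf exactly with rational arithmetic to avoid underflow.
    total = sum(math.comb(n, k) * p ** k * (1 - p) ** (n - k)
                for k in range(k_max + 1))
    return float(total)

c = 1.0
for n in (10, 100, 1000):
    exact = standardized_binomial_cdf(n, Fraction(1, 2), c)
    print(n, round(exact, 4), round(std_normal_cdf(c), 4))
```

As $n$ grows, the exact probability approaches $\Phi(c)$. The remaining gap at moderate $n$ is largely due to the discreteness of $S_n$.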