1 Derandomizing Logspace Computations


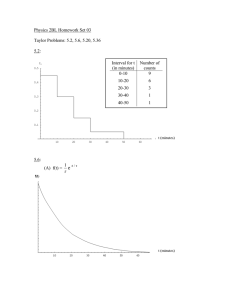

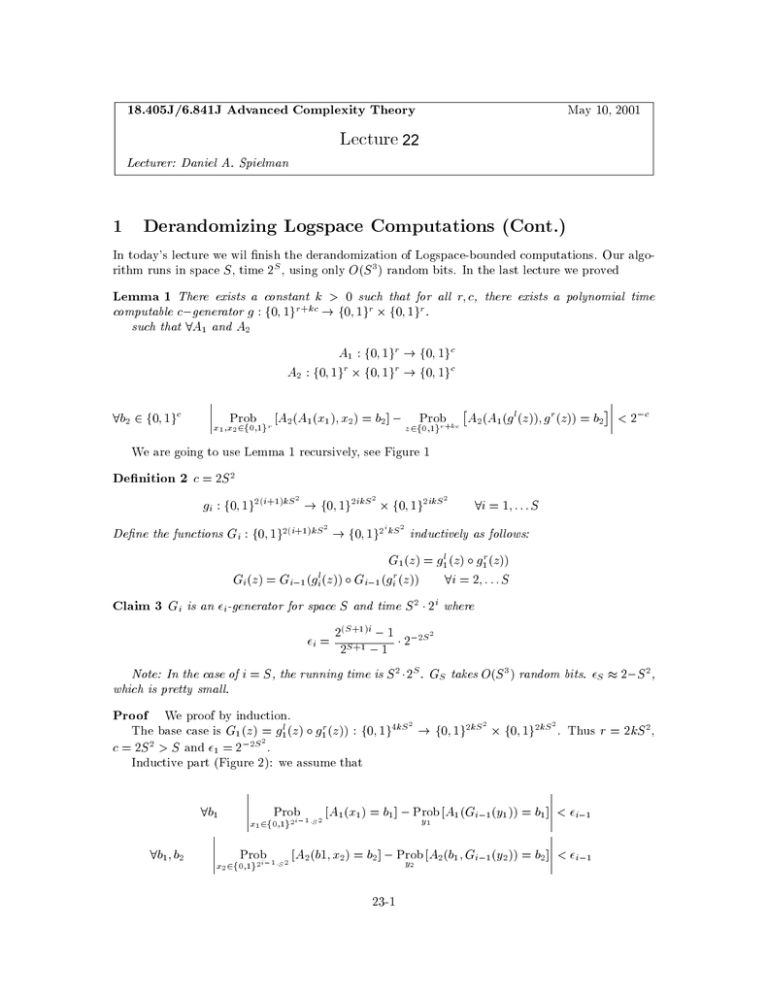

18.405J/6.841J Advanced Complexity Theory

May 10, 2001

Lecture 22

Lecturer: Daniel A. Spielman

1 Derandomizing Logspace Computations (Cont.)

In today's lecture we will finish the derandomization of logspace-bounded computations. Our algorithm runs in space $S$ and time $2^S$, using only $O(S^3)$ random bits. In the last lecture we proved

Lemma 1 There exists a constant $k > 0$ such that for all $r, c$ there exists a polynomial-time computable $c$-generator $g : \{0,1\}^{r+kc} \to \{0,1\}^r \times \{0,1\}^r$, written $g(z) = (g^l(z), g^r(z))$, such that for all $A_1$ and $A_2$ with

$$A_1 : \{0,1\}^r \to \{0,1\}^c, \qquad A_2 : \{0,1\}^c \times \{0,1\}^r \to \{0,1\}^c,$$

and for all $b_2 \in \{0,1\}^c$,

$$\left| \Pr_{x_1, x_2 \in \{0,1\}^r}\big[A_2(A_1(x_1), x_2) = b_2\big] - \Pr_{z \in \{0,1\}^{r+kc}}\big[A_2(A_1(g^l(z)), g^r(z)) = b_2\big] \right| < 2^{-c}.$$

We are going to use Lemma 1 recursively; see Figure 1.

Definition 2 Let $c = 2S^2$ and, for all $i = 1, \ldots, S$, let

$$g_i : \{0,1\}^{2(i+1)kS^2} \to \{0,1\}^{2ikS^2} \times \{0,1\}^{2ikS^2}$$

be the generator of Lemma 1 with $r = 2ikS^2$. Define the functions $G_i : \{0,1\}^{2(i+1)kS^2} \to \{0,1\}^{2^i \cdot 2kS^2}$ inductively as follows:

$$G_1(z) = \big(g_1^l(z),\, g_1^r(z)\big), \qquad G_i(z) = \big(G_{i-1}(g_i^l(z)),\, G_{i-1}(g_i^r(z))\big) \quad \forall i = 2, \ldots, S.$$

Claim 3 $G_i$ is an $\epsilon_i$-generator for space $S$ and time $S^2 2^i$, where

$$\epsilon_i = \frac{2^{(S+1)i} - 1}{2^{S+1} - 1} \cdot 2^{-2S^2}.$$

Note: in the case $i = S$, the running time is $S^2 2^S$, $G_S$ takes $O(S^3)$ random bits, and $\epsilon_S$ is roughly $2^{-S^2}$, which is pretty small.

Proof We prove the claim by induction on $i$. The base case is

$$G_1(z) = \big(g_1^l(z),\, g_1^r(z)\big) : \{0,1\}^{4kS^2} \to \{0,1\}^{2kS^2} \times \{0,1\}^{2kS^2}.$$

Thus $r = 2kS^2$, $c = 2S^2 > S$ and $\epsilon_1 = 2^{-2S^2}$.

Inductive part (Figure 2): we assume that

$$\forall b_1: \quad \left| \Pr_{x_1}\big[A_1(x_1) = b_1\big] - \Pr_{y_1}\big[A_1(G_{i-1}(y_1)) = b_1\big] \right| < \epsilon_{i-1},$$

$$\forall b_1, b_2: \quad \left| \Pr_{x_2}\big[A_2(b_1, x_2) = b_2\big] - \Pr_{y_2}\big[A_2(b_1, G_{i-1}(y_2)) = b_2\big] \right| < \epsilon_{i-1},$$

where $x_1, x_2 \in \{0,1\}^{2^{i-1} \cdot 2kS^2}$ are truly random strings and $y_1, y_2$ are random seeds of $G_{i-1}$.

Figure 1: Recursive Pattern.
(Figure 1 labels: time $= 2^s$, $S = O(\log n)$.)

Figure 2: Inductive Picture ($A_1$ followed by $A_2$).

Then we sum over $b_1$ (there are $2^S$ possible values of $b_1$) and apply the previous two inequalities to get

$$\forall b_2: \quad \left| \Pr_{x_1, x_2}\big[A_2(A_1(x_1), x_2) = b_2\big] - \Pr_{y_1, y_2}\big[A_2(A_1(G_{i-1}(y_1)), G_{i-1}(y_2)) = b_2\big] \right| < 2 \cdot 2^S \epsilon_{i-1}.$$

Since $g_i$ is a $2S^2$-generator, we have

$$\forall b_2: \quad \left| \Pr_{y_1, y_2}\big[A_2(A_1(G_{i-1}(y_1)), G_{i-1}(y_2)) = b_2\big] - \Pr_{z}\big[A_2(A_1(G_{i-1}(g_i^l(z))), G_{i-1}(g_i^r(z))) = b_2\big] \right| < 2^{-2S^2}.$$

By the triangle inequality,

$$\forall b_2: \quad \left| \Pr_{x_1, x_2}\big[A_2(A_1(x_1), x_2) = b_2\big] - \Pr_{z}\big[A_2(A_1(G_{i-1}(g_i^l(z))), G_{i-1}(g_i^r(z))) = b_2\big] \right| < 2 \cdot 2^S \epsilon_{i-1} + 2^{-2S^2} = \epsilon_i.$$

Another approach is the recursion using hash functions. We can achieve $S^3$ random bits and, more efficiently, only $S^2$ bits by choosing the hash functions at random. Construct hash functions

$$h_1, \ldots, h_S : \{0,1\}^s \to \{0,1\}^s$$

and define

$$G_1(x) = x\, h_1(x), \qquad G_2(x) = G_1(x)\, G_1(h_2(x)), \qquad \ldots, \qquad G_i(x) = G_{i-1}(x)\, G_{i-1}(h_i(x)).$$

2 Hardness vs. Randomness by Nisan and Wigderson

Definition 4 A function $f : \{0,1\}^n \to \{0,1\}$ has hardness $h(n)$ if for every circuit $C$ of size $h(n)$,

$$\Pr_{x \in \{0,1\}^n}\big[f(x) = C(x)\big] < \frac{1}{2} + \frac{1}{h(n)}.$$

Assuming a function of hardness $n^{\log n}$ is computable in time $2^{n^k}$ for some $k$, we can prove

$$\mathrm{BPP} \subseteq \mathrm{DTIME}(2^{n^{\epsilon}}) \quad \forall \epsilon > 0.$$

Moreover, if a function of hardness $h(n) = 2^{\epsilon n}$ is computable in EXP, then $\mathrm{BPP} = \mathrm{P}$.

We will continue this topic in the next lecture.
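Returning to the hash-function recursion at the end of Section 1, the doubling pattern $G_i(x) = G_{i-1}(x)\, G_{i-1}(h_i(x))$ can be made concrete with a small sketch. The notes do not say which hash family is used, so everything specific here is an assumption made for illustration: blocks are elements of $\mathbb{Z}_P$ for a small prime `P` instead of strings in $\{0,1\}^s$, the hash functions are affine maps $x \mapsto ax + b \bmod P$, and the names `make_hash`, `sample_hashes` and `G` are hypothetical.

```python
import random

P = 257  # hypothetical prime modulus; blocks live in Z_P rather than {0,1}^s


def make_hash(a, b, p=P):
    """Return h(x) = (a*x + b) mod p, one member of the assumed affine family."""
    def h(x):
        return (a * x + b) % p
    return h


def sample_hashes(count, rng, p=P):
    """Pick `count` hash functions by drawing a and b uniformly from Z_p."""
    return [make_hash(rng.randrange(p), rng.randrange(p), p) for _ in range(count)]


def G(i, x, hashes):
    """Return the 2**i output blocks of G_i(x) as a list.

    G_1(x) = x, h_1(x);  G_i(x) = G_{i-1}(x), G_{i-1}(h_i(x)) for i >= 2.
    hashes[j] plays the role of h_{j+1}.
    """
    if i == 1:
        return [x, hashes[0](x)]
    # Left half reuses the seed x; right half restarts from the hashed seed h_i(x).
    return G(i - 1, x, hashes) + G(i - 1, hashes[i - 1](x), hashes)


if __name__ == "__main__":
    rng = random.Random(0)
    hs = sample_hashes(3, rng)
    print(G(3, 5, hs))  # 8 blocks generated from one seed block and 3 hash functions
```

In this sketch the only randomness is the seed block `x` and the pair `(a, b)` describing each of the `i` hash functions, while the output has $2^i$ blocks. That mirrors the counting in the notes: $S$ hash functions, each described by $O(S)$ bits, give a seed of $O(S^2)$ bits.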