Lecture 29 Generalization bounds for neural networks. 18.465

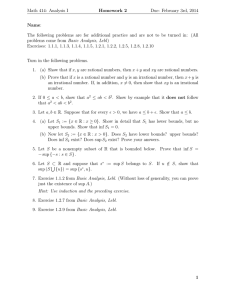

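In Lecture 28 we proved

$$
\mathbb{E} \sup_{h \in \mathcal{H}_k(A_1,\ldots,A_k)} \left| \frac{1}{n} \sum_{i=1}^n \varepsilon_i (y_i - h(x_i))^2 \right|
\le 8 \prod_{j=1}^k (2 L A_j) \cdot \mathbb{E} \sup_{h \in \mathcal{H}} \left| \frac{1}{n} \sum_{i=1}^n \varepsilon_i h(x_i) \right| + \frac{8}{\sqrt{n}}.
$$

Hence,

$$
Z(\mathcal{H}_k(A_1,\ldots,A_k)) := \sup_{h \in \mathcal{H}_k(A_1,\ldots,A_k)} \left| \mathbb{E}\mathcal{L}(y, h(x)) - \frac{1}{n} \sum_{i=1}^n \mathcal{L}(y_i, h(x_i)) \right|
\le 8 \prod_{j=1}^k (2 L A_j) \cdot \mathbb{E} \sup_{h \in \mathcal{H}} \left| \frac{1}{n} \sum_{i=1}^n \varepsilon_i h(x_i) \right| + \frac{8}{\sqrt{n}} + 8 \sqrt{\frac{t}{n}}
$$

with probability at least $1 - e^{-t}$.

Assume $\mathcal{H}$ is a VC-subgraph class, $-1 \le h \le 1$. We had the following result:

$$
\mathbb{P}_\varepsilon \left( \forall h \in \mathcal{H}, \ \frac{1}{n} \sum_{i=1}^n \varepsilon_i h(x_i) \le \frac{K}{\sqrt{n}} \int_0^{\sqrt{\frac{1}{n} \sum_{i=1}^n h^2(x_i)}} \log^{1/2} D(\mathcal{H}, \varepsilon, d_x) \, d\varepsilon + K \sqrt{\frac{t}{n} \cdot \frac{1}{n} \sum_{i=1}^n h^2(x_i)} \right) \ge 1 - e^{-t},
$$

where

$$
d_x(f, g) = \left( \frac{1}{n} \sum_{i=1}^n (f(x_i) - g(x_i))^2 \right)^{1/2}.
$$

Furthermore,

$$
\mathbb{P}_\varepsilon \left( \forall h \in \mathcal{H}, \ \left| \frac{1}{n} \sum_{i=1}^n \varepsilon_i h(x_i) \right| \le \frac{K}{\sqrt{n}} \int_0^{\sqrt{\frac{1}{n} \sum_{i=1}^n h^2(x_i)}} \log^{1/2} D(\mathcal{H}, \varepsilon, d_x) \, d\varepsilon + K \sqrt{\frac{t}{n} \cdot \frac{1}{n} \sum_{i=1}^n h^2(x_i)} \right) \ge 1 - 2e^{-t}.
$$

Since $-1 \le h \le 1$ for all $h \in \mathcal{H}$,

$$
\mathbb{P}_\varepsilon \left( \sup_{h \in \mathcal{H}} \left| \frac{1}{n} \sum_{i=1}^n \varepsilon_i h(x_i) \right| \le \frac{K}{\sqrt{n}} \int_0^1 \log^{1/2} D(\mathcal{H}, \varepsilon, d_x) \, d\varepsilon + K \sqrt{\frac{t}{n}} \right) \ge 1 - 2e^{-t}.
$$

Since $\mathcal{H}$ is a VC-subgraph class with $VC(\mathcal{H}) = V$,

$$
\log D(\mathcal{H}, \varepsilon, d_x) \le K V \log \frac{2}{\varepsilon}.
$$

Hence,

$$
\int_0^1 \log^{1/2} D(\mathcal{H}, \varepsilon, d_x) \, d\varepsilon
\le \int_0^1 \sqrt{K V \log \frac{2}{\varepsilon}} \, d\varepsilon
\le K \sqrt{V} \int_0^1 \sqrt{\log \frac{2}{\varepsilon}} \, d\varepsilon
\le K \sqrt{V}.
$$

Let $\xi \ge 0$ be a random variable. Then

$$
\mathbb{E}\xi = \int_0^\infty \mathbb{P}(\xi \ge t) \, dt
= \int_0^a \mathbb{P}(\xi \ge t) \, dt + \int_a^\infty \mathbb{P}(\xi \ge t) \, dt
\le a + \int_a^\infty \mathbb{P}(\xi \ge t) \, dt
= a + \int_0^\infty \mathbb{P}(\xi \ge a + u) \, du.
$$

Let $K \sqrt{\frac{V}{n}} = a$ and $K \sqrt{\frac{t}{n}} = u$. Then $e^{-t} = e^{-\frac{n u^2}{K^2}}$. Hence, we have

$$
\mathbb{E}_\varepsilon \sup_{h \in \mathcal{H}} \left| \frac{1}{n} \sum_{i=1}^n \varepsilon_i h(x_i) \right|
\le K \sqrt{\frac{V}{n}} + \int_0^\infty 2 e^{-\frac{n u^2}{K^2}} \, du
= K \sqrt{\frac{V}{n}} + \frac{K}{\sqrt{n}} \int_0^\infty e^{-x^2} \, dx
\le K \sqrt{\frac{V}{n}} + \frac{K}{\sqrt{n}}
\le K \sqrt{\frac{V}{n}}
$$

for $V \ge 2$. We made a change of variable so that $x^2 = \frac{n u^2}{K^2}$. Constants $K$ change their values from line to line.

We obtain

$$
Z(\mathcal{H}_k(A_1,\ldots,A_k)) \le K \prod_{j=1}^k (2 L A_j) \cdot \sqrt{\frac{V}{n}} + \frac{8}{\sqrt{n}} + 8 \sqrt{\frac{t}{n}}
$$

with probability at least $1 - e^{-t}$.

Assume that for any $j$, $A_j \in (2^{-\ell_j - 1}, 2^{-\ell_j}]$. This defines $\ell_j$.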
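To make the dyadic discretization concrete, here is a minimal Python sketch that recovers the index $\ell_j$ from a given bound $A_j$, that is, the unique integer with $2^{-\ell_j - 1} < A_j \le 2^{-\ell_j}$. The function name `dyadic_index` is hypothetical and not part of the lecture; the sketch assumes $A_j$ is a positive finite floating-point number.

```python
import math


def dyadic_index(A: float) -> int:
    """Return the integer l with 2**(-l-1) < A <= 2**(-l).

    Hypothetical helper for illustration; assumes A is positive and finite.
    """
    if not (A > 0 and math.isfinite(A)):
        raise ValueError("A must be a positive finite number")
    # frexp writes A = m * 2**e with 0.5 <= m < 1, so 2**(e-1) <= A < 2**e.
    m, e = math.frexp(A)
    # If m == 0.5 then A == 2**(e-1) exactly, which is the closed right
    # endpoint of the interval (2**(e-2), 2**(e-1)], so l = 1 - e.
    # Otherwise A lies strictly inside (2**(e-1), 2**e), so l = -e.
    return 1 - e if m == 0.5 else -e


# Check the defining inequality on a few sample values.
for A in (0.3, 0.5, 1.0, 2.0, 3.0):
    l = dyadic_index(A)
    assert 2.0 ** (-l - 1) < A <= 2.0 ** (-l)
    print(f"A = {A}: l = {l}")
```

Using `math.frexp` rather than rounding `-math.log2(A)` avoids floating-point trouble at the interval endpoints, where $A_j$ is an exact power of two.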
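Let

$$
\mathcal{H}_k(\ell_1,\ldots,\ell_k) = \bigcup \left\{ \mathcal{H}_k(A_1,\ldots,A_k) : A_j \in (2^{-\ell_j - 1}, 2^{-\ell_j}] \right\}.
$$

Then the empirical process satisfies

$$
Z(\mathcal{H}_k(\ell_1,\ldots,\ell_k)) \le K \prod_{j=1}^k (2 L \cdot 2^{-\ell_j}) \cdot \sqrt{\frac{V}{n}} + \frac{8}{\sqrt{n}} + 8 \sqrt{\frac{t}{n}}
$$

with probability at least $1 - e^{-t}$.

For a given sequence $(\ell_1,\ldots,\ell_k)$, redefine $t$ as $t + 2 \sum_{j=1}^k \log |w_j|$, where $w_j = \ell_j$ if $\ell_j \ne 0$ and $w_j = 1$ if $\ell_j = 0$. With this $t$,

$$
Z(\mathcal{H}_k(\ell_1,\ldots,\ell_k)) \le K \prod_{j=1}^k (2 L \cdot 2^{-\ell_j}) \cdot \sqrt{\frac{V}{n}} + \frac{8}{\sqrt{n}} + 8 \sqrt{\frac{t + 2 \sum_{j=1}^k \log |w_j|}{n}}
$$

with probability at least

$$
1 - e^{-t - 2 \sum_{j=1}^k \log |w_j|} = 1 - \prod_{j=1}^k \frac{1}{|w_j|^2} \, e^{-t}.
$$

By the union bound, the above holds for all $\ell_1,\ldots,\ell_k \in \mathbb{Z}$ with probability at least

$$
1 - \sum_{\ell_1,\ldots,\ell_k \in \mathbb{Z}} \prod_{j=1}^k \frac{1}{|w_j|^2} \, e^{-t}
= 1 - \left( \sum_{\ell_1 \in \mathbb{Z}} \frac{1}{|w_1|^2} \right)^k e^{-t}
= 1 - \left( 1 + 2 \cdot \frac{\pi^2}{6} \right)^k e^{-t}
\ge 1 - 5^k e^{-t} = 1 - e^{-u}
$$

for $t = u + k \log 5$. Hence, with probability at least $1 - e^{-u}$, for all $(\ell_1,\ldots,\ell_k)$,

$$
Z(\mathcal{H}_k(\ell_1,\ldots,\ell_k)) \le K \prod_{j=1}^k (2 L \cdot 2^{-\ell_j}) \cdot \sqrt{\frac{V}{n}} + \frac{8}{\sqrt{n}} + 8 \sqrt{\frac{2 \sum_{j=1}^k \log |w_j| + k \log 5 + u}{n}}.
$$

If $A_j \in (2^{-\ell_j - 1}, 2^{-\ell_j}]$, then $-\ell_j - 1 < \log A_j \le -\ell_j$ (logarithm to base 2) and $|\ell_j| \le |\log A_j| + 1$. Hence, $|w_j| \le |\log A_j| + 1$. Moreover, $2^{-\ell_j} \le 2 A_j$, so $2 L \cdot 2^{-\ell_j} \le 4 L A_j$. Therefore, with probability at least $1 - e^{-u}$, for all $(A_1,\ldots,A_k)$,

$$
Z(\mathcal{H}_k(A_1,\ldots,A_k)) \le K \prod_{j=1}^k (4 L \cdot A_j) \cdot \sqrt{\frac{V}{n}} + \frac{8}{\sqrt{n}} + 8 \sqrt{\frac{2 \sum_{j=1}^k \log (|\log A_j| + 1) + k \log 5 + u}{n}}.
$$

Notice that $\log (|\log A_j| + 1)$ is large when $A_j$ is very large or very small. This term is a penalty, and we want the product term to dominate. In most practical applications, however, $\log \log A_j \le 5$.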
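The constant in the union bound and the size of the penalty term can be checked numerically. The Python sketch below is an illustration under stated assumptions, not part of the lecture: the function names `union_bound_constant` and `penalty` are hypothetical, the inner logarithm of $A_j$ is taken to base 2 (matching the dyadic intervals), and the outer logarithms are natural. The leading term with the unspecified constant $K$ is left out because its value is not given.

```python
import math


def union_bound_constant(terms: int = 100_000) -> float:
    """Partial sum of sum_{l in Z} 1/|w_l|^2, with w_0 = 1 and w_l = l otherwise.

    The l = 0 term contributes 1; each l >= 1 is counted twice (for l and -l).
    """
    return 1.0 + 2.0 * sum(1.0 / (l * l) for l in range(1, terms + 1))


def penalty(A, u: float, n: int) -> float:
    """Evaluate 8 * sqrt((2 * sum_j log(|log2 A_j| + 1) + k * log 5 + u) / n).

    Hypothetical helper: A is a sequence of positive bounds A_1, ..., A_k.
    The base-2 inner logarithm is an assumption based on the dyadic grid.
    """
    k = len(A)
    log_terms = sum(math.log(abs(math.log2(a)) + 1.0) for a in A)
    return 8.0 * math.sqrt((2.0 * log_terms + k * math.log(5.0) + u) / n)


c = union_bound_constant()
print("sum over l of 1/|w_l|^2 (partial):", c)
print("closed form 1 + pi^2/3:", 1.0 + math.pi ** 2 / 3.0)
assert c < 5.0  # this is what justifies replacing the constant by 5

# The penalty grows very slowly even when A_j ranges over many orders of magnitude.
for A in ([1.0, 1.0, 1.0], [1e-3, 1.0, 1e3], [1e-9, 1.0, 1e9]):
    print(A, penalty(A, u=3.0, n=10_000))
```

Since the penalty depends on $A_j$ only through $\log(|\log A_j| + 1)$, changing the scale of the $A_j$ by many orders of magnitude moves it only slightly, while the product term $\prod_j (4 L A_j)$ changes by the full factor.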