Chapter 3 BLOCK SAMPLING


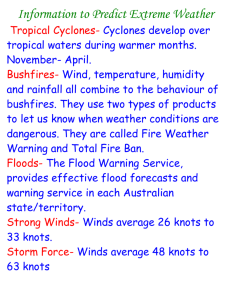

3.1 Introduction

As shown in chapter 2, single move sampling has its perils, even when the acceptance rates are very close to 1. The main problems are slow convergence and inefficiency in estimating posterior moments once the resulting chain has reached equilibrium. In order to avoid these separate, but highly related, pitfalls I now consider block sampling of the states. Liu, Wong and Kong (1994) suggest that a key feature to improve the speed of convergence for samplers is to use blocks. A proof of this for Gaussian problems for which the off diagonal elements of the inverse variance are negative is given by Roberts and Sahu (1997), and is discussed in chapter 4. As mentioned in chapter 1, this has motivated a considerable time series statistics literature on this topic in models built out of Gaussianity, but with some non-normality mixed in: see the work of Carter and Kohn (1994), Shephard (1994) and de Jong and Shephard (1995), outlined in chapter 1. However, these methods are very model specific, applying only to models which are in GSS form, conditional upon some parameters or mixture components.

In this chapter I introduce a more robust, general and flexible approach for all non-Gaussian state space forms of the type in (3.1),

$$
\begin{aligned}
y_t &\sim f(y_t \mid s_t), & s_t &= c_t + Z_t \alpha_t, \\
\alpha_{t+1} &= d_t + T_t \alpha_t + H_t u_t, & u_t &\sim \mathrm{NID}(0, I), \\
\alpha_1 \mid Y_0 &\sim N(a_{1|0}, P_{1|0}), & t &= 1, \dots, n,
\end{aligned}
\tag{3.1}
$$

for which it is assumed that $\log f(y_t \mid s_t)$ is concave in $s_t$ and consequently in $\alpha_t$, as in chapter 2.

Sampling from $\alpha \mid y$ may be too ambitious as this is highly multivariate and so if $n$ is very large it is likely that we will run into very large rejection frequencies, counteracting the effectiveness of the blocking. Hence I will employ a potentially intermediate strategy. The sampling method will be based around sampling blocks of disturbances, say $u_{t,k} = (u_{t-1}, \dots, u_{t+k-1})'$, given beginning and end conditions, $\alpha_{t-1}$ and $\alpha_{t+k+1}$, and the observations.
There is, of course, a deterministic relationship between $u_{t,k}$ and $\alpha_{t,k} = (\alpha_t, \dots, \alpha_{t+k})'$ so we could equally imagine that the states are being sampled given the end conditions $\alpha_{t-1}$ and $\alpha_{t+k+1}$. I call these end conditions "stochastic knots", the nomenclature being selected by analogy with their role in splines. At the beginning and end of the data set, there will be no need to use two sided knots. In these cases typically only single knots will be required, simulating, for example, from $\alpha_{1,k} \mid \alpha_{t+k+1}, y_1, \dots, y_{t+k}$.

In practice $k$ will be a tuning parameter, allowing the lengths of blocks to be selected. Typically it will be chosen to be stochastic, varying the stochastic knots at each iteration of the samplers. If $k$ is too large the sampler will be slow because of rejections; if it is too small the draws will be correlated because of the structure of the model.

3.2 MCMC method

In this section, I detail the Markov chain Monte Carlo method. In Section 3.2.1, I describe the issue of randomly placing the fixed states and signals for each sweep of the method. In Section 3.2.2, I describe how the proposal density is formed to approximate the true conditional density of a block of states between two knots. The next section, Section 3.2.3, shows how this proposal density can be seen as a GSSF model and therefore easily sampled from. The expansion points for the signals, usually chosen as the mode of the conditional density, are described in Section 3.2.4. Finally, the construction of the overall Metropolis method for deciding whether to update a block of states is detailed in Section 3.2.5.

3.2.1 Stochastic knots

The stochastic knots play a crucial role in this method. A fixed number, $K$, of states, widely spaced over the time domain, are randomly chosen to remain fixed for one sweep of the MCMC method. These states, known as "knots", ensure that as the sample size increases the algorithm does not fail due to excessive numbers of rejections.
Since the knots are selected randomly the points of conditioning change over the iterations. I propose to work with a collection of stochastic knots, at times $\kappa = (\kappa_1, \dots, \kappa_K)'$ and corresponding values $\tilde\alpha = (\alpha_{\kappa_1}', \dots, \alpha_{\kappa_K}')'$, which appropriately cover the time span of the sample. The corresponding signals are, of course, also regarded as fixed. The selection of the knots will be carried out randomly and independently of the outcome of the MCMC process. In this chapter I have used the scheme

$$
\kappa_i = \operatorname{int}\{ n (i + U_i) / (K + 2) \}, \qquad U_i \sim U(0, 1), \qquad i = 1, \dots, K, \tag{3.2}
$$

where $\operatorname{int}(\cdot)$ means rounded to the nearest integer. Thus the selection of knots is now indexed by a single parameter $K$ which is controlled. Certain, widely spaced, states are therefore chosen to retain their values from the previous MCMC sweep. I now detail the form of the conditional density between two knots and the corresponding proposal density.

3.2.2 The proposal density

The basis of the MCMC method will be the use of a Taylor type expansion of the conditional density

$$
\log f = \log f(\alpha_{t,k} \mid \alpha_{t-1}, \alpha_{t+k+1}, y_t, \dots, y_{t+k})
$$

around some preliminary estimate of $\alpha_{t,k}$ made using the conditioning arguments $\alpha_{t-1}$, $\alpha_{t+k+1}$ and $y_t, \dots, y_{t+k}$. These estimates, and the corresponding $s_{t,k} = (s_t, \dots, s_{t+k})$, will be denoted by hats. How they are formed will be discussed in Section 3.2.4. As in chapter 2, I will write $l(s_t)$ to denote $\log f(y_t \mid s_t)$ (an implicit function of $\alpha_t$) and its first and second derivatives with respect to $s_t$ as $l'(s_t)$ and $l''(s_t)$ respectively. The expansion is then

$$
\begin{aligned}
\log f &= -\tfrac{1}{2} u_{t,k}' u_{t,k} + \sum_{i=t}^{t+k} l(s_i), \qquad \alpha_{i+1} = d_i + T_i \alpha_i + H_i u_i, \quad s_i = c_i + Z_i \alpha_i, \\
&\simeq -\tfrac{1}{2} u_{t,k}' u_{t,k} + \sum_{i=t}^{t+k} \left\{ l(\hat s_i) + (s_i - \hat s_i)^T l'(\hat s_i) + \tfrac{1}{2} (s_i - \hat s_i)^T D_i(\hat s_i)(s_i - \hat s_i) \right\} \\
&= \log g,
\end{aligned}
\tag{3.3}
$$

where $u_{t,k}$ and $s_{t,k}$ are defined, implicitly, in terms of $\alpha_{t,k}$. Hence the approximating form in (3.3) is regarded as being in terms of the states.
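Returning to the knot-selection rule (3.2), the following is a minimal Python sketch of how the knot times might be drawn and how the blocks of states lying between them could be enumerated. The function names `draw_knots` and `blocks_between_knots` are hypothetical and chosen for illustration only; times are indexed from 1 to n as in the text.

```python
import math
import random


def draw_knots(n, K, rng=None):
    """Draw K knot times via (3.2): int{ n (i + U_i) / (K + 2) }, U_i ~ U(0, 1)."""
    rng = rng or random.Random()
    knots = []
    for i in range(1, K + 1):
        u = rng.random()
        # int(.) in (3.2) rounds to the nearest integer; floor(x + 0.5) does this
        # for positive x (Python's round() would send exact halves to even numbers).
        knots.append(math.floor(n * (i + u) / (K + 2) + 0.5))
    return knots


def blocks_between_knots(n, knots):
    """Return inclusive (start, end) time ranges of the states that are not knots."""
    blocks = []
    start = 1
    for tau in sorted(set(knots)) + [n + 1]:
        if tau > start:
            blocks.append((start, tau - 1))
        start = tau + 1
    return blocks


if __name__ == "__main__":
    knots = draw_knots(1000, 10, random.Random(1))
    print(knots)
    print(blocks_between_knots(1000, knots))
```

With K = 0 no knots are drawn and the single block (1, n) is returned, which corresponds to sampling all the states simultaneously.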
This is very similar to the single move method, based on a quadratic expansion, that I considered in chapter 2 in (2.5). I will require the assumption that the as yet unspecified matrix $D_i(s)$ is everywhere strictly negative as a function of $s$. Typically I will take $D_i(\hat s_i) = l''(\hat s_i)$ so that the approximation is a second order Taylor expansion. This will be convenient, for in the vast majority of cases, for $l$ concave for example, $l''$ will be everywhere strictly negative. However, I allow for the possibility that $D_i$ is not taken as the second derivative, so that unusual (non log-concave) cases are covered as well. Of course for those cases, I will have to provide sensible rules for the selection of $D_i$.

A crucially attractive feature of this expansion is that the ratio $f/g$, used in the Metropolis step, involves only the difference between $l(s_i)$ and

$$
\tilde l(s_i) = l(\hat s_i) + (s_i - \hat s_i)^T l'(\hat s_i) + \tfrac{1}{2} (s_i - \hat s_i)^T D_i(\hat s_i)(s_i - \hat s_i),
$$

not the transition density $u_{t,k}' u_{t,k}$. The implication of this is that the algorithm should not become significantly less effective as the dimension of $\alpha_i$ increases. This can be contrasted with other approaches such as the numerical integration routines used in Kitagawa (1987), whose effectiveness usually deteriorates as the dimension of $\alpha_i$ increases.

This type of expansion also appears in the work of Durbin and Koopman (1992) where a sequential expansion based on filtering and smoothing algorithms was used to provide an approximate likelihood analysis of a wide class of non-Gaussian models. Their method is essentially modal estimation using a first order expansion rather than the expansion given above.

3.2.3 Simulating using GSSF model

The density $g$ is a high-dimensional multivariate Gaussian. It is not a dominating density for $f$, but there is some hope that it will be a good approximation.
Now it can be seen that

$$
\begin{aligned}
\log g &= -\tfrac{1}{2} u_{t,k}' u_{t,k} + \sum_{i=t}^{t+k} \left\{ l(\hat s_i) + (s_i - \hat s_i)^T l'(\hat s_i) + \tfrac{1}{2} (s_i - \hat s_i)^T D_i(\hat s_i)(s_i - \hat s_i) \right\} \\
&= c - \tfrac{1}{2} u_{t,k}' u_{t,k} - \tfrac{1}{2} \sum_{i=t}^{t+k} (\hat y_i - s_i)^T V_i^{-1} (\hat y_i - s_i),
\end{aligned}
\tag{3.4}
$$

where

$$
\hat y_i = \hat s_i + V_i\, l'(\hat s_i), \qquad i = t, \dots, t+k,
$$

and $V_i^{-1} = -D_i(\hat s_i)$, by equating coefficients of powers of $s_i$. It is now clear that the approximating density can be viewed as a GSS form model consisting of the required Gaussian measurement density with pseudo measurements $\hat y_i$ and the standard linear Gaussian Markov chain prior in the states. So the approximating joint density of $\alpha_{t,k} \mid \alpha_{t-1}, \alpha_{t+k+1}, y_t, \dots, y_{t+k}$ can be calculated by writing:

$$
\begin{aligned}
\hat y_i &= s_i + \varepsilon_i, & \varepsilon_i &\sim N(0, V_i), \\
s_i &= c_i + Z_i \alpha_i, & i &= t, \dots, t+k, \\
\alpha_{i+1} &= d_i + T_i \alpha_i + H_i u_i, & u_i &\sim \mathrm{NID}(0, I).
\end{aligned}
\tag{3.5}
$$

The knots are fixed by setting $\hat y_i = \alpha_i$, for $i = t-1$ and $i = t+k+1$, and by making the measurement equation $\hat y_i = \alpha_i + \varepsilon_i$, $\varepsilon_i \sim N(0, \delta I)$, where $\delta$ is extremely small, at the positions of these knots, $i = t-1$ and $i = t+k+1$. The model is now in GSSF. Consequently, it is possible to simulate from $\alpha_{t,k} \mid \alpha_{t-1}, \alpha_{t+k+1}, \hat y$ using the de Jong and Shephard (1995) simulation smoother, described in chapter 1, on the constructed set of pseudo-measurements $\hat y_t, \dots, \hat y_{t+k}$.

As $g$ does not bound $f$ it is not possible to use this simulation smoother inside an accept-reject algorithm within the Gibbs sampler. Rather I will use the simulation smoother to provide suggestions for the pseudo-dominating Metropolis algorithm suggested by Tierney (1994) and discussed in chapter 2. However, before describing the Metropolis move probability, I will first detail how the expansion points $\hat s_t, \dots, \hat s_{t+k}$ are found.

3.2.4 Finding $\hat s_t, \dots, \hat s_{t+k}$

It is important to select sensible values for the sequence $\hat s_t, \dots, \hat s_{t+k}$, the points at which the quadratic expansion is carried out. The most straightforward choice would be to take them as the mode of $f(s_t, \dots, s_{t+k} \mid \alpha_{t-1}, \alpha_{t+k+1}, y_t, \dots, y_{t+k})$.
An expansion which is similar in spirit to (3.5), but without the knots, is used in the work of Durbin and Koopman (1992). Their algorithm converges to the mode of the density of $\alpha \mid y$ in cases where $\partial^2 \log l / \partial \alpha_t \partial \alpha_t'$ is negative semi-definite; the same condition is needed for generalized linear regression models to have a unique maximum (see McCullagh and Nelder (1989, p. 117), Wedderburn (1976) and Haberman (1977)). This condition is typically stated as a requirement that the link function be log-concave. Durbin and Koopman (1992) use an expectation smoother to provide an estimate of $\alpha \mid y$ using the first order Taylor expanded approximation. These authors do not adopt a Bayesian approach, the focus of their interest being on the mode.

The approach based on the second order expansion of (3.5) is a sounder basis on which to find the mode. In the applications to be presented the interest is in the mode given the knots. I first expand around some arbitrary starting value of $\hat s_{t,k}$ to obtain (3.5), set $\hat s_{t,k}$ to the means from the resulting expectation smoother (given in chapter 1) and then expand around $\hat s_{t,k}$ again, and so on. This will ensure that we obtain $\hat s_{t,k}$ as the mode of $f(s_t, \dots, s_{t+k} \mid \alpha_{t-1}, \alpha_{t+k+1}, y_t, \dots, y_{t+k})$. This is, in fact, a very efficient Newton-Raphson method (since it avoids explicitly calculating the Hessian) using analytic first and second derivatives on a concave objective function. Thus the approximations presented here can be interpreted as Laplace approximations to a very high dimensional density function. In practice it is found that after 3 iterations of the smoothing algorithm we obtain a sequence $\hat s_{t,k} = (\hat s_t, \dots, \hat s_{t+k})$ which is extremely close to the mode. This is very important since this expansion will be performed for each iteration of the proposed MCMC sampler.
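The iteration described above can be illustrated on a small special case. The sketch below assumes a univariate SV model with a zero-mean stationary AR(1) log-volatility and no knots, and it swaps the Kalman-filter-based expectation smoother for a direct tridiagonal solve: for this model the mean of the Gaussian approximating density solves $(Q + V^{-1})\alpha = V^{-1}\hat y$, where $Q$ is the AR(1) prior precision matrix. All function names are hypothetical, and the code is an illustration of the idea rather than the implementation used for the results in this chapter.

```python
import math
import random


def simulate_sv(n, phi, sigma2, beta, rng):
    """Simulate y_t = eps_t * beta * exp(alpha_t / 2) with a zero-mean AR(1) alpha_t."""
    alpha = rng.gauss(0.0, math.sqrt(sigma2 / (1.0 - phi * phi)))
    y = []
    for _ in range(n):
        y.append(beta * math.exp(alpha / 2.0) * rng.gauss(0.0, 1.0))
        alpha = phi * alpha + rng.gauss(0.0, math.sqrt(sigma2))
    return y


def sv_derivatives(alpha, y, beta):
    """Return l'(alpha), l''(alpha) for l = -alpha/2 - y^2 exp(-alpha) / (2 beta^2)."""
    e = 0.5 * y * y * math.exp(-alpha) / beta ** 2
    return -0.5 + e, -e


def prior_precision(n, phi, sigma2):
    """Diagonal and constant off-diagonal of the stationary AR(1) precision (n >= 2)."""
    diag = [(1.0 + phi * phi) / sigma2] * n
    diag[0] = diag[-1] = 1.0 / sigma2
    return diag, -phi / sigma2


def solve_tridiagonal(diag, off, rhs):
    """Thomas algorithm for a symmetric tridiagonal system with constant off-diagonal."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0], d[0] = off / diag[0], rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - off * c[i - 1]
        c[i] = off / denom
        d[i] = (rhs[i] - off * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def sv_mode(y, phi, sigma2, beta, iterations=10):
    """Repeat: expand around alpha_hat, solve for the approximating mean, re-expand."""
    q_diag, q_off = prior_precision(len(y), phi, sigma2)
    alpha = [0.0] * len(y)  # arbitrary starting value
    for _ in range(iterations):
        derivs = [sv_derivatives(a, yt, beta) for a, yt in zip(alpha, y)]
        # V_t^{-1} = -l''; the right-hand side V^{-1} y_hat equals V^{-1} alpha_hat + l'.
        diag = [q - d2 for q, (d1, d2) in zip(q_diag, derivs)]
        rhs = [-d2 * a + d1 for a, (d1, d2) in zip(alpha, derivs)]
        alpha = solve_tridiagonal(diag, q_off, rhs)
    return alpha


def log_posterior_gradient(alpha, y, phi, sigma2, beta):
    """Gradient of -alpha' Q alpha / 2 + sum_t l(alpha_t); it vanishes at the mode."""
    n = len(y)
    q_diag, q_off = prior_precision(n, phi, sigma2)
    grad = []
    for t in range(n):
        q_alpha = q_diag[t] * alpha[t]
        if t > 0:
            q_alpha += q_off * alpha[t - 1]
        if t < n - 1:
            q_alpha += q_off * alpha[t + 1]
        grad.append(sv_derivatives(alpha[t], y[t], beta)[0] - q_alpha)
    return grad


if __name__ == "__main__":
    y = simulate_sv(200, 0.9, 0.1, 1.0, random.Random(0))
    mode = sv_mode(y, 0.9, 0.1, 1.0)
    print(max(abs(g) for g in log_posterior_gradient(mode, y, 0.9, 0.1, 1.0)))
```

The tridiagonal solve costs O(n) per pass, in line with the cost of a smoothing pass, and the size of the gradient gives a simple check on how close the iterate is to the mode.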
In fact rather than considering a block $s_{t,k}$, it is possible to use the same method to find the mode of all the signals which are not knots, $s$ say, conditional upon all the measurements and the knots, $\tilde\alpha$, by using this scheme with the GSSF set analogously to (3.5). Again this is a Newton-Raphson scheme and the overall mode $\hat s$ can be found. The resulting GSSF can then be simulated from and each block between the knots can be updated or remain the same based upon the Metropolis criteria of the following section.

3.2.5 Metropolis acceptance probability

The setup of the proposal density and the expansion around the mode has been described. I now wish to describe the way the Metropolis acceptance probabilities are constructed.

Suppose we have set up $\log g(\alpha_{t,k} \mid \alpha_{t-1}, \alpha_{t+k+1}, y_t, \dots, y_{t+k})$ as described. Then we can perform a direct Metropolis method deciding whether to retain our old values $\alpha^o_{t,k}$, with corresponding signals $s^o_{t,k}$, or to update to the proposed values $\alpha^n_{t,k}$ and $s^n_{t,k}$ drawn from $g(\cdot)$. The probability of accepting $\alpha^n_{t,k}$ is

$$
\Pr(\alpha^o_{t,k} \to \alpha^n_{t,k}) = \min\left\{ 1, \frac{\omega(s^n_{t,k})}{\omega(s^o_{t,k})} \right\}, \tag{3.6}
$$

where

$$
\omega(s_{t,k}) = \exp\{ l(s_{t,k}) - \tilde l(s_{t,k}) \} = \exp\left[ \sum_{i=t}^{t+k} \{ l(s_i) - \tilde l(s_i) \} \right].
$$

If we use accept-reject within Metropolis, as in chapter 2, of Tierney (1994), then we have the scheme that we sample $\alpha_{t,k}$ from the proposal density using the simulation smoother until accepting the proposed block with probability $\min[\omega(s_{t,k}), 1]$. When this stage of acceptance has been achieved we set $\alpha^n_{t,k} = \alpha_{t,k}$. This now forms the proposed value at the M-H stage. The M-H probability of accepting this proposal is

$$
\Pr(\alpha^o_{t,k} \to \alpha^n_{t,k}) = \min\left\{ 1, \frac{\omega_{ar}(s^n_{t,k})}{\omega_{ar}(s^o_{t,k})} \right\},
$$

where

$$
\omega_{ar}(s_{t,k}) = \exp[ l(s_{t,k}) - \min\{ \tilde l(s_{t,k}), l(s_{t,k}) \} ] = \exp[ \max\{ 0, l(s_{t,k}) - \tilde l(s_{t,k}) \} ] = \max\{ 1, \omega(s_{t,k}) \}.
$$

As observed in chapter 2, $\omega(s_{t,k})$ should be close to 1, hence resulting in high probabilities of acceptance in both stages.
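The acceptance probability (3.6) depends only on the measurement log-density and its quadratic approximation, which the following minimal Python sketch makes concrete for a block of the univariate SV model, taking $D_i = l''$. The function names and the example values are hypothetical and serve only as an illustration; the proposal draw itself (the simulation smoother) is not implemented here.

```python
import math


def sv_loglik(s, y, beta=1.0):
    """l(s) = -s/2 - y^2 exp(-s) / (2 beta^2) for the SV measurement density."""
    return -0.5 * s - 0.5 * y * y * math.exp(-s) / beta ** 2


def sv_quadratic(s, s_hat, y, beta=1.0):
    """Second order Taylor expansion of l around s_hat, i.e. l-tilde with D = l''."""
    e = 0.5 * y * y * math.exp(-s_hat) / beta ** 2
    d1, d2 = -0.5 + e, -e
    return sv_loglik(s_hat, y, beta) + (s - s_hat) * d1 + 0.5 * (s - s_hat) ** 2 * d2


def log_weight(s_block, s_hat, y_block, beta=1.0):
    """log omega(s_{t,k}) = sum_i { l(s_i) - l-tilde(s_i) } over the block."""
    return sum(
        sv_loglik(s, y, beta) - sv_quadratic(s, sh, y, beta)
        for s, sh, y in zip(s_block, s_hat, y_block)
    )


def acceptance_probability(s_new, s_old, s_hat, y_block, beta=1.0):
    """min{1, omega(s_new) / omega(s_old)} as in (3.6), computed on the log scale."""
    log_ratio = log_weight(s_new, s_hat, y_block, beta) - log_weight(s_old, s_hat, y_block, beta)
    return 1.0 if log_ratio >= 0.0 else math.exp(log_ratio)


def log_weight_ar(s_block, s_hat, y_block, beta=1.0):
    """log omega_ar = max{0, log omega}, the weight after the accept-reject stage."""
    return max(0.0, log_weight(s_block, s_hat, y_block, beta))


if __name__ == "__main__":
    s_hat = [0.1, -0.2, 0.3]   # expansion points (illustrative values)
    y = [0.5, -1.0, 2.0]       # observations in the block (illustrative values)
    print(acceptance_probability([0.2, -0.1, 0.4], [0.0, -0.3, 0.2], s_hat, y))
```

Working with log weights avoids overflow when a block is long, and the transition density never enters the calculation because it cancels in the ratio $f/g$.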
We proceed in this fashion, sampling all the blocks between the fixed knots. This defines a complete MCMC sweep through the states. The parameters are then sampled from $f(\theta \mid \alpha)$ via their conditional densities described in chapter 2. For the next sweep a fixed number of knots is again randomly chosen from the states and so the process continues.

3.3 Particular measurement densities

In this section, I will detail interesting cases of measurement densities which can be analysed via the MCMC method introduced.

3.3.1 Example 1: Exponential family measurements with canonical link

An exponential family for the measurement density arises when $\log f(y_t \mid \theta_t) = y_t \theta_t - b(\theta_t) + c(y_t)$ and we have a known function $h(\cdot)$ with $h(\theta_t) = s_t$. If a canonical link is assumed, that is $\theta_t = s_t$, then $\log f(y_t \mid s_t) = y_t s_t - b(s_t) + c(y_t)$. So

$$
v_t^{-1} = \ddot b(\hat s_t) \quad \text{and} \quad \hat y_t = \hat s_t + v_t \{ y_t - \dot b(\hat s_t) \}.
$$

The notation $\dot b(\cdot)$ and $\ddot b(\cdot)$ indicates the first and second derivatives of $b(\cdot)$ respectively. A special case of this is the Poisson model, where $b(s_t) = \exp(s_t)$ and so

$$
v_t^{-1} = \exp(\hat s_t), \qquad \hat y_t = \hat s_t + \exp(-\hat s_t) \{ y_t - \exp(\hat s_t) \}.
$$

Another important example is the binomial, where $b(\hat s_t) = n \log\{ 1 + \exp(\hat s_t) \}$. For this model

$$
v_t^{-1} = n p_t (1 - p_t), \qquad \hat y_t = \hat s_t + (y_t - n p_t) / \{ n p_t (1 - p_t) \},
$$

where $p_t = \exp(\hat s_t) / \{ 1 + \exp(\hat s_t) \}$.

3.3.2 Example 2: SV model

In this case $s_t = \alpha_t$. As seen in chapter 2, this model has $\log f(y_t \mid \alpha_t) = -\alpha_t/2 - y_t^2 \exp(-\alpha_t) / (2\beta^2)$. Thus

$$
v_t^{-1} = \frac{y_t^2}{2\beta^2} \exp(-\hat\alpha_t), \qquad \hat y_t = \hat\alpha_t + \frac{v_t}{2} \left\{ \frac{y_t^2}{\beta^2} \exp(-\hat\alpha_t) - 1 \right\}.
$$

This case is particularly interesting as $v_t$ depends on $y_t$, which cannot happen in the exponential family canonical link case. At first sight this raises some problems, for as $y_t \to 0$ so $v_t^{-1} \to 0$ and $\hat y_t \to -\infty$. This would suggest that the suggestions from the simulation smoother might always be rejected.
However, this observation ignores the role of the prior distribution, which will in effect treat such observations as missing. Of course there is a numerical overflow problem here, but that can be dealt with in a number of ways without resulting in any approximation.

3.3.3 Example 3: heavy tailed SV model

This argument can be extended to allow $\varepsilon_t$ in the SV model, see (2.4), to follow a scaled t-distribution, $\varepsilon_t = t_t / \sqrt{\nu/(\nu-2)}$, where $t_t \sim t_\nu$. Then

$$
\log f(y_t \mid \alpha_t) = -\frac{\alpha_t}{2} - \frac{\nu+1}{2} \log\left\{ 1 + \frac{y_t^2 \exp(-\alpha_t)}{\beta^2 (\nu-2)} \right\},
$$

$$
l'(\alpha_t) = \frac{1}{2} \left[ \frac{(\nu+1)\, \dfrac{y_t^2 \exp(-\alpha_t)}{\beta^2 (\nu-2)}}{1 + \dfrac{y_t^2 \exp(-\alpha_t)}{\beta^2 (\nu-2)}} - 1 \right],
$$

and

$$
l''(\alpha_t) = -\frac{\nu+1}{2} \left[ \frac{y_t^2 \exp(-\alpha_t) / \{\beta^2 (\nu-2)\}}{\left\{ 1 + \dfrac{y_t^2 \exp(-\alpha_t)}{\beta^2 (\nu-2)} \right\}^2} \right].
$$

The resulting $v_t^{-1}$ and $\hat y_t$ are easy to compute.

This approach has some advantages over the generic outlier approaches for Gaussian models suggested in Shephard (1994) and Carter and Kohn (1994), which explicitly use the mixture representation of a t-distribution, since they require mixtures in order to obtain conditionally GSS form models.

3.3.4 Example 4: factor SV model

An economically interesting factor SV model can be constructed, from the corresponding ARCH work of Diebold and Nerlove (1989) and King, Sentana and Wadhwani (1994). In the simplest univariate case it takes on the form

$$
y_t = \varepsilon_t \beta \exp(\alpha_t / 2) + \omega_t,
$$

where the model is the same as (1.4.1) but with added measurement error $\omega_t \sim \mathrm{NID}(0, \sigma_\omega^2)$. With this model it is not possible to set $\alpha_t$ so as to drive the variance of $y_t$ to zero, as it is bounded from below by $\sigma_\omega^2$. Interestingly

$$
\log f(y_t \mid \alpha_t) = -\frac{1}{2} \log\{ \beta^2 \exp(\alpha_t) + \sigma_\omega^2 \} - \frac{y_t^2}{2 \{ \beta^2 \exp(\alpha_t) + \sigma_\omega^2 \}}
$$

is not necessarily concave in $\alpha_t$. Notice that

$$
l'(\alpha_t) = \frac{\beta^2 \exp(\alpha_t)}{2 \{ \beta^2 \exp(\alpha_t) + \sigma_\omega^2 \}} \left\{ \frac{y_t^2}{\beta^2 \exp(\alpha_t) + \sigma_\omega^2} - 1 \right\}
$$

and so

$$
l''(\alpha_t) = l'(\alpha_t) - \frac{\beta^4 \exp(2\alpha_t)}{2 \{ \beta^2 \exp(\alpha_t) + \sigma_\omega^2 \}^2} \left\{ \frac{2 y_t^2}{\beta^2 \exp(\alpha_t) + \sigma_\omega^2} - 1 \right\},
$$

which can be positive if $y_t$ is small. There are a number of approaches which can be suggested to overcome this problem.
We could ignore the contribution of the $-\tfrac{1}{2} \log\{ \beta^2 \exp(\alpha_t) + \sigma_\omega^2 \}$ term to the second derivative. This would give us

$$
D_t = -\frac{y_t^2 \beta^4 \exp(2\alpha_t)}{\{ \beta^2 \exp(\alpha_t) + \sigma_\omega^2 \}^3}.
$$

This would be a good approximation if $\sigma_\omega^2$ is small or if $y_t^2$ is big. In any case the approximation does not affect the validity of the approach, only the rejection probability. A multivariate generalisation of this model is considered in chapter 7.

3.4 Illustration on SV model

To illustrate the effect of blocking I will work, for purposes of comparison, with the SV model considered in the previous two chapters. I now analyse the output from the suggested MCMC algorithm on simulated data and the real data examined in chapter 2.

3.4.1 Output of MCMC algorithms on simulated data

The simulated data allow two sets of parameters, designed to reflect typical problems for weekly and daily financial data sets. In the weekly case, $\beta = 1$, $\sigma_\eta^2 = 0.1$ and $\phi = 0.9$, while in the daily case $\beta = 1$, $\sigma_\eta^2 = 0.01$ and $\phi = 0.99$. Here I carry out the MCMC method for a simulated SV model, noting efficiency gains over the single-move algorithm of chapter 2.

Table 3.1 reports some results from a simulation using $n = 1{,}000$. The table splits into two sections. The first is concerned with estimating the states given the parameters, a pure signal extraction problem. It is clearly difficult to summarise the results for all 1,000 time periods and so I focus on the middle state, $\alpha_{500}$, in all the calculations. Extensive simulations suggest that the results reported here are representative of these general results.

The second section of Table 3.1 looks at the estimation of the states at the same time as estimating the parameters of the model. The three parameters of the SV model are drawn, conditional upon the states and measurements, in the manner described in chapter 2, Section 2.3.3. Hence for that simulation the problem is a four-fold one: estimate the states and three parameters.
The simulations are analysed using a Parzen type window, see chapter 1. The table reports the ratio of the resulting variance of the single-move sampler to the multi-move sampler. Numbers bigger than one reflect gains from using a multi-move sampler. One interpretation of the table is that if the ratio is $x$ then the single-move sampler has to be iterated $x$ times more than the multi-move sampler to achieve the same degree of precision in the estimates of interest. So if a sampler is 10 times more efficient, then it produces the same degree of accuracy from 1,000 iterations as 10,000 iterations from the inferior simulator.

For the results of Table 3.1, the multi-move sampler was run for 100,000 iterations with the bandwidth, discussed in Section 1.2.2.4, set as $B = 10{,}000$. The single move sampler was run for 1,000,000 iterations with $B = 100{,}000$. The run-in (number of iterations before the samples were recorded) was 10,000 and 100,000 for the multi-move and single move samplers respectively.

Table 3.1 indicates a number of results. In all cases the multi-move sampler outperformed the single move sampler. When the number of knots is 0, so all the states are sampled simultaneously, the gains for the weekly parameter case are not that great, whilst for the daily parameters they are substantial. This is because the Gaussian approximation is better for the daily parameters since the Gaussian AR(1) prior dominates for this persistent case. This is important, because it is for persistent cases that the single move method does particularly badly.

There are two competing considerations here. If the number of knots is large then the blocks are small and the Gaussian approximation is good since it is over low dimension and so the Metropolis method will accept frequently. On the other hand we will be retaining a lot of states from the previous MCMC sweep, leading to correlation over sweeps.
Generally, the multi-move method does not appear to be too sensitive to block size but the best number of knots for these simulations appears to be about 10. The optimal size of blocks is considered in more detail in chapter 4.

Weekly parameters
                     K=0    K=1    K=3    K=5    K=10   K=20   K=50   K=100  K=200
States|parameters    1.7    4.1    7.8    17     45     14     12     4.3    3.0
States               20     22     32     28     39     12     12     21     1.98
Parameter            1.3    1.3    1.1    1.7    2.2    1.7    1.5    1.7    1.5
Parameter            1.5    1.4    1.1    1.6    2.6    1.7    1.5    2.0    1.5
Parameter            16     14     32     14     23     6      9      10     1

Daily parameters
                     K=0    K=1    K=3    K=5    K=10   K=20   K=50   K=100  K=200
States|parameters    66     98     98     85     103    69     25     8.5    2.5
States               91     40     30     60     47     80     14     27     18
Parameter            2.7    3.1    2.9    2.8    3.4    3.8    2.6    3.6    1.8
Parameter            16     13     18     18     16     18     6.8    11     5.7
Parameter            93     51     51     76     65     106    23     27     26

Table 3.1 Relative efficiency of block sampler to single-move Gibbs sampler. K denotes the number of stochastic knots used. The figures are the ratio of the computed variances, and so reflect efficiency gains. The variances are computed using 10,000 lags and 100,000 iterations in all cases except for the single-move sampler on daily parameters cases. For that problem 100,000 lags and 1,000,000 iterations were used. In all cases the burn-in period is the same as the number of lags.

3.4.2 Output of MCMC algorithms on real data

To illustrate the effectiveness of this method I will return to the application of the SV model considered in chapter 2. I maintain exactly the same model used earlier, but now use 10 stochastic knots in the block sampler. The basic results are displayed in Figure 3.1. This was generated by using 200 iterations using the initial parameter, 300 iterations updating the parameters and states and finally recording 10,000 iterations from the equilibrium path of the sampler. The graph shares the features of Figure 2.1, with the same distribution for the parameters. However, the correlations amongst the simulations are now quite manageable. This seems a workable tool.
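The efficiency ratios of Table 3.1 compare Monte Carlo variances of sample means computed from the output of two samplers. The sketch below shows one way such a variance might be estimated with a Parzen lag window. The Parzen weights are standard, but the exact form of the estimator defined in chapter 1 is not reproduced in this chapter, so the formula used here (1 plus twice the window-weighted sum of sample autocorrelations) is an assumption, as are the function names.

```python
import random


def parzen_weight(x):
    """Parzen lag window K(x) on [0, 1]."""
    x = abs(x)
    if x <= 0.5:
        return 1.0 - 6.0 * x * x + 6.0 * x ** 3
    if x <= 1.0:
        return 2.0 * (1.0 - x) ** 3
    return 0.0


def autocovariances(x, max_lag):
    """Sample autocovariances at lags 0..max_lag (divisor n)."""
    n = len(x)
    mean = sum(x) / n
    z = [v - mean for v in x]
    return [sum(z[t] * z[t + lag] for t in range(n - lag)) / n for lag in range(max_lag + 1)]


def inefficiency_factor(x, bandwidth):
    """Assumed estimator: 1 + 2 * sum_{i=1}^{B} K(i / B) * rho_i."""
    gamma = autocovariances(x, bandwidth)
    total = 1.0
    for lag in range(1, bandwidth + 1):
        total += 2.0 * parzen_weight(lag / bandwidth) * gamma[lag] / gamma[0]
    return total


def monte_carlo_variance(x, bandwidth):
    """Estimated variance of the sample mean of a correlated chain."""
    gamma0 = autocovariances(x, 0)[0]
    return gamma0 * inefficiency_factor(x, bandwidth) / len(x)


if __name__ == "__main__":
    rng = random.Random(0)
    independent = [rng.gauss(0.0, 1.0) for _ in range(10000)]
    correlated = [0.0]
    for _ in range(9999):
        correlated.append(0.9 * correlated[-1] + rng.gauss(0.0, 1.0))
    print(inefficiency_factor(independent, 100), inefficiency_factor(correlated, 100))
```

Under this assumption, a relative efficiency of the kind reported in Table 3.1 would be the ratio of `monte_carlo_variance` for the single-move output to that for the multi-move output, after scaling both to the same number of iterations.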
[Figure 3.1: three columns of panels titled "Sampling phi | y", "Sampling beta | y" and "Sampling sigma_eta | y"; the bottom row of panels is titled "Correlogram".]

Figure 3.1 Daily returns for the Pound against the US Dollar. Top graphs: the simulation against iteration number for the block sampler using 10 knots. Middle graphs: histograms of the resulting marginal distributions. Bottom graphs: the corresponding correlogram for the iterations.

It is often useful to report the precision of the results from the simulation estimation. Here I use the Parzen type window of chapter 1 using 1,000 lags. Note that this estimates the integrated autocorrelation time less well as the autocorrelation increases. The results given in Table 3.2 are consistent with those given in Table 2.1, for the 1,000,000 iterations of the single-move algorithm. However, the precision achieved with the 1,000,000 iterations is broadly the same as that achieved by 10,000 iterations from the multi-move sampler.

                 Mean      Monte Carlo S.E.   Covariance & Correlation of Posterior
phi | y          0.9802    0.000734            0.000105   -0.689      0.294
sigma_eta | y    0.1431    0.00254            -0.000198    0.000787  -0.178
beta | y         0.6589    0.0100              0.000273   -0.000452   0.00823
Computer time    322

Table 3.2 Daily returns for Pound against Dollar. Summaries of Figure 3.1, 10,000 replications of the multi-move sampler, using 10 stochastic knots. The Standard Error of the simulation is computed using 1,000 lags. The figures above the leading diagonal are correlations. Computer time is in seconds on a P5/133.

3.5 Conclusions

The methods and results indicate that the single-move algorithms, for example those of chapter 2, are likely to be unreliable for real applications due to their slow convergence and high correlation properties.
Instead I argue that the development of Taylor expansion based multi-move simulation smoothing algorithms can deliver reliable methods. The basis for the proposals in this chapter is a set of approximations to the conditional densities which lead to the so-called independence Metropolis samplers. The resulting MCMC methods have five basic advantages.

(1) They integrate into the analysis of non-Gaussian models the role of the Kalman filter and simulation smoother, so fully exploiting the structure of the model to improve the speed of the methods.
(2) The central expansion in the chapter has been previously used in various approximate methods suggested in the time series and spline literatures (but not in this context).
(3) The expansion and the Metropolis ratio only use $\log f(y_t \mid s_t)$ and its approximation, so as the dimension of the state increases the computational efficiency of the method should not diminish significantly.
(4) The methods are only of $O(n)$, the time dimension, regardless of the number of knots (indeed the methods are constant in speed for different numbers of knots).
(5) The methods can be straightforwardly extended to many multivariate cases, as seen in chapter 7.

It is clear from the results of this chapter that the efficiency gains resulting from the use of multi-move samplers are considerable. Some theoretical justification for the use of multi-move samplers rather than single move samplers is provided in chapter 4. In addition, the role of the parameters indexing the non-Gaussian state space model has been largely suppressed during this chapter. In fact, it is often the case that the parameters are of central interest (often more so than the states in econometric applications). Issues relating to the choice among equivalent parameterisations are discussed in the following chapter.