E¢ cient Implementation of MCMC When Using An Unbiased Likelihood Estimator

advertisement

E¢ cient Implementation of MCMC When Using An

Unbiased Likelihood Estimator

Michael Pitt

University of Oxford

Joint work with A. Doucet, G. Deligiannidis & R. Kohn

Cambridge, 22/04/14

(Cambridge, 22/04/14)

1 / 26

Organisation of the Talk

1

MCMC with intractable likehood functions.

In physics, …rst appeared in Lin, Liu & Sloan (2000). In statistics,

Beaumont (2003), Andrieu, Berthelesen, D., Roberts (2006), Andrieu

& Roberts (2009).

(Cambridge, 22/04/14)

2 / 26

Organisation of the Talk

1

MCMC with intractable likehood functions.

In physics, …rst appeared in Lin, Liu & Sloan (2000). In statistics,

Beaumont (2003), Andrieu, Berthelesen, D., Roberts (2006), Andrieu

& Roberts (2009).

2

Ine¢ ciency of the MCMC scheme with likelihood estimator against

known likelihood.

(Cambridge, 22/04/14)

2 / 26

Organisation of the Talk

1

MCMC with intractable likehood functions.

In physics, …rst appeared in Lin, Liu & Sloan (2000). In statistics,

Beaumont (2003), Andrieu, Berthelesen, D., Roberts (2006), Andrieu

& Roberts (2009).

2

Ine¢ ciency of the MCMC scheme with likelihood estimator against

known likelihood.

3

Guidelines on an optimal precision for the estimator of the

log-likelihood.

(Cambridge, 22/04/14)

2 / 26

Bayesian Inference

Likelihood function p (y ; θ ) where θ 2 Θ

(Cambridge, 22/04/14)

Rd .

3 / 26

Bayesian Inference

Likelihood function p (y ; θ ) where θ 2 Θ

Rd .

Prior distribution of density p (θ ) .

(Cambridge, 22/04/14)

3 / 26

Bayesian Inference

Likelihood function p (y ; θ ) where θ 2 Θ

Rd .

Prior distribution of density p (θ ) .

Bayesian inference relies on the posterior

π (θ ) = p ( θ j y ) = R

(Cambridge, 22/04/14)

p (y ; θ ) p ( θ )

.

p

y ; θ0 p θ0 d θ0

Θ

3 / 26

Bayesian Inference

Likelihood function p (y ; θ ) where θ 2 Θ

Rd .

Prior distribution of density p (θ ) .

Bayesian inference relies on the posterior

π (θ ) = p ( θ j y ) = R

p (y ; θ ) p ( θ )

.

p

y ; θ0 p θ0 d θ0

Θ

For non-trivial models, inference relies typically on MCMC.

(Cambridge, 22/04/14)

3 / 26

Metropolis-Hastings algorithm

Set ϑ(0 ) and iterate for i = 1, 2, ...

(Cambridge, 22/04/14)

4 / 26

Metropolis-Hastings algorithm

Set ϑ(0 ) and iterate for i = 1, 2, ...

1

Sample ϑ

q

(Cambridge, 22/04/14)

j ϑ (i

1)

.

4 / 26

Metropolis-Hastings algorithm

Set ϑ(0 ) and iterate for i = 1, 2, ...

1

Sample ϑ

2

Compute

q

j ϑ (i

α = 1^

(Cambridge, 22/04/14)

1)

.

q ϑ (i

p (y ; ϑ ) p ( ϑ )

p y ; ϑ (i

1)

p ϑ (i

1)

1)

q ϑ j ϑ (i

ϑ

1)

.

4 / 26

Metropolis-Hastings algorithm

Set ϑ(0 ) and iterate for i = 1, 2, ...

1

Sample ϑ

2

Compute

q

j ϑ (i

α = 1^

3

1)

.

q ϑ (i

p (y ; ϑ ) p ( ϑ )

p y ; ϑ (i

1)

p ϑ (i

1)

q ϑ j ϑ (i

With probability α, set ϑ(i ) := ϑ and ϑ(i ) := ϑ(i

(Cambridge, 22/04/14)

1)

1)

ϑ

1)

.

otherwise.

4 / 26

Intractable Likelihood Function

In numerous scenarios, p (y ; θ ) cannot be evaluated pointwise; e.g.

p (y ; θ ) =

Z

p (x, y ; θ ) dx

where the integral cannot be evaluated.

(Cambridge, 22/04/14)

5 / 26

Intractable Likelihood Function

In numerous scenarios, p (y ; θ ) cannot be evaluated pointwise; e.g.

p (y ; θ ) =

Z

p (x, y ; θ ) dx

where the integral cannot be evaluated.

A standard “solution” consists of using MCMC to sample from

p ( θ, x j y ) =

p (x, y ; θ ) p (θ )

p (y )

by updating iterately x and θ.

(Cambridge, 22/04/14)

5 / 26

Intractable Likelihood Function

In numerous scenarios, p (y ; θ ) cannot be evaluated pointwise; e.g.

p (y ; θ ) =

Z

p (x, y ; θ ) dx

where the integral cannot be evaluated.

A standard “solution” consists of using MCMC to sample from

p ( θ, x j y ) =

p (x, y ; θ ) p (θ )

p (y )

by updating iterately x and θ.

Gibbs sampling strategies can be slow mixing and di¢ cult to put in

practice.

(Cambridge, 22/04/14)

5 / 26

MCMC with Intractable Likelihood Function

Let p

b (y ; θ, U ) be an unbiased non-negative estimator of the

likelihood where U mθ ( ); i.e.

p (y ; θ ) =

(Cambridge, 22/04/14)

Z

U

p

b (y ; θ, u ) mθ (u ) du.

6 / 26

MCMC with Intractable Likelihood Function

Let p

b (y ; θ, U ) be an unbiased non-negative estimator of the

likelihood where U mθ ( ); i.e.

p (y ; θ ) =

Z

U

p

b (y ; θ, u ) mθ (u ) du.

Introduce a target distribution on Θ

π (θ, u ) = π (θ )

U of density

p

b (y ; θ, u )

p (θ ) p

b (y ; θ, u ) mθ (u )

mθ (u ) =

p (y ; θ )

p (y )

then unbiasedness yields

Z

(Cambridge, 22/04/14)

U

π (θ, u ) du = π (θ )

6 / 26

MCMC with Intractable Likelihood Function

Let p

b (y ; θ, U ) be an unbiased non-negative estimator of the

likelihood where U mθ ( ); i.e.

p (y ; θ ) =

Z

U

p

b (y ; θ, u ) mθ (u ) du.

Introduce a target distribution on Θ

π (θ, u ) = π (θ )

U of density

p

b (y ; θ, u )

p (θ ) p

b (y ; θ, u ) mθ (u )

mθ (u ) =

p (y ; θ )

p (y )

then unbiasedness yields

Z

U

π (θ, u ) du = π (θ )

Any MCMC algorithm sampling from π (θ, u ) yields samples from

π ( θ ).

(Cambridge, 22/04/14)

6 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

Set ϑ(0 ) , U (0 )

(Cambridge, 22/04/14)

and iterate for i = 1, 2, ...

7 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

1

Set ϑ(0 ) , U (0 )

and iterate for i = 1, 2, ...

Sample ϑ

j ϑ (i

q

(Cambridge, 22/04/14)

1)

,U

mϑ ( ) to obtain p

b (y ; ϑ, U ).

7 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

Set ϑ(0 ) , U (0 )

and iterate for i = 1, 2, ...

1

Sample ϑ

j ϑ (i

2

Compute

q

α = 1^

(Cambridge, 22/04/14)

1)

,U

p

b (y ; ϑ, U )

p

b y ; ϑ (i

1)

, U (i

mϑ ( ) to obtain p

b (y ; ϑ, U ).

p (ϑ )

1)

p ϑ (i

1)

q ϑ (i

1)

q ϑ j ϑ (i

ϑ

1)

7 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

Set ϑ(0 ) , U (0 )

and iterate for i = 1, 2, ...

1

Sample ϑ

j ϑ (i

2

Compute

q

α = 1^

3

1)

,U

p

b (y ; ϑ, U )

p

b y ; ϑ (i

1)

, U (i

mϑ ( ) to obtain p

b (y ; ϑ, U ).

p (ϑ )

1)

p ϑ (i

With proba α, set ϑ(i ) , p

b y ; ϑ (i ) , U (i )

where you are otherwise.

(Cambridge, 22/04/14)

1)

q ϑ (i

1)

q ϑ j ϑ (i

ϑ

1)

:= (ϑ, p

b (y ; ϑ, U )) and stay

7 / 26

Importance Sampling Estimator

For latent variable models, one has

p (y ; θ ) =

Z

p (x, y ; θ ) dx =

Z

p (x, y ; θ )

qθ (x )dx

qθ (x )

where qθ (x ) is an importance sampling density.

(Cambridge, 22/04/14)

8 / 26

Importance Sampling Estimator

For latent variable models, one has

p (y ; θ ) =

Z

p (x, y ; θ ) dx =

Z

p (x, y ; θ )

qθ (x )dx

qθ (x )

where qθ (x ) is an importance sampling density.

An unbiased estimator is given by

p

b(y ; θ, U ) =

(Cambridge, 22/04/14)

1

N

p Xk, y; θ

∑ qθ (X k ) ,

k =1

N

Xk

i.i.d.

qθ ( )

8 / 26

Importance Sampling Estimator

For latent variable models, one has

p (y ; θ ) =

Z

p (x, y ; θ ) dx =

Z

p (x, y ; θ )

qθ (x )dx

qθ (x )

where qθ (x ) is an importance sampling density.

An unbiased estimator is given by

p

b(y ; θ, U ) =

1

N

p Xk, y; θ

∑ qθ (X k ) ,

k =1

N

Here U := X 1 , ..., X N and mθ (u ) =

(Cambridge, 22/04/14)

Xk

∏ k =1 qθ

N

i.i.d.

qθ ( )

xk .

8 / 26

Importance Sampling Estimator

For latent variable models, one has

p (y ; θ ) =

Z

p (x, y ; θ ) dx =

Z

p (x, y ; θ )

qθ (x )dx

qθ (x )

where qθ (x ) is an importance sampling density.

An unbiased estimator is given by

p

b(y ; θ, U ) =

1

N

p Xk, y; θ

∑ qθ (X k ) ,

k =1

N

Here U := X 1 , ..., X N and mθ (u ) =

Xk

∏ k =1 qθ

N

i.i.d.

qθ ( )

xk .

Whatever being N 1, the pseudo-marginal MH admits π (θ ) as

invariant distribution.

(Cambridge, 22/04/14)

8 / 26

Sequential Monte Carlo Estimator

fXt gt 0 is a X-valued latent Markov process with X0

Xt +1 jXt

f ( j Xt ; θ ).

(Cambridge, 22/04/14)

µ( ; θ ) and

9 / 26

Sequential Monte Carlo Estimator

fXt gt 0 is a X-valued latent Markov process with X0 µ( ; θ ) and

Xt +1 jXt

f ( j Xt ; θ ).

Observations fYt gt 1 are conditionally independent given fXt gt 0

with Yt j fXk gk 0 g ( jXt , θ ).

(Cambridge, 22/04/14)

9 / 26

Sequential Monte Carlo Estimator

fXt gt 0 is a X-valued latent Markov process with X0 µ( ; θ ) and

Xt +1 jXt

f ( j Xt ; θ ).

Observations fYt gt 1 are conditionally independent given fXt gt 0

with Yt j fXk gk 0 g ( jXt , θ ).

Likelihood of y1:T = (y1 , ..., yT ) is

p (y1:T ; θ ) =

(Cambridge, 22/04/14)

Z

XT +1

p (x0:T , y1:T ; θ )dx0:T .

9 / 26

Sequential Monte Carlo Estimator

fXt gt 0 is a X-valued latent Markov process with X0 µ( ; θ ) and

Xt +1 jXt

f ( j Xt ; θ ).

Observations fYt gt 1 are conditionally independent given fXt gt 0

with Yt j fXk gk 0 g ( jXt , θ ).

Likelihood of y1:T = (y1 , ..., yT ) is

p (y1:T ; θ ) =

Z

XT +1

p (x0:T , y1:T ; θ )dx0:T .

SMC provides an unbiased estimator of relative variance O (T /N )

where N is the number of particles.

(Cambridge, 22/04/14)

9 / 26

Sequential Monte Carlo Estimator

fXt gt 0 is a X-valued latent Markov process with X0 µ( ; θ ) and

Xt +1 jXt

f ( j Xt ; θ ).

Observations fYt gt 1 are conditionally independent given fXt gt 0

with Yt j fXk gk 0 g ( jXt , θ ).

Likelihood of y1:T = (y1 , ..., yT ) is

p (y1:T ; θ ) =

Z

XT +1

p (x0:T , y1:T ; θ )dx0:T .

SMC provides an unbiased estimator of relative variance O (T /N )

where N is the number of particles.

Whatever being N 1, the pseudo-marginal MH admits π (θ ) as

invariant distribution.

(Cambridge, 22/04/14)

9 / 26

A Nonlinear State-Space Model

Standard non-linear model

Xt =

1

2 Xt 1

Yt =

1

2

20 Xt

(Cambridge, 22/04/14)

+ 25 1 +XXt 21 + 8 cos(1.2t ) + Vt ,

+ Wt ,

t 1

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2V ,

N 0, σ2W .

10 / 26

A Nonlinear State-Space Model

Standard non-linear model

Xt =

1

2 Xt 1

Yt =

1

2

20 Xt

+ 25 1 +XXt 21 + 8 cos(1.2t ) + Vt ,

+ Wt ,

t 1

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2V ,

N 0, σ2W .

T = 200 data points with θ = σ2V , σ2W = (10, 10).

(Cambridge, 22/04/14)

10 / 26

A Nonlinear State-Space Model

Standard non-linear model

Xt =

1

2 Xt 1

Yt =

1

2

20 Xt

+ 25 1 +XXt 21 + 8 cos(1.2t ) + Vt ,

+ Wt ,

t 1

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2V ,

N 0, σ2W .

T = 200 data points with θ = σ2V , σ2W = (10, 10).

Di¢ cult to perform standard MCMC as p ( x1:T j y1:T , θ ) is highly

multimodal.

(Cambridge, 22/04/14)

10 / 26

A Nonlinear State-Space Model

Standard non-linear model

Xt =

1

2 Xt 1

Yt =

1

2

20 Xt

+ 25 1 +XXt 21 + 8 cos(1.2t ) + Vt ,

+ Wt ,

t 1

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2V ,

N 0, σ2W .

T = 200 data points with θ = σ2V , σ2W = (10, 10).

Di¢ cult to perform standard MCMC as p ( x1:T j y1:T , θ ) is highly

multimodal.

We sample from p ( θ j y1:T ) using a random walk pseudo-marginal

MH where p (y1:T ; θ ) is estimated using SMC with N particles.

(Cambridge, 22/04/14)

10 / 26

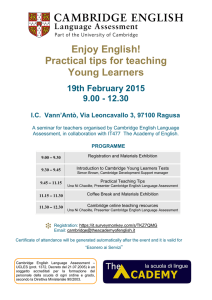

A Nonlinear State-Space Model

σ

σ

V

1.0

N=96, T=200

W

σ

σ

V

W

N=96, T=200

3

0.5

2

0

σ

200

V

σ

400

600

800

1000

N=600, T=200

W

0.0

1.0

0

100

200

σ

3

300

σ

V

W

400

500

N=600, T=200

0.5

2

0.0

0

200

σ

400

σ

V

600

800

0

1000

50

100

150

200

N=6667, T=200

W

1.0

3

σ

σ

V

W

N=6667, T=200

0.5

2

0

200

400

600

Figure: Autocorrelation of

(Cambridge, 22/04/14)

800

n

(i )

σV

1000

o

0.0

and

0

n

(i )

σW

50

o

100

150

200

of the MH sampler for various N.

11 / 26

How to Select the Number of Samples

A key issue from a practical point of view is how to select N?

(Cambridge, 22/04/14)

12 / 26

How to Select the Number of Samples

A key issue from a practical point of view is how to select N?

If N is too small, then the algorithm mixes poorly and will require

many MCMC iterations.

(Cambridge, 22/04/14)

12 / 26

How to Select the Number of Samples

A key issue from a practical point of view is how to select N?

If N is too small, then the algorithm mixes poorly and will require

many MCMC iterations.

If N is too large, then each MCMC iteration is expensive.

(Cambridge, 22/04/14)

12 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

Consider the error in the log-likelihood estimator

Z = log p

b (y ; θ, U )

(Cambridge, 22/04/14)

log p (y ; θ )

gθ ( )

13 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

Consider the error in the log-likelihood estimator

Z = log p

b (y ; θ, U )

log p (y ; θ )

gθ ( )

In the (θ, Z ) parameterization, the target is

π (θ, u ) = π (θ )

(Cambridge, 22/04/14)

p

b (y ; θ, u )

mθ (u ) ) π (θ, z ) = π (θ ) exp(z )gθ (z ).

p (y ; θ )

13 / 26

Pseudo-Marginal Metropolis-Hastings algorithm

Consider the error in the log-likelihood estimator

Z = log p

b (y ; θ, U )

log p (y ; θ )

gθ ( )

In the (θ, Z ) parameterization, the target is

π (θ, u ) = π (θ )

p

b (y ; θ, u )

mθ (u ) ) π (θ, z ) = π (θ ) exp(z )gθ (z ).

p (y ; θ )

The pseudo-marginal MH algorithm is

Q f(θ, z ) , (d ϑ, dw )g = q (ϑ jθ )gϑ (w ) min f1, r (θ, ϑ ) exp (w

+ 1

z )g d ϑdw

$Q (θ, z ) δ(θ,z ) (d ϑ, dw )

where r (θ, ϑ ) = π (ϑ )q (θ jϑ )/ fπ (θ )q (ϑ jθ )g .

(Cambridge, 22/04/14)

13 / 26

Ine¢ ciency Measure

Consider the Markov chain fθ i , Zi g of transition Q with invariant

distribution π (θ, z ) and h : Θ ! R. De…ne

π (h) := Eπ [h(θ )]

(Cambridge, 22/04/14)

and

b n (h ) = n

π

1

n

∑ h ( θ i ).

i =1

14 / 26

Ine¢ ciency Measure

Consider the Markov chain fθ i , Zi g of transition Q with invariant

distribution π (θ, z ) and h : Θ ! R. De…ne

π (h) := Eπ [h(θ )]

and

b n (h ) = n

π

1

n

∑ h ( θ i ).

i =1

Proposition (KV 1986). If fθ i , Zi g is stationary and ergodic,

Vπ [h(θ )] < ∞ and

IFhQ := 1 + 2

∞

∑ corrπ,Q fh (θ 0 ) , h (θ τ )g

τ =1

then

p

b n (h )

n fπ

(Cambridge, 22/04/14)

π (h)g ! N 0, Vπ [h(θ )] IFhQ .

14 / 26

Ine¢ ciency Measure

Consider the Markov chain fθ i , Zi g of transition Q with invariant

distribution π (θ, z ) and h : Θ ! R. De…ne

π (h) := Eπ [h(θ )]

and

b n (h ) = n

π

1

n

∑ h ( θ i ).

i =1

Proposition (KV 1986). If fθ i , Zi g is stationary and ergodic,

Vπ [h(θ )] < ∞ and

IFhQ := 1 + 2

∞

∑ corrπ,Q fh (θ 0 ) , h (θ τ )g

τ =1

then

p

b n (h )

n fπ

π (h)g ! N 0, Vπ [h(θ )] IFhQ .

The Integrated Autocorrelation Time IFhQ is a measure of

ine¢ ciency of Q which we want to minimize for a …xed

computational budget.

(Cambridge, 22/04/14)

14 / 26

Aim of the Analysis

Simplifying Assumption: The noise Z is independent of θ and

Gaussian; i.e. Z

N

σ2 /2; σ2 :

π (θ, z ) = π (θ ) exp (z ) g (z ) = π (θ ) N z; σ2 /2; σ2 .

|

{z

}

π Z (z )

(Cambridge, 22/04/14)

15 / 26

Aim of the Analysis

Simplifying Assumption: The noise Z is independent of θ and

Gaussian; i.e. Z

N

σ2 /2; σ2 :

π (θ, z ) = π (θ ) exp (z ) g (z ) = π (θ ) N z; σ2 /2; σ2 .

|

{z

}

π Z (z )

Aim: Minimize the computational cost

CThQ (σ ) = IFhQ (σ) /σ2

as σ2 ∝ 1/N and computational e¤orts proportional to N.

(Cambridge, 22/04/14)

15 / 26

Aim of the Analysis

Simplifying Assumption: The noise Z is independent of θ and

Gaussian; i.e. Z

N

σ2 /2; σ2 :

π (θ, z ) = π (θ ) exp (z ) g (z ) = π (θ ) N z; σ2 /2; σ2 .

|

{z

}

π Z (z )

Aim: Minimize the computational cost

CThQ (σ ) = IFhQ (σ) /σ2

as σ2 ∝ 1/N and computational e¤orts proportional to N.

Special cases:

(Cambridge, 22/04/14)

15 / 26

Aim of the Analysis

Simplifying Assumption: The noise Z is independent of θ and

Gaussian; i.e. Z

N

σ2 /2; σ2 :

π (θ, z ) = π (θ ) exp (z ) g (z ) = π (θ ) N z; σ2 /2; σ2 .

|

{z

}

π Z (z )

Aim: Minimize the computational cost

CThQ (σ ) = IFhQ (σ) /σ2

as σ2 ∝ 1/N and computational e¤orts proportional to N.

Special cases:

1

When q (ϑ jθ ) = p ( ϑ j y ), σopt = 0.92 (Pitt et al., 2012).

(Cambridge, 22/04/14)

15 / 26

Aim of the Analysis

Simplifying Assumption: The noise Z is independent of θ and

Gaussian; i.e. Z

N

σ2 /2; σ2 :

π (θ, z ) = π (θ ) exp (z ) g (z ) = π (θ ) N z; σ2 /2; σ2 .

|

{z

}

π Z (z )

Aim: Minimize the computational cost

CThQ (σ ) = IFhQ (σ) /σ2

as σ2 ∝ 1/N and computational e¤orts proportional to N.

Special cases:

1

When q (ϑ jθ ) = p ( ϑ j y ), σopt = 0.92 (Pitt et al., 2012).

2

When π (θ ) =

d

∏ f (θ i ) and q (ϑjθ ) is an isotropic Gaussian random

i =1

walk then, as d ! ∞, σopt = 1.81 (Sherlock et al., 2013).

(Cambridge, 22/04/14)

15 / 26

Sketch of the Analysis

For general proposals and targets, direct minimization of

CThQ (σ) = IFhQ (σ) /σ2 impossible so minimize an upper bound over

it.

(Cambridge, 22/04/14)

16 / 26

Sketch of the Analysis

For general proposals and targets, direct minimization of

CThQ (σ) = IFhQ (σ) /σ2 impossible so minimize an upper bound over

it.

We introduce an auxiliary π (θ, z ) reversible kernel

Q f(θ, z ) , (d ϑ, dw )g = q (ϑ jθ )g (w )αEX (θ, ϑ ) αZ (z, w ) d ϑdw

+ f1

$EX (θ ) $Z (z )g δ(θ,z ) (d ϑ, dw )

where

αEX (θ, ϑ ) = min f1, r (θ, ϑ )g , αZ (z, w ) = min f1, exp (w

(Cambridge, 22/04/14)

z )g

16 / 26

Sketch of the Analysis

For general proposals and targets, direct minimization of

CThQ (σ) = IFhQ (σ) /σ2 impossible so minimize an upper bound over

it.

We introduce an auxiliary π (θ, z ) reversible kernel

Q f(θ, z ) , (d ϑ, dw )g = q (ϑ jθ )g (w )αEX (θ, ϑ ) αZ (z, w ) d ϑdw

+ f1

$EX (θ ) $Z (z )g δ(θ,z ) (d ϑ, dw )

where

αEX (θ, ϑ ) = min f1, r (θ, ϑ )g , αZ (z, w ) = min f1, exp (w

Peskun’s theorem (1973) guarantees that IFhQ (σ)

CThQ (σ) CThQ (σ).

(Cambridge, 22/04/14)

z )g

IFhQ (σ) so that

16 / 26

A Bounding Chain

The Q chain can be interpreted in the following two step procedure

(Cambridge, 22/04/14)

17 / 26

A Bounding Chain

The Q chain can be interpreted in the following two step procedure

Let (θ, Z ) be the current state of the Markov chain.

(Cambridge, 22/04/14)

17 / 26

A Bounding Chain

The Q chain can be interpreted in the following two step procedure

Let (θ, Z ) be the current state of the Markov chain.

1

Propose ϑ

(Cambridge, 22/04/14)

q (ϑ jθ ) and w

g ( ; ϑ ).

17 / 26

A Bounding Chain

The Q chain can be interpreted in the following two step procedure

Let (θ, Z ) be the current state of the Markov chain.

q (ϑ jθ ) and w

g ( ; ϑ ).

1

Propose ϑ

2

Accept ϑ w.p. αEX (θ; ϑ ) = 1 ^

(Cambridge, 22/04/14)

π (ϑ ) q ( θ jϑ )

.

π (θ ) q ( ϑ jθ )

17 / 26

A Bounding Chain

The Q chain can be interpreted in the following two step procedure

Let (θ, Z ) be the current state of the Markov chain.

q (ϑ jθ ) and w

g ( ; ϑ ).

1

Propose ϑ

2

Accept ϑ w.p. αEX (θ; ϑ ) = 1 ^

3

π (ϑ ) q ( θ jϑ )

.

π (θ ) q ( ϑ jθ )

Accept w w.p. αZ (z; w ) = 1 ^ exp(w

(Cambridge, 22/04/14)

z ).

17 / 26

A Bounding Chain

The Q chain can be interpreted in the following two step procedure

Let (θ, Z ) be the current state of the Markov chain.

q (ϑ jθ ) and w

g ( ; ϑ ).

1

Propose ϑ

2

Accept ϑ w.p. αEX (θ; ϑ ) = 1 ^

3

4

π (ϑ ) q ( θ jϑ )

.

π (θ ) q ( ϑ jθ )

Accept w w.p. αZ (z; w ) = 1 ^ exp(w

z ).

(ϑ, w ) is accepted if and only if there is acceptance in both criteria.

(Cambridge, 22/04/14)

17 / 26

Sketch of the Analysis

Let (θ i , Zi )i

1

(Cambridge, 22/04/14)

be generated by Q .

18 / 26

Sketch of the Analysis

Let (θ i , Zi )i

1

ei

Denote e

θi , Z

be generated by Q .

i 1

sojourn times; i.e.

the accepted proposals and (τ i )i

e

e1 = (θ 1 , Z1 ) =

θ1 , Z

e

e2 = (θ τ1+1 , Zτ1 +1 ) =

θ2 , Z

e

ei +1 6= e

ei

θ i +1 , Z

θi , Z

(Cambridge, 22/04/14)

1

the associated

= ( θ τ 1 , Zτ 1 ),

= (θ τ2 , Zτ2 ) and so on where

a.s.

18 / 26

Sketch of the Analysis

Let (θ i , Zi )i

1

ei

Denote e

θi , Z

be generated by Q .

i 1

sojourn times; i.e.

the accepted proposals and (τ i )i

e

e1 = (θ 1 , Z1 ) =

θ1 , Z

e

e2 = (θ τ1+1 , Zτ1 +1 ) =

θ2 , Z

e

ei +1 6= e

ei

θ i +1 , Z

θi , Z

the associated

= ( θ τ 1 , Zτ 1 ),

= (θ τ2 , Zτ2 ) and so on where

a.s.

Q

IFhQ (σ) can be re-expressed in terms of IFh/J($

QJ f(θ, z ) , (d ϑ, dw )g =

(Cambridge, 22/04/14)

1

EX $Z )

(σ) where

q (d ϑ jθ )αEX (θ, ϑ ) g (dw )αZ (z, w )

$EX (θ )

$Z (z )

18 / 26

Sketch of the Analysis

Let (θ i , Zi )i

1

ei

Denote e

θi , Z

be generated by Q .

i 1

sojourn times; i.e.

the accepted proposals and (τ i )i

e

e1 = (θ 1 , Z1 ) =

θ1 , Z

e

e2 = (θ τ1+1 , Zτ1 +1 ) =

θ2 , Z

e

ei +1 6= e

ei

θ i +1 , Z

θi , Z

1

the associated

= ( θ τ 1 , Zτ 1 ),

= (θ τ2 , Zτ2 ) and so on where

a.s.

Q

IFhQ (σ) can be re-expressed in terms of IFh/J($

QJ f(θ, z ) , (d ϑ, dw )g =

EX $Z )

(σ) where

q (d ϑ jθ )αEX (θ, ϑ ) g (dw )αZ (z, w )

$EX (θ )

$Z (z )

Proof exploits spectral representations of these kernels + Positivity of

QJZ (z, dw ).

(Cambridge, 22/04/14)

18 / 26

Main Results

Proposition:

IFhQ (σ)

IFhEX

IFhQ (σ)

IFhEX

1

2

1+

1

IFhEX

1 + IF Z (σ)

1

IFhEX

and the bound is tight as IFhEX ! 1 or σ ! 0.

As IFJEX

! ∞,

,h/$

EX

IFhQ (σ)

1

! σ

.

EX

π Z ($Z (σ))

IFh

(Cambridge, 22/04/14)

19 / 26

Main Results

Proposition:

IFhQ (σ)

IFhEX

IFhQ (σ)

IFhEX

1

2

1+

1

IFhEX

1 + IF Z (σ)

1

IFhEX

and the bound is tight as IFhEX ! 1 or σ ! 0.

As IFJEX

! ∞,

,h/$

EX

IFhQ (σ)

1

! σ

.

EX

π Z ($Z (σ))

IFh

Results used to minimize w.r.t σ upper bounds on

CThQ (σ) = IFhQ (σ) /σ2 .

(Cambridge, 22/04/14)

19 / 26

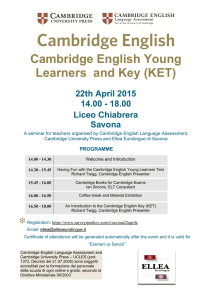

Bounds on Relative Computational Costs

30

R

R

R

R

20

C

C

C

C

T

T

T

T

against

σ

, IFEX=4

EX

,

I

F

=

40

h

Z

(2 )

h

(2 )

R C T h (2 ), I F E X = 2

R C T h (2 ), I F E X = 1 0

R C T h (2 ), I F E X = 1 0 6

10

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

1.1

1.2

1.3

1.4

1.5

1.6

1.7

1.8

1.9

2

1.3

1.4

1.5

1.6

1.7

1.8

1.9

2

30

R C T Z against

σ

R C T h (3 ), I F JE X = 4

R C T h (3 ), I F JE X = 4 0

RCT

20

R C T h (3 ), I F J E X = 2

R C T h (3 ), I F J E X = 1 0

R C T h (3 ), I F J E X = 1 0 6

10

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

1.1

1.2

Figure: Bounds on IFhQ (σ) / σ2 IFhEX

(Cambridge, 22/04/14)

20 / 26

Practical Guidelines

For good proposals, select σ

σ 1.7.

(Cambridge, 22/04/14)

1 whereas for poor proposals, select

21 / 26

Practical Guidelines

For good proposals, select σ

σ 1.7.

1 whereas for poor proposals, select

When you have no clue about the proposal e¢ ciency,

(Cambridge, 22/04/14)

21 / 26

Practical Guidelines

For good proposals, select σ

σ 1.7.

1 whereas for poor proposals, select

When you have no clue about the proposal e¢ ciency,

1

If σopt = 1 and you pick σ = 1.7, computing time increases by

150%.

(Cambridge, 22/04/14)

21 / 26

Practical Guidelines

For good proposals, select σ

σ 1.7.

1 whereas for poor proposals, select

When you have no clue about the proposal e¢ ciency,

1

If σopt = 1 and you pick σ = 1.7, computing time increases by

150%.

2

If σopt = 1.7 and you pick σ = 1, computing time increases by

(Cambridge, 22/04/14)

50%.

21 / 26

Practical Guidelines

For good proposals, select σ

σ 1.7.

1 whereas for poor proposals, select

When you have no clue about the proposal e¢ ciency,

1

If σopt = 1 and you pick σ = 1.7, computing time increases by

150%.

2

If σopt = 1.7 and you pick σ = 1, computing time increases by

3

If σopt = 1 or σopt = 1.7 and you pick σ = 1.2, computing time

increases by 15%.

(Cambridge, 22/04/14)

50%.

21 / 26

Example: Noisy Autoregressive Example

Consider

Xt = µ(1

φ) + φXt + Vt ,

Yt = Xt + Wt ,

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2η ,

N 0, σ2ε ,

where θ = φ, µ, σ2η .

(Cambridge, 22/04/14)

22 / 26

Example: Noisy Autoregressive Example

Consider

Xt = µ(1

φ) + φXt + Vt ,

Yt = Xt + Wt ,

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2η ,

N 0, σ2ε ,

where θ = φ, µ, σ2η .

Likelihood can be computed exactly using Kalman.

(Cambridge, 22/04/14)

22 / 26

Example: Noisy Autoregressive Example

Consider

Xt = µ(1

φ) + φXt + Vt ,

Yt = Xt + Wt ,

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2η ,

N 0, σ2ε ,

where θ = φ, µ, σ2η .

Likelihood can be computed exactly using Kalman.

Autoregressive Metropolis proposal of coe¢ cient ρ for ϑ based on

multivariate t-distribution.

(Cambridge, 22/04/14)

22 / 26

Example: Noisy Autoregressive Example

Consider

Xt = µ(1

φ) + φXt + Vt ,

Yt = Xt + Wt ,

Wt

i.i.d.

Vt

i.i.d.

N 0, σ2η ,

N 0, σ2ε ,

where θ = φ, µ, σ2η .

Likelihood can be computed exactly using Kalman.

Autoregressive Metropolis proposal of coe¢ cient ρ for ϑ based on

multivariate t-distribution.

N is selected so as to obtain σ θ

(Cambridge, 22/04/14)

constant where θ posterior mean.

22 / 26

Empirical vs Asymptotic Distribution of Log-Likelihood

Estimator

0.5

ρ =0

Probitexample:N=120,

0.5

0.4

0.4

0.3

0.3

0.2

0.2

0.1

0.1

-4

0.5

-2

0

2

4

-4

ρ =0.50

AR1 plus noiseexample:N=60,

0.4

0.4

0.3

0.3

0.2

0.2

0.1

0.1

-4

-2

0

2

4

ρ = 0 .9 7

Probitexample:N=120,

-2

0

2

4

ρ = 0 .9

AR1 plus noiseexample:N=60,

-4

-2

0

2

4

Figure: Histograms of proposed (red) and accepted (pink) values of Z in

PMCMC scheme. Overlayed are Gaussian pdfs from our simplifying Assumption

for a target of σ = 0.92.

(Cambridge, 22/04/14)

23 / 26

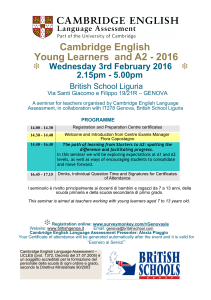

Relative Ine¢ ciency and Computing Time

ρ =0

RCT

500

500

250

250

0

100

ρ = 0 .4

200

300

RCT

500

2

100

ρ = 0 .6

200

300

0

1

2

500

50

250

25

100

200

300

500

250

0

100

1

2

RCT

RCT

200

300

300

50

250

25

1

2

100

200

300

RIF

2

RIF

1

50

2

RIF

25

0

500

1

50

25

0

250

ρ = 0 .9

200

25

500

0

100

RIF

RCT

RCT

RIF

25

50

RCT

250

0

50

RIF

25

1

500

250

50

RCT

100

200

300

RIF

1

50

2

RIF

25

0

100

200

300

1

2

Figure: From left to right: RCThQ vs N, RCThQ vs σ(θ ), RIFhQ against N and

RIFhQ against σ(θ ) for various values of ρ and di¤erent parameters.

(Cambridge, 22/04/14)

24 / 26

Acceptance Probability

0.75

0.75

Pr(Accept) of Ex act

Pr(Accept) of Particle MH

Pr(Accept) of Bo und

Pr(Accept) of Ex act

Pr(Accept) of Particle MH

Pr(Accept) of Bo und

0.50

0.50

ρ=0

0.25

0.5

ρ = 0 .4

0.25

1.0

1.5

2.0

0.75

0.5

1.0

0.75

2.0

Pr(Accept) of Ex act

Pr(Accept) of Particle MH

Pr(Accept) of Bo und

Pr(Accept) of Ex act

Pr(Accept) of Particle MH

Pr(Accept) of Bo und

0.50

1.5

0.50

ρ = 0 .6

0.25

0.5

ρ = 0 .9

0.25

1.0

1.5

2.0

0.5

1.0

1.5

2.0

(Cambridge,

22/04/14) probability against σ.Theoretical (upper bound) vs Actual.

25 / 26

Figure:

Acceptance

Discussion

Simpli…ed quantitative analysis of pseudo-marginal MCMC.

(Cambridge, 22/04/14)

26 / 26

Discussion

Simpli…ed quantitative analysis of pseudo-marginal MCMC.

1

Much weaker assumptions than previous work on proposal and target.

(Cambridge, 22/04/14)

26 / 26

Discussion

Simpli…ed quantitative analysis of pseudo-marginal MCMC.

1

Much weaker assumptions than previous work on proposal and target.

2

Assumption on orthogonality of the noise.

(Cambridge, 22/04/14)

26 / 26

Discussion

Simpli…ed quantitative analysis of pseudo-marginal MCMC.

1

Much weaker assumptions than previous work on proposal and target.

2

Assumption on orthogonality of the noise.

For good proposals, select σ

σ 1.7.

(Cambridge, 22/04/14)

1 whereas for poor proposals, select

26 / 26

Discussion

Simpli…ed quantitative analysis of pseudo-marginal MCMC.

1

Much weaker assumptions than previous work on proposal and target.

2

Assumption on orthogonality of the noise.

For good proposals, select σ

σ 1.7.

1 whereas for poor proposals, select

In scenarios where the proposal e¢ ciency is unknown, select σ

(Cambridge, 22/04/14)

1.2.

26 / 26