
Quantifying Gymnast Performance using a 3D Camera

CSCI512 Spring 2015

Brian Reily

Colorado School of Mines

Golden, Colorado

breily@mines.edu

May 4, 2015

Contents

1 Introduction

1.1 Motivation and Aims . . . . . . . . . . . . . . . . . . . . . . .

1.2 Problem Setting and Assumptions . . . . . . . . . . . . . . . .

3

3

3

2 Discussion of Previous Work

4

3 Approach

8

3.1 Extracting the Gymnast Figure . . . . . . . . . . . . . . . . . 8

3.2 Fitting an Axis to the Gymnast’s Body . . . . . . . . . . . . . 9

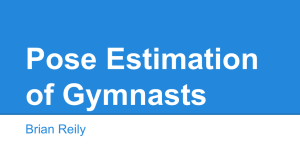

3.3 Calculating Spin Extrema . . . . . . . . . . . . . . . . . . . . 11

4 Results

4.1 Evaluation Method . . . .

4.2 Accuracy . . . . . . . . . .

4.3 Data Available to Coaches

4.4 Performance . . . . . . . .

5 Discussion

5.1 Achievements

5.2 Limitations .

5.3 Future Work .

5.4 Conclusion . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

1

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

12

12

13

13

15

.

.

.

.

16

16

16

16

17

References

18

Code

19

List of Figures

1

2

3

4

5

6

7

8

9

10

Gymnastics Dataset . . . . . .

Kinect Skeleton . . . . . . . . .

Mean Background . . . . . . . .

Isolating the Gymnast . . . . .

Contour Around the Gymnast. .

Axis Fitted to the Gymnast. . .

Fitting Cubic Splines . . . . . .

Error Histograms . . . . . . . .

Foot Position over Time . . . .

Bar Graphs of Spin Times . . .

2

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

4

6

9

10

11

11

12

14

15

15

1 Introduction

The pommel horse is an event in men's gymnastics competitions in which a gymnast swings in a circular motion over all parts of the apparatus. The United States Olympic Committee is interested in using computer vision techniques to quantify their gymnasts' performance on this event by measuring aspects of it that may be too subtle for the coaches to perceive directly but that may still influence the judges' scores.

1.1 Motivation and Aims

A pommel horse routine is composed of different gymnastics moves. While some moves involve just one leg (e.g. swinging a leg vertically), the majority involve double leg circles, with difficulty increased by using just one hand or by performing circles on different parts of the horse. An important part of the score can be determined by how consistently a gymnast executes these spins: ideally, each spin should take exactly the same amount of time as the next. Additionally, knowing how fast a gymnast tends to spin can provide important information for training; if a gymnast consistently slows down as he goes through his routine, conditioning may be an issue. The Olympic Committee is interested in using computer vision to quantify these spin speeds, in order to address these issues and possibly find other uses for the data.

The aim of my project is to develop an algorithm that can identify a spin and calculate the time between spins. It uses a single depth camera and was developed in C++ with OpenCV, though testing was done with an identical algorithm implemented in Matlab. While I have continued past this objective and begun identifying an entire body pose, I won't discuss that work here.

1.2 Problem Setting and Assumptions

The dataset (Figure 1) I built for this problem was collected entirely at the United States Olympic Committee's training center in Colorado Springs. The gymnasts captured in the dataset are potential members of the Olympic team that will compete in the 2016 Summer Olympic Games in Rio de Janeiro, Brazil. The dataset was captured using a Microsoft Kinect 2 camera, placed on a tripod approximately 2.3 meters in front of the pommel horse. Because the Kinect is a depth camera that uses infrared light to calculate the depth at each pixel, the lighting is mostly irrelevant. The word 'mostly' is used because a second Kinect camera was placed to the side of the pommel horse to capture footage of the gymnast from the side (not used in this experiment). Because this second Kinect also emits infrared light, the two cameras can interfere with each other. While visible noise can be seen in the Kinect footage, there is no evidence that it affected the results. The dataset consists of 39 different uses of the apparatus, separated by segments of other activity that were not used (people walking back and forth, adjusting the pommel horse, or simply a blank scene). It was processed by hand to extract the 39 scenes from the Kinect 2 file format and store them as PNG image sequences. These sequences are in the process of being annotated by hand to mark the positions of the head, hands, and feet. Additionally, a start frame (when the gymnast starts using the apparatus) and an end frame (when the gymnast dismounts the apparatus) were specified.

(a) Pommel Horse Without Gymnast
(b) Gymnast on the Pommel Horse

Figure 1: Examples of the dataset generated for the project.

2 Discussion of Previous Work

When reviewing related work for this project, I read a variety of papers. While creating an algorithm to track the gymnasts' feet did not require a full-body pose estimate, I felt that this literature would be good background as I worked on the project. Tracking and recognizing body parts is a widely researched area, as is estimating a pose from the resulting body part positions. For human bodies, pose estimation has a different meaning than in traditional 3D pose estimation. While traditional 3D pose estimation attempts to recover the 6 degrees of freedom (position and orientation) of an object in 3D space, for a human body it refers to the actual pose of the person: the layout of the extremities, limbs, joints, and so on. This process is also referred to as skeletonization, as it results in a skeleton model fitted to the image. The majority of this research has been done on RGB images, as affordable depth cameras have only arrived recently. The arrival of the Kinect initiated a wide variety of research using depth data, and the device even includes body part tracking and pose estimation out of the box. However, this built-in method does not work well in this problem setting.

The Kinect camera includes a pose estimation algorithm based on the depth data it collects, developed by Shotton et al. [7] at Microsoft Research. Their method classifies each of a body's pixels individually as belonging to one of 31 distinct parts. This classification is based on different features of the pixel, e.g. its depth relative to the depth of a pixel above it, or relative to two pixels below and to the side. These features (approximately 2000 different ones) are used to train a decision forest in which each branch tests one of them. Their method clusters the classified pixels into body parts, infers joint locations from these clusters, and connects the joints into a skeleton. The Kinect algorithm is both very fast (approximately 5 ms per frame on an Xbox console) and, in most cases, accurate. It fails, however, on poses like those in my gymnastics dataset. Since it depends so heavily on training data (approximately 1 million images), it misclassifies images that are not similar to the training set. For instance, it may correctly classify the gymnast's upper body, since that is a standard upper-body pose that might appear in home gaming, but legs swung out to the side or an upside-down gymnast cause it to fail dramatically, as seen in Figure 2.
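To make the kind of per-pixel feature described above concrete, here is a minimal C++ sketch of the depth-comparison feature from Shotton et al. [7], f(I, x) = d(x + u/d(x)) - d(x + v/d(x)), where d is the depth at a pixel and u, v are 2D offsets. The names `DepthImage` and `depthFeature`, the metre units, and the large constant returned for background or off-image probes are assumptions for illustration; this is not the Kinect SDK's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Depth image in metres, row-major; values <= 0 mark background (assumed).
struct DepthImage {
    int width;
    int height;
    std::vector<double> depth;

    // Depth at (x, y), or the large `background` constant when the probe
    // falls off the image or onto a background pixel.
    double at(int x, int y, double background) const {
        if (x < 0 || y < 0 || x >= width || y >= height) return background;
        double d = depth[static_cast<std::size_t>(y) * width + x];
        return d > 0.0 ? d : background;
    }
};

// f(I, x) = d(x + u / d(x)) - d(x + v / d(x)) for pixel (px, py) and offsets
// u = (ux, uy), v = (vx, vy). Scaling the offsets by 1 / d(x) keeps the probes
// on roughly the same body location regardless of distance from the camera.
double depthFeature(const DepthImage& img, int px, int py, double ux, double uy,
                    double vx, double vy, double background = 1e6) {
    double d = img.at(px, py, background);
    assert(d > 0.0 && d < background);  // (px, py) must lie on the body
    auto probe = [&](double ox, double oy) {
        int qx = static_cast<int>(std::lround(px + ox / d));
        int qy = static_cast<int>(std::lround(py + oy / d));
        return img.at(qx, qy, background);
    };
    return probe(ux, uy) - probe(vx, vy);
}
```

A single feature is weak on its own; a decision forest evaluates many such features, each with its own (u, v) pair and threshold, at every split.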

In addition to Shotton's work, a number of other pose estimation methods have been created for depth imagery. One such work, by Zhu et al. [9], uses a model-based approach. Rather than processing image features with an SVM or similar classifier, it uses basic image features directly to fit a head-neck-torso model. This model is constrained by the detected features and by the model's position in previous frames, so unlike the Kinect approach it takes temporal information into account. While they don't report any quantitative results, they give good qualitative demonstrations and state that their approach has been used to effectively map human motion to a robot. However, their method is currently limited to the upper body.

Figure 2: An example of Kinect generating an incorrect skeleton for a gymnast.

A few pose estimation methods for depth images have also been developed that do not rely (extensively or at all) on training data. Two similar methods focus on finding body extrema. Plagemann et al. [5] use the depth data to construct a surface mesh of the person. First, they find the point furthest from the centroid in terms of geodesic distance, i.e. the distance along the surface rather than the direct Euclidean distance. Then they iteratively locate the next point furthest from all previously found points. This typically locates the body extrema (head, hands, and feet) in a small number of iterations. These interest points are fed to a logistic regression classifier trained on image patches of body parts, which yields significant speed improvements over a typical sliding window approach. While this method still requires training data, a similar method developed by Schwarz et al. [6], also based on geodesic distance, does not. Schwarz's approach does require RGB data in addition to depth, but given an initial pose and using optical flow tracking, it is accurate enough to generate full skeletons without training data.
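The geodesic idea behind these two methods can be sketched on a binary foreground mask instead of a surface mesh. The C++ below is a simplified, hypothetical version: it treats 4-connected pixel steps inside the mask as unit-length edges, so a breadth-first search yields geodesic distances, and each new extremum is the pixel farthest from the seed and from all previously found points. The function name `geodesicExtrema` and its parameters are illustrative only.

```cpp
#include <cassert>
#include <queue>
#include <vector>

// Simplified, grid-based geodesic extrema (an illustration of the idea, not
// Plagemann et al.'s mesh-based method). mask is row-major, width * height.
// Returns up to `count` pixel indices, each the farthest (in 4-connected steps
// inside the mask) from the seed and from every extremum found before it.
std::vector<int> geodesicExtrema(const std::vector<bool>& mask, int width,
                                 int height, int seed, int count) {
    assert(static_cast<int>(mask.size()) == width * height);
    assert(seed >= 0 && seed < width * height && mask[seed]);
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    std::vector<int> sources{seed};
    std::vector<int> extrema;
    for (int iter = 0; iter < count; ++iter) {
        // Multi-source BFS: dist = steps to the nearest already-found point.
        std::vector<int> dist(mask.size(), -1);
        std::queue<int> frontier;
        for (int s : sources) { dist[s] = 0; frontier.push(s); }
        int farthest = seed;
        while (!frontier.empty()) {
            int p = frontier.front();
            frontier.pop();
            if (dist[p] > dist[farthest]) farthest = p;
            int x = p % width, y = p / width;
            for (int k = 0; k < 4; ++k) {
                int nx = x + dx[k], ny = y + dy[k];
                if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                int q = ny * width + nx;
                if (mask[q] && dist[q] < 0) {
                    dist[q] = dist[p] + 1;
                    frontier.push(q);
                }
            }
        }
        if (dist[farthest] <= 0) break;  // every reachable pixel already found
        extrema.push_back(farthest);
        sources.push_back(farthest);
    }
    return extrema;
}
```

On a plus-shaped mask seeded at its center, the first four results are the four arm tips, which is the behaviour the methods rely on to find the head, hands, and feet.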

I also investigated body part recognition algorithms based on RGB images. Most work in this area builds on two important papers. The first is the work on Histograms of Oriented Gradients (HOG) by Dalal et al. [2]. This paper introduced HOG features, which became the standard way to detect people in images. HOG features bin gradient orientations into histograms, and the features are used to train a Support Vector Machine (SVM). A test image is then processed with a sliding window, and, using a multiresolution pyramid, the window is classified at various scales by the SVM. Building on this is the work on 'poselets' by Bourdev et al. [1]. Poselets use HOG features, but instead of detecting an entire body, they are trained on different parts of it. Additionally, instead of the researcher defining parts such as 'arm' or 'leg', the poselet approach learns which portions of the body are significant, and often ends up learning HOG features that describe areas such as 'torso and left arm'. These poselets can be combined, as seen in Wang et al.'s [8] work on hierarchical poselets. Their method designs a body part detector that can look for traditional poselets, for instance 'torso and left arm'. This detector is then hierarchically broken down to detect 'torso' and 'left arm', which could be further broken down to detect 'upper left arm' and 'lower left arm'. This allows them to find specific body parts, which they briefly demonstrate could be combined using kinematic constraints. The most advanced work in this line of research seems to be from Pishchulin, Andriluka et al., who extended the poselet idea by using poselets to condition a 'Pictorial Structures' model [4]. Pictorial Structures model a body pose using a conditional random field (CRF). Their work uses poselets to provide a prior on the pose in an image, augmenting terms in their CRF-based model. This is used to estimate a variety of poses much more accurately than previous poselet work, approaching state-of-the-art performance and beaten only by an approach that uses data from images across the dataset.

One work that builds on the poselet method but uses depth images was published by Holt et al. [3]. Their work runs a multiscale sliding window over the depth image, processing each window with a classifier trained on poselets represented as a vector of pixel intensities over a 24x18 window. Holt questions the use of HOG features on depth imagery but does not explain why. The classifier, built as a decision forest, is run over the entire image, and its classifications are aggregated into body parts. Holt shows very good results for major body parts (head, shoulders), with reduced effectiveness for upper and lower arms. Perhaps their biggest contribution is a dataset of depth imagery poses from the waist up, with 10 body parts labeled as ground truth.

3 Approach

My approach to this problem was based on finding the extrema of a gymnast's spin. If I could determine the exact time at which the gymnast's feet were at their furthest left point, then I could determine how long it took them to reach the furthest left point again. This is exactly the time it took the gymnast to complete one rotation around their central axis (and similarly for the furthest right point if they began spinning in that direction).

In explaining the algorithm, I will consider the case where the gymnast begins spinning from the camera's left side, moves to the right side, and returns to the left side. I'll use the term extremum (plural extrema) to refer to the moment the gymnast's feet reach their furthest extension, i.e. the moment when their circle of travel in (X, Y, Z) space intersects the image plane in (x, y) space. To determine the furthest point that the gymnast's feet travel to the left, we can take a number of approaches. The simplest is to use image processing techniques to isolate the area belonging to the gymnast, and determine which pixels are farthest left and farthest right. The problem with this is that it also marks extrema at the positions of the gymnast's head, shoulders, and arms, not just his feet. One can attempt to mitigate this by restricting the extrema to the points furthest from the center of the pommel horse, but this fails in the many situations where the gymnast is not rotating around the center of the pommel horse. My eventual solution to this problem was to fit a bent axis to the gymnast's body, ideally extending from his feet, bending at the body center, and extending to his head.

3.1 Extracting the Gymnast Figure

To start, I segment the gymnast from the background and pommel horse. I built a simple background subtractor in C++ that averages N frames. A typical frame without a gymnast is shown in Figure 1a in the dataset description. This mean background is then thresholded by depth: since we know how far away the pommel horse is, it is simple to keep only the pixels in the depth range where a gymnast using the apparatus could appear. Finally, the background is cleaned up with basic morphological processing; one instance can be seen in Figure 3.


Figure 3: Mean Background
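A minimal C++ sketch of the mean-background step, assuming depth frames arrive as row-major arrays of raw depth values. `DepthFrame` and `buildBackground` are hypothetical names, and the depth band is passed in rather than fixed. The morphological clean-up is left out; in OpenCV it could be done with `cv::erode` and `cv::dilate`, as the Matlab listing does with `imerode` and `imdilate`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One depth frame, row-major (hypothetical layout): depth.size() == width * height.
struct DepthFrame {
    int width;
    int height;
    std::vector<double> depth;
};

// Mean background: average N frames of the empty scene pixel by pixel, then
// zero every pixel whose mean depth lies outside (minDepth, maxDepth), the band
// in which a gymnast on the apparatus could appear.
DepthFrame buildBackground(const std::vector<DepthFrame>& frames,
                           double minDepth, double maxDepth) {
    assert(!frames.empty());
    DepthFrame bg{frames[0].width, frames[0].height,
                  std::vector<double>(frames[0].depth.size(), 0.0)};
    for (const DepthFrame& f : frames) {
        assert(f.width == bg.width && f.height == bg.height);
        for (std::size_t p = 0; p < bg.depth.size(); ++p) bg.depth[p] += f.depth[p];
    }
    for (double& d : bg.depth) {
        d /= static_cast<double>(frames.size());
        if (!(d > minDepth && d < maxDepth)) d = 0.0;  // outside the band
    }
    return bg;
}
```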

When processing the video of a gymnast's performance, each frame (for example, Figure 4a) is processed similarly: thresholded by depth and morphologically opened with a small structuring element. An example of the depth thresholding can be seen in Figure 4b. The background is then subtracted from the frame. Pixels with values less than zero, which can occur due to noise from the Kinect, are discarded. Pixels below a small positive threshold are also discarded to reduce noise. A typical resulting image can be seen in Figure 4c.
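The per-frame isolation can be sketched the same way. This hypothetical `subtractBackground` follows the prose: it keeps the depth band, takes the signed difference from the background, and keeps only differences above a noise threshold, which also drops the negative values. The Matlab listing applies `abs` to the difference before thresholding, so treat the sign handling here as an assumption. The morphological opening is again omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One depth frame, row-major (hypothetical layout): depth.size() == width * height.
struct DepthFrame {
    int width;
    int height;
    std::vector<double> depth;
};

// Keep in-band pixels whose difference from the background exceeds
// noiseThresh; everything else (out of band, negative, or small) becomes 0.
DepthFrame subtractBackground(const DepthFrame& frame, const DepthFrame& bg,
                              double minDepth, double maxDepth,
                              double noiseThresh) {
    assert(frame.width == bg.width && frame.height == bg.height);
    assert(noiseThresh >= 0.0);
    DepthFrame out{frame.width, frame.height,
                   std::vector<double>(frame.depth.size(), 0.0)};
    for (std::size_t p = 0; p < frame.depth.size(); ++p) {
        double d = frame.depth[p];
        if (!(d > minDepth && d < maxDepth)) continue;  // outside the band
        double diff = d - bg.depth[p];
        if (diff > noiseThresh) out.depth[p] = diff;  // implies diff > 0
    }
    return out;
}
```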

This resulting image is fed to a contour detection algorithm. In OpenCV, this is as simple as one function call (cv::findContours). In Matlab, the easiest way to do this is to find the largest connected component and then find the boundary of that region. An example can be seen in Figure 5. At the same time, the centroid of this contour is found. In Matlab, this has already been computed by the connected component detection. In OpenCV, this is done by calculating the moments of the contour, which are evaluated using Green's theorem: to simplify a lot, the area integrals over the region are converted into an integral along the curve formed by the contour. The centroid is then (m10/m00, m01/m00).
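For a contour stored as an ordered list of boundary points, Green's theorem reduces the region's area moments to sums over the polygon's edges. The C++ sketch below computes the zeroth and first moments (the quantities OpenCV's `cv::Moments` exposes as `m00`, `m10`, and `m01`) and returns the centroid `(m10/m00, m01/m00)`. `Point2` and `contourCentroid` are hypothetical names, and the contour is assumed to be a simple closed polygon.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 {
    double x;
    double y;
};

// Centroid of the region enclosed by a closed polygonal contour. Each edge
// (p -> q) contributes its cross product to the signed area (shoelace form);
// the sign depends on orientation but cancels in the ratios.
Point2 contourCentroid(const std::vector<Point2>& contour) {
    assert(contour.size() >= 3);
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (std::size_t i = 0; i < contour.size(); ++i) {
        const Point2& p = contour[i];
        const Point2& q = contour[(i + 1) % contour.size()];  // wrap to close
        double cross = p.x * q.y - q.x * p.y;
        m00 += cross;
        m10 += (p.x + q.x) * cross;
        m01 += (p.y + q.y) * cross;
    }
    m00 *= 0.5;
    m10 /= 6.0;
    m01 /= 6.0;
    assert(std::fabs(m00) > 1e-12);  // zero-area contour has no centroid
    return {m10 / m00, m01 / m00};
}
```

Because only boundary points are visited, the cost is linear in the contour length rather than in the region's area.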

3.2 Fitting an Axis to the Gymnast's Body

The result of fitting an axis to the gymnast can be seen in Figure 6. The first step in my algorithm iterates through the contour, searching for two points: the one furthest from the centroid and the one closest to it. The reasoning here is that the point furthest from the centroid almost always lies at the feet or head, especially when the gymnast is extended to the side. I'll refer to the vector from the centroid to this point as Vector A; it is shown in green in Figure 6. The point closest to the centroid is typically at the waist, especially when the gymnast is bent at the waist. The vector to this closest point is shown in red in Figure 6; I'll refer to it as Vector B. The angle between these two vectors is important, and I'll refer to it as Angle C. The contour is then searched again, this time for the point that forms the other end of the axis. This point is chosen based on the angles its vector forms with the two vectors found previously: its angle to Vector B should equal Angle C, and its angle to Vector A should be twice Angle C. In other words, the third vector is approximately Vector A mirrored across Vector B. This final vector is shown in blue in Figure 6, where the relationship between the angles should be clear.

(a) Gymnast on the Pommel Horse
(b) After Depth Thresholding
(c) After Subtraction

Figure 4: The process of isolating a gymnast.

Figure 5: Contour Around the Gymnast.

Figure 6: Axis Fitted to the Gymnast.
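The axis search can be sketched in C++ as follows, assuming the contour is a list of points and the centroid is already known. It mirrors the structure of the Matlab listing (farthest point for Vector A, nearest point for Vector B, then a score of |angle to B - C| + |angle to A - 2C| for the third point), but the names `fitBentAxis` and `BentAxis` are hypothetical and the listing's other bookkeeping is omitted. Vectors here point from the centroid outward; the listing uses the opposite direction, which leaves every angle unchanged.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point2 {
    double x;
    double y;
};

// Angle in radians between two non-zero vectors, clamped for acos safety.
double angleBetween(Point2 a, Point2 b) {
    double c = (a.x * b.x + a.y * b.y) / (std::hypot(a.x, a.y) * std::hypot(b.x, b.y));
    return std::acos(std::fmax(-1.0, std::fmin(1.0, c)));
}

struct BentAxis {
    std::size_t farIdx;    // end of Vector A (feet or head)
    std::size_t nearIdx;   // end of Vector B (usually the waist)
    std::size_t otherIdx;  // other end of the bent axis
};

BentAxis fitBentAxis(const std::vector<Point2>& contour, Point2 centroid) {
    assert(!contour.empty());
    auto vecTo = [&](std::size_t i) {
        return Point2{contour[i].x - centroid.x, contour[i].y - centroid.y};
    };
    std::size_t farIdx = 0, nearIdx = 0;
    double farLen = -1.0, nearLen = std::numeric_limits<double>::infinity();
    for (std::size_t i = 0; i < contour.size(); ++i) {
        Point2 v = vecTo(i);
        double len = std::hypot(v.x, v.y);
        if (len > farLen) { farLen = len; farIdx = i; }
        if (len < nearLen) { nearLen = len; nearIdx = i; }
    }
    assert(nearLen > 0.0);  // the centroid must not lie on the contour
    Point2 a = vecTo(farIdx), b = vecTo(nearIdx);
    double angleC = angleBetween(a, b);

    // Third point: angle to B close to C and angle to A close to 2C.
    std::size_t otherIdx = farIdx;
    double bestScore = std::numeric_limits<double>::infinity();
    for (std::size_t i = 0; i < contour.size(); ++i) {
        Point2 v = vecTo(i);
        double score = std::fabs(angleBetween(v, b) - angleC) +
                       std::fabs(angleBetween(v, a) - 2.0 * angleC);
        if (score < bestScore) { bestScore = score; otherIdx = i; }
    }
    return {farIdx, nearIdx, otherIdx};
}
```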

3.3 Calculating Spin Extrema

The x coordinate of the feet is tracked for each frame. To determine whether frame $i$ is an extremum, I compare its x coordinate $x_i$ to the values from two frames before and two frames after. If $x_i < x_{i-2}$ and $x_i < x_{i+2}$, then frame $i$ is a left extremum. An identical method is used for right extrema, checking instead whether $x_i > x_{i-2}$ and $x_i > x_{i+2}$.
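The extremum test translates directly into a loop over the per-frame foot x coordinates. This C++ sketch (hypothetical names `detectExtrema` and `FrameExtremum`) skips the first and last two frames, since they lack neighbours two frames away.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class ExtremumSide { Left, Right };

struct FrameExtremum {
    std::size_t frame;
    ExtremumSide side;
};

// Frame i is a left extremum if x[i] < x[i-2] and x[i] < x[i+2],
// and a right extremum if x[i] > x[i-2] and x[i] > x[i+2].
std::vector<FrameExtremum> detectExtrema(const std::vector<double>& footX) {
    std::vector<FrameExtremum> out;
    for (std::size_t i = 2; i + 2 < footX.size(); ++i) {
        double x = footX[i];
        if (x < footX[i - 2] && x < footX[i + 2])
            out.push_back({i, ExtremumSide::Left});
        else if (x > footX[i - 2] && x > footX[i + 2])
            out.push_back({i, ExtremumSide::Right});
    }
    return out;
}
```

On a flat-bottomed trough such as 5, 4, 1, 1, 4, 5, both middle frames pass the left test, which is one way near-duplicate extrema arise and why a merging step is needed.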

The Kinect has a peak frame rate of 30 frames per second, but often skips frames for unknown reasons. Thus some frames may be separated by 33 ms, some by 66 ms, and some by as much as 133 ms (the largest gap I have encountered). Since the gymnasts spin quickly and are very consistent between spins, we ideally want the actual time of the extremum, not simply the timestamp of the frame. To obtain it, I fit a cubic spline to the points $(t_{i-1}, x_{i-1})$, $(t_i, x_i)$, and $(t_{i+1}, x_{i+1})$, where $t_i$ is the timestamp of frame $i$; an example can be seen in Figure 7. This enables the algorithm to return the actual extreme x coordinate and its exact timestamp. The same method can fit a curve to the points $(i-1, x_{i-1})$, $(i, x_i)$, and $(i+1, x_{i+1})$ to return a fractional frame number (e.g. frame 144.7 instead of frame 145). The final step in the algorithm is to merge extrema that are very close together. Depending on the positioning of the gymnast's feet, multiple extrema may be recorded within a few frames of each other. My method merges these, averaging their timestamps and frame numbers.

(a) A Left Extremum.
(b) A Right Extremum.

Figure 7: Cubic Splines Fitted to a Gymnast's Path. Time (ms) is shown on the x axis and the x coordinate of the gymnast's feet is shown on the y axis.
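The sub-frame refinement and the merge step can be sketched in C++ as below. Two assumptions are worth stating: the spline uses natural boundary conditions (zero second derivative at the outer points), which the text does not specify, and the spline's extremum is located by dense sampling rather than analytically. `Spline3`, `refineExtremum`, `mergeClose`, and the merge window `gap` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Natural cubic spline through (t[0], x[0]), (t[1], x[1]), (t[2], x[2]):
// second derivatives are zero at the ends; m1 (at the middle knot) comes
// from the single interior continuity equation.
struct Spline3 {
    double t[3], x[3], m1;

    Spline3(const double ts[3], const double xs[3]) {
        for (int k = 0; k < 3; ++k) { t[k] = ts[k]; x[k] = xs[k]; }
        double h0 = t[1] - t[0], h1 = t[2] - t[1];
        assert(h0 > 0.0 && h1 > 0.0);  // knots must be strictly increasing
        m1 = 3.0 * ((x[2] - x[1]) / h1 - (x[1] - x[0]) / h0) / (h0 + h1);
    }

    double operator()(double s) const {
        int j = (s <= t[1]) ? 0 : 1;  // which of the two segments
        double a = t[j], b = t[j + 1], h = b - a;
        double ma = (j == 0) ? 0.0 : m1;  // second derivative at left knot
        double mb = (j == 0) ? m1 : 0.0;  // second derivative at right knot
        return ma * std::pow(b - s, 3) / (6 * h) + mb * std::pow(s - a, 3) / (6 * h) +
               (x[j] / h - ma * h / 6) * (b - s) + (x[j + 1] / h - mb * h / 6) * (s - a);
    }
};

struct Refined {
    double time;
    double x;
};

// Minimum (left extremum) or maximum (right extremum) of the spline on
// [t0, t2], found by sampling steps + 1 evenly spaced points.
Refined refineExtremum(const double ts[3], const double xs[3], bool isLeft,
                       int steps = 1000) {
    Spline3 sp(ts, xs);
    Refined best{ts[1], xs[1]};
    for (int k = 0; k <= steps; ++k) {
        double s = ts[0] + (ts[2] - ts[0]) * k / steps;
        double v = sp(s);
        if (isLeft ? v < best.x : v > best.x) best = {s, v};
    }
    return best;
}

// Average each run of sorted detections whose consecutive gaps are <= gap.
std::vector<double> mergeClose(const std::vector<double>& times, double gap) {
    std::vector<double> merged;
    std::size_t start = 0;
    for (std::size_t i = 1; i <= times.size(); ++i) {
        if (i == times.size() || times[i] - times[i - 1] > gap) {
            double sum = 0.0;
            for (std::size_t k = start; k < i; ++k) sum += times[k];
            merged.push_back(sum / static_cast<double>(i - start));
            start = i;
        }
    }
    return merged;
}
```

Passing frame indices instead of timestamps as `ts` gives the fractional frame number; `mergeClose` is meant to be applied to one side's extrema at a time.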

4 Results

4.1 Evaluation Method

As described above, the dataset was split into sections corresponding to activity on the pommel horse. For each frame, the timestamp from the Kinect is known. While the positions of the head and hands were also annotated, for this evaluation I used only my annotations of the gymnasts' feet. These positions were put through the same cubic-spline-based interpolation method described above to create a ground truth consisting of the timestamp and frame number of every extremum. The advantage of this over simply identifying extremum frames by hand and treating those as the ground truth is that interpolating in this manner allows for extrema that occur between recorded frames. For each segment, the image sequence of the gymnast's performance was processed by the detection algorithm described above. The resulting timestamps and frame numbers were compared against the ground truth for that sequence to compute the error for each extremum. Currently, I have results for 176 extrema.

4.2 Accuracy

This method, though simple, is remarkably accurate at this specific task. To show this, I computed the root mean squared error (RMSE) between the ground truth and detected timestamps, and between the ground truth and detected frame numbers. Additionally, I computed the average absolute error for both timestamps and frame numbers, which treats a detection 6 ms too early the same as a detection 6 ms too late (and similarly for frame numbers). These results are shown in Table 1: the method detects extrema to within roughly a hundredth of a second of the ground truth.

| Error Metric | Error |
|---|---|
| RMSE (Time) | 12.9942 ms |
| RMSE (Frame Number) | 0.2393 frames |
| Average Absolute (Time) | 7.8168 ms |
| Average Absolute (Frame Number) | 0.1352 frames |

Table 1: Error over 176 Extrema
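The two error metrics in Table 1 can be computed as follows. This C++ sketch assumes detections and ground-truth extrema are already paired in order (reasonable when the detector finds exactly as many extrema as the ground truth), and works the same for timestamps in ms or for fractional frame numbers. `errorStats` and `ErrorStats` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct ErrorStats {
    double rmse;     // root mean squared error
    double meanAbs;  // average absolute error
};

ErrorStats errorStats(const std::vector<double>& detected,
                      const std::vector<double>& truth) {
    assert(!truth.empty() && detected.size() == truth.size());
    double sumSq = 0.0, sumAbs = 0.0;
    for (std::size_t k = 0; k < truth.size(); ++k) {
        double e = detected[k] - truth[k];  // signed: negative means early
        sumSq += e * e;
        sumAbs += std::fabs(e);  // early and late count the same
    }
    double n = static_cast<double>(truth.size());
    return {std::sqrt(sumSq / n), sumAbs / n};
}
```

RMSE weights large misses more heavily than the average absolute error does, so reporting both shows whether the error comes from a few outliers or is spread evenly.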

Additionally, Figure 8 displays the error frequency graphically. These histograms show the frequency of timestamp errors and frame number errors, displaying counts for both signed and absolute errors.

Finally, the detection method detects neither more nor fewer extrema than the ground truth dataset contains.

(a) Timestamp Errors
(b) Absolute Timestamp Errors
(c) Frame Number Errors
(d) Absolute Frame Number Errors

Figure 8: Histograms of Errors.

4.3 Data Available to Coaches

This timing data is accurate enough to provide the information the coaches were looking for: how consistent their gymnasts are. One example sequence is displayed in Figure 9. This figure plots time along the x axis and the left-right position of the gymnast's feet along the y axis. Also annotated are spin times, i.e. the time between one left extremum and the next left extremum, and likewise for the right. The same sequence is displayed differently in Figure 10, where each bar is the time for one spin. Note the second graph in Figure 10, where the outlier spin at the end is removed (this spin was possibly slower due to a dismount). With all 22 spins, this gymnast's average spin time was 973.86 ms, with a standard deviation of 66.77 ms. With the outlier removed, his average spin time was 960.62 ms, with a standard deviation of only 25.07 ms. In isolation these numbers are of limited use, but they would allow for good comparisons between gymnasts, a low standard deviation being a sign of consistency. Additionally, the graphically displayed data could prove useful to coaches who are more visually oriented.

Figure 9: Graph displaying the gymnast's foot position over time.

(a) All Performed Spins
(b) Without the Outlier Spin

Figure 10: Spin times for one gymnast's performance on the pommel horse.
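A sketch of how the spin statistics above could be derived from the refined extremum timestamps. Spin times are taken between consecutive same-side extrema and pooled across both sides. The text does not say whether its standard deviation is the population or sample version, so the population form used here is an assumption, as are the names `summarizeSpins`, `appendSpins`, and `SpinSummary`.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct SpinSummary {
    std::vector<double> spinTimes;  // ms
    double mean;                    // ms
    double stdDev;                  // ms, population form (assumed)
};

// Append the gaps between consecutive same-side extremum timestamps (sorted, ms).
void appendSpins(const std::vector<double>& sideTimes, std::vector<double>& out) {
    for (std::size_t i = 1; i < sideTimes.size(); ++i)
        out.push_back(sideTimes[i] - sideTimes[i - 1]);
}

// Pool left-to-left and right-to-right spin times, then summarize them.
SpinSummary summarizeSpins(const std::vector<double>& leftTimes,
                           const std::vector<double>& rightTimes) {
    SpinSummary s{{}, 0.0, 0.0};
    appendSpins(leftTimes, s.spinTimes);
    appendSpins(rightTimes, s.spinTimes);
    assert(!s.spinTimes.empty());
    for (double d : s.spinTimes) s.mean += d;
    s.mean /= static_cast<double>(s.spinTimes.size());
    double sumSq = 0.0;
    for (double d : s.spinTimes) sumSq += (d - s.mean) * (d - s.mean);
    s.stdDev = std::sqrt(sumSq / static_cast<double>(s.spinTimes.size()));
    return s;
}
```

Dropping a dismount outlier before summarizing corresponds to the second bar graph in Figure 10.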

4.4 Performance

In a real-world deployment, there would be little benefit to running this approach in real time. The coaches can't give input to the gymnast while he is performing, so it would make little difference if the application recorded the gymnast and computed the extrema with a slight delay. That said, the C++ implementation of this method currently runs comfortably in real time on a mid-range modern laptop. Although the Kinect does not seem to reliably provide 30 frames per second, the image processing described here introduces no delay. The Matlab implementation used for the evaluation section does not run in real time, however: on a four-year-old laptop, it achieves roughly 10 frames per second.

5 Discussion

5.1 Achievements

The method described here works remarkably well for its simplicity. Built on basic image processing techniques and a basic model of human kinematics, it detects extrema to within roughly a hundredth of a second of the ground truth. Additionally, the literature review and dataset construction lay the groundwork for applying more advanced pose estimation techniques in this area.

5.2 Limitations

This approach does have some limitations. As currently built, it can only provide information about a limited set of pommel horse techniques. Advanced moves, such as the gymnast being upside down or performing split-leg scissors, do not follow the spin pattern it was built to detect, so the method returns meaningless data for them. Along the same lines, the method can pick up spurious extrema from actions like the gymnast approaching the apparatus, as these again do not follow the pattern. This was not reflected in the results section because the dataset was constructed to consist only of actual gymnastics sequences.

5.3 Future Work

These limitations lead directly into my planned future work. The next step is to develop a pose estimation method that knows where each body part is, instead of this naive axis method. This would provide more information when the gymnast performs less typical moves or simply enters and leaves the pommel horse area, and would allow extension to other sports. My current approach to this is based on work that estimates body part positions through geodesic distance from the body centroid, and I believe I have already made some improvements over published methods. Following that, I will work on developing an effective feature representation for body parts in depth images, as no single representation has yet proven itself the best. While HOG features are the standard for RGB images, they are not commonly used on depth imagery. In addition, I've begun working on skeleton fitting, to find a full-body pose from recognized body parts; I see potential here to improve on current methods. Finally, I will assist a team of undergraduate students in building the method described here into a full application suitable for use by gymnastics coaches.

5.4 Conclusion

In conclusion, I presented an effective method for tracking the feet through the major part of a pommel horse routine. On the evaluated sequences, this method is accurate enough for real-world use, and its C++ implementation runs in real time.

References

[1] Lubomir Bourdev and Jitendra Malik. Poselets: Body Part Detectors Trained Using 3D Human Pose Annotations. ICCV, 2009.

[2] Navneet Dalal and Bill Triggs. Histograms of Oriented Gradients for Human Detection. CVPR, 2005.

[3] Brian Holt, Eng Jon Ong, Helen Cooper, and Richard Bowden. Putting the Pieces Together: Connected Poselets for Human Pose Estimation. ICCV, 2011.

[4] Leonid Pishchulin, Mykhaylo Andriluka, Peter Gehler, and Bernt Schiele. Poselet Conditioned Pictorial Structures. CVPR, 2013.

[5] Christian Plagemann and Daphne Koller. Real-time Identification and Localization of Body Parts from Depth Images. ICRA, 2010.

[6] Loren Arthur Schwarz, Artashes Mkhitaryan, Diana Mateus, and Nassir Navab. Estimating Human 3D Pose from Time-of-Flight Images Based on Geodesic Distances and Optical Flow. IEEE Conf. Autom. Face Gesture Recognit., 2011.

[7] Jamie Shotton, Andrew Fitzgibbon, Mat Cook, Toby Sharp, Mark Finocchio, Richard Moore, Alex Kipman, and Andrew Blake. Real-Time Human Pose Recognition in Parts from Single Depth Images. CVPR, 2011.

[8] Yang Wang, Duan Tran, and Zicheng Liao. Learning Hierarchical Poselets for Human Parsing. CVPR, 2011.

[9] Youding Zhu, Behzad Dariush, and Kikuo Fujimura. Controlled Human Pose Estimation from Depth Image Streams. CVPR, 2008.

Listing 1: Detection Approach

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

%

%

%

%

%

%

%

D e t e c t i o n Method

−−−−−−−−−−−−−−−−

This i s an i m p l e m e n t a t i o n o f my d e s c r i b e d approach i n Matlab .

There i s a c o r r e s p o n d i n g C++ v e r s i o n o f t h i s approach .

Ground t r u t h a n n o t a t i o n and e r r o r c h e c k i n g a r e done e l s e w h e r e .

function [ EXTREMAS, FEET, TIMESTAMPS ] = g y m d e t e c t ( images , s t a r t f r a m e , end frame , BG )

% minimum s i z e o f c o n t o u r

CONTOUR LEN THRESH = 5 0 ;

% d e p t h t h r e s h o l d s (8 b i t ∗ s t e p )

DEPTH THRESH MIN = 6 0 ∗ 1 9 ;

DEPTH THRESH MAX = 1 8 0 ∗ 1 9 ;

HEIGHT THRESH = 3 7 0 ;

% noise threshold for subtraction

SUBTRACT THRESH = 1 0 0 ;

% minimum l e n g t h f o r f e e t v e c t o r

FEET LEN THRESH = 1 0 0 ;

% o p e r a t o r used t o c l e a n up n o i s e

STREL3 = s t r e l ( ’ d i s k ’ , 3 ) ;

n = s i z e ( images , 3 ) ;

h e i g h t = s i z e (BG, 1 ) ;

width = s i z e (BG, 2 ) ;

% do d e p t h t h r e s h o l d i n g on b a c k g r o u n d

BG = BG . ∗ (BG > DEPTH THRESH MIN & BG < DEPTH THRESH MAX ) ;

f o r j = HEIGHT THRESH : h e i g h t

BG( j , : ) = zeros ( 1 , width ) ;

end

BG = BG . ∗ (BG > DEPTH THRESH MIN & BG < DEPTH THRESH MAX ) ;

BG = ime rode (BG, STREL3 ) ;

BG = i m d i l a t e (BG, STREL3 ) ;

% data s t r u c t u r e s to track d e t e c t i o n s

FRAME TIMES = zeros ( 1 , 5 ) ;

FRAME IDS = zeros ( 1 , 5 ) ;

EX COORDS = zeros ( 2 , 5 ) ;

EX LEN = zeros ( 1 , 5 ) ;

N EXTREMAS = 0 ;

EXTREMAS = [ 0 0 ] ;

FEET = zeros ( n , 2 ) ;

TIMESTAMPS = zeros ( n , 1 ) ;

f o r i = s t a r t f r a m e −5 : e n d f r a m e+5

I = images ( : , : , i ) ;

t = get timestamp ( I ) ;

TIMESTAMPS( i ) = t ;

% i f t h i s timestamp i s n ’ t z e r o e d , image won ’ t d i s p l a y p r o p e r l y

I (1 , 2) = 0 ;

% t h r e s h o l d frame

f o r j = HEIGHT THRESH : h e i g h t

I ( j , : ) = zeros ( 1 , width ) ;

19

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

end

I = I . ∗ ( I > DEPTH THRESH MIN & I < DEPTH THRESH MAX ) ;

I = imopen ( I , STREL3 ) ;

% s u b t r a c t background

I = abs ( I − BG) ;

I = I

.∗ ( I > 0);

I = I . ∗ ( I > SUBTRACT THRESH ) ;

% f i n d l a r g e s t c o n n e c t e d component

R = main region ( I ) ;

B = bwboundaries (R. F i l l e d I m a g e , 4 , ’ n o h o l e s ’ ) ;

largest = 0;

f o r j = 1 : s i z e (B, 1 )

i f s i z e (B{ j } , 1 ) > l a r g e s t

l a r g e s t = s i z e (B{ j } , 1 ) ;

contour = B{ j } ;

end

end

i f l a r g e s t < CONTOUR LEN THRESH

continue ;

end

f o r j = 1 : s i z e ( contour , 1 )

contour ( j , 1 ) = contour ( j , 1 ) + R. BoundingBox ( 2 ) ;

contour ( j , 2 ) = contour ( j , 2 ) + R. BoundingBox ( 1 ) ;

end

% k e e p t r a c k o f l a s t 5 ti m e s t a m p s

% t h i s i s u s e f u l i n t h e v e r y odd c a s e t h a t a c o n t o u r can ’ t be found

FRAME TIMES( 5 ) = FRAME TIMES ( 4 ) ;

FRAME TIMES( 4 ) = FRAME TIMES ( 3 ) ;

FRAME TIMES( 3 ) = FRAME TIMES ( 2 ) ;

FRAME TIMES( 2 ) = FRAME TIMES ( 1 ) ;

FRAME TIMES( 1 ) = t ;

FRAME IDS( 5 ) = FRAME IDS ( 4 ) ;

FRAME IDS( 4 ) = FRAME IDS ( 3 ) ;

FRAME IDS( 3 ) = FRAME IDS ( 2 ) ;

FRAME IDS( 2 ) = FRAME IDS ( 1 ) ;

FRAME IDS( 1 ) = i ;

    % find centroid
    cen_x = R.Centroid(1);
    cen_y = R.Centroid(2);
    % find point/vector on contour furthest from centroid
    % also find point/vector on contour closest to centroid
    distances = zeros(size(contour, 1), 1);
    for j = 1 : size(contour, 1)
        x = contour(j, 2);
        y = contour(j, 1);
        distances(j) = norm([cen_x cen_y] - [x y]);
    end
    % define the longest and shortest vectors
    [long_len, long_i] = max(distances);
    long_vec = [cen_x cen_y] - [contour(long_i, 2) contour(long_i, 1)];
    [short_len, short_i] = min(distances);
    short_vec = [cen_x cen_y] - [contour(short_i, 2) contour(short_i, 1)];
    % angle between longest vector and shortest vector
    long_ang = acos(dot(long_vec, short_vec) / (long_len * short_len));


    % find point/vector most opposite the short vector
    % find point/vector most opposite the long vector
    % (only the search opposite the long vector is implemented below;
    % short_score is initialized but not used)
    short_score = [Inf 0];
    long_score = [Inf 0];
    for j = 1 : size(contour, 1)
        x = contour(j, 2);
        y = contour(j, 1);
        vec = [cen_x cen_y] - [x y];
        len = norm(vec);
        % search for vector opposite longest vector
        % but at the same angle to shortest vector
        maj_ang = acos(dot(vec, long_vec) / (len * long_len));
        min_ang = acos(dot(vec, short_vec) / (len * short_len));
        score = abs(min_ang - long_ang) + abs(maj_ang - (2 * long_ang));
        if isinf(long_score(1)) || score < long_score(1)
            long_score(1) = score;
            long_score(2) = j;
        end
    end

    % define end coordinates for the two long vectors
    long_x = contour(long_i, 2);
    long_y = contour(long_i, 1);
    long2_x = contour(long_score(2), 2);
    long2_y = contour(long_score(2), 1);
    % see which long vector points down, assume that's the feet
    % (image row indices increase downward, so the larger y is lower)
    if long_y > long2_y
        feet_len = long_len;
        feet_x = long_x;
        feet_y = long_y;
    else
        feet_len = norm([cen_x cen_y] - [long2_x long2_y]);
        feet_x = long2_x;
        feet_y = long2_y;
    end

    % track last 5 feet points
    EX_COORDS(:, 5) = EX_COORDS(:, 4);
    EX_COORDS(:, 4) = EX_COORDS(:, 3);
    EX_COORDS(:, 3) = EX_COORDS(:, 2);
    EX_COORDS(:, 2) = EX_COORDS(:, 1);
    EX_COORDS(:, 1) = [feet_x; feet_y];
    EX_LEN(5) = EX_LEN(4);
    EX_LEN(4) = EX_LEN(3);
    EX_LEN(3) = EX_LEN(2);
    EX_LEN(2) = EX_LEN(1);
    EX_LEN(1) = feet_len;

    % track all foot points for possible replay
    FEET(i, :) = [feet_x feet_y];
    % we may want foot coordinates for the previous points,
    % but don't want to draw extrema
    if i < start_frame || i > end_frame
        continue;
    end
    % if point 3 is farther left than 1 and 5, it is a left extremum
    % if point 3 is farther right than 1 and 5, it is a right extremum
    extrema = false;


    [~, max_i] = max(EX_COORDS(1, :));
    [~, min_i] = min(EX_COORDS(1, :));
    if EX_LEN(3) > FEET_LEN_THRESH && (max_i == 3 || min_i == 3)
        if EX_COORDS(1, 3) < EX_COORDS(1, 5) && EX_COORDS(1, 3) < EX_COORDS(1, 1)
            extrema = true;
        elseif EX_COORDS(1, 3) > EX_COORDS(1, 5) && EX_COORDS(1, 3) > EX_COORDS(1, 1)
            extrema = true;
        end
    end

    % if an extremum, interpolate its time and frame
    if extrema
        % only use the extremum and one point on either side
        x = [EX_COORDS(1, 4) EX_COORDS(1, 3) EX_COORDS(1, 2)];
        % fit spline over those 3 timestamps
        t = [FRAME_TIMES(4) FRAME_TIMES(3) FRAME_TIMES(2)];
        trg = FRAME_TIMES(5) : FRAME_TIMES(1);
        % and those 3 frame numbers, down to 0.1
        f = [FRAME_IDS(4) FRAME_IDS(3) FRAME_IDS(2)];
        frg = FRAME_IDS(5) : 0.1 : FRAME_IDS(1);
        % fit spline to time, pick max point depending on left/right
        yy = spline(t, x, trg);
        if EX_COORDS(1, 3) > EX_COORDS(1, 5)
            [~, idx] = max(yy);
        else
            [~, idx] = min(yy);
        end
        ex_time = trg(idx);
        % fit spline to frame #
        yy = spline(f, x, frg);
        if EX_COORDS(1, 3) > EX_COORDS(1, 5)
            [~, idx] = max(yy);
        else
            [~, idx] = min(yy);
        end
        ex_frame = frg(idx);
        % record it
        N_EXTREMAS = N_EXTREMAS + 1;
        EXTREMAS(N_EXTREMAS, 1) = ex_frame;
        EXTREMAS(N_EXTREMAS, 2) = ex_time;
    end
end

% sometimes multiple extrema are detected at the end of a spin
% combine nearby extrema within 4 frames
removed = 0;
for i = 1 : N_EXTREMAS
    if i == N_EXTREMAS
        continue;
    end
    if abs(EXTREMAS(i, 1) - EXTREMAS(i+1, 1)) < 4
        EXTREMAS(i+1, 1) = (EXTREMAS(i, 1) + EXTREMAS(i+1, 1)) / 2;
        EXTREMAS(i+1, 2) = (EXTREMAS(i, 2) + EXTREMAS(i+1, 2)) / 2;
        EXTREMAS(i, 1) = 0;
        EXTREMAS(i, 2) = 0;
        removed = removed + 1;
    end


end
% only return the nonzero elements; copy to a temporary first so the
% rows being compacted are not overwritten by the zeros() call below
ALL_EXTREMAS = EXTREMAS;
EXTREMAS = zeros(nnz(ALL_EXTREMAS) / 2, 2);
ct = 0;
for i = 1 : N_EXTREMAS
    if ALL_EXTREMAS(i, 1) ~= 0
        ct = ct + 1;
        EXTREMAS(ct, 1) = ALL_EXTREMAS(i, 1);
        EXTREMAS(ct, 2) = ALL_EXTREMAS(i, 2);
    end
end
end
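The per-point distance loop in the listing can also be written in vectorized form. The sketch below is an illustration under assumptions, not the code used for the reported results. `contour_pts` and `cen` are hypothetical stand-ins: `contour_pts` plays the role of `contour`, with rows of [row column] as returned by `bwboundaries`, and `cen` plays the role of `[cen_x cen_y]`. The values are made up.

```matlab
% Hypothetical boundary points as [row column], like bwboundaries output
contour_pts = [10 5; 12 9; 20 7; 15 3];
% Hypothetical centroid as [x y], like R.Centroid
cen = [6 14];

% Distance of every boundary point from the centroid in one call:
% x is the column (second field), y is the row (first field)
d = hypot(contour_pts(:, 2) - cen(1), contour_pts(:, 1) - cen(2));

% Farthest point (one end of the body's long axis) and closest point
[long_len, long_i] = max(d);
[short_len, short_i] = min(d);
fprintf('farthest: point %d (%.3f px), closest: point %d (%.3f px)\n', ...
    long_i, long_len, short_i, short_len);
```

Up to floating-point rounding, `d` matches the loop's `distances` vector element for element. It avoids one `norm` call per boundary point.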

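The extremum step fits `spline` to exactly three samples. MATLAB's `spline` is expected to return the parabola through the points in that case. If so, the extremum time could be computed in closed form as the parabola's vertex rather than by sampling the spline on a dense grid. This is a swapped-in alternative, not the method used above. The timestamps and foot positions below are made up, and the variable names are hypothetical.

```matlab
% Hypothetical samples around a right-hand extremum: timestamps (ms)
% and horizontal foot positions (px) for the three frames used
t = [100 133 166];
x = [210 240 225];

% Closed-form vertex of the parabola through (t(k), x(k)), k = 1..3;
% den is zero only if the three points are collinear (no extremum)
num = (t(2) - t(1))^2 * (x(2) - x(3)) - (t(2) - t(3))^2 * (x(2) - x(1));
den = (t(2) - t(1)) * (x(2) - x(3)) - (t(2) - t(3)) * (x(2) - x(1));
t_vertex = t(2) - 0.5 * num / den;

% Dense sampling of the 3-point spline on a 0.1 ms grid, for comparison
trg = t(1) : 0.1 : t(3);
yy = spline(t, x, trg);
[~, idx] = max(yy);
t_sampled = trg(idx);

fprintf('closed form: %.2f ms, sampled: %.2f ms\n', t_vertex, t_sampled);
```

With dense-grid sampling, the result can be no finer than the grid step. In the listing that step is 1 timestamp unit for `trg` and 0.1 frame for `frg`. The closed form has no such limit and needs no spline evaluations.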