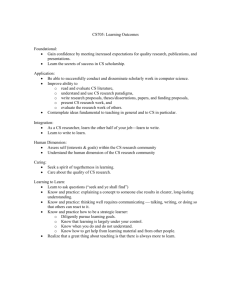

Pattern Classification • Lecture # 8 Session 2003


Pattern Classification

• Introduction

• Parametric classifiers

• Semi-parametric classifiers

• Dimensionality reduction

• Significance testing

6.345 Automatic Speech Recognition


Semi-Parametric Classifiers

• Mixture densities

• ML parameter estimation

• Mixture implementations

• Expectation maximization (EM)


Mixture Densities

• PDF is composed of a mixture of m component densities $\{\omega_1, \ldots, \omega_m\}$:

$$p(x) = \sum_{j=1}^{m} p(x|\omega_j)\,P(\omega_j)$$

• Component PDF parameters and mixture weights $P(\omega_j)$ are typically unknown, making parameter estimation a form of unsupervised learning

• Gaussian mixtures assume Normal components:

$$p(x|\omega_k) \sim N(\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)$$

Gaussian Mixture Example: One Dimension

[Figure: probability density (0.0 to 0.25) versus x (-4 to 4) for a two-component Gaussian mixture, with the width σ marked]

$$p(x) = 0.6\,p_1(x) + 0.4\,p_2(x)$$

$$p_1(x) \sim N(-\sigma, \sigma^2) \qquad p_2(x) \sim N(1.5\sigma, \sigma^2)$$
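The one-dimensional mixture above can be evaluated directly from the mixture-density definition. The following is a minimal sketch, assuming σ = 1 (the slide leaves σ symbolic); the function and constant names are illustrative and not part of the lecture.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of N(mean, var) evaluated at x."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def mixture_pdf(x, weights, means, variances):
    """p(x) = sum_j P(w_j) p(x | w_j) for a 1-D Gaussian mixture."""
    return sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances))

# Assumed value: sigma = 1. Weights and means follow the example above.
SIGMA = 1.0
WEIGHTS = [0.6, 0.4]
MEANS = [-SIGMA, 1.5 * SIGMA]
VARIANCES = [SIGMA ** 2, SIGMA ** 2]

def integrate_mixture(lo=-10.0, hi=10.0, steps=20000):
    """Trapezoidal check that the mixture density integrates to about 1."""
    h = (hi - lo) / steps
    total = 0.5 * (mixture_pdf(lo, WEIGHTS, MEANS, VARIANCES)
                   + mixture_pdf(hi, WEIGHTS, MEANS, VARIANCES))
    for i in range(1, steps):
        total += mixture_pdf(lo + i * h, WEIGHTS, MEANS, VARIANCES)
    return total * h
```

Because the weights sum to one and each component is a proper density, the mixture is itself a proper density, which `integrate_mixture()` checks numerically.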


Gaussian Example

[Figure: First 9 MFCCs from [s]: Gaussian PDF. Nine panels, s (dimension 1) through s (dimension 9), each plotting probability density against the coefficient value.]

Independent Mixtures

[Figure: [s]: 2 Gaussian Mixture Components/Dimension. Nine panels, s (dimension 1) through s (dimension 9), each plotting probability density against the coefficient value.]

Mixture Components

[Figure: [s]: 2 Gaussian Mixture Components/Dimension. Nine panels, s (dimension 1) through s (dimension 9), each plotting probability density against the coefficient value.]

ML Parameter Estimation:

1D Gaussian Mixture Means

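$$\log L(\mu_k) = \sum_{i=1}^{n} \log p(x_i) = \sum_{i=1}^{n} \log \sum_{j=1}^{m} p(x_i|\omega_j)\,P(\omega_j)$$

$$\frac{\partial \log L(\mu_k)}{\partial \mu_k} = \sum_i \frac{\partial}{\partial \mu_k} \log p(x_i) = \sum_i \frac{1}{p(x_i)} \frac{\partial}{\partial \mu_k}\, p(x_i|\omega_k)\,P(\omega_k)$$

$$\frac{\partial p(x_i|\omega_k)}{\partial \mu_k} = \frac{\partial}{\partial \mu_k} \frac{1}{\sqrt{2\pi}\,\sigma_k}\, e^{-\frac{(x_i - \mu_k)^2}{2\sigma_k^2}} = p(x_i|\omega_k)\,\frac{(x_i - \mu_k)}{\sigma_k^2}$$

$$\frac{\partial \log L(\mu_k)}{\partial \mu_k} = \sum_i \frac{P(\omega_k)}{p(x_i)}\, p(x_i|\omega_k)\, \frac{(x_i - \mu_k)}{\sigma_k^2} = 0$$

$$\hat{\mu}_k = \frac{\sum_i P(\omega_k|x_i)\,x_i}{\sum_i P(\omega_k|x_i)} \quad \text{since} \quad \frac{p(x_i|\omega_k)\,P(\omega_k)}{p(x_i)} = P(\omega_k|x_i)$$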

Gaussian Mixtures: ML Parameter Estimation

The maximum likelihood solutions are of the form:

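$$\hat{\boldsymbol{\mu}}_k = \frac{\frac{1}{n}\sum_i \hat{P}(\omega_k|x_i)\,x_i}{\frac{1}{n}\sum_i \hat{P}(\omega_k|x_i)}$$

$$\hat{\boldsymbol{\Sigma}}_k = \frac{\frac{1}{n}\sum_i \hat{P}(\omega_k|x_i)\,(x_i - \hat{\boldsymbol{\mu}}_k)(x_i - \hat{\boldsymbol{\mu}}_k)^t}{\frac{1}{n}\sum_i \hat{P}(\omega_k|x_i)}$$

$$\hat{P}(\omega_k) = \frac{1}{n}\sum_i \hat{P}(\omega_k|x_i)$$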

Gaussian Mixtures: ML Parameter Estimation

• The ML solutions are typically solved iteratively:

– Select a set of initial estimates for $\hat{P}(\omega_k)$, $\hat{\boldsymbol{\mu}}_k$, $\hat{\boldsymbol{\Sigma}}_k$

– Use a set of n samples to reestimate the mixture parameters until some kind of convergence is found

• Clustering procedures are often used to provide the initial parameter estimates

• Similar to K-means clustering procedure

Example: 4 Samples, 2 Densities

1. Data: $X = \{x_1, x_2, x_3, x_4\} = \{2, 1, -1, -2\}$

2. Init: $p(x|\omega_1) \sim N(1, 1)$, $p(x|\omega_2) \sim N(-1, 1)$, $P(\omega_i) = 0.5$

3. Estimate:

            x1     x2     x3     x4
P(ω1|x)    0.98   0.88   0.12   0.02
P(ω2|x)    0.02   0.12   0.88   0.98

$$p(X) \propto (e^{-0.5} + e^{-4.5})(e^{0} + e^{-2})(e^{0} + e^{-2})(e^{-0.5} + e^{-4.5})\,0.5^4$$

4. Recompute mixture parameters (only shown for ω1):

$$\hat{P}(\omega_1) = \frac{.98 + .88 + .12 + .02}{4} = 0.5$$

$$\hat{\mu}_1 = \frac{.98(2) + .88(1) + .12(-1) + .02(-2)}{.98 + .88 + .12 + .02} = 1.34$$

$$\hat{\sigma}_1^2 = \frac{.98(2 - 1.34)^2 + .88(1 - 1.34)^2 + .12(-1 - 1.34)^2 + .02(-2 - 1.34)^2}{.98 + .88 + .12 + .02} = 0.70$$

5. Repeat steps 3, 4 until convergence
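The estimate and recompute steps of this example can be checked numerically. The sketch below runs a single iteration on the four samples with the stated initial densities; the function names are illustrative. Note that it uses unrounded posteriors, so the recomputed variance comes out near 0.69, whereas the slide's 0.70 is obtained from the two-decimal posteriors and the rounded mean.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of N(mean, var) evaluated at x."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def em_step(data, weights, means, variances):
    """One estimate/recompute pass for a 1-D Gaussian mixture."""
    # Estimate: posterior P(w_k | x_i) for every sample and component.
    posteriors = []
    for x in data:
        joint = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
        total = sum(joint)
        posteriors.append([j / total for j in joint])
    # Recompute: posterior-weighted averages give the new parameters.
    n = len(data)
    new_weights, new_means, new_vars = [], [], []
    for k in range(len(weights)):
        resp = [p[k] for p in posteriors]
        mass = sum(resp)
        mean = sum(r * x for r, x in zip(resp, data)) / mass
        var = sum(r * (x - mean) ** 2 for r, x in zip(resp, data)) / mass
        new_weights.append(mass / n)
        new_means.append(mean)
        new_vars.append(var)
    return posteriors, new_weights, new_means, new_vars

DATA = [2.0, 1.0, -1.0, -2.0]
POST, W1, M1, V1 = em_step(DATA, [0.5, 0.5], [1.0, -1.0], [1.0, 1.0])
```

Calling `em_step` again with `W1`, `M1`, `V1` as inputs corresponds to repeating steps 3 and 4.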

[s] Duration: 2 Densities

[Figure: panels of probability density (0 to 12) versus Duration (sec), 0.05 to 0.30, for the two-density fit]

Iter   µ1    σ1   P(ω1)   µ2    σ2   P(ω2)   log p(X)
0      152   35   .384    95    23   .616    2.727
1      150   37   .376    97    24   .624    2.762
2      148   39   .369    98    25   .631    2.773
3      147   41   .362    100   25   .638    2.778
4      146   42   .356    100   26   .644    2.781
5      146   43   .349    102   26   .651    2.783
6      146   44   .344    102   26   .656    2.784
7      145   44   .338    102   26   .662    2.785

Gaussian Mixture Example: Two Dimensions

[Figure: two panels of the same two-dimensional Gaussian mixture, "3-Dimensional PDF" (surface plot) and "PDF Contour", both axes running from -2 to 4]

Two Dimensional Mixtures

[Figure: four scatter plots of [s] tokens, s (dimension 5) versus s (dimension 6), with fitted mixture densities. Panels: Two Mixtures, Three Mixtures, Diagonal Covariance, Full Covariance.]

Two Dimensional Components

[Figure: panels labeled "Mixture" and "Components", s (dimension 5) versus s (dimension 6)]

Mixture of Gaussians: Implementation Variations

• Diagonal Gaussians are often used instead of full-covariance Gaussians

– Can reduce the number of parameters

– Can potentially model the underlying PDF just as well if enough components are used

• Mixture parameters are often constrained to be the same in order to reduce the number of parameters which need to be estimated

– Richter Gaussians share the same mean in order to better model the PDF tails

– Tied-Mixtures share the same Gaussian parameters across all classes. Only the mixture weights $\hat{P}(\omega_i)$ are class specific. (Also known as semi-continuous)
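The parameter savings from diagonal covariances can be made concrete by counting free parameters. This is a small sketch with an illustrative function name; the dimension d = 14 in the usage note is a hypothetical choice, not a figure from the lecture.

```python
def gmm_parameter_count(num_components, dim, covariance="full"):
    """Free parameters of a Gaussian mixture with the given covariance type."""
    means = num_components * dim
    if covariance == "full":
        # A symmetric d x d matrix has d(d+1)/2 distinct entries.
        covs = num_components * dim * (dim + 1) // 2
    elif covariance == "diagonal":
        # Only the d variances on the diagonal are estimated.
        covs = num_components * dim
    else:
        raise ValueError("covariance must be 'full' or 'diagonal'")
    # Mixture weights sum to one, so one of them is determined by the rest.
    weights = num_components - 1
    return means + covs + weights
```

For d = 14, a single full-covariance Gaussian has 119 free parameters, while a mixture of four diagonal Gaussians has 115, so several diagonal components can be afforded for the cost of one full-covariance component.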


Richter Gaussian Mixtures

[Figure: [s] Log Duration: 2 Richter Gaussians. Two panels, "Richter Density" and "Richter Components", each plotting probability density (0.0 to 1.4) against log Duration (sec), -3.5 to -1.5.]

Expectation-Maximization (EM)

• Used for determining parameters, θ, for incomplete data, $X = \{x_i\}$ (i.e., unsupervised learning problems)

• Introduces variable, $Z = \{z_j\}$, to make data complete so θ can be solved using conventional ML techniques

$$\log L(\theta) = \log p(X, Z|\theta) = \sum_{i,j} \log p(x_i, z_j|\theta)$$

• In reality, $z_j$ can only be estimated by $P(z_j|x_i, \theta)$, so we can only compute the expectation of log L(θ)

$$E = E(\log L(\theta)) = \sum_i \sum_j P(z_j|x_i, \theta)\, \log p(x_i, z_j|\theta)$$

• EM solutions are computed iteratively until convergence

1. Compute the expectation of log L(θ)

2. Compute the values θ′ which maximize E

EM Parameter Estimation:

1D Gaussian Mixture Means

• Let $z_i$ be the component id, $\{\omega_j\}$, which $x_i$ belongs to

$$E = E(\log L(\theta)) = \sum_i \sum_j P(z_j|x_i, \theta)\, \log p(x_i, z_j|\theta)$$

• Convert to mixture component notation:

$$E = E(\log L(\mu_k)) = \sum_i \sum_j P(\omega_j|x_i)\, \log p(x_i, \omega_j)$$

• Differentiate with respect to $\mu_k$:

$$\frac{\partial E}{\partial \mu_k} = \sum_i P(\omega_k|x_i)\, \frac{\partial}{\partial \mu_k} \log p(x_i, \omega_k) = \sum_i P(\omega_k|x_i) \left( \frac{x_i - \mu_k}{\sigma_k^2} \right) = 0$$

$$\hat{\mu}_k = \frac{\sum_i P(\omega_k|x_i)\,x_i}{\sum_i P(\omega_k|x_i)}$$

EM Properties

• Each iteration of EM does not decrease the likelihood of X (and typically increases it)

$$\log \frac{p(X|\theta')}{p(X|\theta)} = \sum_i \log \frac{p(x_i|\theta')}{p(x_i|\theta)} = \sum_i \sum_j P(z_j|x_i, \theta)\, \log \frac{p(x_i|\theta')}{p(x_i|\theta)}$$

$$= \sum_i \sum_j P(z_j|x_i, \theta) \left( \log \frac{p(x_i|\theta')\; p(x_i, z_j|\theta)}{p(x_i, z_j|\theta')\; p(x_i|\theta)} + \log \frac{p(x_i, z_j|\theta')}{p(x_i, z_j|\theta)} \right)$$

• Using Bayes rule and the Kullback-Leibler distance metric:

$$\frac{p(x_i, z_j|\theta)}{p(x_i|\theta)} = P(z_j|x_i, \theta) \qquad \sum_j P(z_j|x_i, \theta)\, \log \frac{P(z_j|x_i, \theta)}{P(z_j|x_i, \theta')} \ge 0$$

• Since θ′ was determined to maximize E(log L(θ)):

$$\sum_i \sum_j P(z_j|x_i, \theta)\, \log \frac{p(x_i, z_j|\theta')}{p(x_i, z_j|\theta)} \ge 0$$

• Combining these two properties: $p(X|\theta') \ge p(X|\theta)$
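The EM property that the likelihood never decreases from one iteration to the next can be observed by logging log p(X|θ) during training. The sketch below fits a two-component 1-D Gaussian mixture to synthetic data; the data, seed, initial values, and names are all hypothetical choices made for illustration, and no variance floor is applied.

```python
import math
import random

def gaussian_pdf(x, mean, var):
    """Density of N(mean, var) evaluated at x."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def log_likelihood(data, weights, means, variances):
    """log p(X | theta) for a 1-D Gaussian mixture."""
    return sum(
        math.log(sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)))
        for x in data
    )

def em_fit(data, weights, means, variances, iterations=15):
    """Run EM, recording log p(X | theta) before the first and after every iteration."""
    history = [log_likelihood(data, weights, means, variances)]
    n = len(data)
    for _ in range(iterations):
        # Step 1: posteriors P(z_j | x_i, theta) under the current parameters.
        resp = []
        for x in data:
            joint = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(joint)
            resp.append([j / total for j in joint])
        # Step 2: theta' maximizing the expected complete-data log likelihood.
        weights, means, variances = [], [], []
        for k in range(len(resp[0])):
            mass = sum(r[k] for r in resp)
            mean = sum(r[k] * x for r, x in zip(resp, data)) / mass
            var = sum(r[k] * (x - mean) ** 2 for r, x in zip(resp, data)) / mass
            weights.append(mass / n)
            means.append(mean)
            variances.append(var)
        history.append(log_likelihood(data, weights, means, variances))
    return weights, means, variances, history

# Synthetic data (hypothetical, not from the lecture): two separated clusters.
rng = random.Random(0)
DATA = [rng.gauss(-2.0, 1.0) for _ in range(200)] + [rng.gauss(2.0, 0.5) for _ in range(300)]
W, M, V, HISTORY = em_fit(DATA, [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0])
```

Each entry of `HISTORY` should be at least as large as the one before it, up to floating-point rounding.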

Dimensionality Reduction

• Given a training set, PDF parameter estimation becomes less robust as dimensionality increases

• Increasing dimensions can make it more difficult to obtain insights into any underlying structure

• Analytical techniques exist which can transform a sample space to a different set of dimensions

– If original dimensions are correlated, the same information may require fewer dimensions

– The transformed space will often be more nearly Normally distributed than the original space

– If the new dimensions are orthogonal, it could be easier to model the transformed space

Principal Components Analysis

• Linearly transforms d-dimensional vector, x, to d′-dimensional vector, y, via orthonormal vectors, W

$$y = W^t x \qquad W = \{w_1, \ldots, w_{d'}\} \qquad W^t W = I$$

• If d′ < d, x can be only partially reconstructed from y

$$\hat{x} = W y$$

• Principal components, W, minimize the distortion, D, between x and x̂ on training data, $X = \{x_1, \ldots, x_n\}$

$$D = \sum_{i=1}^{n} \| x_i - \hat{x}_i \|^2$$

• Also known as Karhunen-Loève (K-L) expansion ($w_i$'s are sinusoids for some stochastic processes)

PCA Computation

[Figure: original axes x1, x2 with the rotated principal axes y1, y2]

• W corresponds to the first d′ eigenvectors, P, of Σ

$$P = \{e_1, \ldots, e_d\} \qquad \Sigma = P \Lambda P^t \qquad w_i = e_i$$

• Full covariance structure of original space, Σ, is transformed to a diagonal covariance structure, Λ′

• Eigenvalues, $\{\lambda_1, \ldots, \lambda_{d'}\}$, represent the variances in Λ′

• Axes in d′-space contain maximum amount of variance

$$D = \sum_{i=d'+1}^{d} \lambda_i$$
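The relation between distortion and discarded eigenvalues can be checked on a small case. The sketch below reduces two-dimensional data to d′ = 1 using the closed-form eigendecomposition of a 2x2 covariance matrix. It assumes the data are mean-centered before projection and that Σ is normalized by n; under those assumptions the mean squared reconstruction error equals the discarded eigenvalue, so the summed distortion D is n times that value. The data and all names are hypothetical.

```python
import math
import random

def covariance_2d(points):
    """Sample mean and covariance entries (normalized by n) of 2-D points."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    return (mx, my), (sxx, sxy, syy)

def principal_axes_2d(sxx, sxy, syy):
    """Eigenvalues (descending) and unit eigenvectors of [[sxx, sxy], [sxy, syy]]."""
    half_trace = (sxx + syy) / 2.0
    radius = math.hypot((sxx - syy) / 2.0, sxy)
    lam1, lam2 = half_trace + radius, half_trace - radius
    if abs(sxy) > 1e-12:
        v = (sxy, lam1 - sxx)            # solves (Sigma - lam1 I) v = 0
    else:
        v = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)
    norm = math.hypot(v[0], v[1])
    e1 = (v[0] / norm, v[1] / norm)
    e2 = (-e1[1], e1[0])                 # orthogonal to e1
    return (lam1, lam2), (e1, e2)

def project_and_reconstruct(points, mean, e1):
    """y = W^t x with W = {e1}, then x_hat = W y, on mean-centered data."""
    recon = []
    for x0, x1 in points:
        y = (x0 - mean[0]) * e1[0] + (x1 - mean[1]) * e1[1]
        recon.append((mean[0] + y * e1[0], mean[1] + y * e1[1]))
    return recon

def mean_distortion(points, recon):
    """Average squared reconstruction error per sample."""
    return sum((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
               for a, b in zip(points, recon)) / len(points)

# Hypothetical correlated data.
rng = random.Random(0)
POINTS = []
for _ in range(500):
    a = rng.gauss(0.0, 3.0)
    POINTS.append((a, 0.5 * a + rng.gauss(0.0, 1.0)))

MEAN, (SXX, SXY, SYY) = covariance_2d(POINTS)
EIGENVALUES, AXES = principal_axes_2d(SXX, SXY, SYY)
DISTORTION = mean_distortion(POINTS, project_and_reconstruct(POINTS, MEAN, AXES[0]))
```

For higher dimensions the same steps apply, with a general symmetric eigensolver taking the place of the 2x2 closed form.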

PCA Example

• Original feature vector: mean rate response (d = 40)

• Data obtained from 100 speakers from TIMIT corpus

• First 10 components explain 98% of total variance

[Figure: Percent of Total Variance (40% to 100%) versus Number of Components (1 to 10), with curves labeled Cosine and PCA]

PCA Example

[Figure: nine panels, numbered 1 through 9, each plotting Value against Frequency (Bark), 0 to 20]

PCA for Boundary Classification

• Eight non-uniform averages from 14 MFCCs

• First 50 dimensions used for classification

[Figure: two panels, "Second Component" and "Seventh Component", with values plotted over the MFCCs C0 through C13 and over the regions Left 8, Left 4, Left 2, Left 1, Right 1, Right 2, Right 4, Right 8]

PCA Issues

• PCA can be performed using

– Covariances Σ

– Correlation coefficients matrix P

$$\rho_{ij} = \frac{\sigma_{ij}}{\sqrt{\sigma_{ii}\,\sigma_{jj}}} \qquad |\rho_{ij}| \le 1$$

• P is usually preferred when the input dimensions have significantly different ranges

• PCA can be used to normalize or whiten the original d-dimensional space to simplify subsequent processing

$$\Sigma \Longrightarrow P \Longrightarrow \Lambda \Longrightarrow I$$

• Whitening operation can be done in one step: $z = V^t x$
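The slide does not define V. A common choice, assumed here rather than taken from the lecture, is to scale each eigenvector by the inverse square root of its eigenvalue. Writing the eigendecomposition with E for the eigenvector matrix (renamed to avoid a clash with the correlation matrix P), the short derivation below shows why this choice gives an identity covariance.

```latex
\begin{aligned}
\Sigma &= E \Lambda E^t, \qquad E^t E = I \\
V &= E \Lambda^{-1/2} \qquad \text{(assumed definition of } V\text{)} \\
\operatorname{Cov}(z) &= V^t \Sigma V
  = \Lambda^{-1/2} E^t \left( E \Lambda E^t \right) E \Lambda^{-1/2}
  = \Lambda^{-1/2} \Lambda \Lambda^{-1/2}
  = I
\end{aligned}
```

This requires every eigenvalue to be strictly positive; dimensions with zero variance would have to be dropped first.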

Significance Testing

• To properly compare results from different classifier algorithms, A1 and A2, it is necessary to perform significance tests

– Large differences can be insignificant for small test sets

– Small differences can be significant for large test sets

• General significance tests evaluate the hypothesis that the probability of being correct, $p_i$, of both algorithms is the same

• The most powerful comparisons can be made using common train and test corpora, and a common evaluation criterion

– Results reflect differences in algorithms rather than accidental differences in test sets

– Significance tests can be more precise when identical data are used since they can focus on tokens misclassified by only one algorithm, rather than on all tokens

McNemar’s Significance Test

• When algorithms A1 and A2 are tested on identical data we can collapse the results into a 2x2 matrix of counts

A1 \ A2      Correct   Incorrect
Correct      n00       n01
Incorrect    n10       n11

• To compare algorithms, we test the null hypothesis H0 that

$$p_1 = p_2, \quad \text{or} \quad n_{01} = n_{10}, \quad \text{or} \quad q = \frac{n_{01}}{n_{01} + n_{10}} = \frac{1}{2}$$

• Given H0, the probability of observing k tokens asymmetrically classified out of $n = n_{01} + n_{10}$ has a Binomial PMF

$$P(k) = \binom{n}{k} \left( \frac{1}{2} \right)^{n}$$

• McNemar's Test measures the probability, P, of all cases that meet or exceed the observed asymmetric distribution, and tests P < α

McNemar's Significance Test (cont'd)

• The probability, P, is computed by summing up the PMF tails

$$P = \sum_{k=0}^{l} P(k) + \sum_{k=m}^{n} P(k) \qquad l = \min(n_{01}, n_{10}) \qquad m = \max(n_{01}, n_{10})$$

[Figure: probability distribution over k from 0 to n, with the two tails below min and above max marked]

• For large n, a Normal distribution is often assumed

Significance Test Example

(Gillick and Cox, 1989)

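• Common test set of 1400 tokens

• Algorithms A1 and A2 make 72 and 62 errors

• Are the differences significant?

Three possible outcomes (rows: A1 correct, A1 incorrect; columns: A2 correct, A2 incorrect):

Case 1                 A2 correct   A2 incorrect
A1 correct             1266         62
A1 incorrect           72           0
n = 134, m = 72, P = 0.437

Case 2                 A2 correct   A2 incorrect
A1 correct             1325         3
A1 incorrect           13           59
n = 16, m = 13, P = 0.0213

Case 3                 A2 correct   A2 incorrect
A1 correct             1328         0
A1 incorrect           10           62
n = 10, m = 10, P = 0.0020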
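The tail sum used in McNemar's test is short enough to compute exactly. The following sketch, with an illustrative function name, adds the lower tail up to min(n01, n10) and the upper tail from max(n01, n10) under the Binomial PMF with q = 1/2. Capping the result at 1 handles the case n01 = n10, where the two tails overlap; that cap is an added safeguard, not something stated in the lecture.

```python
import math

def mcnemar_p_value(n01, n10):
    """Two-tailed exact McNemar probability from the off-diagonal counts."""
    n = n01 + n10
    if n == 0:
        return 1.0  # no asymmetric tokens, so nothing to distinguish the algorithms
    low, high = min(n01, n10), max(n01, n10)
    # Binomial coefficients are summed as exact integers, then scaled by (1/2)^n.
    lower_tail = sum(math.comb(n, k) for k in range(0, low + 1))
    upper_tail = sum(math.comb(n, k) for k in range(high, n + 1))
    return min(1.0, (lower_tail + upper_tail) / 2 ** n)
```

Applied to the three outcomes above, `mcnemar_p_value(62, 72)`, `mcnemar_p_value(3, 13)`, and `mcnemar_p_value(0, 10)` agree with the listed values of P to the precision shown, so the same overall error counts (72 versus 62) are significant at α = 0.05 only when the two algorithms' errors largely overlap.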

References

• Huang, Acero, and Hon, Spoken Language Processing, Prentice-Hall, 2001.

• Duda, Hart, and Stork, Pattern Classification, John Wiley & Sons, 2001.

• Jelinek, Statistical Methods for Speech Recognition, MIT Press, 1997.

• Bishop, Neural Networks for Pattern Recognition, Clarendon Press, 1995.

• Gillick and Cox, Some Statistical Issues in the Comparison of Speech Recognition Algorithms, Proc. ICASSP, 1989.