Introduction to Simulation - Lecture 3 Karen Veroy and Jacob White


Introduction to Simulation - Lecture 3

Basics of Solving Linear Systems

Jacob White

Thanks to Deepak Ramaswamy, Michal Rewienski,

Karen Veroy and Jacob White

SMA-HPC ©2003 MIT


Outline

Solution Existence and Uniqueness

Gaussian Elimination Basics

LU factorization

Pivoting and Growth

Hard to solve problems

Conditioning


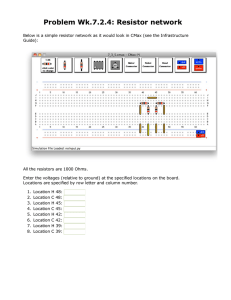

Application Problems

$$\underbrace{G}_{M} \; \underbrace{V_n}_{x} = \underbrace{I_s}_{b}$$

• No voltage sources or rigid struts

• Symmetric and Diagonally Dominant

• Matrix is n x n

Systems of Linear

Equations

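$$\begin{bmatrix} \uparrow & \uparrow & & \uparrow \\ M_1 & M_2 & \cdots & M_N \\ \downarrow & \downarrow & & \downarrow \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_N \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_N \end{bmatrix}$$

$$x_1 M_1 + x_2 M_2 + \cdots + x_N M_N = b$$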

Find a set of weights, x, so that the weighted

sum of the columns of the matrix M is equal to

the right hand side b
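The column view of M x = b can be checked numerically. The following is a minimal sketch in plain Python; the function name `weighted_column_sum` and the 2 x 2 numbers are illustrative assumptions, not part of the lecture.

```python
def weighted_column_sum(M, x):
    """Return x[0]*M_1 + ... + x[N-1]*M_N, where M_j is column j of M."""
    n_rows = len(M)
    result = [0.0] * n_rows
    for j, weight in enumerate(x):          # loop over the columns of M
        for i in range(n_rows):
            result[i] += weight * M[i][j]   # add weight * (column j)
    return result


# Hypothetical example: the columns are [2, 1] and [1, 3].
M = [[2.0, 1.0],
     [1.0, 3.0]]
x = [1.0, 2.0]
b = weighted_column_sum(M, x)   # 1*[2, 1] + 2*[1, 3]
print(b)
```

Solving M x = b is the reverse task: b is given and the weights x are the unknowns.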


Key Questions

Systems of Linear

Equations

• Given Mx = b

– Is there a solution?

– Is the solution Unique?

• Is there a Solution?

There exist weights, $x_1, \ldots, x_N$, such that

$$x_1 M_1 + x_2 M_2 + \cdots + x_N M_N = b$$

A solution exists when b is in the

span of the columns of M

Systems of Linear

Equations

Key Questions

Continued

• Is the Solution Unique?

Suppose there exist weights, $y_1, \ldots, y_N$, not all zero, such that

$$y_1 M_1 + y_2 M_2 + \cdots + y_N M_N = 0$$

Then if $M x = b$, it follows that $M (x + y) = b$

A solution is unique only if the

columns of M are linearly

independent.
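A small numerical illustration of non-uniqueness, as a sketch: the matrix below is a made-up example whose second column is twice the first, so the weights y = (2, -1) combine the columns to zero. The helper name `matvec` is an assumption introduced here.

```python
def matvec(M, x):
    """Compute the product M x for a matrix stored as a list of rows."""
    return [sum(M[i][j] * x[j] for j in range(len(x))) for i in range(len(M))]


# Hypothetical singular matrix: column 2 equals 2 * column 1.
M = [[1.0, 2.0],
     [2.0, 4.0]]
y = [2.0, -1.0]                  # nonzero weights with M y = 0
x = [1.0, 1.0]                   # one solution of M x = b
b = matvec(M, x)

x_plus_y = [xi + yi for xi, yi in zip(x, y)]
print(matvec(M, y))              # the zero vector
print(b, matvec(M, x_plus_y))    # the same right-hand side twice
```

Both x and x + y reproduce the same b, so the solution cannot be unique when the columns are linearly dependent.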


Systems of Linear

Equations

Key Questions

Square Matrices

• Given Mx = b, where M is square

– If a solution exists for any b, then the

solution for a specific b is unique.

For a solution to exist for any b, the columns of M must

span all N-length vectors. Since there are only N

columns of the matrix M to span this space, these vectors

must be linearly independent.

A square matrix with linearly independent

columns is said to be nonsingular.


Important Properties

Gaussian

Elimination Basics

Gaussian Elimination Method for Solving M x = b

• A “Direct” Method

Finite Termination for exact result (ignoring roundoff)

• Produces accurate results for a broad range of

matrices

• Computationally Expensive


Gaussian

Elimination Basics

Reminder by Example

3 x 3 example

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} \\ M_{21} & M_{22} & M_{23} \\ M_{31} & M_{32} & M_{33} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}$$

$$M_{11} x_1 + M_{12} x_2 + M_{13} x_3 = b_1$$

$$M_{21} x_1 + M_{22} x_2 + M_{23} x_3 = b_2$$

$$M_{31} x_1 + M_{32} x_2 + M_{33} x_3 = b_3$$

Gaussian

Elimination Basics

Reminder by Example

Key Idea

Use Eqn 1 to Eliminate x1 From Eqn 2 and 3

$$M_{11} x_1 + M_{12} x_2 + M_{13} x_3 = b_1$$

Subtract $\frac{M_{21}}{M_{11}}$ times Eqn 1 from Eqn 2:

$$\left( M_{22} - \frac{M_{21}}{M_{11}} M_{12} \right) x_2 + \left( M_{23} - \frac{M_{21}}{M_{11}} M_{13} \right) x_3 = b_2 - \frac{M_{21}}{M_{11}} b_1$$

Subtract $\frac{M_{31}}{M_{11}}$ times Eqn 1 from Eqn 3:

$$\left( M_{32} - \frac{M_{31}}{M_{11}} M_{12} \right) x_2 + \left( M_{33} - \frac{M_{31}}{M_{11}} M_{13} \right) x_3 = b_3 - \frac{M_{31}}{M_{11}} b_1$$

Gaussian

Elimination Basics

Reminder by Example

Key Idea in the Matrix

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} \\ 0 & M_{22} - \frac{M_{21}}{M_{11}} M_{12} & M_{23} - \frac{M_{21}}{M_{11}} M_{13} \\ 0 & M_{32} - \frac{M_{31}}{M_{11}} M_{12} & M_{33} - \frac{M_{31}}{M_{11}} M_{13} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 - \frac{M_{21}}{M_{11}} b_1 \\ b_3 - \frac{M_{31}}{M_{11}} b_1 \end{bmatrix}$$

Pivot: $M_{11}$

MULTIPLIERS: $\frac{M_{21}}{M_{11}}$ and $\frac{M_{31}}{M_{11}}$

Gaussian

Elimination Basics

Reminder by Example

Remove x2 from Eqn 3

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} \\ 0 & M_{22} - \frac{M_{21}}{M_{11}} M_{12} & M_{23} - \frac{M_{21}}{M_{11}} M_{13} \\ 0 & 0 & \left( M_{33} - \frac{M_{31}}{M_{11}} M_{13} \right) - \frac{M_{32} - \frac{M_{31}}{M_{11}} M_{12}}{M_{22} - \frac{M_{21}}{M_{11}} M_{12}} \left( M_{23} - \frac{M_{21}}{M_{11}} M_{13} \right) \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 - \frac{M_{21}}{M_{11}} b_1 \\ \left( b_3 - \frac{M_{31}}{M_{11}} b_1 \right) - \frac{M_{32} - \frac{M_{31}}{M_{11}} M_{12}}{M_{22} - \frac{M_{21}}{M_{11}} M_{12}} \left( b_2 - \frac{M_{21}}{M_{11}} b_1 \right) \end{bmatrix}$$

Pivot: $M_{22} - \frac{M_{21}}{M_{11}} M_{12}$

Multiplier: $\dfrac{M_{32} - \frac{M_{31}}{M_{11}} M_{12}}{M_{22} - \frac{M_{21}}{M_{11}} M_{12}}$

Gaussian

Elimination Basics

Reminder by Example

Simplify the Notation

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} \\ 0 & \tilde{M}_{22} & \tilde{M}_{23} \\ 0 & \tilde{M}_{32} & \tilde{M}_{33} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ \tilde{b}_2 \\ \tilde{b}_3 \end{bmatrix}$$

where

$$\tilde{M}_{22} = M_{22} - \frac{M_{21}}{M_{11}} M_{12}, \quad \tilde{M}_{23} = M_{23} - \frac{M_{21}}{M_{11}} M_{13}, \quad \tilde{b}_2 = b_2 - \frac{M_{21}}{M_{11}} b_1$$

$$\tilde{M}_{32} = M_{32} - \frac{M_{31}}{M_{11}} M_{12}, \quad \tilde{M}_{33} = M_{33} - \frac{M_{31}}{M_{11}} M_{13}, \quad \tilde{b}_3 = b_3 - \frac{M_{31}}{M_{11}} b_1$$

Gaussian

Elimination Basics

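Reminder by Example

Remove x2 from Eqn 3

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} \\ 0 & \tilde{M}_{22} & \tilde{M}_{23} \\ 0 & 0 & \tilde{M}_{33} - \frac{\tilde{M}_{32}}{\tilde{M}_{22}} \tilde{M}_{23} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ \tilde{b}_2 \\ \tilde{b}_3 - \frac{\tilde{M}_{32}}{\tilde{M}_{22}} \tilde{b}_2 \end{bmatrix}$$

Pivot: $\tilde{M}_{22}$

Multiplier: $\frac{\tilde{M}_{32}}{\tilde{M}_{22}}$

(A tilde marks an entry that was already updated when x1 was eliminated.)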

Gaussian

Elimination Basics

Reminder by Example

GE Yields Triangular System

$$\begin{bmatrix} U_{11} & U_{12} & U_{13} \\ 0 & U_{22} & U_{23} \\ 0 & 0 & U_{33} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix}$$

Altered During GE: the original entries $M_{ij}$ and $b_i$ become $U_{ij}$ and $y_i$.

$$x_3 = \frac{y_3}{U_{33}}$$

$$x_2 = \frac{y_2 - U_{23} x_3}{U_{22}}$$

$$x_1 = \frac{y_1 - U_{12} x_2 - U_{13} x_3}{U_{11}}$$

Gaussian

Elimination Basics

Reminder by Example

The right-hand side updates

$$\begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 - \frac{M_{21}}{M_{11}} b_1 \\ b_3 - \frac{M_{31}}{M_{11}} b_1 - \frac{\tilde{M}_{32}}{\tilde{M}_{22}} \tilde{b}_2 \end{bmatrix}$$

Here $\tilde{M}_{22}$ and $\tilde{M}_{32}$ are the entries after x1 has been eliminated, and $\tilde{b}_2 = b_2 - \frac{M_{21}}{M_{11}} b_1 = y_2$. The same updates written as a lower triangular system:

$$\begin{bmatrix} 1 & 0 & 0 \\ \frac{M_{21}}{M_{11}} & 1 & 0 \\ \frac{M_{31}}{M_{11}} & \frac{\tilde{M}_{32}}{\tilde{M}_{22}} & 1 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}$$

Gaussian

Elimination Basics

Reminder by Example

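Fitting the pieces together

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} \\ \frac{M_{21}}{M_{11}} & \tilde{M}_{22} & \tilde{M}_{23} \\ \frac{M_{31}}{M_{11}} & \frac{\tilde{M}_{32}}{\tilde{M}_{22}} & \tilde{\tilde{M}}_{33} \end{bmatrix}$$

The multipliers below the diagonal are the entries of L (its unit diagonal is implied), and the entries on and above the diagonal are the entries of U. A tilde marks an entry updated by one elimination step, and $\tilde{\tilde{M}}_{33}$ has been updated twice.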

Basics of LU

Factorization

Solve M x = b

Step 1 Factor

M = L · U

Step 2 Forward Elimination

Solve L y = b

Step 3 Backward Substitution

Solve U x = y

Recall from basic linear algebra that a matrix M can be factored into the product of a lower and an upper triangular matrix using Gaussian elimination. The basic idea of Gaussian elimination is to use equation one to eliminate x1 from all but the first equation. Then equation two is used to eliminate x2 from all the equations below the second. This procedure continues, reducing the system to upper triangular form as well as modifying the right-hand side.

Basics of LU

Factorization

Solving Triangular Systems

Matrix

$$\begin{bmatrix} l_{11} & 0 & \cdots & \cdots & 0 \\ l_{21} & l_{22} & \ddots & & \vdots \\ l_{31} & & \ddots & \ddots & \vdots \\ \vdots & & & \ddots & 0 \\ l_{N1} & \cdots & \cdots & \cdots & l_{NN} \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ \vdots \\ y_N \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ \vdots \\ b_N \end{bmatrix}$$

The First Equation has only y1 as an unknown.

The Second Equation has only y1 and y2 as unknowns.

Solving Triangular

Systems

Basics of LU

Factorization

Algorithm

For the lower triangular system $L y = b$:

$$y_1 = \frac{1}{l_{11}} b_1$$

$$y_2 = \frac{1}{l_{22}} \left( b_2 - l_{21} y_1 \right)$$

$$y_3 = \frac{1}{l_{33}} \left( b_3 - l_{31} y_1 - l_{32} y_2 \right)$$

$$y_N = \frac{1}{l_{NN}} \left( b_N - \sum_{i=1}^{N-1} l_{Ni} y_i \right)$$

Solving a triangular system of equations is straightforward but expensive. y1 can be computed with one divide, and y2 can be computed with a multiply, a subtraction and a divide. Once y(k-1) has been computed, yk can be computed with k-1 multiplies, k-2 adds, a subtraction and a divide. Roughly, the number of arithmetic operations is

N divides

+ 0 add/subs (for y1) + 1 add/sub (for y2) + … + N-1 add/subs (for yN)

+ 0 mults (for y1) + 1 mult (for y2) + … + N-1 mults (for yN)

= N(N-1)/2 add/subs + N(N-1)/2 mults + N divides

= Order N² operations
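The forward substitution recurrence and its operation count can be sketched in a few lines of plain Python. The function name `forward_substitute`, the counter dictionary and the 3 x 3 numbers are assumptions made for this illustration.

```python
def forward_substitute(L, b):
    """Solve L y = b for lower triangular L, counting arithmetic operations."""
    n = len(b)
    y = [0.0] * n
    counts = {"mult": 0, "addsub": 0, "div": 0}
    for k in range(n):
        s = b[k]
        for i in range(k):               # k terms for row k (0-based)
            s -= L[k][i] * y[i]
            counts["mult"] += 1
            counts["addsub"] += 1
        y[k] = s / L[k][k]               # one divide per unknown
        counts["div"] += 1
    return y, counts


# Hypothetical lower triangular system.
L = [[2.0, 0.0, 0.0],
     [1.0, 1.0, 0.0],
     [4.0, 2.0, 1.0]]
b = [2.0, 3.0, 9.0]
y, counts = forward_substitute(L, b)
print(y, counts)
```

For N = 3 the counters give 3 multiplies, 3 add/subs and 3 divides, which matches N(N-1)/2 for the first two and N for the last.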

Factoring

Basics of LU

Factorization

Picture

$$\begin{bmatrix} M_{11} & M_{12} & M_{13} & M_{14} \\ \frac{M_{21}}{M_{11}} & M_{22} & M_{23} & M_{24} \\ \frac{M_{31}}{M_{11}} & \frac{M_{32}}{M_{22}} & M_{33} & M_{34} \\ \frac{M_{41}}{M_{11}} & \frac{M_{42}}{M_{22}} & \frac{M_{43}}{M_{33}} & M_{44} \end{bmatrix}$$

(Final frame of the animation: multipliers stored below the diagonal, updated entries on and above it.)

The above is an animation of LU factorization. In the first step, the first equation is used to eliminate x1 from the 2nd through 4th equations. This involves multiplying row 1 by a multiplier and then subtracting the scaled row 1 from each of the target rows. Since such an operation would zero out the M21, M31 and M41 entries, we can replace those zeroed entries with the scaling factors, also called the multipliers. For row 2, the scale factor is M21/M11 because if one multiplies row 1 by M21/M11 and then subtracts the result from row 2, the resulting M21 entry would be zero. Entries M22, M23 and M24 would also be modified during the subtraction, and this is noted by changing the color of these matrix entries to blue. As row 1 is used to zero M31 and M41, M31 and M41 are replaced by multipliers. The remaining entries in rows 3 and 4 will be modified during this process, so they are recolored blue.

This factorization process continues with row 2. Multipliers are generated so that row 2 can be used to eliminate x2 from rows 3 and 4, and these multipliers are stored in the zeroed locations. Note that as entries in rows 3 and 4 are modified during this process, they are converted to green. The final step is to use row 3 to eliminate x3 from row 4, modifying row 4's last entry, which is denoted by converting M44 to pink.

It is interesting to note that as the multipliers are standing in for zeroed matrix entries, they are not modified during the factorization.

Factoring

LU Basics

Algorithm

For i = 1 to n-1 {                      “For each Row”

    For j = i+1 to n {                  “For each target Row below the source”

        M_ji ← M_ji / M_ii              Multiplier (M_ii is the Pivot)

        For k = i+1 to n {              “For each Row element beyond Pivot”

            M_jk ← M_jk − M_ji M_ik

        }

    }

}
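The triple loop can be sketched in plain Python as follows. This is an illustrative translation with 0-based indices and no pivoting; the function name `lu_in_place` and the test matrix are assumptions, not taken from the slides.

```python
def lu_in_place(M):
    """Overwrite M with its LU factors: multipliers below the diagonal, U on and above."""
    n = len(M)
    for i in range(n - 1):                      # for each source row
        pivot = M[i][i]
        if pivot == 0.0:
            raise ZeroDivisionError("zero pivot at step %d" % i)
        for j in range(i + 1, n):               # for each target row below the source
            M[j][i] = M[j][i] / pivot           # multiplier, stored in the zeroed slot
            for k in range(i + 1, n):           # for each row element beyond the pivot
                M[j][k] -= M[j][i] * M[i][k]
    return M


# Hypothetical example matrix.
M = [[2.0, 1.0, 1.0],
     [4.0, 3.0, 3.0],
     [8.0, 7.0, 9.0]]
lu_in_place(M)
print(M)
```

Reading the result, L has unit diagonal with the multipliers 2, 4 and 3 below it, and U holds the rows (2, 1, 1), (0, 1, 1) and (0, 0, 2).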

Factoring

LU Basics

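At Step i

[Figure: the matrix at step i. The part already processed is the Factored Portion, with the Multipliers (L) stored below the diagonal. Row i holds the pivot M_ii, and Row j below it holds M_ji.]

What if $M_{ii} = 0$? Cannot form $\frac{M_{ji}}{M_{ii}}$

Simple Fix (Partial Pivoting)

If $M_{ii} = 0$

Find $M_{ji} \neq 0$, $j > i$

Swap Row j with i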

Swapping row j with row i at step i of LU factorization is identical to applying LU

to a matrix with its rows swapped “a priori”.

To see this consider swapping rows before beginning LU.

$$\begin{bmatrix} \text{---} \; r_1 \; \text{---} \\ \text{---} \; r_2 \; \text{---} \\ \vdots \\ \text{---} \; r_j \; \text{---} \\ \vdots \\ \text{---} \; r_i \; \text{---} \\ \vdots \\ \text{---} \; r_N \; \text{---} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_i \\ \vdots \\ x_j \\ \vdots \\ x_N \end{bmatrix} = \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_j \\ \vdots \\ b_i \\ \vdots \\ b_N \end{bmatrix}$$

Swapping rows corresponds to reordering only the equations. Notice that the vector of unknowns is NOT reordered.
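The simple fix can be sketched by adding a row search to the factorization loop. This is an illustration only: the function name `lu_with_row_swaps`, the returned permutation list and the example matrix are assumptions introduced here. It swaps only when the pivot is exactly zero, as in the simple fix on the slide.

```python
def lu_with_row_swaps(M):
    """In-place LU that swaps in a lower row whenever the pivot is exactly zero.

    Returns (M, perm), where perm[r] is the original index of the row now in position r.
    """
    n = len(M)
    perm = list(range(n))
    for i in range(n - 1):
        if M[i][i] == 0.0:
            # Find a row j > i with a nonzero entry in column i.
            candidates = [j for j in range(i + 1, n) if M[j][i] != 0.0]
            if not candidates:
                raise ValueError("no nonzero pivot available in column %d" % i)
            j = candidates[0]
            M[i], M[j] = M[j], M[i]              # reorder the equations only
            perm[i], perm[j] = perm[j], perm[i]
        for j in range(i + 1, n):
            M[j][i] = M[j][i] / M[i][i]          # multiplier
            for k in range(i + 1, n):
                M[j][k] -= M[j][i] * M[i][k]
    return M, perm


# Hypothetical matrix with a zero in the first pivot position.
A = [[0.0, 2.0, 1.0],
     [1.0, 1.0, 1.0],
     [2.0, 1.0, 0.0]]
LU, perm = lu_with_row_swaps(A)
print(LU, perm)
```

The permutation records which equations were exchanged, so the right-hand side b must be reordered the same way before the triangular solves; the unknowns keep their original order.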

Factoring

LU Basics

Zero Pivots

Two Important Theorems

1) Partial pivoting (swapping rows) always succeeds if M is nonsingular

2) LU factorization applied to a strictly diagonally dominant matrix will never produce a zero pivot

Theorem: Gaussian elimination applied to strictly diagonally dominant matrices will never produce a zero pivot.

Proof

1) Show the first step succeeds.

2) Show the (n-1) x (n-1) submatrix that remains after the first step is still strictly diagonally dominant.

First Step

$a_{11} \neq 0$ as $|a_{11}| > \sum_{j=2}^{n} |a_{1j}|$

Second row after first step

$$0, \quad a_{22} - \frac{a_{21}}{a_{11}} a_{12}, \quad a_{23} - \frac{a_{21}}{a_{11}} a_{13}, \quad \ldots, \quad a_{2n} - \frac{a_{21}}{a_{11}} a_{1n}$$

Is

$$\left| a_{22} - \frac{a_{21}}{a_{11}} a_{12} \right| > \sum_{j=3}^{n} \left| a_{2j} - \frac{a_{21}}{a_{11}} a_{1j} \right| \; ?$$
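The question posed at the end of the proof outline can be answered with the triangle inequality. The derivation below is a sketch that assumes strict diagonal dominance by rows, that is $|a_{ii}| > \sum_{j \neq i} |a_{ij}|$ for every row i (the form used in the first-step inequality). The primed symbols $a'_{2j}$ for the updated second-row entries are notation introduced here.

```latex
\begin{align*}
a'_{2j} &= a_{2j} - \frac{a_{21}}{a_{11}} a_{1j}, \qquad j = 2, \dots, n \\
\sum_{j=3}^{n} |a'_{2j}|
  &\le \sum_{j=3}^{n} |a_{2j}| + \frac{|a_{21}|}{|a_{11}|} \sum_{j=3}^{n} |a_{1j}| \\
  &< \bigl( |a_{22}| - |a_{21}| \bigr)
     + \frac{|a_{21}|}{|a_{11}|} \bigl( |a_{11}| - |a_{12}| \bigr) \\
  &= |a_{22}| - \frac{|a_{21}| \, |a_{12}|}{|a_{11}|}
   \le \left| a_{22} - \frac{a_{21}}{a_{11}} a_{12} \right| = |a'_{22}|
\end{align*}
```

The strict inequality uses dominance of rows 2 and 1, and the last step is the reverse triangle inequality. The same argument applies to every other row of the submatrix, so it remains strictly diagonally dominant and the next pivot is nonzero.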

Numerical Problems

LU Basics

Small Pivots

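Contrived Example

$$\begin{bmatrix} 10^{-17} & 1 \\ 1 & 2 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 3 \end{bmatrix}$$

$$L = \begin{bmatrix} 1 & 0 \\ 10^{17} & 1 \end{bmatrix}, \qquad U = \begin{bmatrix} 10^{-17} & 1 \\ 0 & 2 - 10^{17} \end{bmatrix}$$

Can we represent $2 - 10^{17}$?

In order to compute the exact solution, first forward eliminate

$$\begin{bmatrix} 1 & 0 \\ 10^{17} & 1 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 3 \end{bmatrix}$$

and therefore $y_1 = 1$, $y_2 = 3 - 10^{17}$.

Backward substitution yields

$$\begin{bmatrix} 10^{-17} & 1 \\ 0 & 2 - 10^{17} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 3 - 10^{17} \end{bmatrix}$$

and therefore

$$x_2 = \frac{3 - 10^{17}}{2 - 10^{17}} \approx 1$$

and

$$x_1 = 10^{17} \left( 1 - \frac{3 - 10^{17}}{2 - 10^{17}} \right) = 10^{17} \left( \frac{2 - 10^{17} - (3 - 10^{17})}{2 - 10^{17}} \right) \approx 1$$

In the rounded case

$$\begin{bmatrix} 1 & 0 \\ 10^{17} & 1 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 3 \end{bmatrix} \quad \Rightarrow \quad y_1 = 1, \; y_2 = -10^{17}$$

$$\begin{bmatrix} 10^{-17} & 1 \\ 0 & -10^{17} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 1 \\ -10^{17} \end{bmatrix} \quad \Rightarrow \quad x_2 = 1, \; x_1 = 0$$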

Numerical Problems

LU Basics

Small Pivots

An Aside on Floating Point Arithmetic

Double precision number

X.XXXX…X · 10^exponent

64 bits: sign (1 bit), exponent (11 bits), mantissa (52 bits)

Basic Problem

$1.0 - 10^{-17} = 1.0$ (the difference lies beyond the roughly 16 significant decimal digits a double can hold)

or

$1.0000001 - 1 + (\pi \cdot 10^{-7})$ is right to only about 8 digits

Key Issue

Avoid small differences between large numbers!
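These effects are easy to reproduce. The sketch below assumes IEEE double precision, which is what Python's `float` uses on common platforms; the variable names are illustrative. The spacing between 1.0 and the next larger double is 2⁻⁵², so a change of 10⁻¹⁷ is lost entirely.

```python
import sys

eps = sys.float_info.epsilon           # spacing between 1.0 and the next larger double
print(eps == 2.0 ** -52)

# A small number subtracted from a much larger one can vanish entirely.
lost_small = (1.0 - 1e-17 == 1.0)
lost_three = (3.0 - 1e17 == -1e17)     # the "3" is rounded away
print(lost_small, lost_three)

# A small difference between nearby numbers keeps only some correct digits.
diff = 1.0000001 - 1.0                 # ideally exactly 1e-7
rel_err = abs(diff - 1e-7) / 1e-7
print(diff, rel_err)
```

The last two lines show that the computed difference agrees with 10⁻⁷ only to a limited number of digits, because the rounding error of storing 1.0000001 is large relative to the small result.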

LU Basics

Numerical Problems

Small Pivots

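Back to the contrived example

LU Exact

$$\begin{bmatrix} 1 & 0 \\ 10^{17} & 1 \end{bmatrix} \begin{bmatrix} 10^{-17} & 1 \\ 0 & 2 - 10^{17} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 3 \end{bmatrix}, \qquad \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}_{\text{Exact}} = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

LU Rounded

$$\begin{bmatrix} 1 & 0 \\ 10^{17} & 1 \end{bmatrix} \begin{bmatrix} 10^{-17} & 1 \\ 0 & -10^{17} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 1 \\ 3 \end{bmatrix}, \qquad \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}_{\text{Rounded}} = \begin{bmatrix} 0 \\ 1 \end{bmatrix}$$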

Numerical Problems

LU Basics

Small Pivots

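Partial Pivoting for Roundoff reduction

If $|M_{ii}| < \max_{j > i} |M_{ji}|$

Swap row i with row $\arg\max_{j > i} |M_{ji}|$

$$\text{LU reordered} = \begin{bmatrix} 1 & 0 \\ 10^{-17} & 1 \end{bmatrix} \begin{bmatrix} 1 & 2 \\ 0 & 1 - 2 \cdot 10^{-17} \end{bmatrix}$$

This multiplier is small: $10^{-17}$

This term gets rounded: $1 - 2 \cdot 10^{-17}$

To see why pivoting helped, notice that

$$\begin{bmatrix} 1 & 0 \\ 10^{-17} & 1 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \end{bmatrix} = \begin{bmatrix} 3 \\ 1 \end{bmatrix}$$

yields $y_1 = 3$, $y_2 = 1 - 3 \cdot 10^{-17} \approx 1$.

Notice that without partial pivoting y2 was $3 - 10^{17}$, or $-10^{17}$ with rounding. The right-hand side value 3 in the unpivoted case was rounded away, whereas now it is preserved. Continuing with the back substitution,

$$\begin{bmatrix} 1 & 2 \\ 0 & 1 - 2 \cdot 10^{-17} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 3 \\ 1 \end{bmatrix}$$

yields $x_2 \approx 1$, $x_1 \approx 1$.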
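The contrived example can be run in double precision to compare the two orderings. The solver below is a minimal sketch written for this illustration (the name `solve` and the `pivot` flag are assumptions); it performs Gaussian elimination on an augmented copy and then back substitution.

```python
def solve(M, b, pivot):
    """Gaussian elimination with optional partial pivoting, then back substitution."""
    n = len(b)
    A = [row[:] + [bi] for row, bi in zip(M, b)]      # augmented copy [M | b]
    for i in range(n - 1):
        if pivot:
            # Bring the largest-magnitude entry of column i into the pivot row.
            p = max(range(i, n), key=lambda r: abs(A[r][i]))
            A[i], A[p] = A[p], A[i]
        for j in range(i + 1, n):
            m = A[j][i] / A[i][i]                     # multiplier
            for k in range(i, n + 1):
                A[j][k] -= m * A[i][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = A[i][n] - sum(A[i][k] * x[k] for k in range(i + 1, n))
        x[i] = s / A[i][i]
    return x


M = [[1e-17, 1.0],
     [1.0, 2.0]]
b = [1.0, 3.0]
x_no_pivot = solve(M, b, pivot=False)
x_pivot = solve(M, b, pivot=True)
print(x_no_pivot, x_pivot)
```

Without pivoting the huge multiplier wipes out the entries 2 and 3 of the second equation, and x1 comes out as 0; with pivoting both unknowns come out as 1, matching the exact solution to working precision.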

LU Basics

Numerical Problems

Small Pivots

If the matrix is diagonally dominant or partial pivoting for round-off reduction is used during LU Factorization:

1) The multipliers will never be larger than one in magnitude.

2) The maximum magnitude entry in the LU factors will never be larger than $2^{(n-1)}$ times the maximum magnitude entry in the original matrix.


Hard to Solve

Systems

Fitting Example

Polynomial Interpolation

Table of Data

t0    f(t0)

t1    f(t1)

…

tN    f(tN)

[Figure: plot of f versus t, with the data points at t0, t1, t2, …, tN.]

Problem: fit data with an Nth order polynomial

$$f(t) = \alpha_0 + \alpha_1 t + \alpha_2 t^2 + \cdots + \alpha_N t^N$$

Example Problem

Hard to Solve

Systems

Matrix Form

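$$\underbrace{\begin{bmatrix} 1 & t_0 & t_0^2 & \cdots & t_0^N \\ 1 & t_1 & t_1^2 & \cdots & t_1^N \\ \vdots & \vdots & \vdots & & \vdots \\ 1 & t_N & t_N^2 & \cdots & t_N^N \end{bmatrix}}_{M_{\text{interp}}} \begin{bmatrix} \alpha_0 \\ \alpha_1 \\ \vdots \\ \alpha_N \end{bmatrix} = \begin{bmatrix} f(t_0) \\ f(t_1) \\ \vdots \\ f(t_N) \end{bmatrix}$$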

The kth row in the system of equations on the slide corresponds to insisting that the

Nth order polynomial match the data exactly at point tk. Notice that we selected the

order of the polynomial to match the number of data points so that a square system

is generated. This would not generally be the best approach to fitting data, as we

will see in the next slides.
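Building the interpolation matrix takes one line per row. The sketch below uses made-up sample points and a known quadratic to check that row k times the coefficient vector reproduces the polynomial's value at t_k; the names `interpolation_matrix`, `t`, `alpha` and `values` are assumptions for this example.

```python
def interpolation_matrix(t):
    """Row k is [1, t_k, t_k**2, ..., t_k**N] for the N+1 sample points in t."""
    n = len(t)
    return [[tk ** j for j in range(n)] for tk in t]


# Hypothetical data: three points, so a 2nd order polynomial.
t = [0.0, 1.0, 2.0]
M_interp = interpolation_matrix(t)

# Coefficients of f(t) = 1 + 2 t + 3 t^2, chosen here for checking only.
alpha = [1.0, 2.0, 3.0]
values = [sum(row[j] * alpha[j] for j in range(len(alpha))) for row in M_interp]
print(M_interp)
print(values)    # f(0), f(1), f(2)
```

In the fitting problem the roles are reversed: the values f(t_k) are given and the coefficients are found by solving the square system.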


Hard to Solve

Systems

Fitting Example

Fitting f(t) = t

[Figure: left panel, f versus t; right panel, Coefficient Value versus Coefficient number.]

Notice what happens when we try to fit a high order polynomial to a function that is nearly t. Instead of getting only one coefficient equal to one and the rest zero, when a 100th order polynomial is fit to the data, extremely large coefficients are generated for the higher order terms. This is due to the extreme sensitivity of the problem, as we shall see shortly.

Perturbation Analysis

Hard to Solve

Systems

Measuring Error Norms

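Vector Norms

L2 (Euclidean) norm: $\|x\|_2 = \sqrt{\sum_{i=1}^{n} |x_i|^2}$ (the region $\|x\|_2 < 1$ is the unit circle)

L1 norm: $\|x\|_1 = \sum_{i=1}^{n} |x_i|$ (unit region $\|x\|_1 < 1$)

L∞ norm: $\|x\|_\infty = \max_i |x_i|$ (the region $\|x\|_\infty < 1$ is a square)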
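The three vector norms are one-liners in plain Python. The function names and the sample vector below are assumptions chosen for this sketch.

```python
import math


def norm_1(x):
    """Sum of absolute values."""
    return sum(abs(v) for v in x)


def norm_2(x):
    """Euclidean length."""
    return math.sqrt(sum(v * v for v in x))


def norm_inf(x):
    """Largest absolute value."""
    return max(abs(v) for v in x)


x = [3.0, -4.0]
print(norm_1(x), norm_2(x), norm_inf(x))
```

For any vector the three values are ordered as norm_inf ≤ norm_2 ≤ norm_1, which is visible in this example.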

Perturbation Analysis

Hard to Solve

Systems

Measuring Error Norms

Matrix Norms

Vector induced norm:

$$\|A\| = \max_{x \neq 0} \frac{\|A x\|}{\|x\|} = \max_{\|x\| = 1} \|A x\|$$

Induced norm of A is the maximum “magnification” of x by A

Easy norms to compute:

$\|A\|_1 = \max_j \sum_{i=1}^{n} |A_{ij}|$ = max abs column sum. Why? Let $x = [1, 0, \ldots, 0]^T$

$\|A\|_\infty = \max_i \sum_{j=1}^{n} |A_{ij}|$ = max abs row sum. Why? Let $x = [\pm 1, \pm 1, \ldots, \pm 1]^T$

$\|A\|_2$ = not so easy to compute!!
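The two easy induced norms can be sketched directly from their column-sum and row-sum formulas. The function names and the 2 x 2 matrix are assumptions for this illustration; the final lines check the "Why?" vectors on this particular matrix.

```python
def matrix_norm_1(A):
    """Maximum absolute column sum."""
    n_cols = len(A[0])
    return max(sum(abs(row[j]) for row in A) for j in range(n_cols))


def matrix_norm_inf(A):
    """Maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in A)


def matvec(A, x):
    return [sum(a * v for a, v in zip(row, x)) for row in A]


A = [[1.0, -2.0],
     [3.0, 4.0]]
print(matrix_norm_1(A), matrix_norm_inf(A))

# A unit vector picks out the largest column (here column 2).
col_hit = sum(abs(v) for v in matvec(A, [0.0, 1.0]))
# A +/-1 vector matching the signs of the largest row (here row 2).
row_hit = max(abs(v) for v in matvec(A, [1.0, 1.0]))
print(col_hit, row_hit)
```

In both cases a vector of unit norm attains the bound, which is why the maximum magnification equals the column sum or row sum.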

Hard to Solve

Systems

Perturbation Analysis

Perturbation Equation

$$(M + \delta M)(x + \delta x) = M x + \delta M \, x + M \, \delta x + \delta M \, \delta x = b$$

Here $\delta M$ models LU roundoff, $b$ is the unperturbed right-hand side, and $\delta x$ models the solution perturbation.

Since $M x - b = 0$

$$M \, \delta x = -\delta M (x + \delta x) \;\; \Rightarrow \;\; \delta x = -M^{-1} \delta M (x + \delta x)$$

Taking Norms

$$\|\delta x\| \le \|M^{-1}\| \, \|\delta M\| \, \|x + \delta x\|$$

Relative Error Relation

$$\frac{\|\delta x\|}{\|x + \delta x\|} \le \underbrace{\|M^{-1}\| \, \|M\|}_{\text{“Condition Number”}} \; \frac{\|\delta M\|}{\|M\|}$$

As the algebra on the slide shows, the relative change in the solution x is bounded by an M-dependent factor times the relative change in M. The factor

$$\|M^{-1}\| \, \|M\|$$

was historically referred to as the condition number of M, but that definition has been abandoned because it makes the condition number norm-dependent. Instead, the condition number of M is the ratio of the largest to the smallest singular value of M (this is the value of $\|M^{-1}\| \, \|M\|$ in the induced 2-norm):

$$\text{cond}(M) = \frac{\sigma_{\max}(M)}{\sigma_{\min}(M)}$$

Singular values are outside the scope of this course; consider consulting Trefethen & Bau.

Perturbation Analysis

Hard to Solve

Systems

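Geometric Approach is clearer

$M = [M_1 \; M_2]$. Solving $M x = b$ is finding $x_1 M_1 + x_2 M_2 = b$

Case: Orthogonal Columns

$$\begin{bmatrix} 1 & 0 \\ 0 & 10^{-6} \end{bmatrix}$$

[Figure: the normalized columns $M_1 / \|M_1\|$ and $M_2 / \|M_2\|$ drawn in the (x1, x2) plane, at right angles.]

Case: Columns nearly aligned

$$\begin{bmatrix} 1 & 1 - 10^{-6} \\ 1 - 10^{-6} & 1 \end{bmatrix}$$

[Figure: the normalized columns $M_1 / \|M_1\|$ and $M_2 / \|M_2\|$ drawn in the (x1, x2) plane, nearly on top of each other.]

When vectors are nearly aligned, it is difficult to determine how much of $M_1$ versus how much of $M_2$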
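The geometric picture can be made quantitative with a small experiment. The sketch below normalizes the columns (as in the figures), solves the 2 x 2 system with the explicit determinant formula, and measures how much the weights move when b is nudged by 10⁻⁶. All function names, the right-hand side and the perturbation are assumptions chosen for this illustration.

```python
import math


def column_cosine(M):
    """Cosine of the angle between the two columns of a 2 x 2 matrix."""
    dot = M[0][0] * M[0][1] + M[1][0] * M[1][1]
    return dot / (math.hypot(M[0][0], M[1][0]) * math.hypot(M[0][1], M[1][1]))


def normalize_columns(M):
    norms = [math.hypot(M[0][j], M[1][j]) for j in range(2)]
    return [[M[i][j] / norms[j] for j in range(2)] for i in range(2)]


def solve_2x2(M, b):
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [(b[0] * M[1][1] - M[0][1] * b[1]) / det,
            (M[0][0] * b[1] - b[0] * M[1][0]) / det]


def weight_change(M, b, db):
    """Largest change in the weights when b is perturbed by db (columns normalized)."""
    N = normalize_columns(M)
    x = solve_2x2(N, b)
    xp = solve_2x2(N, [b[0] + db[0], b[1] + db[1]])
    return max(abs(xp[0] - x[0]), abs(xp[1] - x[1]))


orthogonal = [[1.0, 0.0], [0.0, 1e-6]]
aligned = [[1.0, 1.0 - 1e-6], [1.0 - 1e-6, 1.0]]
b, db = [1.0, 1.0], [1e-6, -1e-6]

change_orth = weight_change(orthogonal, b, db)
change_aligned = weight_change(aligned, b, db)
print(column_cosine(orthogonal), column_cosine(aligned))
print(change_orth, change_aligned)
```

With orthogonal columns the weights move by about as much as b did; with nearly aligned columns the same tiny perturbation moves the weights by more than one, so the split between M1 and M2 is poorly determined.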

Geometric Analysis

Hard to Solve

Systems

Polynomial Interpolation

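[Figure: log(cond(M)) versus n for the interpolation matrix. Approximate values read from the plot:]

n = 4: cond(M) ~ 314

n = 8: cond(M) ~ 10^6

n = 16: cond(M) ~ 10^13

n = 32: cond(M) ~ 10^20

[Figure: the functions 1, t and t^2 plotted versus t.]

The power series polynomials are nearly linearly dependent

$$\begin{bmatrix} 1 & t_1 & t_1^2 & \cdots \\ 1 & t_2 & t_2^2 & \cdots \\ \vdots & \vdots & \vdots & \\ 1 & t_N & t_N^2 & \cdots \end{bmatrix}$$

Question: Does row scaling reduce growth?

$$\begin{bmatrix} d_{11} & 0 & \cdots & 0 \\ 0 & \ddots & & \vdots \\ \vdots & & \ddots & 0 \\ 0 & \cdots & 0 & d_{NN} \end{bmatrix} \begin{bmatrix} a_{11} & \cdots & a_{1N} \\ \vdots & & \vdots \\ a_{N1} & \cdots & a_{NN} \end{bmatrix} = \begin{bmatrix} d_{11} a_{11} & \cdots & d_{11} a_{1N} \\ \vdots & & \vdots \\ d_{NN} a_{N1} & \cdots & d_{NN} a_{NN} \end{bmatrix}$$

Does row scaling reduce the condition number?

$\|M\| \, \|M^{-1}\| \equiv$ condition number of M

Theorem: If floating point arithmetic is used, then row scaling (D M x = D b) will not reduce growth in a meaningful way.

If LU factorization applied to $M x = b$ yields the computed solution $\hat{x}$

and LU factorization applied to $D M x = D b$ yields the computed solution $\hat{x}'$

then $\hat{x} = \hat{x}'$ ← No roundoff reduction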

Summary

Solution Existence and Uniqueness

Gaussian Elimination Basics

LU factorization

Pivoting and Growth

Hard to solve problems

Conditioning
