1.2.2 Gauss-Jordan Elimination


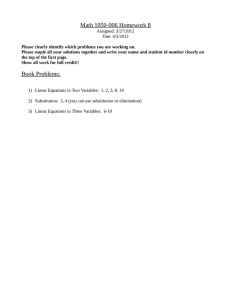

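In the method of Gaussian elimination, a system $Ax = b$ of the general form

$$
\begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1N} \\
a_{21} & a_{22} & a_{23} & \cdots & a_{2N} \\
\vdots & \vdots & \vdots & & \vdots \\
a_{N1} & a_{N2} & a_{N3} & \cdots & a_{NN}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_N \end{bmatrix}
=
\begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_N \end{bmatrix}
\tag{1.2.2-1}
$$

is converted, after $\tfrac{2}{3}N^3$ FLOPs, to an equivalent system $A'x = b'$ of upper triangular form

$$
\begin{bmatrix}
a'_{11} & a'_{12} & a'_{13} & \cdots & a'_{1N} \\
 & a'_{22} & a'_{23} & \cdots & a'_{2N} \\
 & & a'_{33} & \cdots & a'_{3N} \\
 & & & \ddots & \vdots \\
 & & & & a'_{NN}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ \vdots \\ x_N \end{bmatrix}
=
\begin{bmatrix} b'_1 \\ b'_2 \\ b'_3 \\ \vdots \\ b'_N \end{bmatrix}
\tag{1.2.2-2}
$$

At this point it is possible, through backward substitution, to solve for the unknowns in the order $x_N, x_{N-1}, x_{N-2}, \ldots$ in $\tfrac{N^2}{2}$ steps.

In the method of Gauss-Jordan elimination, one continues the work of elimination, placing zeros above the diagonal. To "zero" the element at $(N-1, N)$, we write the last two equations of (1.2.2-2):

$$
\begin{aligned}
a'_{N-1,N-1}\,x_{N-1} + a'_{N-1,N}\,x_N &= b'_{N-1} \\
a'_{NN}\,x_N &= b'_N
\end{aligned}
\tag{1.2.2-3}
$$

We then define

$$
\lambda_{N-1,N} = \frac{a'_{N-1,N}}{a'_{NN}}
\tag{1.2.2-4}
$$

and replace row $N-1$ with the equation obtained from the row operation

$$
\begin{aligned}
&\left(a'_{N-1,N-1}\,x_{N-1} + a'_{N-1,N}\,x_N = b'_{N-1}\right) - \lambda_{N-1,N}\left(a'_{NN}\,x_N = b'_N\right) \\
&\Longrightarrow\quad a'_{N-1,N-1}\,x_{N-1} + \left(a'_{N-1,N} - \lambda_{N-1,N}\,a'_{NN}\right)x_N = b'_{N-1} - b'_N\,\lambda_{N-1,N}
\end{aligned}
\tag{1.2.2-5}
$$

Defining

$$
b''_{N-1} = b'_{N-1} - b'_N\,\lambda_{N-1,N}
\tag{1.2.2-6}
$$

and noting that

$$
a''_{N-1,N} = a'_{N-1,N} - \lambda_{N-1,N}\,a'_{NN} = a'_{N-1,N} - \left(\frac{a'_{N-1,N}}{a'_{NN}}\right)a'_{NN} = 0
\tag{1.2.2-7}
$$

the set of equations after this row operation becomes

$$
\begin{bmatrix}
a'_{11} & a'_{12} & a'_{13} & \cdots & a'_{1,N-1} & a'_{1,N} \\
 & a'_{22} & a'_{23} & \cdots & a'_{2,N-1} & a'_{2,N} \\
 & & a'_{33} & \cdots & a'_{3,N-1} & a'_{3,N} \\
 & & & \ddots & \vdots & \vdots \\
 & & & & a''_{N-1,N-1} & 0 \\
 & & & & & a'_{NN}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ \vdots \\ x_{N-1} \\ x_N \end{bmatrix}
=
\begin{bmatrix} b'_1 \\ b'_2 \\ b'_3 \\ \vdots \\ b''_{N-1} \\ b'_N \end{bmatrix}
\tag{1.2.2-8}
$$

Here $a''_{N-1,N-1} = a'_{N-1,N-1}$, since row $N$ contains no $x_{N-1}$ term. We can continue this process until the set of equations is in diagonal form

$$
\begin{bmatrix}
a'''_{11} & & & \\
 & a'''_{22} & & \\
 & & \ddots & \\
 & & & a'''_{NN}
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_N \end{bmatrix}
=
\begin{bmatrix} b'''_1 \\ b'''_2 \\ \vdots \\ b'''_N \end{bmatrix}
\tag{1.2.2-9}
$$

Dividing each equation by the value of its single coefficient yields

$$
\begin{bmatrix}
1 & & & \\
 & 1 & & \\
 & & \ddots & \\
 & & & 1
\end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_N \end{bmatrix}
=
\begin{bmatrix} b'''_1 / a'''_{11} \\ b'''_2 / a'''_{22} \\ \vdots \\ b'''_N / a'''_{NN} \end{bmatrix}
\tag{1.2.2-10}
$$

The matrix on the left, which has ones along the principal diagonal and zeros everywhere else, is called the identity matrix $I$. It has the property that for any vector $v$,

$$
Iv = v
\tag{1.2.2-11}
$$

The form (1.2.2-10) therefore immediately gives the solution to the problem.

In practice, we use Gaussian elimination, stopping at (1.2.2-2) to begin backward substitution rather than continuing the elimination process, because backward substitution is so fast: $N^2 \ll \tfrac{2}{3}N^3$ for all but small problems. We therefore do not consider the method of Gauss-Jordan elimination further.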
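As an illustration only, the two routes described in this section can be sketched in plain Python. The function names (`forward_eliminate`, `back_substitute`, `gauss_jordan_solve`) and the 3×3 test system are assumptions made for this sketch, not part of the original notes. The sketch also assumes that every diagonal pivot is nonzero, so no row exchanges (pivoting) are performed.

```python
def forward_eliminate(A, b):
    """Reduce A x = b to upper triangular form A' x = b', as in (1.2.2-2).

    Assumption of this sketch: no pivot A[j][j] is zero, so no pivoting is done.
    """
    n = len(A)
    A = [row[:] for row in A]  # work on copies so the inputs are untouched
    b = b[:]
    for j in range(n - 1):              # pivot column
        for i in range(j + 1, n):       # rows below the pivot
            lam = A[i][j] / A[j][j]
            for k in range(j, n):
                A[i][k] -= lam * A[j][k]
            b[i] -= lam * b[j]
    return A, b


def back_substitute(U, c):
    """Solve the upper triangular system U x = c in the order x_N, x_{N-1}, ..."""
    n = len(U)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(U[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (c[i] - s) / U[i][i]
    return x


def gauss_jordan_solve(A, b):
    """Continue eliminating above the diagonal, then divide by the diagonal."""
    U, c = forward_eliminate(A, b)
    n = len(U)
    for j in range(n - 1, 0, -1):        # column to clear, last column first
        for i in range(j - 1, -1, -1):   # rows above the diagonal
            lam = U[i][j] / U[j][j]      # multiplier, cf. (1.2.2-4)
            U[i][j] = 0.0                # zeroed element, cf. (1.2.2-7)
            c[i] -= lam * c[j]           # updated right-hand side, cf. (1.2.2-6)
    # Diagonal form (1.2.2-9); dividing by each coefficient gives (1.2.2-10).
    return [c[i] / U[i][i] for i in range(n)]


# Hypothetical test system whose solution is x = (2, 3, -1).
A = [[2.0, 1.0, -1.0],
     [-3.0, -1.0, 2.0],
     [-2.0, 1.0, 2.0]]
b = [8.0, -11.0, -3.0]

U, c = forward_eliminate(A, b)
print(back_substitute(U, c))      # Gaussian elimination + backward substitution
print(gauss_jordan_solve(A, b))   # Gauss-Jordan elimination
```

Both routes return the same solution. When column $j$ is cleared, row $j$ already has zeros to the right of its diagonal entry, so only the right-hand side needs updating in the Gauss-Jordan loop.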