Receivers, Antennas, and Signals Modulation and Coding Professor David H. Staelin

Lec14.10-1

2/6/01

Multi-Phase-Shift Keying, “MPSK”

BPSK: Binary Phase-Shift Keying, M = 2 phases (signals S1(t) and S2(t))

QPSK: Quadrature Phase-Shift Keying, M = 4 (2 bits/baud)

M = 8 (3 bits/baud)

M = 16 (4 bits/baud)

[Figure: BPSK waveforms S1(t) and S2(t) of duration T; phase step; “eye diagram”]

[Figure: Pe for ideal receiver. BER (bit error rate), 1.0 down to 10^-6, versus E/No (dB) from 0 to 30 dB (E is J/bit), with curves for M = 2, 4, 8, 16, 32; annotation “Small Loss”]

[Figure: Bandwidth/bit = B/R [Hz per bit/sec], 0 to 1.0, versus E/No (dB) from 0 to 20 dB]

QPSK is bandwidth efficient, but has little E/No penalty.

Error Probabilities for Binary Signaling

Pe = probability of bit error; Eb = average energy per bit

Matched filter reception of unipolar baseband, coherent OOK, or coherent FSK:

$P_e = Q\left(\sqrt{E_b/N_o}\right)$

Matched filter reception of bipolar baseband, BPSK, or QPSK:

$P_e = Q\left(\sqrt{2(E_b/N_o)}\right)$

The two curves are separated by 3 dB.

[Figure: Pe, 1.0 down to 10^-7 (log scale), versus Eb/No (dB) from –1 to 14 dB, for both cases]

Source: Digital and Analog Communication Systems, L.W. Couch II (4th Edition), Page 351
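The two Pe expressions on this slide can be evaluated numerically. The following is a minimal sketch, assuming Q(x) is the standard Gaussian tail probability written in terms of the complementary error function; the function names are illustrative and not from the lecture.

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = P{N(0, 1) > x}."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def pe_unipolar(ebno_db: float) -> float:
    """Pe = Q(sqrt(Eb/No)): unipolar baseband, coherent OOK or coherent FSK."""
    ebno = 10.0 ** (ebno_db / 10.0)  # convert dB to a linear ratio
    return q_function(math.sqrt(ebno))


def pe_bipolar(ebno_db: float) -> float:
    """Pe = Q(sqrt(2 Eb/No)): bipolar baseband, BPSK or QPSK."""
    ebno = 10.0 ** (ebno_db / 10.0)
    return q_function(math.sqrt(2.0 * ebno))


if __name__ == "__main__":
    for db in (0, 4, 8, 12):
        print(f"{db:2d} dB  unipolar {pe_unipolar(db):.2e}  bipolar {pe_bipolar(db):.2e}")
```

Because the only difference is the factor of 2 inside the square root, shifting the unipolar curve by 10 log10(2) ≈ 3 dB reproduces the bipolar curve.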

Phasor Diagrams

$S(t) = \mathrm{Re}\{S e^{j\omega t}\}$, where S is the phasor

[Figure: four constellations in the Re{S}–Im{S} plane, with decision regions for H1 marked:

QPSK, with an equal-noise contour around a phasor

16-PSK

M = 16, amplitude and phase, rectangular

M = 19, hexagonal (superior to rectangular)]

Intersymbol Interference

MPSK examples (each shown as a typical baud in time and as the spectrum Si(f) for channel i, centered at fi):

Boxcar envelope: sidelobes interfere with adjacent channels

Window function: broader channel bandwidth, lower sidelobes

Overlapping window functions (overlap at the baud boundary): narrowband with lower sidelobes

Want symbols to be orthogonal within and between windows

Performance Degradation Due to Interchannel Interference

[Figure: signal degradation (dB), equal to the signal boost required for constant Pe, on a log axis from 0.1 to 10, versus channel spacing ∆f/R (Hz per bit/sec) from 0 to 2, for a given E/No; curves for boxcar (QPSK) and “tamed FM” (window overlap), each with N = 1 and N = ∞ interfering channels]

[Figure: BER, 10^-2 down to 10^-8, versus ∆f/R from 0.4 to 0.7, for a given E/No; “tamed FM” with N = 1 and N = ∞ interfering channels]

Closer channel spacing requires more signal power to maintain Pe

Recover by boosting signal power (works until No becomes negligible)

Error Reduction via Channel Coding

Shannon’s Channel Capacity Theorem

We want Pe → 0 (banking, etc.) ⇒ E/No → ∞ using prior methods

Theorem: Pe → 0 if channel capacity “C” (in bits/sec) is not exceeded

$C = B \log_2(1 + S/N)$ bits/sec

where B is the bandwidth [Hz], S is the average signal power, and N = NoB is the noise power

(Shannon showed “can,” not “how”)

Examples: S/N = 10 yields C ≈ 3.5B (roughly 3 bits/sec/Hz); S/N = 127 (∼21 dB) yields C = 7B (7 bits/sec/Hz)

e.g. 3-kHz phone at 9600 bps requires S/N ≥ ∼10 dB
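The capacity examples above can be checked directly. This is a small sketch, assuming S/N is given as a linear power ratio; the helper names are hypothetical.

```python
import math


def capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon capacity C = B log2(1 + S/N), in bits/sec."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


def min_snr_db(rate_bps: float, bandwidth_hz: float) -> float:
    """Smallest S/N (in dB) for which C >= rate_bps in the given bandwidth."""
    snr_linear = 2.0 ** (rate_bps / bandwidth_hz) - 1.0
    return 10.0 * math.log10(snr_linear)


if __name__ == "__main__":
    print(capacity_bps(1.0, 10.0))    # bits/sec per Hz at S/N = 10
    print(capacity_bps(1.0, 127.0))   # bits/sec per Hz at S/N = 127
    print(min_snr_db(9600.0, 3000.0)) # 3-kHz phone line at 9600 bps
```

For the phone-line example the bound comes out slightly above 9 dB, consistent with the slide's "≥ ∼10 dB".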

Channel Codes

Definitions:

1. “Channel codes” reduce Pe

2. “Source codes” reduce redundancy

3. “Cryptographic” codes conceal

Solomon Golomb: A message with content and clarity

has gotten to be quite a rarity;

to combat the terror of serious error,

use bits of appropriate parity.

Channel codes are our principal approach to letting

R → C with acceptable Pe


Coding Delays Message and Increases Bandwidth

Can show: $P_e \le 2^{-T\,k(C,R)}$, T = time delay in coding process

e.g. use $M = 2^{RT}$ possible messages in T sec.

(RT = #bits in T sec; “block coding”)

use $M = 2^{RT}$ frequencies spaced at ∼1/T Hz

then $B = 2^{RT}/T$ (can → ∞!)

Minimum S/No for Pe → 0

Can show: $C_\infty \triangleq \lim_{B \to \infty} C = S/(N_o \ln 2) \ge R$ bits/sec (for $P_e \to 0$)

Therefore

S/No ≥ 0.69 R for Pe → 0 as B → ∞

[Figure: Pe{bit error}, 1 down to 10^-5, versus E/No (J/bit over W/Hz) from 0.1 to 10, with 0.69 marked on the E/No axis; M = number of frequencies; curves for M ≅ 2, for log2 M = 20 (M ≅ 10^6), and for log2 M = ∞; typically 2 ≤ M ≤ 64]
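The limit quoted above follows from the capacity formula in a few lines. The derivation below is a sketch that assumes N = NoB and uses the small-argument approximation ln(1 + x) ≈ x; E = S/R denotes the energy per bit.

```latex
\begin{aligned}
C &= B \log_2\!\left(1 + \frac{S}{N_o B}\right)
   = \frac{B}{\ln 2}\,\ln\!\left(1 + \frac{S}{N_o B}\right) \\
C_\infty &= \lim_{B \to \infty} C
   = \frac{B}{\ln 2}\cdot\frac{S}{N_o B}
   = \frac{S}{N_o \ln 2} \\
R \le C_\infty \;&\Rightarrow\; \frac{S}{N_o} \ge R \ln 2 \approx 0.69\,R
   \;\Rightarrow\; \frac{E}{N_o} = \frac{S}{R N_o} \ge \ln 2 \approx 0.69
\end{aligned}
```

In decibels, E/No ≥ 0.69 corresponds to about –1.6 dB.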

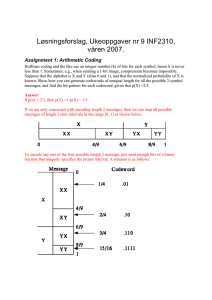

Error Detection: K + R Code

Blocks: message (K bits) followed by check bits (R bits)

Simple parity check: xxx…x P, with K message bits and R = 1 parity bit P,

where P is chosen so that the number of 1’s in the block is even, or odd (2 standards)

i.e., a single bit error transforms its block into a member of the “illegal” message set (half of all blocks are illegal here).
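The parity check above can be sketched as follows. This is a minimal illustration, assuming bits are stored as a list of 0/1 integers with the parity bit appended last; the function names are hypothetical.

```python
from itertools import product


def add_parity(message_bits, even=True):
    """Append one parity bit so the block has an even (or odd) number of 1's."""
    ones = sum(message_bits)
    parity = ones % 2 if even else (ones + 1) % 2
    return list(message_bits) + [parity]


def parity_ok(block, even=True):
    """Return True if the block is a legal word under the chosen standard."""
    return sum(block) % 2 == (0 if even else 1)


if __name__ == "__main__":
    block = add_parity([1, 0, 1, 1])
    print(block, parity_ok(block))
    block[2] ^= 1                      # a single bit error
    print(block, parity_ok(block))     # now an illegal word
    legal = sum(parity_ok(list(w)) for w in product((0, 1), repeat=5))
    print(legal, "of 32 five-bit words are legal")
```

A single error is always detected, but two errors in the same block restore the parity and go unnoticed, which is why this code detects rather than corrects.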

Error Correction Code

Message = $m_1\, m_2 \ldots m_K$

Checks = $m_{K+1} \ldots m_{K+R}$

Any of these K + R bits can be erroneous

Receive: $\hat{m}_1\ \hat{m}_2 \ldots \hat{m}_{K+R}$

Correct: $m_1\ m_2 \ldots m_{K+R}$

Sum (modulo 2): 0 0 … 0 if no error

Consider locations of “1”s in K + R slots of Sum

Single-Bit Error Correction

If we wish to detect and correct 0 or 1 bit error in the block of K + R bits, we need

$K + R \ge K + \log_2(K + R + 1)$ bits/block

(K = original information; K + R = number of slots for a 1-bit error; 1 = the “no error” message)

| K | R ≥ | R/(R + K) |
|---|---|---|
| 1 | 2 | 0.67 |
| 2 | 3 | 0.6 |
| 3 | 3 | 0.5 |
| 4 | 3 | 0.4 |
| 5 | 4 | 0.4 |
| 100 | 7 | 0.07 |
| 10^3 | ~10 | 0.01 |
| 10^6 | ~20 | 0.00002 |

Not too efficient for small K

R = check bits needed to detect and fix ≤ 1 error in a block of K + R

Two-Bit Error Correction

If we wish to correct two errors, we need

$K + R \ge K + \log_2\left[1 + (K + R) + \frac{(K + R)(K + R - 1)}{2}\right]$

(the three terms inside the logarithm count the “no error” message, the 1-error cases, and the 2-error cases)

| K | R ≥ | R/(R + K) |
|---|---|---|
| 5 | 7 | 0.6 |
| 10^3 | ∼20 | 0.02 |
| 10^6 | ∼40 | 0.00004 |

R = check bits needed to detect and fix ≤ 2 errors in a block of K + R bits
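The counting bounds for single- and two-bit correction can be evaluated by brute force. The sketch below assumes the bound is read as "2^R must be at least the number of distinct error patterns (including no error) in K + R bits"; `min_check_bits` is an illustrative name. It gives a necessary number of check bits, not a guarantee that a code achieving it exists.

```python
from math import comb


def min_check_bits(k: int, max_errors: int) -> int:
    """Smallest R with 2**R >= number of patterns of 0..max_errors errors in K + R bits."""
    r = 0
    while True:
        n = k + r
        patterns = sum(comb(n, e) for e in range(max_errors + 1))
        if 2 ** r >= patterns:
            return r
        r += 1


if __name__ == "__main__":
    for k in (1, 2, 3, 4, 5, 100, 10**3, 10**6):
        r = min_check_bits(k, 1)
        print(f"K = {k:>7}  R >= {r:>2}  R/(R+K) = {r / (r + k):.2g}")
    for k in (5, 10**3, 10**6):
        r = min_check_bits(k, 2)
        print(f"K = {k:>7}  R >= {r:>2}  R/(R+K) = {r / (r + k):.2g}  (two errors)")
```

The overhead R/(R + K) falls quickly as the block grows, at the cost of the longer coding delay discussed earlier.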

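Implementation: Single-Error Correction

Block ≜ m1 m2 m3 m4 C1 C2 C3 (K = 4, R = 3) (4 message bits, 3 check bits)

Let

$C_1 \triangleq m_1 \oplus m_2 \oplus m_3$

$C_2 \triangleq m_1 \oplus m_2 \oplus m_4$

$C_3 \triangleq m_1 \oplus m_3 \oplus m_4$

where ⊕ is “sum, modulo-2”, with truth table:

| ⊕ | 0 | 1 |
|---|---|---|
| 0 | 0 | 1 |
| 1 | 1 | 0 |

(Note: $C_1 \oplus C_1 = m_1 \oplus m_2 \oplus m_3 \oplus C_1 \equiv 0$, since $m_1 \oplus m_2 \oplus m_3 = C_1$)

As a modulo-2 matrix multiply:

$$
\underbrace{\begin{bmatrix}
1 & 1 & 1 & 0 & 1 & 0 & 0 \\
1 & 1 & 0 & 1 & 0 & 1 & 0 \\
1 & 0 & 1 & 1 & 0 & 0 & 1
\end{bmatrix}}_{\triangleq\, H}
\underbrace{\begin{bmatrix} m_1 \\ m_2 \\ m_3 \\ m_4 \\ C_1 \\ C_2 \\ C_3 \end{bmatrix}}_{\triangleq\, Q}
=
\begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}
\quad \text{if no errors}
$$

HQ = 0 defines legal codewords Q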

Implementation: Single-Error Correction

HQ = 0 defines legal codewords Q

Only 1/8 of all 7-bit words are legal because C1, C2, and C3 are each correct only half the time and $(0.5)^3 = 1/8$

Suppose transmitted Q is legal and received R = Q + E; then HR = HQ + HE, where HQ ≡ 0 and HE ≠ 0

Interpret HE to yield error-free Q from R

Say $HR = [1\ 0\ 1]^T$ ⇒ error in m3. Note that $H_{i3} = [1\ 0\ 1]^T$ (the third column of H)

Can even rearrange the transmitted word so that HE is the binary representation of the error location “L”:

$$
H = \begin{bmatrix}
0 & 0 & 0 & 1 & 1 & 1 & 1 \\
0 & 1 & 1 & 0 & 0 & 1 & 1 \\
1 & 0 & 1 & 0 & 1 & 0 & 1
\end{bmatrix},
\qquad
Q = [\,C_3\ \ C_2\ \ m_4\ \ C_1\ \ m_3\ \ m_2\ \ m_1\,]^T
$$

L = 1 2 3 4 5 6 7 (column index of H)
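The (K = 4, R = 3) scheme can be exercised end to end. The following is a sketch using the check equations and the matrix H given in these slides (block order m1 m2 m3 m4 C1 C2 C3); the function names are illustrative, and the decoder assumes at most one bit error per block.

```python
# Parity-check matrix H for the block order m1 m2 m3 m4 C1 C2 C3
H = [
    [1, 1, 1, 0, 1, 0, 0],
    [1, 1, 0, 1, 0, 1, 0],
    [1, 0, 1, 1, 0, 0, 1],
]


def encode(message):
    """Append C1, C2, C3 to the four message bits."""
    m1, m2, m3, m4 = message
    return [m1, m2, m3, m4, m1 ^ m2 ^ m3, m1 ^ m2 ^ m4, m1 ^ m3 ^ m4]


def syndrome(word):
    """Compute H * word, modulo 2."""
    return [sum(h * b for h, b in zip(row, word)) % 2 for row in H]


def correct(received):
    """Fix a single bit error by matching the syndrome to a column of H."""
    s = syndrome(received)
    if s == [0, 0, 0]:
        return list(received)          # legal codeword: assume no error
    columns = [[row[j] for row in H] for j in range(7)]
    position = columns.index(s)        # every nonzero syndrome is some column
    fixed = list(received)
    fixed[position] ^= 1
    return fixed


if __name__ == "__main__":
    sent = encode([1, 0, 1, 1])
    received = list(sent)
    received[2] ^= 1                   # corrupt m3
    print(syndrome(received))          # matches the third column of H
    print(correct(received) == sent)
```

The seven columns of H are the seven distinct nonzero 3-bit patterns, so each single-bit error produces a unique syndrome.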

Pe Benefits of Channel Coding

Suppose $P_e = 10^{-5}$; then P{error in 4-bit word} = $1 - (1 - 10^{-5})^4 \cong 4 \times 10^{-5}$ (no-coding case), where $(1 - 10^{-5})^4$ = P{no errors}

If we add 3 bits to block (4 + 3 = 7) for single-error correction, and send it in the same time ⇒ E/No per transmitted bit falls to 4/7 of its former value (2.4 dB loss)

$P_e \to 6 \times 10^{-4}$ (per bit; depends on modulation)

p{2 errors in 7 bits @ $6 \times 10^{-4}$} = $p\{\text{no error}\}^5 \cdot p\{\text{error}\}^2 \cdot \binom{7}{2} \cong (6 \times 10^{-4})^2 \cdot 7 \cdot 6/2! \cong 8 \times 10^{-6}$

Compare new p{block error} $8 \times 10^{-6}$ to $4 \times 10^{-5}$ without coding

Coding reduced block errors by a factor of 5 with same transmitter power

Alternatively, reduce power and maintain Pe

Benefits depend on Pe(E/No) relation
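The arithmetic on this slide can be reproduced with binomial probabilities. This sketch assumes independent bit errors and takes the coded per-bit error rate of 6 × 10^-4 directly from the slide (it depends on the modulation and is not derived here); the function names are illustrative.

```python
from math import comb, log10


def block_error_uncoded(pe: float, k: int) -> float:
    """P{at least one error in k uncoded bits}."""
    return 1.0 - (1.0 - pe) ** k


def prob_exactly(n: int, errors: int, pe: float) -> float:
    """Binomial probability of exactly `errors` bit errors in n bits."""
    return comb(n, errors) * pe ** errors * (1.0 - pe) ** (n - errors)


def block_error_coded(pe: float, n: int, correctable: int) -> float:
    """P{more errors than the code can correct in an n-bit block}."""
    return 1.0 - sum(prob_exactly(n, e, pe) for e in range(correctable + 1))


if __name__ == "__main__":
    uncoded = block_error_uncoded(1e-5, 4)
    coded = block_error_coded(6e-4, 7, 1)
    print(f"E/No loss per bit: {10 * log10(7 / 4):.1f} dB")
    print(f"uncoded block error: {uncoded:.1e}")
    print(f"coded block error:   {coded:.1e}")
    print(f"improvement factor:  {uncoded / coded:.1f}")
```

The coded block error is dominated by the two-error term, which is why the slide approximates it with that term alone.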

Benefits of Soft Decisions

Soft decisions can yield ∼2 dB SNR improvement for same Pe

e.g. “Hard decision”: the received voltage v is assigned to one of 2 alternatives (i = 1 or i = 2)

“Soft decision”: v is assigned to one of, say, 8 levels (A B C D E F G H)

Example: Parity bit implies one of n bits was received incorrectly. Choose the one bit for which the decision was least clear.
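The parity example above can be sketched in a few lines. The assumptions here are not from the slide: bits are sent as voltages near +1 (for 1) and –1 (for 0), the hard decision threshold is 0, the last bit is an even-parity bit, and the "least clear" decision is the voltage closest to the threshold.

```python
def soft_parity_correct(voltages):
    """Hard-decide each bit, then, if parity fails, flip the least certain bit."""
    bits = [1 if v > 0 else 0 for v in voltages]
    if sum(bits) % 2 == 0:
        return bits                    # even parity holds: accept as received
    # Parity failed: the bit whose voltage is nearest the threshold is the
    # most likely culprit.
    weakest = min(range(len(voltages)), key=lambda i: abs(voltages[i]))
    bits[weakest] ^= 1
    return bits


if __name__ == "__main__":
    # Sent block 1 0 1 0 (even parity); noise pushed the third bit below zero.
    received = [0.9, -1.1, -0.1, -0.8]
    print(soft_parity_correct(received))
```

With hard decisions alone, the parity bit could only flag this block as illegal; the retained amplitude information is what allows the error to be located.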

Convolutional Codes

Convolutional codes employ overlapping blocks (sliding window)

Example:

[Figure: input at R bits/sec feeds a 3-bit shift register (the constraint length); modulo-2 sums of the register contents produce a 2R bits/sec output]

This is a “rate 1/2, constraint-length-3 convolutional coder”

One advantage: accommodates soft decisions

Here each message bit impacts 3 output bits and therefore impacts decoder decisions impacting 3 or more reconstructed bits, so soft decisions help identify erroneous bits.
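A rate 1/2, constraint-length-3 encoder of this kind can be sketched as below. The slide's figure does not survive in the text, so the tap connections here are an assumption: the widely used pair (1, 1, 1) and (1, 0, 1) stands in for whatever the original figure showed.

```python
def conv_encode(bits, taps=((1, 1, 1), (1, 0, 1))):
    """Rate-1/2 convolutional encoder with a 3-bit shift register.

    `taps` (an assumed choice) selects which register stages feed each
    modulo-2 adder; two adders give two output bits per input bit.
    """
    register = [0, 0, 0]
    output = []
    for bit in bits:
        register = [bit] + register[:-1]   # shift the new bit in
        for tap in taps:
            output.append(sum(t * r for t, r in zip(tap, register)) % 2)
    return output


if __name__ == "__main__":
    print(conv_encode([1, 0, 0]))          # response to a single 1
    print(conv_encode([1, 0, 1, 1]))
```

A single input 1 influences the output for three consecutive shifts, which is the overlap (sliding window) that distinguishes this from a block code.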

Rayleigh Fading Channels

e.g. Fading from deep vigorous multipath

Consider multipath with output signal $z(t) = \sum_{i=1}^{N} (x_i \cos\omega t + y_i \sin\omega t)$ (sum of N phasors, one per path)

Rayleigh fading: xi and yi are independent Gaussian random variables with zero mean (g.r.v.z.m.)

[Figure: phasor sum z in the Re{z}–Im{z} plane; filtered z²(t) versus t, showing a deep fade; probability density P{|z|} versus |z|, with σ marked on the |z| axis]

Variance of Re{z}, Im{z} ≡ σ²

$\langle |z| \rangle = \sigma\sqrt{\pi/2}$

$\langle (|z| - \langle |z| \rangle)^2 \rangle = \sigma^2 (2 - \pi/2) \cong (2\sigma/3)^2 \ne f(N)$

$P\{|z| > z_o\} = e^{-(z_o/\sigma)^2/2}$
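The Rayleigh statistics above can be checked with a short Monte Carlo run. This is a sketch under stated assumptions: each of the N paths contributes independent zero-mean Gaussian components, scaled so that Re{z} and Im{z} each have total variance σ²; the function names and the fixed seed are illustrative.

```python
import math
import random


def simulate_envelope(num_paths, sigma, num_trials, seed=0):
    """Draw |z| for a sum of num_paths random phasors with total variance sigma**2."""
    rng = random.Random(seed)
    per_path = sigma / math.sqrt(num_paths)   # keeps Var(Re z) = sigma**2
    samples = []
    for _ in range(num_trials):
        re = sum(rng.gauss(0.0, per_path) for _ in range(num_paths))
        im = sum(rng.gauss(0.0, per_path) for _ in range(num_paths))
        samples.append(math.hypot(re, im))
    return samples


def exceedance(z0, sigma):
    """P{|z| > z0} = exp(-(z0/sigma)**2 / 2) for a Rayleigh envelope."""
    return math.exp(-0.5 * (z0 / sigma) ** 2)


if __name__ == "__main__":
    sigma = 1.0
    z = simulate_envelope(num_paths=8, sigma=sigma, num_trials=20000)
    mean = sum(z) / len(z)
    var = sum((v - mean) ** 2 for v in z) / len(z)
    frac = sum(v > 2.0 for v in z) / len(z)
    print(f"mean |z|  {mean:.3f}  vs  {sigma * math.sqrt(math.pi / 2):.3f}")
    print(f"var  |z|  {var:.3f}  vs  {sigma**2 * (2 - math.pi / 2):.3f}")
    print(f"P(|z|>2)  {frac:.3f}  vs  {exceedance(2.0, sigma):.3f}")
```

Changing `num_paths` while holding σ fixed leaves the statistics unchanged, which is the sense in which the spread of |z| is not a function of N.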

Effect of Fading on Pe(Eb/No)

Pe curve increases and flattens when there is fading

[Figure: Pe versus Eb/No (dB) without fading, the probability density p{Eb/No} produced by fading, and the new Pe{Eb/No} relation after fading]

[Figure: fading history Eb(t) versus t; fades increase Pe(t)]

Deep fades produce bursts of errors (error clusters)
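The flattening can be illustrated by averaging a no-fading Pe curve over the fading distribution. The sketch below makes assumptions beyond the slide: the modulation is BPSK with Pe = Q(√(2Eb/No)), and the instantaneous Eb/No is exponentially distributed about its mean (which is what a Rayleigh-distributed amplitude implies). The closed form used for comparison is the standard textbook result for this case, not a formula from the lecture.

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def pe_bpsk(ebno: float) -> float:
    """No-fading BPSK bit error probability for a linear Eb/No."""
    return q_function(math.sqrt(2.0 * ebno))


def pe_bpsk_rayleigh(mean_ebno: float, steps: int = 4000) -> float:
    """Average pe_bpsk over an exponential Eb/No density (trapezoid rule).

    Integrates in u = sqrt(Eb/No), which keeps the integrand smooth at zero.
    """
    u_max = math.sqrt(40.0 * mean_ebno)
    h = u_max / steps
    total = 0.0
    for i in range(steps + 1):
        u = i * h
        density = math.exp(-u * u / mean_ebno) * 2.0 * u / mean_ebno
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * q_function(math.sqrt(2.0) * u) * density
    return total * h


def pe_bpsk_rayleigh_closed_form(mean_ebno: float) -> float:
    """Standard closed form for BPSK averaged over Rayleigh fading."""
    return 0.5 * (1.0 - math.sqrt(mean_ebno / (1.0 + mean_ebno)))


if __name__ == "__main__":
    for db in (0, 10, 20, 30):
        g = 10.0 ** (db / 10.0)
        print(f"{db:2d} dB  no fading {pe_bpsk(g):.1e}  fading {pe_bpsk_rayleigh(g):.1e}")
```

Under these assumptions the faded Pe falls only about a factor of 10 per 10 dB at high Eb/No, while the no-fading curve drops far faster.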

Remedies for Error Bursts

1. Diversity (space, frequency, polarization): ≥ 2 independent paths

2. General error-correcting codes

3. Same, plus interleaving: the burst hits fewer bits per block (ideally, an error burst hits only one bit per block)

4. Reed-Solomon codes (tolerate adjacent errors better than random ones)

e.g. multivalue symbols A (say 4 bits each, 16 possibilities), so then block error-correct the symbols A: AAA…A AAA…A A…
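Remedy 3 can be illustrated with a simple block interleaver. This is a sketch of one common arrangement, assumed here rather than taken from the slide: bits from several code blocks are transmitted round-robin (bit 1 of every block, then bit 2 of every block, and so on), so that a burst no longer than the number of blocks touches each block at most once.

```python
def interleave(bits, block_len, num_blocks):
    """Reorder num_blocks consecutive blocks so their bits are sent round-robin."""
    assert len(bits) == block_len * num_blocks
    return [bits[b * block_len + i]
            for i in range(block_len)
            for b in range(num_blocks)]


def deinterleave(bits, block_len, num_blocks):
    """Undo interleave(), restoring the original block order."""
    restored = [0] * (block_len * num_blocks)
    k = 0
    for i in range(block_len):
        for b in range(num_blocks):
            restored[b * block_len + i] = bits[k]
            k += 1
    return restored


if __name__ == "__main__":
    block_len, num_blocks = 7, 4
    errors_on_channel = [0] * (block_len * num_blocks)
    errors_on_channel[6:10] = [1, 1, 1, 1]       # a burst of 4 adjacent errors
    errors_by_block = deinterleave(errors_on_channel, block_len, num_blocks)
    for b in range(num_blocks):
        block = errors_by_block[b * block_len:(b + 1) * block_len]
        print(f"block {b}: {sum(block)} error(s)")
```

With 7-bit blocks protected by a single-error-correcting code, each block in this example receives exactly one error and can be repaired, at the cost of the extra delay needed to collect all the blocks.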

Remedies for Error Bursts

Fading flattens Pe(Eb/No) curve, so potential coding gain can exceed 10 dB sometimes

[Figure: Pe, 10^-2 down to 10^-8, versus Eb/No (dB), showing the flatter Pe(Eb/No) for the fading channel and the new Pe for the coded fading channel; annotations mark the increase in Eb required to accommodate coding, the reduction in Pe using coding, and the resulting coding gain, with a 10 dB scale bar]

Note: Coding gain greater for flatter Pe(Eb/No)