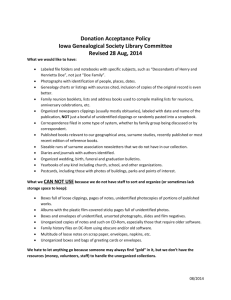

W N M

advertisement