Processes and Threads


TDDI12:

Operating Systems, Real-time and

Operating Systems

Agenda

[SGG7] Chapter 3 and 4

Introduce the notion of process and thread
Describe the various features of processes
Describe interprocess communication

• Process
+ Process Concept
+ Process Scheduling
+ Operations on Processes
+ Cooperating Processes
+ Interprocess Communication
• Threads

Copyright Notice: The lecture notes are mainly based on Silberschatz’s, Galvin’s and Gagne’s book (“Operating System Concepts”, 7th ed., Wiley, 2005). No part of the lecture notes may be reproduced in any form, due to the copyrights reserved by Addison-Wesley. These lecture notes should only be used for internal teaching purposes at Linköping University.

Andrzej Bednarski, IDA

Linköpings universitet, 2006

Process Concept


Silberschatz, Galvin and Gagne ©2005


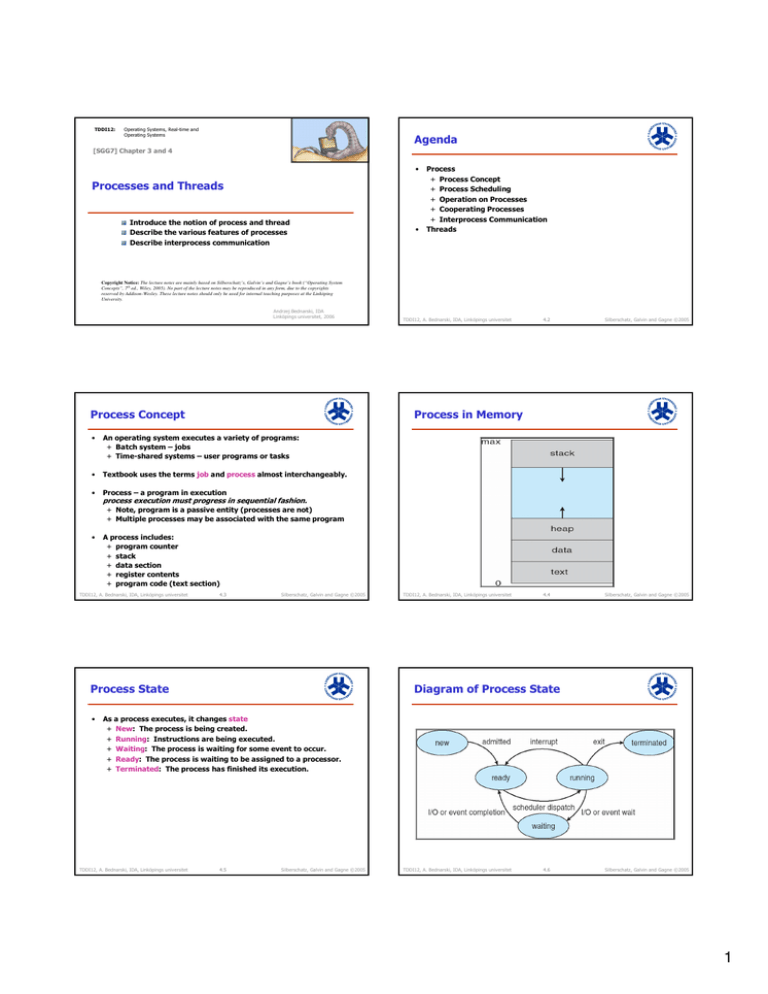

Process in Memory

• An operating system executes a variety of programs:
+ Batch system – jobs
+ Time-shared systems – user programs or tasks
• Textbook uses the terms job and process almost interchangeably.

TDDI12, A. Bednarski, IDA, Linköpings universitet

• Process – a program in execution; process execution must progress in sequential fashion.
+ Note: a program is a passive entity (a process is not)
+ Multiple processes may be associated with the same program
• A process includes:
+ program counter
+ stack
+ data section
+ register contents
+ program code (text section)


Process State

• As a process executes, it changes state:
+ New: The process is being created.
+ Running: Instructions are being executed.
+ Waiting: The process is waiting for some event to occur.
+ Ready: The process is waiting to be assigned to a processor.
+ Terminated: The process has finished its execution.

Diagram of Process State


Process Control Block (PCB)

Information associated with each process.

• Process state
+ New, ready, running, waiting, …
• Program counter
+ Address of the next instruction
• CPU registers
+ Accumulators, index registers, stack pointers, general-purpose registers
• CPU scheduling information
+ Process priorities, pointers to scheduling queues etc.

Process Control Block PCB (Cont.)

• Memory management information
+ Base and limit registers
• Accounting information
• I/O status information
+ List of I/O devices allocated to process, open files etc.
• PCB is sometimes denoted as task control block.


CPU Switch From Process to Process

Process Scheduling Queues

• Job queue – set of all processes in the system
• Ready queue – set of all processes residing in main memory, ready and waiting to execute
• Device queues – set of processes waiting for an I/O device
• Processes migrate among the various queues

Ready Queue And Various I/O Device Queues


Representation of Process Scheduling


Schedulers

• Long-term scheduler (or job scheduler) – selects which processes should be brought into the ready queue.
• Short-term scheduler (or CPU scheduler) – selects which process should be executed next and allocates CPU.


Schedulers (Cont.)

• Short-term scheduler is invoked very frequently (milliseconds) ⇒ (must be fast).
• Long-term scheduler is invoked very infrequently (sec, min) ⇒ (may be slow).
• The long-term scheduler controls the degree of multiprogramming.
• Processes can be described as either:
+ I/O-bound process – spends more time doing I/O than computations; many short CPU bursts.
+ CPU-bound process – spends more time doing computations; few very long CPU bursts.

Best performance: Balance between I/O-bound and CPU-bound processes

Addition of Medium Term Scheduling

• Controls swapping
+ Removes processes from memory
- Decreases degree of multiprogramming (swap out)
+ Reloads processes later (swap in)


Context Switch

• When CPU switches to another process, the system must
+ save the state of the old process and
+ load the saved state for the new process.
• Context-switch time is overhead
+ the system does no useful work while switching
• Time dependent on hardware support (from 1µs to 1ms).
+ E.g., consider an architecture having multiple sets of registers.

Process Creation

• Parent process creates child processes, which in turn create other processes, forming a tree of processes (system call).
• Resource sharing (goal: preventing system overloading)
+ Parent and children share all resources.
+ Children share subset of parent’s resources.
+ Parent and child share no resources.
• Execution
+ Parent and children execute concurrently.
+ Parent waits until children terminate.


Process Creation (Cont.)

• Address space
+ Child duplicate of parent
+ Child has a program loaded into it
• UNIX examples
+ pid - process identifier
+ fork - system call creates new process, holding a copy of the memory space of the parent process
+ exec - system call used after a fork to replace the process’ memory space with a new program
• Windows NT/XP support two models
+ Duplication like fork
+ Creation of new process with address to new program to be loaded


C Program Forking Separate Process

    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/types.h>
    #include <sys/wait.h>
    #include <unistd.h>

    int main(void)
    {
        pid_t pid;

        /* fork another process */
        pid = fork();
        if (pid < 0) { /* error occurred */
            fprintf(stderr, "Fork Failed!\n");
            exit(-1);
        } else if (pid == 0) { /* child process */
            execlp("/bin/ls", "ls", (char *)NULL);
            /* reached only if execlp itself fails */
            perror("execlp");
            exit(1);
        } else { /* parent process */
            /* parent will wait for the child to complete */
            wait(NULL);
            printf("Child Complete\n");
            exit(0);
        }
        return 0;
    }

A tree of processes on a typical UNIX system (Solaris OS)

Process Termination

• Process executes last statement (exit) and asks the operating system to
+ Output data from child to parent (parent issues a wait).
+ Process’ resources are deallocated (released) by operating system.
• Parent may terminate execution of children processes (abort).
+ Child has exceeded allocated resources
+ Task assigned to child is no longer required
+ Parent is exiting.
- Operating system does not allow child to continue if its parent terminates.
- All children terminated - cascading termination
- Note, this depends on the type of coupling that is supported.

Cooperating Processes

• Independent process cannot affect or be affected by the execution of another process (no data sharing).
• Cooperating process can affect or be affected by the execution of another process
• Advantages of process cooperation
+ Information sharing
- Also enables fault-tolerance, e.g., N-version redundancy
+ Computation speed-up
+ Modularity
+ Convenience


Interprocess Communication (IPC)

Producer-Consumer Problem

• Paradigm for cooperating processes: a producer process produces information that is consumed by a consumer process.
+ unbounded-buffer places no practical limit on the size of the buffer.
+ bounded-buffer assumes that there is a fixed buffer size.
• Variables in, out are initialized to 0
+ n is the number of slots in buffer
+ in points to the next free position in the buffer
+ out points to the first full position in the buffer
+ Buffer is empty when in = out
+ Buffer is full when (in + 1) mod n = out
- Buffer is a circular array

Communications Models

• IPC is a mechanism for processes to communicate and to synchronize their actions.
• Message system – processes communicate with each other without resorting to shared variables.
• IPC facility provides two operations:
+ send(message) – message size fixed or variable
+ receive(message)
• If P and Q wish to communicate, they need to:
+ establish a communication link between them
+ exchange messages via send/receive
• Implementation of communication link
+ physical (e.g., shared memory, hardware bus)
+ logical (e.g., logical properties)

Implementation Questions

• How are links established?
• Can a link be associated with more than two processes?
• How many links can there be between every pair of communicating processes?
• What is the capacity of a link?
• Is the size of a message that the link can accommodate fixed or variable?
• Is a link unidirectional or bi-directional?


Direct Communication

• Processes must name each other explicitly:
+ send(P, message) – send a message to process P
+ receive(Q, message) – receive a message from process Q
• Properties of communication link
+ Links are established automatically
+ A link is associated with exactly one pair of communicating processes
+ Between each pair there exists exactly one link
+ The link may be unidirectional, but is usually bi-directional

Indirect Communication

• Messages are sent to and received from mailboxes (also referred to as ports)
+ Each mailbox has a unique id
+ Processes can communicate only if they share a mailbox
• Properties of communication link
+ Link established only if processes share a common mailbox
+ A link may be associated with many processes
+ Each pair of processes may share several communication links
+ Link may be unidirectional or bi-directional (usually)


Indirect Communication (Cont.)

• Operations
+ create a new mailbox
+ send and receive messages through mailbox
+ destroy a mailbox
• Primitives are defined as:
+ send(A, message) – send a message to mailbox A
+ receive(A, message) – receive a message from mailbox A
• Mailbox sharing
+ P1, P2, and P3 share mailbox A
+ P1 sends; P2 and P3 receive
+ Who gets the message?
• Solutions
+ Allow a link to be associated with at most two processes
+ Allow only one process at a time to execute a receive operation
+ Allow the system to select arbitrarily the receiver. Sender is notified who the receiver was.


Synchronization

• Communication: send/receive primitives (different implementations)
+ Blocking/Synchronous
- send: sender blocked until message received (phone)
- receive: receiver blocks until a message is available
+ Nonblocking/Asynchronous
- send: sends and resumes (SMS)
- receive: if there is a message, retrieve it, otherwise empty message (classical mail box)

Buffering

• Temporary queues
• Queue of messages attached to the link; implemented in one of three ways.
1. Zero capacity – 0 messages. Sender must wait for receiver (rendez-vous). “message system with no buffering”
2. Bounded capacity – finite length of n messages. Sender must wait if link full. “automatic buffering”
3. Unbounded capacity – infinite length. Sender never waits. “automatic buffering”


Client-Server Communication

• Sockets
• Remote Procedure Calls
• Remote Method Invocation (Java)

Sockets

• A socket is defined as an endpoint for communication
• Concatenation of IP address and port
• The socket 146.86.5.20:1625 refers to port 1625 on host 146.86.5.20
• Communication takes place between a pair of sockets


Remote Procedure Calls

• Remote procedure call (RPC) abstracts procedure calls between processes on networked systems.
• stubs – client-side proxy for the actual procedure on the server.
• The client-side stub locates the server and marshals the parameters.
• The server-side stub receives this message, unpacks the marshalled parameters, and performs the procedure on the server.

Marshalling Parameters


Execution of RPC


Remote Method Invocation

• Remote Method Invocation (RMI) is a Java mechanism similar to RPCs.
• RMI allows a Java program on one virtual machine to invoke a method on a remote object (on another VM).

Threads


Single and Multithreaded Processes

• A thread (or lightweight process) is a basic unit of CPU utilization; it consists of:
+ program counter
+ register set
+ stack space
• A thread shares with its peer threads its:
+ code section
+ data section
+ operating-system resources
collectively known as a task.
• A traditional or heavyweight process is equal to a task with one thread
• Advantage: Use of threads minimizes context switches without losing parallelism.


User/Kernel Threads

• User threads
+ Thread management done by user-level threads library (POSIX Pthreads, Win32 threads, Java threads)
• Kernel threads
+ Supported by the kernel (Windows XP/2000, Solaris, Linux, Tru64 UNIX, Mac OS X)

Multithreading Models

• One-to-one
• Many-to-one
• Many-to-many


Thread Cancellation

• Terminating a thread before it has finished
• Two general approaches:
+ Asynchronous cancellation terminates the target thread immediately
+ Deferred cancellation allows the target thread to periodically check if it should be cancelled

Signal Handling

• Signals are used in UNIX systems to notify a process that a particular event has occurred
• A signal handler is used to process signals
1. Signal is generated by particular event
2. Signal is delivered to a process
3. Signal is handled
• Options:
+ Deliver the signal to the thread to which the signal applies
+ Deliver the signal to every thread in the process
+ Deliver the signal to certain threads in the process
+ Assign a specific thread to receive all signals for the process


Thread Pools

• Create a number of threads in a pool where they await work
• Advantages:
+ Usually slightly faster to serve a request with an existing thread than to create a new thread
+ Bounded number of threads in the application

Recommended Reading and Exercises

• Reading
+ Chapter 3 and 4 [SGG7]
  Chapter 4 and 5 (6th edition)
  Chapter 4 (5th edition)
+ Optional but recommended:
  Section 3.5 and 3.6 (RPC, Client/Server, Sockets, RMI, Mach OS)
  Section 4.3 and 4.5
• Exercises:
+ 3.1 to 3.6
+ 3.7-3.11 (implementation)
- Project: UNIX shell
+ 4.1 to 4.8
+ 4.8-4.12 (implementation)
- Project: Matrix multiplication using threads
