THE EFFECTIVENESS OF A CLASSROOM RESPONSE SYSTEM AS A METHOD

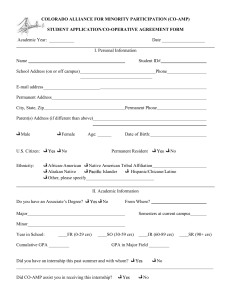
