D I F M

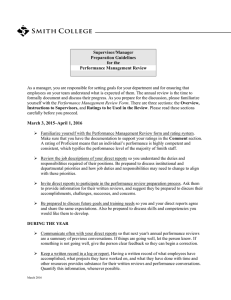

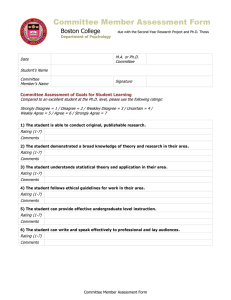
