MIT Manufacturing Lectures Stanley B. Gershwin

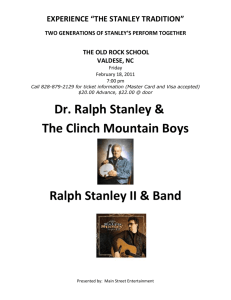

advertisement

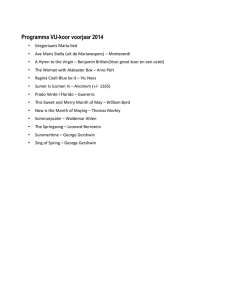

MIT 2.852 Manufacturing Systems Analysis Lectures 18–19 Loops Stanley B. Gershwin Spring, 2007 c 2007 Stanley B. Gershwin. Copyright � Problem Statement B1 M2 B2 M3 B3 M1 M4 B6 M6 B5 M5 B4 • Finite buffers (0 � ni(t) � Ni). • Single closed loop – fixed population ( � i ni(t) = N ). • Focus is on the Buzacott model (deterministic processing time; geometric up and down times). Repair probability = ri; failure probability = pi. Many results are true for more general loops. • Goal: calculate production rate and inventory distribution. c 2007 Stanley B. Gershwin. Copyright � 2 Problem Statement Motivation • Limited pallets/fixtures. • CONWIP (or hybrid) control systems. • Extension to more complex systems and policies. c 2007 Stanley B. Gershwin. Copyright � 3 Two-Machine Loops Special Case Refer to MSE Section 5.6, page 205. N N1 r1 p1 B1 M1 M2 N2 r2 p2 =� r1 p1 N* M1 B M2 r2 p2 B2 P loop(r1, p1, r2, p2, N1, N2) = P line(r1, p1, r2, p2, N �) where N � = min(n, N1) − max(0, n − N2) c 2007 Stanley B. Gershwin. Copyright � 4 Two-Machine Loops Special Case .14 .01 .1 .01 20 N2=10 N2=15 N2=20 N2=30 N2=40 0.885 0.88 production rate r1 p1 r2 p2 N1 0.89 0.875 0.87 0.865 0.86 0 c 2007 Stanley B. Gershwin. Copyright � 10 20 30 population 40 50 60 5 Expected population method • Treat the loop as a line in which the first machine and the last are the same. • In the resulting decomposition, one equation is missing. • The missing equation is replaced by the expected � population constraint ( i n̄i = N ). c 2007 Stanley B. Gershwin. Copyright � 6 Expected population method Evaluate i, i − 1, i + 1 modulo k (ie, replace 0 by k and replace k + 1 by 1). ps(i − 1)ru(i) ru(i) = ru(i − 1)X(i) + ri(1 − X(i)); X(i) = pu(i)E(i − 1) � � 1 1 pd(i − 1) + −2− , i = 1, ..., k pu(i) = ru(i) E(i − 1) ei rd(i − 1) pb(i + 1)rd(i) rd(i) = rd(i + 1)Y (i + 1) + ri+1(1 − Y (i + 1)); Y (i + 1) = . pd(i)E(i + 1) � � 1 1 pu(i + 1) + −2− , i = k, ..., 1 pd(i) = rd(i) E(i + 1) ei+1 ru(i + 1) This is 4k equations in 4k unknowns. But only 4k − 1 of them are independent because the derivation uses E(i) = E(i + 1) for i = 1, ..., k. The first k − 1 are E(1) = E(2), E(2) = E(3), ..., E(k − 1) = E(k). But this implies E(k) = E(1), which is the same as the kth equation. c 2007 Stanley B. Gershwin. Copyright � 7 Expected population method Therefore, we need one more equation. We can use � n̄i = N i If we guess pu(1) (say), we can evaluate � TOTAL n̄ = i n̄i as a function of pu(1). We search for the value of pu(1) such that n̄ TOTAL = N . c 2007 Stanley B. Gershwin. Copyright � 8 Expected population method Behavior: • Accuracy good for large systems, not so good for small systems. • Accuracy good for intermediate-size populations; not so good for very small or very large populations. c 2007 Stanley B. Gershwin. Copyright � 9 Expected population method • Hypothesis: The reason for the accuracy behavior of the population constraint method is the correlation in the buffers. � The number of parts in the system is actually constant . � The expected population method treats the population as random, with a specified mean. � If we know that a buffer is almost full, we know that there are fewer parts in the rest of the network, so probabilities of blockage are reduced and probabilities of starvation are increased. (Similarly if it is almost empty.) � Suppose the population is smaller than the smallest buffer. Then there will be no blockage. The expected population method does not take this into account. c 2007 Stanley B. Gershwin. Copyright � 10 Loop Behavior To construct a method that deals with the invariant (rather than the expected value of the invariant), we investigate how buffer levels are related to one another and to the starvation and blocking of machines. In a line, every downstream machine could block a given machine, and every upstream machine could starve it. In a loop, blocking and starvation are more complicated. c 2007 Stanley B. Gershwin. Copyright � 11 Ranges Loop Behavior B1 M2 B2 M3 B3 M1 M4 B6 M6 B5 M5 B4 • The range of blocking of a machine is the set of all machines that could block it if they stayed down for a long enough time. • The range of starvation of a machine is the set of all machines that could starve it if they stayed down for a long enough time. c 2007 Stanley B. Gershwin. Copyright � 12 Ranges Loop Behavior Range of Blocking 10 B1 10 M2 B2 10 M3 B3 M1 M4 7 B6 0 0 M6 B5 M5 B4 • All buffer sizes are 10. • Population is 37. • If M4 stays down for a long time, it will block M1. • Therefore M4 is in the range of blocking of M1. • Similarly, M2 and M3 are in the range of blocking of M1. c 2007 Stanley B. Gershwin. Copyright � 13 Ranges Loop Behavior Range of starvation 10 7 B1 M2 B2 10 M3 B3 M1 M4 0 B6 10 0 M6 B5 M5 B4 • If M5 stays down for a long time, it will starve M1. • Therefore M5 is in the range of starvation of M1. • Similarly, M6 is in the range of starvation of M1. c 2007 Stanley B. Gershwin. Copyright � 14 Loop Behavior Ranges Line • The range of blocking of a machine in a line is the entire downstream part of the line. • The range of starvation of a machine in a line is the entire upstream part of the line. c 2007 Stanley B. Gershwin. Copyright � 15 Ranges Loop Behavior Line In an acyclic network, if Mj is downstream of Mi, then the range of blocking of Mj is a subset of the range of blocking of Mi. Mi Mj Range of blocking of M j Range of blocking of M i Similarly for the range of starvation. c 2007 Stanley B. Gershwin. Copyright � 16 Ranges Loop Behavior Line In an acyclic network, if Mj is downstream of Mi, any real machine whose failure could cause Md(j) to appear to be down could also cause Md(i) to appear to be down. Mi Bi M j Md(i) Md(j) Consequently, we can express rd(i) as a function of the parameters of L(j). This is not possible in a network with a loop because some machine that blocks Mj does not block Mi. c 2007 Stanley B. Gershwin. Copyright � 17 Ranges Loop Behavior Difficulty for decomposition L(1) Range of blocking of M6 B1 M2 B2 M3 M u(1) B(1) B3 M1 Range of blocking of M1 M4 B1 B6 M6 B5 M5 d B(6) M2 B2 M3 B3 B4 Range of starvation of M1 M (6) d M (1) M4 M1 u M (6) B6 L(6) M6 B5 M5 B4 Range of starvation of M2 Ranges of blocking and starvation of adjacent machines are not subsets or supersets of one another in a loop. c 2007 Stanley B. Gershwin. Copyright � 18 Ranges Loop Behavior Difficulty for decomposition Range of blocking of M1 Range of blocking of M2 10 B1 10 M2 B2 10 M3 B3 M1 M4 7 B6 0 0 M6 B5 M5 B4 M5 can block M2. Therefore the parameters of M5 should directly affect the parameters of Md(1) in a decomposition. However, M5 cannot block M1 so the parameters of M5 should not directly affect the parameters of Md(6). Therefore, the parameters of Md(6) cannot be functions of the parameters of Md(1). c 2007 Stanley B. Gershwin. Copyright � 19 Multiple Failure Mode Line Decomposition • To deal with this issue, we introduce a new decomposition. • In this decomposition, we do not create failures of virtual machines that are mixtures of failures of real machines. • Instead, we allow the virtual machines to have distinct failure modes, each one corresponding to the failure mode of a real machine. c 2007 Stanley B. Gershwin. Copyright � 20 Multiple Failure Mode Line Decomposition 1,2 3 4 5,6,7 1,2, 3,4 5,6,7, 8,9,10 8 9,10 • There is an observer in each buffer who is told that he is actually in the buffer of a two-machine line. c 2007 Stanley B. Gershwin. Copyright � 21 Multiple Failure Mode Line Decomposition 1,2 3 4 5,6,7 1,2, 3,4 5,6,7, 8,9,10 8 9,10 • Each machine in the original line may and in the two-machine lines must have multiple failure modes. • For each failure mode downstream of a given buffer, there is a corresponding mode in the downstream machine of its two-machine line. • Similarly for upstream modes. c 2007 Stanley B. Gershwin. Copyright � 22 Multiple Failure Mode Line Decomposition 1,2 3 4 5,6,7 1,2, 3,4 5,6,7, 8,9,10 8 9,10 • The downstream failure modes appear to the observer after propagation through blockage . • The upstream failure modes appear to the observer after propagation through starvation . • The two-machine lines are more complex that in earlier decompositions but the decomposition equations are simpler. c 2007 Stanley B. Gershwin. Copyright � 23 Multiple Failure Mode Line Decomposition Two-Machine Line up down Form a Markov chain and find the steady-state probability distribution. The solution technique is very similar to that of the two-machine-state model. Determine the production rate, probability of starvation and probability of blocking in each down mode, average inventory. c 2007 Stanley B. Gershwin. Copyright � 24 Multiple Failure Mode Line Decomposition Line Decomposition • A set of decomposition equations are formulated. • They are solved by a Dallery-David-Xie-like algorithm. • The results are a little more accurate than earlier methods, especially for machines with very different failures. c 2007 Stanley B. Gershwin. Copyright � 25 Multiple Failure Mode Line Decomposition 1,2 3 Line Decomposition 4 5,6,7 1,2, 3,4 5,6,7, 8,9,10 8 9,10 • In the upstream machine of the building block, failure mode 4 is a local mode; modes 1, 2, and 3 are remote modes. Modes 5, 6, and 7 are local modes of the downstream machine; 8, 9, and 10 are remote modes. • For every mode, the repair probability is the same as the repair probability of the corresponding mode in the real line. • Local modes: the probability of failure into a local mode is the same as the probability of failure in that mode of the real machine. c 2007 Stanley B. Gershwin. Copyright � 26 Multiple Failure Mode Line Decomposition 1,2 3 Line Decomposition 4 5,6,7 1,2, 3,4 5,6,7, 8,9,10 8 9,10 • Remote modes: i is the building block number; j and f are the machine number and mode number of a remote failure mode. Then Ps,jf (i − 1) Pb,jf (i) u d pjf (i) = rjf ; pjf (i − 1) = rjf E(i) E(i − 1) where pu jf (i) is the probability of failure of the upstream machine into mode jf ; Ps,jf (i − 1) is the probability of starvation of line i − 1 due to mode jf ; rjf is the probability of repair of the upstream machine from mode jf ; etc. • Also, E(i − 1) = E(i). d (i), p • pu jf jf (i) are used to evaluate E(i), Ps,jf (i),Pb,jf (i) from two-machine line i in an iterative method. c 2007 Stanley B. Gershwin. Copyright � 27 Multiple Failure Mode Line Decomposition Line Decomposition Consider pu jf (i) = Ps,jf (i − 1) rjf ; pdjf (i − 1) = Pb,jf (i) rjf E(i) E(i − 1) In a line, jf refers to all modes of all upstream machines in the first equation; and all modes of all downstream machines in the second equation. We can interpret the upstream machines as the range of starvation and the downstream machines as the range of blockage of the line. c 2007 Stanley B. Gershwin. Copyright � 28 Multiple Failure Mode Line Decomposition B1 Extension to Loops M2 B2 M3 B3 M1 M4 B6 M6 B5 M5 B4 • Use the multiple-mode decomposition, but adjust the ranges of blocking and starvation accordingly. • However, this does not take into account the local information that the observer has. c 2007 Stanley B. Gershwin. Copyright � 29 Thresholds 10 B1 10 10 M2 B2 M3 B3 M4 M1 5 B6 2 M6 B5 0 M5 B4 5 d M (6) B6 u M (6) • The B6 observer knows how many parts there are in his buffer. • If there are 5, he knows that the modes he sees in M d(6) could be those corresponding to the modes of M1, M2, M3, and M4. c 2007 Stanley B. Gershwin. Copyright � 30 Thresholds 10 B1 9 10 M2 B2 M3 B3 M1 M4 8 B6 0 M6 B5 0 M5 B4 8 d M (6) B6 u M (6) • However, if there are 8, he knows that the modes he sees in M d(6) could only be those corresponding to the modes of M1, M2, and M3; and not those of M4. • The transition probabilities of the two-machine line therefore depend on whether the buffer level is less than 7 or not. c 2007 Stanley B. Gershwin. Copyright � 31 Thresholds • This would require a new model of a two-machine line. • The same issue arises for starvation. • In general, there can be more than one threshold in a buffer. • Consequently, this makes the two-machine line very complicated. c 2007 Stanley B. Gershwin. Copyright � 32 Transformation • Purpose: to avoid the complexities caused by thresholds. • Idea: Wherever there is a threshold in a buffer, break up the buffer into smaller buffers separated by perfectly reliable machines. c 2007 Stanley B. Gershwin. Copyright � 33 Transformation buffer size 20 3 B1 18 13 M2 B2 1 • When M2 fails for a long time, B1 fills up, and there is one part in B4. Therefore there is a threshold of 1 in B4. M3 M1 1 15 B4 threshold M4 population = 21 c 2007 Stanley B. Gershwin. Copyright � B3 • When M1 fails for a long time, B4 and B3 fill up, and there is one part in B2. Therefore there is a threshold of 1 in B2. 5 • When M3 fails for a long time, B2 fills up, and there are 18 parts in B1. Therefore there is a threshold of 18 in B1. 34 Transformation buffer size 20 3 B1 18 13 M2 B2 1 M3 M1 • Note: B1 has two thresholds and B3 has none. 1 15 B4 threshold M4 population = 21 c 2007 Stanley B. Gershwin. Copyright � B3 • When M4 fails for a long time, B3 and B2 fill up, and there are 13 parts in B1. Therefore there is a threshold of 13 in B1 . 5 • Note: The number of thresh­ olds equals the number of ma­ chines. 35 Transformation 20 B1 M2 B2 3 M*4 15 5 B4 M4 B3 M2 M3 M3 M1 M*1 M*3 M1 M*2 M4 • Break up each buffer into a sequence of buffers of size 1 and reliable machines. • Count backwards from each real machine the number of buffers equal to the population. • Identify the reliable machine that the count ends at. c 2007 Stanley B. Gershwin. Copyright � 36 Transformation M1 2 M*3 5 M*4 13 M2 B1 1 M*2 14 B4 M4 2 5 B3 M3 1 M*1 B2 • Collapse all the sequences of unmarked reliable machines and buffers of size 1 into larger buffers. c 2007 Stanley B. Gershwin. Copyright � 37 Transformation • Ideally, this would be equivalent to the original system. • However, the reliable machines cause a delay, so transformation is not exact for the discrete/ deterministic case. • This transformation is exact for continuous-material machines. c 2007 Stanley B. Gershwin. Copyright � 38 Transformation Small populations • If the population is smaller than the largest buffer, at least one machine will never be blocked. • However, that violates the assumptions of the two-machine lines. • We can reduce the sizes of the larger buffers so that no buffer is larger than the population. This does not change performance. c 2007 Stanley B. Gershwin. Copyright � 39 Numerical Results Accuracy • Many cases were compared with simulation: � Three-machine cases: all throughput errors under 1%; buffer level errors averaged 3%, but were as high as 10%. � Six-machine cases: mean throughput error 1.1% with a maximum of 2.7%; average buffer level error 5% with a maximum of 21%. � Ten-machine cases: mean throughput error 1.4% with a maximum of 4%; average buffer level error 6% with a maximum of 44%. c 2007 Stanley B. Gershwin. Copyright � 40 Numerical Results Other algorithm attributes • Convergence reliability: almost always. • Speed: execution time increases rapidly with loop size. • Maximum size system: 18 machines. Memory requirements grow rapidly also. c 2007 Stanley B. Gershwin. Copyright � 41 The Batman Effect Numerical Results 0.815 • Error is very small, but there are apparent discontinuities. Decomposition Simulation 0.81 0.805 Throughput 0.8 0.795 0.79 0.785 0.78 0.775 0.77 0 5 10 15 Population 20 c 2007 Stanley B. Gershwin. Copyright � 25 30 • This is because we cannot deal with buffers of size 1, and because we do not need to introduce reliable machines in cases where there would be no thresholds. 42 Behavior Numerical Results B1 * M2 B2 M1 M3 B4 M4 B3 • All buffer sizes 10. Population 15. Identical machines except for M1. • Observe average buffer levels and production rate as a function of r1. c 2007 Stanley B. Gershwin. Copyright � 43 Behavior Numerical Results 0.9 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 • Production rate vs. r1. • Usual saturating graph. c 2007 Stanley B. Gershwin. Copyright � 44 Behavior Numerical Results 10 b1 average b2 average b3 average b4 average 9 8 B1 * M2 7 B2 6 5 M1 M3 4 3 2 B4 M4 B3 1 0 0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1 • When r1 is small, M1 is a bottleneck, so B4 holds 10 parts, B3 holds 5 parts, and the others are empty. • As r1 increases, material is more evenly distributed. When r1 = 0.1, the network is totally symmetrical. c 2007 Stanley B. Gershwin. Copyright � 45 Applications • Design system with pallets/fixtures. The fixtures and the space to hold them in are expensive. • Design system with tokens/kanbans (CONWIP). By limiting population, we reduce production rate, but we also reduce inventory. c 2007 Stanley B. Gershwin. Copyright � 46 MIT OpenCourseWare http://ocw.mit.edu 2.852 Manufacturing Systems Analysis Spring 2010 For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.