Pursuit and an evolutionary game

Downloaded from rspa.royalsocietypublishing.org on 4 May 2009


Ermin Wei, Eric W. Justh and P.S. Krishnaprasad

Proc. R. Soc. A (2009) 465, 1539–1559; first published online 25 February 2009; doi:10.1098/rspa.2008.0480


This journal is © 2009 The Royal Society


By Ermin Wei⁴, Eric W. Justh³ and P. S. Krishnaprasad¹,²,*

¹Institute for Systems Research, and ²Department of Electrical and Computer Engineering, University of Maryland, College Park, MD 20742, USA

³Naval Research Laboratory, Washington, DC 20375, USA

⁴Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology, Cambridge, MA 02139, USA

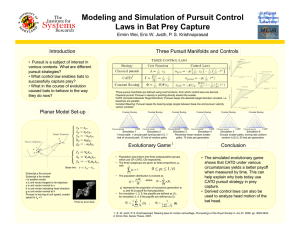

Pursuit is a familiar mechanical activity that humans and animals engage in: athletes chasing balls, predators seeking prey and insects manoeuvring in aerial territorial battles. In this paper, we discuss and compare strategies for pursuit, the occurrence in nature of a strategy known as motion camouflage, and some evolutionary arguments to support claims of prevalence of this strategy, as opposed to alternatives. We discuss feedback laws for a pursuer to realize motion camouflage, as well as two alternative strategies. We then set up a discrete-time evolutionary game to model competition among these strategies. This leads to dynamics on the probability simplex in three dimensions, which capture the mean-field aspects of the evolutionary game. The analysis of these dynamics as an ascent equation solving a linear programming problem is consistent with observed behaviour in Monte Carlo experiments, and lends support to an evolutionary basis for the prevalence of motion camouflage.

Keywords: pursuit; natural frames; motion camouflage; evolutionary game; replicator dynamics; geometry of simplex

1. Introduction

Pursuit phenomena in nature have a vital role in the survival of species. In addition to prey-capture and mating behaviour, pursuit phenomena appear to underlie territorial battles in many species. In this paper, we discuss geometric patterns associated with pursuit, and suggest sensorimotor feedback laws that realize such patterns. We model pursuit problems as interactions between a pair of constant-speed particles (a pursuit–evasion system). The geometric patterns of interest determine pursuit manifolds: states of the interacting particle system that satisfy particular relative position and velocity criteria. We then discuss (in § 2) three different types of pursuit: classical pursuit, motion camouflage pursuit (also referred to as constant absolute target direction, CATD, pursuit in studies of prey-capture behaviour of echolocating bats (Ghose et al. 2006)) and constant bearing pursuit, each associated with a specific pursuit manifold. Each manifold is determined by a cost function that measures the distance of a given state from the manifold. Using derivatives of these cost functions along trajectories of the system, we present (in § 3) feedback laws that drive the system towards each pursuit manifold. To ensure that the particles maintain constant speed, the feedback laws are gyroscopic: the forces exerted on a particle under feedback control do not alter the kinetic energy of the particle.

* Author and address for correspondence: Institute for Systems Research, University of Maryland, College Park, MD 20742, USA (krishna@isr.umd.edu).

Received 21 November 2008; accepted 21 January 2009.

Here, we focus only on pursuit and not evasion: a pursuer senses and responds to a target (a 'pursuee' or 'evader') moving at constant speed. Depending on its sensory capabilities, the evader/pursuee may adopt a deterministic open-loop or closed-loop steering behaviour or, as we shall see later, even a random steering behaviour. Our interest is in steering behaviours that the pursuer might adopt to reach a specific pursuit manifold, with minimal assumptions about the behaviour of the target. Empirical studies of pursuit behaviour in hoverflies (Srinivasan & …), dragonflies (Mizutani et al. 2003) and echolocating bats engaged in prey capture (Ghose et al. 2006) suggest prevalence of motion camouflage or CATD pursuit. We postulate an evolutionary basis for this. In connection with this hypothesis, we investigate numerically the relative success of the different types of pursuit in § 4. We formulate an evolutionary game with three pure strategies corresponding to the three types of pursuit, with an open-loop evader (i.e. the evader's steering behaviour is not based on knowledge of the behaviour of the pursuer). Monte Carlo studies of this game are analysed in § 5 using a deterministic mean-field equation. The analysis of the convergence properties of this ordinary differential equation, which exploits a natural Riemannian geometry on the probability simplex in three dimensions, lends support to our hypothesis, i.e. that motion camouflage or CATD pursuit optimizes fitness in a precise sense.

2. Modelling interactions

We model planar pursuit interactions using gyroscopically interacting particles, as in Justh & Krishnaprasad (2006). The (unit-speed) motion of the pursuer is described by

$$\dot{\mathbf r}_p = \mathbf x_p,\qquad \dot{\mathbf x}_p = \mathbf y_p u_p,\qquad \dot{\mathbf y}_p = -\mathbf x_p u_p, \tag{2.1}$$

and the motion of the evader (with speed $\nu$) by

$$\dot{\mathbf r}_e = \nu\mathbf x_e,\qquad \dot{\mathbf x}_e = \nu\mathbf y_e u_e,\qquad \dot{\mathbf y}_e = -\nu\mathbf x_e u_e, \tag{2.2}$$

where the steering control of the evader, $u_e$, is prescribed, and the steering control of the pursuer, $u_p$, is given by a feedback law. We also define

$$\mathbf r = \mathbf r_p - \mathbf r_e, \tag{2.3}$$

which we refer to as the 'baseline' between the pursuer and evader.

Remark 2.1. In three dimensions (Justh & Krishnaprasad 2005), one uses the concept of natural frames (Bishop 1975) to formulate interacting particle models. In that setting, each particle has two steering (natural curvature) controls.

(a) Pursuit manifolds and cost functions

The state space for the two-particle pursuer–evader system is $G \times G$, where $G = SE(2)$ is the special Euclidean group in the plane. We define the cost functions $\Gamma : G\times G \to \mathbb R$ and $\Lambda : G\times G \to \mathbb R$ associated with motion camouflage pursuit and constant bearing pursuit as

$$\Gamma = \frac{\mathbf r}{|\mathbf r|}\cdot\frac{\dot{\mathbf r}}{|\dot{\mathbf r}|} = \frac{\mathrm d|\mathbf r|/\mathrm dt}{|\dot{\mathbf r}|} \quad\text{(motion camouflage)} \tag{2.4}$$

and

$$\Lambda = \frac{\mathbf r}{|\mathbf r|}\cdot R\,\mathbf x_p \quad\text{(constant bearing)}, \tag{2.5}$$

where

$$R = \begin{bmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{bmatrix} \tag{2.6}$$

for $\theta \in (-\pi/2, \pi/2)$. (The cost function (2.4) for motion camouflage was introduced in Justh & Krishnaprasad (2006).) For $R = I_{2\times2}$ (i.e. the $2\times2$ identity matrix) in (2.5), we define

$$\Lambda_0 = \frac{\mathbf r}{|\mathbf r|}\cdot\mathbf x_p \tag{2.7}$$

to be the cost function associated with classical pursuit.

[Figure 1: pursuer (p) and evader (e) trajectories.]

Figure 1. Trajectories for the pursuer and evader. The position of the pursuer is $\mathbf r_p$, $\mathbf x_p$ is the unit vector tangent to its trajectory and $\mathbf y_p$ is the corresponding unit normal vector. Similarly, $\mathbf r_e$ is the position of the evader, $\mathbf x_e$ is the unit vector tangent to the evader's trajectory and $\mathbf y_e$ is the corresponding unit normal vector. The pursuer moves at unit speed, while the evader moves at speed $\nu$.

Observe that all three cost functions $\Gamma$, $\Lambda$ and $\Lambda_0$ are only well defined for $|\mathbf r| > 0$, and that they take values on the interval $[-1, 1]$.

Using the cost functions (2.4), (2.5) and (2.7), we can define pursuit manifolds. We are careful to distinguish pursuit manifolds from the interaction laws, which drive the system towards these pursuit manifolds. Throughout this section, we restrict attention to finite intervals of time, and assume that the pursuer and evader do not collide during these intervals (so that we may assume $|\mathbf r| > 0$).
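The three cost functions can be evaluated directly from a planar state. The following is a minimal NumPy sketch, assuming headings are supplied as unit vectors and using $\dot{\mathbf r} = \mathbf x_p - \nu\mathbf x_e$, which follows from (2.1) and (2.2); the function names `rot` and `cost_functions` are hypothetical.

```python
import numpy as np

def rot(theta):
    """Planar rotation matrix R of (2.6)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def cost_functions(r_p, x_p, r_e, x_e, nu, theta):
    """Return (Gamma, Lambda, Lambda_0) from (2.4), (2.5) and (2.7).

    Requires |r| > 0 and |r_dot| > 0 (the latter holds whenever 0 < nu < 1).
    """
    x_p = np.asarray(x_p, dtype=float)
    r = np.asarray(r_p, dtype=float) - np.asarray(r_e, dtype=float)  # baseline (2.3)
    r_dot = x_p - nu * np.asarray(x_e, dtype=float)                  # relative velocity
    s = r / np.linalg.norm(r)                                        # unit baseline vector
    gamma = s @ r_dot / np.linalg.norm(r_dot)                        # (2.4)
    lam = s @ (rot(theta) @ x_p)                                     # (2.5)
    lam0 = s @ x_p                                                   # (2.7)
    return gamma, lam, lam0

# Pursuer at (1, 0) heading straight at an evader at the origin that flees along +x.
g, lam, lam0 = cost_functions([1.0, 0.0], [-1.0, 0.0], [0.0, 0.0], [1.0, 0.0],
                              nu=0.6, theta=0.3)
```

In this configuration the relative velocity points straight down the baseline, so $\Gamma = \Lambda_0 = -1$, while $\Lambda = -\cos 0.3$ because the constant bearing heading would be offset by $\theta$.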

[Figure 2, panels (a)–(c): pursuer (p) and evader (e) configurations.]

Figure 2. Geometric representations of three pursuit manifolds via state constraints: (a) classical pursuit, (b) constant bearing pursuit and (c) motion camouflage pursuit.

We define the motion camouflage pursuit manifold by the condition $\Gamma = -1$. To interpret this condition, let $\mathbf w$ be the transverse component of relative velocity, i.e.

$$\mathbf w = \dot{\mathbf r} - \left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r}{|\mathbf r|}, \tag{2.8}$$

where we note that $\dot{\mathbf r}$ is the relative velocity and $((\mathbf r/|\mathbf r|)\cdot\dot{\mathbf r})(\mathbf r/|\mathbf r|)$ is the component of $\dot{\mathbf r}$ along the baseline. The pursuer–evader system is said to be in a 'state of motion camouflage (with respect to a point at infinity)' iff $\mathbf w = 0$ (see Justh & Krishnaprasad 2006). The motion camouflage pursuit manifold is thus defined by the conditions $\mathbf w = 0$ and $(\mathrm d/\mathrm dt)|\mathbf r| < 0$ (i.e. the transverse component of relative velocity vanishes, and the baseline shortens with time).

The constant bearing pursuit manifold is defined by the condition $\Lambda = -1$. This condition is satisfied when the heading of the pursuer makes a fixed angle $\theta$ with the line of sight from the pursuer to the evader. Similarly, the classical pursuit manifold is defined by the condition $\Lambda_0 = -1$. This condition is satisfied when the heading of the pursuer points along the baseline towards the evader (and the baseline shortens with time).

Classical pursuit and constant bearing pursuit are discussed in a rich historical context by Shneydor and by Nahin (2007), who make contact with models of missile guidance and collective behaviour. Shneydor also discusses parallel navigation, which is precisely what we mean by motion camouflage or CATD pursuit.

3. Steering laws for pursuit

There are two features we expect from the steering laws associated with each type of pursuit. First, they should leave the associated pursuit manifold invariant. Second, for arbitrary initial conditions, they should drive the system (2.1) and (2.2) towards the associated pursuit manifold.

(a) Motion camouflage pursuit law

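For the planar setting, it is convenient to define $\mathbf q^\perp$ to be the vector $\mathbf q$ rotated in the plane anticlockwise by $\pi/2$ radians. Then, (2.8) implies

$$\mathbf w = \dot{\mathbf r} - \left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r}{|\mathbf r|} = \left(\frac{\mathbf r^\perp}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r^\perp}{|\mathbf r|} = -\left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right)\frac{\mathbf r^\perp}{|\mathbf r|}. \tag{3.1}$$

It has been shown (…) that the pursuer steering law

$$u_p = -\mu\left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right) + \left(\frac{(\mathbf x_p\cdot\mathbf x_e) - \nu}{1 - \nu(\mathbf x_p\cdot\mathbf x_e)}\right)\nu^2 u_e, \tag{3.2}$$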

where $\mu > 0$, gives (after some calculation)

$$\dot\Gamma = -\left(\frac{\mu}{|\dot{\mathbf r}|}\bigl(1 - \nu(\mathbf x_p\cdot\mathbf x_e)\bigr) - \frac{|\dot{\mathbf r}|}{|\mathbf r|}\right)\frac{1}{|\dot{\mathbf r}|^2}\left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right)^2. \tag{3.3}$$

If $\Gamma = -1$ (i.e. the system is on the motion camouflage pursuit manifold), then the transverse component of the relative velocity is $\mathbf w = 0$; since, by (3.1), $|\mathbf w| = |(\mathbf r/|\mathbf r|)\cdot\dot{\mathbf r}^\perp|$, we see from (3.3) that $\dot\Gamma = 0$. Thus, steering law (3.2) leaves the motion camouflage pursuit manifold invariant.

Now suppose that the system is not on the motion camouflage pursuit manifold. Because $|\dot{\mathbf r}| > 0$ under the assumption $0 < \nu < 1$ (indeed $|\dot{\mathbf r}| = |\mathbf x_p - \nu\mathbf x_e| \ge 1 - \nu$), (3.3) is well posed when $|\mathbf r| > 0$, i.e. when the pursuer and evader are not in a collision state. We may then conclude that for any choice of $\mu > 0$, there exists $r_0 > 0$ such that $\dot\Gamma \le 0$ for all $|\mathbf r| > r_0$. This observation forms the basis of a convergence result: if the pursuer uses steering law (3.2), the system will approach the motion camouflage pursuit manifold, regardless of initial conditions, provided the distance between the pursuer and evader is sufficiently large.
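One explicit (not necessarily tight) choice of $r_0$ follows from (3.3). This short derivation is our own addition, not taken from the original argument:

$$\dot\Gamma \le 0 \quad\Longleftarrow\quad \frac{\mu}{|\dot{\mathbf r}|}\bigl(1-\nu(\mathbf x_p\cdot\mathbf x_e)\bigr) \ge \frac{|\dot{\mathbf r}|}{|\mathbf r|} \quad\Longleftrightarrow\quad |\mathbf r| \ge \frac{|\dot{\mathbf r}|^2}{\mu\bigl(1-\nu(\mathbf x_p\cdot\mathbf x_e)\bigr)}.$$

Since $|\dot{\mathbf r}| = |\mathbf x_p - \nu\mathbf x_e| \le 1+\nu$ and $1 - \nu(\mathbf x_p\cdot\mathbf x_e) \ge 1-\nu > 0$, it suffices to take

$$r_0 = \frac{(1+\nu)^2}{\mu(1-\nu)}.$$

For example, with $\nu = 0.6$ and $\mu = 10$ (the values used in the simulations of § 4), this gives $r_0 = 2.56/4 = 0.64$.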

Remark 3.1. It is important to distinguish between the pursuer–evader system approaching a pursuit manifold and the pursuer actually approaching the evader. Under the hypotheses we make, if the system is on a pursuit manifold, e.g. if $\Gamma = -1$, then the distance between the pursuer and evader is guaranteed to be decreasing. However, the approach to the pursuit manifold will not necessarily entail decreasing distance between the pursuer and evader at each instant of time. Furthermore, the distance from a pursuit manifold may be arbitrarily small (e.g. $0 < \varepsilon = \Gamma - (-1) \ll 1$), while the distance between the pursuer and evader (with $|\mathbf r| < \infty$) is arbitrarily large.

While (3.2) leads to favourable behaviour of $\Gamma$ (i.e. conditions can be readily stated for which $\Gamma$ is decreasing with time towards $-1$), it has the disadvantage of not being biologically plausible due to the term involving $u_e$. It seems too strong an assumption that the pursuer would be able to accurately estimate the curvature of the evader trajectory. Therefore, the rightmost term in (3.2) was dropped (…), leaving

$$u_p = -\mu\left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right). \tag{3.4}$$

Control law (3.4) is a 'high-gain feedback law' because, by taking $\mu > 0$ sufficiently large, it can be proved that the pursuer–evader system will still be driven towards the motion camouflage pursuit manifold. Details of the proof can be found in … Krishnaprasad (2006). The analysis has also been extended to three-dimensional motion camouflage pursuit (Reddy et al. 2006), motion camouflage pursuit with sensorimotor delay (Reddy 2007; Reddy et al. 2007) and motion camouflage pursuit in a stochastic setting (Galloway et al. 2007). However, note that (3.4) does not leave the motion camouflage pursuit manifold invariant.
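A minimal forward-Euler sketch of (2.1) and (2.2), with the pursuer using (3.4), illustrates the behaviour described above. It assumes the unit normals are $\mathbf y = \mathbf x^\perp$ (anticlockwise), so each heading can be stored as an angle $\phi$ with $\dot\phi_p = u_p$ and $\dot\phi_e = \nu u_e$. The gain $\mu = 10$ and speed $\nu = 0.6$ follow § 4; the initial conditions, step size, time limit and capture distance are illustrative choices, and `simulate_catd` is a hypothetical name. With a straight-line evader ($u_e = 0$) the term dropped from (3.2) vanishes, so in this example (3.4) coincides with (3.2).

```python
import numpy as np

def perp(q):
    """Rotate a planar vector anticlockwise by pi/2."""
    return np.array([-q[1], q[0]])

def simulate_catd(r_p, phi_p, r_e, phi_e, nu=0.6, mu=10.0, u_e=0.0,
                  dt=0.01, t_max=60.0, capture_dist=0.05):
    """Forward-Euler sketch of (2.1)-(2.2) with the pursuer using law (3.4).

    Returns (captured, time, list of Gamma values sampled once per step).
    """
    r_p = np.asarray(r_p, dtype=float)
    r_e = np.asarray(r_e, dtype=float)
    t, gammas = 0.0, []
    while t < t_max:
        x_p = np.array([np.cos(phi_p), np.sin(phi_p)])
        x_e = np.array([np.cos(phi_e), np.sin(phi_e)])
        r = r_p - r_e                                   # baseline (2.3)
        dist = np.linalg.norm(r)
        if dist < capture_dist:
            return True, t, gammas
        r_dot = x_p - nu * x_e                          # relative velocity
        s = r / dist
        gammas.append(s @ r_dot / np.linalg.norm(r_dot))  # Gamma, (2.4)
        u_p = -mu * (s @ perp(r_dot))                   # CATD steering law (3.4)
        r_p = r_p + dt * x_p
        r_e = r_e + dt * nu * x_e
        phi_p = phi_p + dt * u_p                        # heading angle: phi_p' = u_p
        phi_e = phi_e + dt * nu * u_e                   # heading angle: phi_e' = nu * u_e
        t += dt
    return False, t, gammas

# Evader starts at the origin heading along +x; pursuer starts at (-5, 8), also heading +x.
captured, t_cap, gammas = simulate_catd([-5.0, 8.0], 0.0, [0.0, 0.0], 0.0)
```

Here $\Gamma$ starts near $-0.53$ and is driven close to $-1$ within a couple of time units, after which the pursuer closes along an almost fixed baseline direction.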

(b) Constant bearing pursuit law

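A feedback law that drives the pursuer–evader system (2.1) and (2.2) to the constant bearing pursuit manifold can be derived as follows. Note that

$$\frac{\mathrm d}{\mathrm dt}\left(\frac{\mathbf r}{|\mathbf r|}\right) = \frac{\dot{\mathbf r}}{|\mathbf r|} - \frac{\mathbf r\,(\mathbf r\cdot\dot{\mathbf r})}{|\mathbf r|^3} = \frac{1}{|\mathbf r|}\left(\dot{\mathbf r} - \left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r}{|\mathbf r|}\right) = \frac{\mathbf w}{|\mathbf r|}. \tag{3.5}$$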

Differentiating $\Lambda$ with respect to time along trajectories of (2.1) and (2.2) yields

$$\dot\Lambda = \frac{\mathbf r}{|\mathbf r|}\cdot R\dot{\mathbf x}_p + R\mathbf x_p\cdot\frac{\mathbf w}{|\mathbf r|} = \left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf y_p\right)u_p + R\mathbf x_p\cdot\frac{\mathbf w}{|\mathbf r|}. \tag{3.6}$$

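This suggests the pursuer steering law (to decrease $\Lambda$ along trajectories)

$$u_p = -\eta\left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf y_p\right) - \frac{\mathbf w\cdot R\mathbf x_p}{\mathbf r\cdot R\mathbf y_p} \tag{3.7}$$

for $\eta > 0$. From the identity

$$\dot{\mathbf r} = \left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r}{|\mathbf r|} + \left(\frac{\mathbf r^\perp}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r^\perp}{|\mathbf r|} \tag{3.8}$$

and

$$\mathbf w = \left(\frac{\mathbf r^\perp}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\frac{\mathbf r^\perp}{|\mathbf r|}, \tag{3.9}$$

it follows (using $\mathbf a^\perp\cdot\mathbf b = -\mathbf a\cdot\mathbf b^\perp$ and $\mathbf y_p = \mathbf x_p^\perp$) that

$$\mathbf w\cdot R\mathbf x_p = \left(\frac{\mathbf r^\perp}{|\mathbf r|}\cdot\dot{\mathbf r}\right)\left(\frac{\mathbf r^\perp}{|\mathbf r|}\cdot R\mathbf x_p\right) = \left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right)\left(\frac{\mathbf r}{|\mathbf r|}\cdot(R\mathbf x_p)^\perp\right) = \left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right)\left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf y_p\right), \tag{3.10}$$

since planar rotations (i.e. left multiplication by $R$ and the 'perp' operation) commute. Thus, (3.7) may be expressed as

$$u_p = -\eta\left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf y_p\right) - \frac{1}{|\mathbf r|}\left(\frac{\mathbf r}{|\mathbf r|}\cdot\dot{\mathbf r}^\perp\right), \tag{3.11}$$

which, unlike (3.7), remains well defined when $\mathbf r\cdot R\mathbf y_p = 0$.

Note the similarity in the form of the second term of (3.11) to steering law (3.4) for motion camouflage: it is (3.4) with the gain $\mu$ replaced by $1/|\mathbf r|$.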
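Anticipating (3.12) below, which shows that (3.11) gives $\dot\Lambda = -\eta(1 - \Lambda^2)$ whatever the evader does, the following sketch integrates (2.1) and (2.2) with forward Euler under (3.11) and compares $\Lambda$ at $t = 1$ with the closed form (3.17). It assumes $\mathbf y = \mathbf x^\perp$ (headings stored as angles, as in the previous sketch) and a circularly turning evader; the initial state, $\eta = 1$, $u_e = 0.5$ and step size are illustrative, and the function names are hypothetical.

```python
import numpy as np

def perp(q):
    """Rotate a planar vector anticlockwise by pi/2."""
    return np.array([-q[1], q[0]])

def rot(theta):
    """Planar rotation matrix R of (2.6)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def constant_bearing_lambda(r_p, phi_p, r_e, phi_e, theta=0.3, eta=1.0,
                            nu=0.6, u_e=0.5, dt=1e-3, t_end=1.0):
    """Euler sketch of (2.1)-(2.2) with the pursuer using (3.11).

    Returns Lambda, from (2.5), evaluated at time t_end.
    """
    R = rot(theta)
    r_p = np.asarray(r_p, dtype=float)
    r_e = np.asarray(r_e, dtype=float)
    for _ in range(int(round(t_end / dt))):
        x_p = np.array([np.cos(phi_p), np.sin(phi_p)])
        x_e = np.array([np.cos(phi_e), np.sin(phi_e)])
        r = r_p - r_e
        dist = np.linalg.norm(r)
        s = r / dist
        r_dot = x_p - nu * x_e
        # Steering law (3.11), with y_p = perp(x_p).
        u_p = -eta * (s @ (R @ perp(x_p))) - (s @ perp(r_dot)) / dist
        r_p = r_p + dt * x_p
        r_e = r_e + dt * nu * x_e
        phi_p = phi_p + dt * u_p
        phi_e = phi_e + dt * nu * u_e          # evader on a circular path
    x_p = np.array([np.cos(phi_p), np.sin(phi_p)])
    r = r_p - r_e
    return (r / np.linalg.norm(r)) @ (R @ x_p)

# Pursuer 10 units above the evader, both heading along +x, so Lambda(0) = sin(0.3).
lam_num = constant_bearing_lambda([0.0, 10.0], 0.0, [0.0, 0.0], 0.0)
lam_exact = np.tanh(np.arctanh(np.sin(0.3)) - 1.0 * 1.0)   # (3.17) with eta = t = 1
```

The two values agree up to the $O(\Delta t)$ error of the Euler scheme, even though the evader is turning, which is the point of (3.12).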

To measure how close the pursuer–evader system is to a pursuit manifold, we use the notion of finite-time accessibility (…), defined as follows.

Definition 3.2. Given the system (2.1) and (2.2) with cost function $\Gamma$ (respectively, $\Lambda$), we say that the motion camouflage (respectively, constant bearing) pursuit manifold is accessible in finite time if, for any $\varepsilon > 0$, there exists a time $t_1 > 0$ such that $\Gamma(t_1) \le -1 + \varepsilon$ (respectively, $\Lambda(t_1) \le -1 + \varepsilon$).

Proposition 3.3. Consider the systems (2.1) and (2.2), with $\Lambda$ defined by (2.5) with $\theta \in (-\pi/2, \pi/2)$, and control law (3.11), with the following hypotheses:

(A1) $0 < \nu < 1$ (and $\nu$ is constant),
(A2) $u_e$ is continuous and $|u_e|$ is bounded,
(A3) $\Lambda(0) < 1$, and
(A4) $|\mathbf r(0)| > 0$.

Then, the constant bearing pursuit manifold is accessible in finite time using high-gain feedback (i.e. by choosing $\eta > 0$ sufficiently large).

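Proof. (Similar to the proof of proposition 3.3 in … (2006), but somewhat simpler.) From (3.6) and (3.11), we have

$$\dot\Lambda = -\eta\left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf y_p\right)^2 = -\eta\left(1 - \Lambda^2\right), \tag{3.12}$$

where we have used

$$1 = \left|\frac{\mathbf r}{|\mathbf r|}\right|^2 = \left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf x_p\right)^2 + \left(\frac{\mathbf r}{|\mathbf r|}\cdot(R\mathbf x_p)^\perp\right)^2 = \Lambda^2 + \left(\frac{\mathbf r}{|\mathbf r|}\cdot R\mathbf y_p\right)^2. \tag{3.13}$$

From (3.12), we can write

$$\frac{\mathrm d\Lambda}{1 - \Lambda^2} = -\eta\,\mathrm dt, \tag{3.14}$$

which, on integrating both sides, leads to

$$\int_{\Lambda(0)}^{\Lambda}\frac{\mathrm d\tilde\Lambda}{1 - \tilde\Lambda^2} = -\eta\int_0^t \mathrm d\tilde t = -\eta t. \tag{3.15}$$

Noting that

$$\int_{\Lambda(0)}^{\Lambda}\frac{\mathrm d\tilde\Lambda}{1 - \tilde\Lambda^2} = \int_{\Lambda(0)}^{\Lambda}\mathrm d\bigl(\tanh^{-1}\tilde\Lambda\bigr) = \tanh^{-1}\Lambda - \tanh^{-1}\Lambda(0), \tag{3.16}$$

we see that

$$\Lambda(t) = \tanh\bigl(\tanh^{-1}\Lambda(0) - \eta t\bigr), \tag{3.17}$$

where we note that $\tanh(\cdot)$ and $\tanh^{-1}(\cdot)$ are monotone increasing functions.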

Now we estimate how long $|\mathbf r| > 0$, which, in turn, determines how large $t$ can become in (3.17). Since $|\dot{\mathbf r}| = |\mathbf x_p - \nu\mathbf x_e| \le 1 + \nu$, we have

$$\frac{\mathrm d}{\mathrm dt}|\mathbf r| \ge -(1 + \nu), \tag{3.18}$$

from which we conclude that

$$|\mathbf r(t)| \ge |\mathbf r(0)| - (1 + \nu)t, \quad \forall t \ge 0. \tag{3.19}$$

Then, defining

$$T = \frac{|\mathbf r(0)|}{1 + \nu} > 0 \tag{3.20}$$

to be the minimum interval of time over which we can guarantee the dynamics (specifically, the feedback control $u_p$) is well defined, we conclude that

$$\Lambda(T) = \tanh\bigl(\tanh^{-1}\Lambda(0) - \eta T\bigr) = \tanh\left(\tanh^{-1}\Lambda(0) - \frac{\eta|\mathbf r(0)|}{1 + \nu}\right). \tag{3.21}$$

Next, we note that

$$\tanh(x) \le -1 + \varepsilon \iff x \le \frac12\ln\frac{\varepsilon}{2 - \varepsilon}, \tag{3.22}$$

for $0 < \varepsilon \ll 1$. Thus,

$$\eta \ge \frac{1 + \nu}{|\mathbf r(0)|}\left(\tanh^{-1}\Lambda(0) - \frac12\ln\frac{\varepsilon}{2 - \varepsilon}\right) > 0 \tag{3.23}$$

implies that

$$\tanh^{-1}\Lambda(0) - \frac{\eta|\mathbf r(0)|}{1 + \nu} \le \frac12\ln\frac{\varepsilon}{2 - \varepsilon}, \tag{3.24}$$

so that

$$\Lambda(T) = \tanh\left(\tanh^{-1}\Lambda(0) - \frac{\eta|\mathbf r(0)|}{1 + \nu}\right) \le -1 + \varepsilon. \tag{3.25}$$

We thus see that the constant bearing pursuit manifold is accessible in finite time $T$ if we choose $\eta$ sufficiently large so as to satisfy (3.23). ∎
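The gain bound (3.23) is easy to evaluate numerically. The sketch below computes the smallest $\eta$ allowed by (3.23) and checks it against the closed form (3.21). The function names and example numbers ($\Lambda(0) = 0.5$, $|\mathbf r(0)| = 10$, $\nu = 0.6$, $\varepsilon = 10^{-3}$) are illustrative assumptions, and the input check restricts $\Lambda(0)$ to $(-1, 1)$ so that $\tanh^{-1}\Lambda(0)$ is finite.

```python
import math

def min_gain_eta(lam0, r0, nu, eps):
    """Smallest eta satisfying (3.23), i.e. (3.23) taken with equality."""
    if not (-1.0 < lam0 < 1.0 and r0 > 0 and 0 < nu < 1 and 0 < eps < 1):
        raise ValueError("need -1 < lam0 < 1, r0 > 0, 0 < nu < 1, 0 < eps < 1")
    T = r0 / (1.0 + nu)                          # guaranteed time horizon (3.20)
    x_star = 0.5 * math.log(eps / (2.0 - eps))   # tanh(x_star) = -1 + eps, cf. (3.22)
    return (math.atanh(lam0) - x_star) / T

def lambda_at_T(lam0, r0, nu, eta):
    """Closed form (3.21) for Lambda at time T = r0 / (1 + nu)."""
    return math.tanh(math.atanh(lam0) - eta * r0 / (1.0 + nu))

eta = min_gain_eta(0.5, 10.0, 0.6, 1e-3)          # roughly 0.70
```

Slightly increasing this gain pushes $\Lambda(T)$ below $-1 + \varepsilon$, whereas halving it leaves $\Lambda(T)$ well above that threshold, which illustrates the high-gain character of the result.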

Remark 3.4. If $\Lambda = -1$ (i.e. the system is on the constant bearing pursuit manifold), then it is clear from (3.12) that $\dot\Lambda = 0$. Thus, the constant bearing pursuit manifold is invariant under steering law (3.11).

Remark 3.5. In the proof of the above proposition, we see that none of the calculations require $\theta$ in (2.6) to be restricted to the interval $(-\pi/2, \pi/2)$. However, if $\pi/2 \le \theta \le \pi$ or $-\pi \le \theta \le -\pi/2$, then $\Lambda = -1$ corresponds to a lengthening baseline (hence not pursuit).

Remark 3.6. The above proposition also applies to classical pursuit: we simply take $R = I_{2\times2}$. Furthermore, the classical pursuit manifold is invariant under steering law (3.11) with $R = I_{2\times2}$.

In this section, we have presented feedback laws for the pursuer to reach each of the three pursuit manifolds. Depending on its sensory capabilities, the pursuer may adopt a deterministic closed-loop steering behaviour to reach a specific pursuit manifold. Thus, the choice of pursuit manifold constitutes a (pure) strategy. Particular strategies may be discernible in nature as fulfilling certain ecological imperatives. Empirical studies of pursuit behaviour in dragonflies (Mizutani et al. 2003) and echolocating bats engaged in prey capture (Ghose et al. 2006) suggest prevalence of the motion camouflage (CATD) pursuit strategy.

Our aim in § 4 is to examine an (artificial) evolutionary basis for this prevalence.

4. An evolutionary game

Having introduced pursuit manifolds and steering laws for three types of pursuit, we now consider how to compare their relative performance. For a class of evader trajectories that are only defined statistically, and a system model that is intrinsically nonlinear, we expect to have to use a Monte Carlo approach. One way of organizing the calculations is an evolutionary game formulation, an approach dating back to (…) and anticipated in the PhD thesis of Nash (1950). (See also Maynard Smith 1982; Weibull 1995; Hofbauer & Sigmund …, 2003.) In an evolutionary game with a finite number of pure strategies, each member of a (large) population of players is programmed to play one of the strategies. A member playing pure strategy $i$ is pitted against a randomly selected player from the population; equivalently, the $i$ player is facing a mixed strategy with probability vector equal to the vector of population shares for each of the pure strategies. If the pay-off to the $i$ player is higher (lower) than the population average pay-off, then the population share of $i$ players is proportionately increased (decreased). This is the essence of replicator dynamics, as in (…). For the purposes of this paper, our set-up is somewhat simpler: our population consists of players (bats, dragonflies, etc.) programmed to play one of three different pursuit strategies, and rather than competing head-to-head, the trials involve members of each population competing against nature. In the literature, this is referred to as frequency-independent fitness (…; Hofbauer & Sigmund 2003). The mathematical development for these games against nature may suggest particular approaches to analyse more complicated scenarios involving head-to-head competition (which we will explore in future work). In the next subsection, we give a short mathematical summary of the type of evolutionary game we play.

We note that there is a rich body of work, dating back to the papers of Isaacs in the 1950s (…), in which pursuit–evasion games are studied as differential games. This approach focuses on what we refer to here as individual trials, as opposed to the population view taken in this paper. The population view is essential when one seeks answers to questions of an evolutionary nature.

(a) Evolutionary dynamics

Consider a finite set of pure strategies, indexed by $i$, and let $p_i$ denote the proportion of a population that adopts strategy $i$ at a particular discrete time step (generation). Then,

$$\sum_{i=1}^{n} p_i = 1, \tag{4.1}$$

where $n$ is the number of pure strategies. Associated with each strategy $i$ is a pay-off $w_i$, which, in turn, gives rise to an average fitness

$$\bar w = \sum_{j=1}^{n} w_j p_j. \tag{4.2}$$

In general, each $w_i$, $i = 1, 2, \ldots, n$, will be a function of the population vector $\mathbf p = (p_1, p_2, \ldots, p_n)$. The average fitness $\bar w$ thus depends on both the individual pay-offs and the proportion of the population adopting each strategy. Given a rule for determining the pay-offs, we seek the population vector that maximizes the average fitness.

In the discrete-time setting, as stated at the beginning of this section, the standard evolutionary game formulation involves updating the proportion of the population using strategy $i$ according to

$$p_i(k+1) = p_i(k)\,\frac{w_i}{\bar w}, \tag{4.3}$$

where $k$ is the discrete time index. We note that, by (4.2),

$$\sum_{i=1}^{n} p_i\,\frac{w_i}{\bar w} = \frac{\bar w}{\bar w} = 1, \tag{4.4}$$

so that $\mathbf p(k+1)$ satisfies (4.1).
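The update (4.3) is a one-liner in NumPy. The sketch below, with a hypothetical function name and made-up pay-offs held fixed across generations (not values from the paper), iterates it for 50 generations, the number used in § 4(b). With constant pay-offs, (4.3) gives $p_i(k) \propto p_i(0)\,w_i^k$, which the usage example exploits as a check.

```python
import numpy as np

def replicator_step(p, w):
    """One generation of the discrete replicator update (4.3).

    p: population shares summing to 1; w: strictly positive pay-offs.
    """
    p = np.asarray(p, dtype=float)
    w = np.asarray(w, dtype=float)
    w_bar = p @ w                  # average fitness (4.2)
    return p * w / w_bar           # (4.3); the result sums to 1 by (4.4)

# Hypothetical, fixed pay-offs for three strategies.
w = np.array([1.0, 1.5, 1.2])
p = np.full(3, 1.0 / 3.0)
for _ in range(50):
    p = replicator_step(p, w)
```

After 50 generations almost the whole population plays the strategy with the largest pay-off, while the shares still sum to 1.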

To pass from the discrete- to the continuous-time setting, we use

$$\frac1h\bigl[p_i(k+1) - p_i(k)\bigr] = \frac1h\left[p_i(k)\frac{w_i}{\bar w} - p_i(k)\right] = p_i(k)\,\frac{w_i - \bar w}{h\bar w}, \quad i = 1, \ldots, n, \tag{4.5}$$

where $h > 0$ corresponds to the time-step duration. This leads us to write

$$\dot p_i = p_i\,\frac{w_i - \bar w}{h\bar w}, \quad i = 1, \ldots, n, \tag{4.6}$$

but by applying a further (inhomogeneous) time-scale change, we can replace (4.6) with

$$\dot p_i = p_i\,(w_i - \bar w). \tag{4.7}$$

This is the form of the replicator dynamics arrived at in (…). When $w_i$ is linear in $\mathbf p$, we are in the setting of matrix games; see (…) for numerous examples of matrix games.

For a general evolutionary game, $w_i = w_i(\mathbf p)$, $i = 1, \ldots, n$, would be computed using Monte Carlo methods. The problem we consider is more restrictive: the members of the population are not directly competing with each other, but instead are competing independently against nature, with $N$ strategic match-ups in each generation of the population to compute the pay-offs from sample averages.

Therefore, for us, $w_i$, $i = 1, \ldots, n$, is independent of $\mathbf p$, but random, depending on the state of nature. Thus, we have expected average pay-off differentials

$$\mathrm E\bigl[p_i(w_i - \bar w)\bigr] = \mathrm E\left[p_i w_i - p_i\sum_{j=1}^{n} p_j w_j\right] = p_i\,\mathrm E[w_i] - p_i\sum_{j=1}^{n} p_j\,\mathrm E[w_j], \quad i = 1, \ldots, n, \tag{4.8}$$

where $\mathrm E[\cdot]$ denotes expectation. We assume the 'law of large numbers',

$$\mathrm E[w_i] \to c_i \quad\text{as } N \to \infty, \quad i = 1, \ldots, n, \tag{4.9}$$

where $N$ is the number of samples, and $c_i > 0$ by convention. This leads to the mean-field equations

$$\dot p_i = p_i\,(c_i - \bar c), \quad i = 1, \ldots, n, \tag{4.10}$$

where

$$\bar c = \sum_{i=1}^{n} p_i c_i. \tag{4.11}$$
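Because the $c_i$ are constants, (4.10) can be solved in closed form: $p_i(t) = p_i(0)e^{c_i t}/\sum_j p_j(0)e^{c_j t}$, as one checks by differentiating and using (4.11). The sketch below, with hypothetical function names and made-up values of $c_i$ and $\mathbf p(0)$, integrates (4.10) with classical fourth-order Runge–Kutta and compares the result with this closed form. It is offered as a numerical check, not as the analysis carried out in § 5.

```python
import numpy as np

def mean_field_rhs(p, c):
    """Right-hand side of (4.10), with c_bar from (4.11)."""
    return p * (c - p @ c)

def integrate_rk4(p0, c, t_end, dt=0.01):
    """Classical fourth-order Runge-Kutta integration of (4.10)."""
    p = np.asarray(p0, dtype=float)
    c = np.asarray(c, dtype=float)
    for _ in range(int(round(t_end / dt))):
        k1 = mean_field_rhs(p, c)
        k2 = mean_field_rhs(p + 0.5 * dt * k1, c)
        k3 = mean_field_rhs(p + 0.5 * dt * k2, c)
        k4 = mean_field_rhs(p + dt * k3, c)
        p = p + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return p

def mean_field_exact(p0, c, t):
    """Closed form p_i(t) = p_i(0) exp(c_i t) / sum_j p_j(0) exp(c_j t)."""
    z = np.log(np.asarray(p0, dtype=float)) + np.asarray(c, dtype=float) * t
    e = np.exp(z - z.max())        # shift the exponents to avoid overflow
    return e / e.sum()

c = np.array([1.0, 2.0, 1.5])      # hypothetical limiting pay-offs c_i > 0
p0 = np.array([0.5, 0.3, 0.2])
p_num = integrate_rk4(p0, c, t_end=5.0)
p_ref = mean_field_exact(p0, c, 5.0)
```

The closed form makes the long-time behaviour transparent: the population concentrates on the strategy with the largest $c_i$ (here the second one), i.e. on the vertex of the simplex that maximizes $\bar c$.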

We expect the behaviour of the mean-field equations (4.10) to be connected to the asymptotic behaviour of the differential equations with random coefficients (4.7). Before we discuss the mean-field equations further, we give details of our Monte Carlo experiments.

(b) Simulations

Simulations that illustrate the evolutionary game dynamics were performed. For the purposes of the simulations, we took $n = 3$ and let the $p_i$ correspond to the three types of pursuit. The constraint $\sum_{i=1}^{3} p_i = 1$ was imposed for all time. Denoting the number of trials in generation $j$ for strategy $i$ by $m_{ij}$, the pay-offs are defined as

$$w_{ij} = \frac{1}{m_{ij}}\sum_{k=1}^{m_{ij}}\frac{1}{t_{kij}}, \tag{4.12}$$

where $t_{kij}$ is the time to capture for the $k$th trial in generation $j$ using strategy $i$.

Because our evolutionary game setting involves competition against nature rather than directly between pursuers, we take $m_{ij} = m = \text{const}$. Note that the pay-off $w_i$ in the previous subsection is simply $w_{ij}$ with the generation index $j$ suppressed. An alternative definition of the pay-off would be

$$w_{ij} = \left(\frac1m\sum_{k=1}^{m} t_{kij}\right)^{-1}. \tag{4.13}$$
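Both pay-offs reward fast capture, but they weight slow trials differently. The plain-Python sketch below (hypothetical function names, made-up capture times for $m = 3$ trials) computes (4.12) and (4.13) side by side.

```python
def payoff_mean_reciprocal(capture_times):
    """Pay-off (4.12): average of 1/t over the m trials of one strategy."""
    return sum(1.0 / t for t in capture_times) / len(capture_times)

def payoff_reciprocal_mean(capture_times):
    """Pay-off (4.13): reciprocal of the mean capture time."""
    return len(capture_times) / sum(capture_times)

times = [1.0, 2.0, 4.0]                   # hypothetical capture times
w_412 = payoff_mean_reciprocal(times)     # (1 + 1/2 + 1/4) / 3 = 7/12
w_413 = payoff_reciprocal_mean(times)     # 1 / (7/3) = 3/7
```

By the arithmetic–harmonic mean inequality, (4.12) is never smaller than (4.13), with equality only when all capture times in the generation are equal; (4.13) therefore penalizes a few slow captures more heavily.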

For each generation, individual pay-offs were computed using (4.12) or (4.13), and the average fitness using (4.2). The population proportions for the succeeding generation were then computed using (4.3). By this method, a single trajectory in the simplex could be generated. Repeating the entire procedure many times from random initial values $(p_1(0), p_2(0), p_3(0))$ yields a phase portrait for the evolutionary game on the simplex. (For each initial condition in the simplex, a total of 50 generations were run.)

Within each generation, various types of evader paths were used: linear, circular, periodic and (approximately) piecewise linear. Linear paths were generated by setting $u_e \equiv 0$ in (2.2). Circular paths were generated by setting $u_e \equiv \text{const}$ in (2.2). (The particular value $u_e = 0.5$ was used.) Periodic paths were generated by setting $u_e = a\cos(\omega t)$ in (2.2), where $a, \omega \in \mathbb R^+$ were constant. (The particular values $a = \omega = 1$ were used.) Approximately piecewise linear paths were generated by first specifying two positive parameters $\alpha$ (the time between turns) and $\kappa$ (the maximum absolute steering control) and then selecting random values for $u_e$ uniformly distributed between $-\kappa$ and $\kappa$. (The particular values used for $\alpha$ were 0.05, 0.1, 0.15, 0.2 and 0.25, and the particular values used for $\kappa$ were 1, 2 and 3, giving a total of 15 combinations.) The initial position of the evader was always set to $\mathbf r_e = 0$, and the initial heading of the evader was always set to $\mathbf x_e = (1, 0)$. However, the initial position $\mathbf r_p$ of the pursuer was randomly chosen from a uniform distribution on a square centred at the origin (with side length 20; figure 3), and the initial heading $\mathbf x_p$ of the pursuer was randomly chosen from a uniform distribution on the circle. The pursuer always moved at unit speed, and the speed of the evader was taken to be $\nu = 0.6$.
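The four evader steering schedules can be generated as functions $t \mapsto u_e(t)$. In the sketch below, the names, the default horizon `t_max`, and the reading of "time between turns" as holding each random steering value constant on intervals of length $\alpha$ are our assumptions; the defaults $u_e = 0.5$ and $a = \omega = 1$ follow the values quoted above.

```python
import itertools
import numpy as np

# The 15 (alpha, kappa) combinations quoted above.
PIECEWISE_SETTINGS = list(itertools.product([0.05, 0.1, 0.15, 0.2, 0.25], [1, 2, 3]))

def make_evader_control(kind, rng, t_max=100.0, u_circ=0.5, a=1.0, omega=1.0,
                        alpha=0.1, kappa=2.0):
    """Return a function t -> u_e(t) for one of the four evader path types."""
    if kind == "linear":
        return lambda t: 0.0
    if kind == "circular":
        return lambda t: u_circ
    if kind == "periodic":
        return lambda t: a * np.cos(omega * t)
    if kind == "piecewise":
        # A fresh steering value every alpha time units, uniform on [-kappa, kappa).
        n = int(np.ceil(t_max / alpha)) + 1
        values = rng.uniform(-kappa, kappa, size=n)
        return lambda t: float(values[min(int(t // alpha), n - 1)])
    raise ValueError(f"unknown evader path type: {kind}")

rng = np.random.default_rng(0)
u_e = make_evader_control("piecewise", rng, alpha=0.25, kappa=3.0)
```

Passing an explicit `numpy.random.Generator` keeps each Monte Carlo generation reproducible when seeded.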

The numerical integrations needed for the pursuer–evader encounters in each trial were performed using a forward Euler method (with a fixed step size of 0.05 time units). The feedback gains $\mu$ in (3.4) for the CATD law and $\eta$ in (3.11) for the classical pursuit and constant bearing laws were set to 10. A trial would terminate when the separation between pursuer and evader fell below a pre-specified capture distance (set to 0.05 distance units), at which point the capture time could be computed. For the constant bearing law, a fixed constant bearing angle $\theta = 0.3$ rad was used, i.e. we took

$$R = \begin{bmatrix}\cos\theta & -\sin\theta\\ \sin\theta & \cos\theta\end{bmatrix}, \quad \theta = 0.3, \tag{4.14}$$

in (3.11).

[Figure 3: square of possible pursuer initial positions, extending from −10 to 10 on each axis, with the evader initial position at the origin.]

Figure 3. The initial position of the evader is always (0, 0). For each trial, the initial position of the pursuer is randomly chosen from a uniform distribution on the square $[-10, 10] \times [-10, 10]$.

Examples of specific trials are shown in figure 4. Phase portraits in the simplex for six different simulations are shown in figure 5. For simulations 1, 3 and 5, the pay-offs are defined by (4.12). For simulations 2, 4 and 6, the pay-offs are defined by (4.13). In simulations 1 and 2, the following collection of evader trajectories is used at each generation: one linear path, one circular path, one oscillatory path and 15 (approximately) piecewise linear paths, so that $m = 18$. In simulations 3 and 4, 75 (approximately) piecewise linear paths are used, so that $m = 75$. Comparing simulations 3 and 4 with simulations 1 and 2, the trajectories are observed to be smoother for simulations 3 and 4. This is because more trials are used per generation, and averaging over more trials reduces the sampling variability of the pay-offs.

In simulations 5 and 6, only circular evader paths were used, and instead of using a constant evader steering control $u_e = 0.5$, the steering control $u_e$ for each trial was chosen uniformly between 0 and 1. The number of trials per generation used was $m = 50$. As simulations 5 and 6 show, constant bearing pursuit is not as effective for this choice of circular evader path.

5. Analysis of the game

[Figure 4, panels (a)–(c): evader and pursuer trajectories plotted against horizontal distance.]

Figure 4. (a) An example of classical pursuit: the pursuer aligns its direction of motion with the baselines. (b) An example of CATD pursuit: the pursuer tries to keep the baselines parallel. (c) An example of constant bearing pursuit: the pursuer tries to keep the angle between its heading and the baselines constant at 0.3 rad. In all three panels, the evader exhibits random motion and baselines are shown as dotted lines.

Here, we work out the properties of the mean-field equations (4.10) using the Riemannian geometry of the simplex as an embedded submanifold of the positive orthant in $\mathbb R^n$. The key ingredient for this analysis is a Riemannian metric on the interior of the simplex, which can be derived from one on the positive orthant. The former is well known and attributed to Fisher's information matrix (Fisher 1922), while the latter (first referred to as the Shahshahani metric by …) was 'invented' by Shahshahani, apparently without prior knowledge of the work of Fisher and Rao. The information theorist Campbell (1985, 1995) was well aware of this set of ideas and showed the relationship between them and certain optimization questions in data compression. In this context, he showed the relevance of what we term here the mean-field equations, but without any connection to (evolutionary) game theory.

(a) Dynamics preserve the simplex

Our analysis starts with the mean-field equations (4.10). We first observe that (4.10) preserves the (interior of the) simplex

$$\Delta^{0}_{n-1} = \Big\{ p \in \mathbb{R}^{n} \;\Big|\; \sum_{i=1}^{n} p_i = 1 \ \text{and} \ p_i > 0 \ \ \forall\, i = 1, \dots, n \Big\}; \tag{5.1}$$

[Figure 5: simulated phase plots (a) to (f) on the simplex whose vertices are labelled constant bearing, CATD and classical pursuit.]

Figure 5. Simulated phase plots. Pay-offs for simulations (a) 1, (c) 3 and (e) 5 are defined by (4.12), while pay-offs for simulations (b) 2, (d) 4 and (f) 6 are defined by (4.13). In simulations 1 and 2, each generation includes one trial with a linear evader path, one with a circular evader path (turning rate $r = 0.5$), one with a periodic evader path and 15 trials of random evader paths, resulting in $m = 18$ trials in total. In simulations 3 and 4, $m = 75$ trials with random evader paths are used in each generation. In simulations 5 and 6, $m = 50$ trials with circular evader paths are used in each generation (and the turning rate $r$ is randomly selected for each trial from a uniform distribution on the interval $[0, 1]$).

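in other words, if $p(0) \in \Delta^{0}_{n-1}$, then $p(t) \in \Delta^{0}_{n-1}$ for all future times $t > 0$. To see this, note that, writing $\bar{c} = \sum_{j=1}^{n} c_j p_j$, on the simplex

$$\sum_{i=1}^{n} \dot{p}_i = \sum_{i=1}^{n} p_i c_i - \bar{c} \sum_{i=1}^{n} p_i = \bar{c} - \bar{c} = 0. \tag{5.2}$$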

Next, for each $i$, we may write (4.10) as

$$\dot{p}_i = p_i (1 - p_i) c_i - p_i \sum_{j \neq i} p_j c_j, \tag{5.3}$$

from which we may conclude (since $p_i > 0$ and $c_i > 0$ $\forall\, i$, and hence $p_i \le 1$ on the simplex) that

$$\dot{p}_i \ \ge\ -p_i \sum_{j \neq i} p_j c_j \ \ge\ -p_i \sum_{j \neq i} c_j \tag{5.4}$$

and, therefore,

$$p_i(t) \ \ge\ p_i(0) \exp\Big(-t \sum_{j \neq i} c_j\Big) > 0 \tag{5.5}$$

for all $t > 0$.
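The invariance argument in (5.2) to (5.5) can be checked numerically. The sketch below integrates (4.10) with a classical fourth-order Runge-Kutta scheme (our own choice of integrator, not one prescribed in the paper) for hypothetical pay-offs `c` and a hypothetical initial condition `p0`, and then checks that the components keep summing to 1, stay positive and respect the lower bound (5.5). The function names `replicator_rhs`, `rk4_step` and `simulate` are illustrative only.

```python
import math


def replicator_rhs(p, c):
    """Right-hand side of the mean-field equations (4.10): p_i (c_i - sum_j c_j p_j)."""
    avg = sum(cj * pj for cj, pj in zip(c, p))
    return [pi * (ci - avg) for pi, ci in zip(p, c)]


def rk4_step(p, c, h):
    """One classical fourth-order Runge-Kutta step of size h."""
    k1 = replicator_rhs(p, c)
    k2 = replicator_rhs([x + 0.5 * h * k for x, k in zip(p, k1)], c)
    k3 = replicator_rhs([x + 0.5 * h * k for x, k in zip(p, k2)], c)
    k4 = replicator_rhs([x + h * k for x, k in zip(p, k3)], c)
    return [x + h / 6.0 * (a + 2.0 * b + 2.0 * d + e)
            for x, a, b, d, e in zip(p, k1, k2, k3, k4)]


def simulate(p0, c, t_final, steps):
    """Integrate (4.10) from p0; return the states at every step and the step size."""
    h = t_final / steps
    traj = [list(p0)]
    for _ in range(steps):
        traj.append(rk4_step(traj[-1], c, h))
    return traj, h


# Hypothetical positive pay-offs and an interior initial condition.
c = [0.4, 0.7, 1.0]
p0 = [0.5, 0.3, 0.2]
traj, h = simulate(p0, c, t_final=10.0, steps=1000)

# Largest deviation of sum_i p_i from 1, and smallest component, over the run.
max_drift = max(abs(sum(p) - 1.0) for p in traj)
min_component = min(min(p) for p in traj)

# Lower bound (5.5): p_i(t) >= p_i(0) exp(-t sum_{j != i} c_j).
bound_holds = all(
    traj[k][i] >= p0[i] * math.exp(-k * h * (sum(c) - c[i]))
    for k in range(len(traj))
    for i in range(len(c))
)
print(max_drift, min_component, bound_holds)
```

Each Runge-Kutta stage is evaluated at a point whose components still sum to 1, so in exact arithmetic the scheme keeps the iterates on the simplex; only round-off contributes to `max_drift`.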

(b) Dynamics on the positive orthant project onto the simplex

Here, we follow Campbell (1985) to derive the mean-field equations as an orthogonal projection (with respect to the Fisher–Rao–Shahshahani metric) of a linear vector field (see (5.6) below) in the positive orthant.

To interpret (4.10) geometrically, it is useful to introduce the related system

$$\dot{p}_i = p_i c_i, \qquad i = 1, \dots, n, \tag{5.6}$$

for $c_i > 0$ $\forall\, i$, evolving on the positive orthant $\mathbb{R}^{n}_{+}$. Note that $\Delta^{0}_{n-1}$ is an embedded submanifold of $\mathbb{R}^{n}_{+}$. Let $p \in \Delta^{0}_{n-1}$ denote a point on the (interior of the) simplex. Then, we say that $v \in T_p\mathbb{R}^{n}_{+}$ is orthogonal to (equivalently, is a normal vector to) $\Delta^{0}_{n-1}$ if

$$\langle v, w \rangle_p = 0 \qquad \forall\, w \in T_p\Delta^{0}_{n-1}, \tag{5.7}$$

where

$$w \in T_p\Delta^{0}_{n-1} \iff \sum_{i=1}^{n} w_i = 0, \tag{5.8}$$

and the Shahshahani inner product $\langle \cdot, \cdot \rangle_p$ on $\mathbb{R}^{n}_{+}$ is defined by

$$\langle v, w \rangle_p = \sum_{i=1}^{n} \frac{v_i w_i}{p_i}\,|p|, \qquad |p| = \sum_{i=1}^{n} p_i. \tag{5.9}$$

Condition (5.7) for orthogonality can then be written as

$$\sum_{i=1}^{n} \frac{v_i w_i}{p_i}\,|p| = 0. \tag{5.10}$$

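Let $p \in \Delta^{0}_{n-1}$, $w \in T_p\Delta^{0}_{n-1}$ and consider $v = \alpha p \in T_p\mathbb{R}^{n}_{+}$, where $\alpha \in \mathbb{R}$. Then, since $|p| = 1$ on the simplex,

$$\sum_{i=1}^{n} \frac{v_i w_i}{p_i}\,|p| = \sum_{i=1}^{n} \frac{v_i w_i}{p_i} = \alpha \sum_{i=1}^{n} w_i = 0. \tag{5.11}$$

Thus, $v = \alpha p$ is a normal vector to $\Delta^{0}_{n-1}$ at $p$. We also have

$$\|v\|_p^2 = \sum_{i=1}^{n} \frac{(\alpha p_i)(\alpha p_i)}{p_i} = \alpha^2 \sum_{i=1}^{n} p_i = \alpha^2, \tag{5.12}$$

so that $\|v\|_p^2 = 1$ iff $|\alpha| = 1$.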
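As a quick numerical sanity check of (5.9), (5.11) and (5.12), the sketch below implements the Shahshahani inner product and verifies, at a randomly chosen interior point of the simplex, that $v = \alpha p$ is orthogonal to a random tangent vector and has squared norm $\alpha^2$. The helper name `shahshahani`, the random seed and the value $\alpha = -1.7$ are illustrative assumptions.

```python
import random


def shahshahani(v, w, p):
    """Shahshahani inner product (5.9): <v, w>_p = |p| * sum_i v_i w_i / p_i."""
    norm_p = sum(p)
    return norm_p * sum(vi * wi / pi for vi, wi, pi in zip(v, w, p))


random.seed(0)
n = 4

# A random point in the interior of the simplex.
raw = [random.uniform(0.1, 1.0) for _ in range(n)]
total = sum(raw)
p = [x / total for x in raw]

# A random tangent vector to the simplex: shifted so its components sum to 0, cf. (5.8).
w = [random.uniform(-1.0, 1.0) for _ in range(n)]
w_mean = sum(w) / n
w = [wi - w_mean for wi in w]

# The candidate normal vector v = alpha * p.
alpha = -1.7
v = [alpha * pi for pi in p]

inner = shahshahani(v, w, p)    # (5.11): should vanish up to round-off
norm_sq = shahshahani(v, v, p)  # (5.12): should equal alpha ** 2
print(inner, norm_sq)
```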

In (5.6), the right-hand side is a tangent vector to $\mathbb{R}^{n}_{+}$ at $p \in \Delta^{0}_{n-1}$, defined by

$$v = \begin{bmatrix} c_1 p_1 \\ \vdots \\ c_n p_n \end{bmatrix} \in T_p\mathbb{R}^{n}_{+}. \tag{5.13}$$

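Suppose we project $v$ orthogonally onto $T_p\Delta^{0}_{n-1}$. Defining

$$N = \begin{bmatrix} p_1 \\ \vdots \\ p_n \end{bmatrix} \in T_p\mathbb{R}^{n}_{+} \tag{5.14}$$

to be the unit normal (this is $v = \alpha p$ from (5.12) with $\alpha = 1$), we have

$$\big[v - \langle v, N \rangle_p N\big]_i = c_i p_i - \Big(\sum_{j=1}^{n} \frac{c_j p_j\, p_j}{p_j}\Big) p_i = p_i \Big(c_i - \sum_{j=1}^{n} c_j p_j\Big), \qquad i = 1, \dots, n, \tag{5.15}$$

which is simply the right-hand side of the dynamics (4.10) on the simplex; furthermore,

$$v - \langle v, N \rangle_p N \in T_p\Delta^{0}_{n-1}. \tag{5.16}$$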
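The projection identity (5.15) can be reproduced in a few lines. The sketch below, with hypothetical pay-offs `c` and a hypothetical interior point `p`, removes from $v = (c_1 p_1, \dots, c_n p_n)$ its Shahshahani component along $N = p$ and compares the result with the right-hand side of (4.10). The function names are our own.

```python
def shahshahani(v, w, p):
    """Shahshahani inner product (5.9): <v, w>_p = |p| * sum_i v_i w_i / p_i."""
    norm_p = sum(p)
    return norm_p * sum(vi * wi / pi for vi, wi, pi in zip(v, w, p))


def project_onto_simplex_tangent(v, p):
    """Subtract <v, N>_p N with the unit normal N = p of (5.14), as in (5.15)."""
    coeff = shahshahani(v, p, p)
    return [vi - coeff * pi for vi, pi in zip(v, p)]


def replicator_rhs(p, c):
    """Right-hand side of (4.10): p_i (c_i - sum_j c_j p_j)."""
    avg = sum(cj * pj for cj, pj in zip(c, p))
    return [pi * (ci - avg) for pi, ci in zip(p, c)]


c = [0.3, 0.9, 0.5, 1.2]               # hypothetical positive pay-offs
p = [0.1, 0.2, 0.3, 0.4]               # a point in the interior of the simplex
v = [ci * pi for ci, pi in zip(c, p)]  # right-hand side of (5.6), i.e. v in (5.13)

projected = project_onto_simplex_tangent(v, p)
expected = replicator_rhs(p, c)
print(projected)
print(expected)
```

The components of `projected` also sum to zero, which is the tangency statement (5.16) expressed through (5.8).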

We can use the observation that (4.10) is a projection (with respect to the

Shahshahani inner product) of (5.6) onto the simplex to explicitly solve (4.10).

The solution of (5.6) is just

$$p_i(t) = p_i(0)\, e^{t c_i}, \qquad i = 1, \dots, n. \tag{5.17}$$

We can verify that

$$p_i(t) = \frac{p_i(0)\, e^{t c_i}}{\sum_{j=1}^{n} p_j(0)\, e^{t c_j}}, \qquad i = 1, \dots, n, \tag{5.18}$$

solves (4.10) by differentiating it with respect to time:

$$\begin{aligned} \dot{p}_i(t) &= \frac{p_i(0)\, c_i\, e^{t c_i}}{\sum_{j=1}^{n} p_j(0)\, e^{t c_j}} - \frac{p_i(0)\, e^{t c_i} \sum_{j=1}^{n} p_j(0)\, c_j\, e^{t c_j}}{\big(\sum_{j=1}^{n} p_j(0)\, e^{t c_j}\big)^2} \\ &= p_i c_i - p_i \sum_{j=1}^{n} \frac{p_j(0)\, c_j\, e^{t c_j}}{\sum_{k=1}^{n} p_k(0)\, e^{t c_k}} \\ &= p_i c_i - p_i \sum_{j=1}^{n} p_j c_j. \end{aligned} \tag{5.19}$$

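Furthermore, the components of (5.18) are positive and sum to 1 for every $t$, and at $t = 0$ (5.18) reduces to $p_i(0)$ because $\sum_{j} p_j(0) = 1$; hence (5.18) is the solution of (4.10) with initial condition $p(0) \in \Delta^{0}_{n-1}$.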
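To see (5.18) and (5.19) agree with the dynamics in practice, the sketch below integrates (4.10) numerically with a classical fourth-order Runge-Kutta scheme (an integrator we chose for illustration) and compares the result with the closed form (5.18) at a final time. The pay-offs `c`, initial condition `p0`, horizon and step count are hypothetical.

```python
import math


def replicator_rhs(p, c):
    """Right-hand side of (4.10): p_i (c_i - sum_j c_j p_j)."""
    avg = sum(cj * pj for cj, pj in zip(c, p))
    return [pi * (ci - avg) for pi, ci in zip(p, c)]


def rk4_step(p, c, h):
    """One classical fourth-order Runge-Kutta step of size h."""
    k1 = replicator_rhs(p, c)
    k2 = replicator_rhs([x + 0.5 * h * k for x, k in zip(p, k1)], c)
    k3 = replicator_rhs([x + 0.5 * h * k for x, k in zip(p, k2)], c)
    k4 = replicator_rhs([x + h * k for x, k in zip(p, k3)], c)
    return [x + h / 6.0 * (a + 2.0 * b + 2.0 * d + e)
            for x, a, b, d, e in zip(p, k1, k2, k3, k4)]


def closed_form(p0, c, t):
    """Explicit solution (5.18): p_i(t) = p_i(0) e^{t c_i} / sum_j p_j(0) e^{t c_j}."""
    weights = [pi * math.exp(t * ci) for pi, ci in zip(p0, c)]
    total = sum(weights)
    return [wt / total for wt in weights]


c = [0.3, 0.9, 0.5]   # hypothetical positive pay-offs
p0 = [0.6, 0.1, 0.3]  # interior initial condition
t_final, steps = 5.0, 2000

p = list(p0)
for _ in range(steps):
    p = rk4_step(p, c, t_final / steps)

exact = closed_form(p0, c, t_final)
max_err = max(abs(a - b) for a, b in zip(p, exact))
print(max_err)
```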

(c) Asymptotic behaviour of trajectories on the simplex

Suppose the expected pay-offs $c_i$, $i = 1, \dots, n$, satisfy

$$c_1 < c_2 < \cdots < c_n. \tag{5.20}$$

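Then, from (5.18), we have

$$p_i(t) = \frac{p_i(0)\, e^{t c_i}\, e^{-t c_n}}{\sum_{j=1}^{n} p_j(0)\, e^{t c_j}\, e^{-t c_n}}, \qquad i = 1, \dots, n, \tag{5.21}$$

so that, since every term of the denominator with $j < n$ decays to zero,

$$\lim_{t \to \infty} p_i(t) = \frac{p_i(0)}{p_n(0)} \lim_{t \to \infty} e^{-t(c_n - c_i)} = \begin{cases} 0, & i = 1, \dots, n-1, \\ 1, & i = n, \end{cases} \tag{5.22}$$

or

$$\lim_{t \to \infty} p(t) = \begin{bmatrix} 0 \\ \vdots \\ 0 \\ 1 \end{bmatrix}. \tag{5.23}$$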
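The rescaling by $e^{-t c_n}$ in (5.21) is also the numerically sensible way to evaluate (5.18) for large $t$. The sketch below (function name and data hypothetical) shifts every exponent by the largest pay-off, so no exponent is positive, and shows the trajectory approaching the vertex (5.23) even when the best strategy starts out rare.

```python
import math


def closed_form_stable(p0, c, t):
    """Evaluate (5.18) in the rescaled form (5.21): multiply numerator and
    denominator by e^{-t c_max}, so every exponent is <= 0 and nothing overflows."""
    c_max = max(c)
    weights = [pi * math.exp(-t * (c_max - ci)) for pi, ci in zip(p0, c)]
    total = sum(weights)
    return [wt / total for wt in weights]


c = [0.2, 0.5, 0.9]      # hypothetical pay-offs ordered as in (5.20)
p0 = [0.45, 0.45, 0.10]  # the best strategy starts out rare
for t in (0.0, 10.0, 100.0, 1000.0):
    print(t, [round(x, 6) for x in closed_form_stable(p0, c, t)])
```

Evaluating (5.18) directly with `math.exp(t * c_i)` would raise `OverflowError` once `t * c_i` exceeds roughly 709, which is why the shifted form is used here.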

Thus, trajectories starting in the interior of the simplex converge to a vertex of the simplex, namely the vertex corresponding to the strategy with the largest expected pay-off. We can now return to the game-theoretic interpretation. For an evolutionary game with an underlying symmetric bi-matrix stage game, it is a theorem (Nachbar 1990) that if a trajectory of the evolutionary dynamics initialized in the interior of the simplex converges as $t \to \infty$ to a limit (not necessarily in the interior) in the simplex, then the limit is a symmetric Nash equilibrium strategy of the stage game. In the present instance, the matrix of the bi-matrix game is $A = [a_{ij}]$ with $a_{ij} = c_i$ independent of $j$, due to the 'game against nature' aspect of our problem. Because the pay-off to strategy $i$ does not depend on the opponent's strategy, strategy $n$ strictly dominates all others under (5.20), so the limit (5.23) is in fact the unique symmetric Nash equilibrium.

(d) Ascent on the simplex

Because trajectories of the mean-field equation (4.10) converge on the simplex, it is natural to look for an underlying optimality principle. The Fisher–Rao–Shahshahani metric (or information geometry) of the simplex yields an affirmative answer, by allowing us to show that the mean-field equations are indeed gradient dynamics.

appears not to have any use for this property, but in the setting of population genetics,

exploits the gradient property of a related mean-field equation to derive the Fisher fundamental theorem and the Kimura maximal principle of genetics.

The mean-field equation (4.10) also arises in the context of molecular self-organization (

Ku ), where a (Kimura-type) maximal principle is

stated, but without reference to the Fisher–Rao–Shahshahani metric (see also

for this remark).

Proposition 5.1. Let $J = \sum_{j=1}^{n} c_j p_j$. Then

$$\dot{p}_i = p_i \Big(c_i - \sum_{j=1}^{n} c_j p_j\Big) = (\operatorname{grad} J)_i, \tag{5.24}$$

where the gradient is defined with respect to the Fisher–Rao–Shahshahani metric,

$$G = [g_{ij}] = \operatorname{diag}(1/p_1, 1/p_2, \dots, 1/p_n), \tag{5.25}$$

on $\Delta^{0}_{n-1}$.

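Proof. Given a smooth manifold $M$ with local coordinates $x_1, x_2, \dots, x_n$ and metric tensor $g_{ij}$, for a vector $w \in T_x M$, we have (by definition of the vector field $\operatorname{grad} J$)

$$dJ(w) = \sum_{i=1}^{n} \frac{\partial J}{\partial x_i} w_i = \langle \operatorname{grad} J, w \rangle = \operatorname{grad} J \cdot G w. \tag{5.26}$$

Since $G$ is symmetric, (5.26) implies that

$$G \operatorname{grad} J = \frac{\partial J}{\partial x} \tag{5.27}$$

or

$$\operatorname{grad} J = G^{-1} \frac{\partial J}{\partial x}. \tag{5.28}$$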
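Formula (5.28) is easy to exercise for the diagonal metric $G = |p| \operatorname{diag}(1/p_1, \dots, 1/p_n)$ used on the positive orthant in this proof. The sketch below computes $\operatorname{grad} J = G^{-1}\, \partial J/\partial p$ for $J = \sum_j c_j p_j$ at a hypothetical point off the simplex and checks the defining property (5.26) against an arbitrary vector $w$. The names `metric_diag` and `riemannian_gradient` are our own.

```python
import random


def metric_diag(p):
    """Diagonal entries |p| / p_i of the Shahshahani metric on the positive orthant."""
    norm_p = sum(p)
    return [norm_p / pi for pi in p]


def riemannian_gradient(dJ, p):
    """grad J = G^{-1} dJ/dx, cf. (5.28); G is diagonal, so inversion is entrywise."""
    return [d / g for d, g in zip(dJ, metric_diag(p))]


random.seed(1)
c = [0.3, 0.9, 0.5, 1.2]                   # hypothetical pay-offs; dJ/dp_i = c_i
p = [random.uniform(0.1, 2.0) for _ in c]  # a point of the positive orthant, off the simplex
grad = riemannian_gradient(c, p)

# Defining property (5.26): dJ(w) = grad J . G w for an arbitrary vector w.
w = [random.uniform(-1.0, 1.0) for _ in c]
lhs = sum(ci * wi for ci, wi in zip(c, w))
rhs = sum(gi * Gi * wi for gi, Gi, wi in zip(grad, metric_diag(p), w))
print(lhs, rhs)
```

The resulting gradient has components $p_i c_i / |p|$, which is the orthant gradient field that the proof goes on to restrict to the simplex.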

There is a subtlety in the calculation because $p_1, p_2, \dots, p_n$ are not local coordinates on the simplex, even though they are suitable local coordinates for $\mathbb{R}^{n}_{+}$. Identifying $M$ with $\mathbb{R}^{n}_{+}$, $x$ with $p$, and $G$ with

$$G = |p| \begin{bmatrix} 1/p_1 & & 0 \\ & \ddots & \\ 0 & & 1/p_n \end{bmatrix} \tag{5.29}$$

(which corresponds to (5.9) and restricts to (5.25) on the simplex), we have

$$\frac{\partial J}{\partial p_i} = c_i, \qquad i = 1, \dots, n, \tag{5.30}$$

and therefore

$$\operatorname{grad} J = G^{-1} \frac{\partial J}{\partial p} = \frac{1}{|p|} \begin{bmatrix} p_1 & & 0 \\ & \ddots & \\ 0 & & p_n \end{bmatrix} \begin{bmatrix} c_1 \\ \vdots \\ c_n \end{bmatrix} \tag{5.31}$$

on $\mathbb{R}^{n}_{+}$. We thus obtain a gradient ascent equation on $\mathbb{R}^{n}_{+}$:

$$\dot{p} = \operatorname{grad} J = \frac{1}{|p|} \begin{bmatrix} p_1 c_1 \\ \vdots \\ p_n c_n \end{bmatrix}. \tag{5.32}$$

Next, we define

$$\iota : \Delta^{0}_{n-1} \to \mathbb{R}^{n}_{+}, \qquad p \mapsto p; \tag{5.33}$$

i.e. $\iota$ simply represents the inclusion map of the simplex into the positive orthant. Since

$$J : \mathbb{R}^{n}_{+} \to \mathbb{R}, \tag{5.34}$$

we have the pullback of $J$ by $\iota$ defined as

$$\iota^{*}J : \Delta^{0}_{n-1} \to \mathbb{R}, \qquad p \mapsto J(\iota(p)) = (J \circ \iota)(p). \tag{5.35}$$

To verify that (4.10) corresponds to gradient dynamics on the simplex, we need to show that

$$\begin{aligned} d[J(\iota(p))](w) &= d[(J \circ \iota)(p)](w) = d[(\iota^{*}J)(p)](w) = \langle \operatorname{grad}(J \circ \iota), w \rangle_p \\ &= \sum_{i=1}^{n} p_i \Big(c_i - \sum_{j=1}^{n} c_j p_j\Big) \frac{w_i}{p_i}\,|p| = \sum_{i=1}^{n} \Big(c_i - \sum_{j=1}^{n} c_j p_j\Big) w_i, \end{aligned} \tag{5.36}$$

where $w \in T_p\Delta^{0}_{n-1}$, and we have used the fact that $|p| = 1$ on the simplex. But using the identity $\iota^{*} d = d\, \iota^{*}$, i.e. pullback and (exterior) differentiation commute for smooth manifolds, we have

$$\begin{aligned} d[J(\iota(p))](w) &= d[(\iota^{*}J)(p)](w) = [\iota^{*} dJ](p)(w) = \sum_{i=1}^{n} c_i w_i \\ &= \sum_{i=1}^{n} c_i w_i - \Big(\sum_{j=1}^{n} c_j p_j\Big) \sum_{i=1}^{n} w_i = \sum_{i=1}^{n} \Big(c_i - \sum_{j=1}^{n} c_j p_j\Big) w_i, \end{aligned} \tag{5.37}$$

where we have used (5.8). Since the simplex is invariant under (4.10), we may thus conclude that (4.10) is a gradient ascent equation in $\Delta^{0}_{n-1}$. ∎
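Proposition 5.1 can also be confirmed without the pullback argument, by computing the Riemannian gradient on the simplex directly: it is the unique tangent vector $g$ (with $\sum_i g_i = 0$) satisfying $\langle g, w \rangle_p = dJ(w)$ for every tangent $w$. The sketch below (all names and data hypothetical) expands $g$ in the tangent basis $b_k = e_k - e_n$, solves the resulting Gram system by Gaussian elimination and compares $g$ with the right-hand side of (4.10).

```python
def shahshahani(v, w, p):
    """Shahshahani inner product (5.9): <v, w>_p = |p| * sum_i v_i w_i / p_i."""
    norm_p = sum(p)
    return norm_p * sum(vi * wi / pi for vi, wi, pi in zip(v, w, p))


def solve(M, r):
    """Solve M a = r by Gaussian elimination with partial pivoting."""
    n = len(r)
    A = [row[:] + [ri] for row, ri in zip(M, r)]
    for col in range(n):
        piv = max(range(col, n), key=lambda k: abs(A[k][col]))
        A[col], A[piv] = A[piv], A[col]
        for k in range(col + 1, n):
            f = A[k][col] / A[col][col]
            for j in range(col, n + 1):
                A[k][j] -= f * A[col][j]
    a = [0.0] * n
    for k in range(n - 1, -1, -1):
        a[k] = (A[k][n] - sum(A[k][j] * a[j] for j in range(k + 1, n))) / A[k][k]
    return a


def simplex_gradient(c, p):
    """Shahshahani gradient of J(p) = sum_j c_j p_j restricted to the simplex."""
    n = len(p)
    # Tangent basis b_k = e_k - e_n, k = 1..n-1; each b_k sums to zero, cf. (5.8).
    basis = [[(1.0 if i == k else 0.0) - (1.0 if i == n - 1 else 0.0) for i in range(n)]
             for k in range(n - 1)]
    M = [[shahshahani(bk, bl, p) for bl in basis] for bk in basis]  # Gram matrix
    r = [sum(ci * bi for ci, bi in zip(c, bk)) for bk in basis]     # dJ(b_k)
    a = solve(M, r)
    return [sum(a[k] * basis[k][i] for k in range(n - 1)) for i in range(n)]


c = [0.3, 0.9, 0.5, 1.2]  # hypothetical positive pay-offs
p = [0.1, 0.2, 0.3, 0.4]  # a point in the interior of the simplex
g = simplex_gradient(c, p)
avg = sum(ci * pi for ci, pi in zip(c, p))
replicator = [pi * (ci - avg) for pi, ci in zip(p, c)]
print(g)
print(replicator)
```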

Remark 5.2. The matrix $G$ of the Fisher–Rao–Shahshahani metric is simply the Fisher information matrix, i.e. the Hessian of the relative entropy or Kullback–Leibler divergence (Cover & Thomas 2006) on the interior of the simplex. For

$$P = (p_1, \dots, p_n) \in \Delta^{0}_{n-1} \quad \text{and} \quad Q = (q_1, \dots, q_n) \in \Delta^{0}_{n-1}, \tag{5.38}$$

the Kullback–Leibler divergence is

$$\mathrm{KL}_P(Q) = D(P \,\|\, Q) = \sum_{i=1}^{n} p_i \ln \frac{p_i}{q_i}. \tag{5.39}$$

It is an elementary calculation (using $\partial^2 \mathrm{KL}_P / \partial q_i \partial q_j = \delta_{ij}\, p_i / q_i^2$) to see that the Fisher information is then

$$F = D^2 \mathrm{KL}_P(Q)\big|_{Q = P} = \begin{bmatrix} 1/p_1 & & 0 \\ & \ddots & \\ 0 & & 1/p_n \end{bmatrix}. \tag{5.40}$$
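The "elementary calculation" behind (5.40) can be checked with finite differences. The sketch below treats $q_1, \dots, q_n$ as free coordinates on the positive orthant (as in (5.40)), approximates the Hessian of $Q \mapsto D(P \,\|\, Q)$ at $Q = P$ with central differences, and compares it with $\operatorname{diag}(1/p_1, \dots, 1/p_n)$. The step size `h` and the point `p` are hypothetical choices.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence (5.39): D(P || Q) = sum_i p_i ln(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def hessian_in_q(p, q, h=1e-4):
    """Central finite-difference Hessian of Q -> D(P || Q) on the positive orthant."""
    n = len(q)

    def f(dq):
        return kl_divergence(p, [qi + d for qi, d in zip(q, dq)])

    def shift(*pairs):
        # Build a displacement vector from (index, amount) pairs.
        d = [0.0] * n
        for idx, amount in pairs:
            d[idx] += amount
        return d

    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                H[i][i] = (f(shift((i, h))) - 2.0 * f(shift()) + f(shift((i, -h)))) / h ** 2
            else:
                H[i][j] = (f(shift((i, h), (j, h))) - f(shift((i, h), (j, -h)))
                           - f(shift((i, -h), (j, h))) + f(shift((i, -h), (j, -h)))) / (4.0 * h ** 2)
    return H


p = [0.1, 0.2, 0.3, 0.4]  # hypothetical interior point of the simplex
H = hessian_in_q(p, p)
for row in H:
    print([round(x, 4) for x in row])
```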

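Remark 5.3. The ascent on the simplex solves a linear programming problem: maximize $J(p) = \sum_{j=1}^{n} c_j p_j$ over the simplex, where $J$ is simply the population average fitness function of the evolutionary game. Under (5.20), the maximum of this linear function over the closed simplex is attained at the vertex (5.23).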
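Along solutions of (4.10), the population average fitness never decreases: using $\sum_i p_i = 1$, one finds $\dot{J} = \sum_i c_i \dot{p}_i = \sum_i p_i c_i^2 - \big(\sum_j c_j p_j\big)^2 = \sum_i p_i \big(c_i - \sum_j c_j p_j\big)^2 \ge 0$. The sketch below evaluates $J$ along the closed-form trajectory (5.18), written in the overflow-safe form (5.21), for hypothetical pay-offs, and checks that $J$ is non-decreasing and approaches the linear-programming optimum $\max_i c_i$.

```python
import math


def closed_form(p0, c, t):
    """Solution (5.18) evaluated in the rescaled form (5.21) to avoid overflow."""
    c_max = max(c)
    weights = [pi * math.exp(-t * (c_max - ci)) for pi, ci in zip(p0, c)]
    total = sum(weights)
    return [wt / total for wt in weights]


def average_fitness(p, c):
    """Population average fitness J(p) = sum_j c_j p_j."""
    return sum(ci * pi for ci, pi in zip(c, p))


c = [0.2, 0.5, 0.9, 0.7]        # hypothetical pay-offs (best strategy is index 2)
p0 = [0.4, 0.3, 0.05, 0.25]     # interior initial condition
times = [0.5 * k for k in range(200)]
J_values = [average_fitness(closed_form(p0, c, t), c) for t in times]
print(J_values[0], J_values[-1], max(c))
```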


6. Conclusion

Pursuit phenomena have an essential role in nature. In this paper, we have investigated a model of pursuit with different strategies and derived steering laws for the pursuer to execute these strategies. We then posed the question of possible preference between these strategies. Empirical studies of dragonflies engaged in aerial territorial battles (Mizutani et al. 2003) and of echolocating bats catching free-flying insect prey in a laboratory flight room (Ghose et al. 2006) have been influential in guiding us in addressing this question. We have investigated the apparent prevalence (see

and

for statistical analysis of bat data) of a specific strategy of pursuit, motion camouflage (or CATD) pursuit, through a set of Monte Carlo experiments that capture an evolutionary game dynamics. The results of the Monte Carlo experiments suggest maximization of fitness by adopting the motion camouflage

(CATD) pursuit. Inspired by these observations, we have formulated a model of the experiments as a mean-field dynamics for an evolutionary game with random pay-offs obeying a strong law of large numbers. Analysis of this model, following earlier work of Campbell (1985), using the Fisher–Rao–Shahshahani metric of the simplex leads to results consistent with the Monte Carlo experiments, i.e. a principle of population average fitness maximization holds.

This research was supported in part by the Air Force Office of Scientific Research under AFOSR grant FA9550710446, the Army Research Office under ARO grant W911NF0610325, the

ODDR&E MURI2007 Program grant N000140710734 (through the Office of Naval Research), the NSF-NIH Collaborative Research in Computational Neuroscience Program (CRCNS2004)

NIH-NIBIB grant 1 R01 EB004750-01, the Office of Naval Research and a University of Maryland

Summer Research Scholarship. The authors would like to gratefully acknowledge collaborations with Dr Kaushik Ghose, Dr Timothy Horiuchi and Dr Cynthia Moss, which led to the questions addressed in this paper.

References

Akin, E. 1979 The geometry of population genetics. Lecture Notes in Biomathematics, vol. 31. Berlin, Germany; Heidelberg, Germany; New York, NY: Springer.

Bishop, R. L. 1975 There is more than one way to frame a curve. Am. Math. Mon. 82, 246–251. (doi:10.2307/2319846)

Campbell, L. L. 1985 The relationship between information theory and the differential geometry approach to statistics. Inf. Sci. 35, 195–210. (doi:10.1016/0020-0255(85)90050-7)

Campbell, L. L. 1995 Averaging entropy. IEEE Trans. Inf. Theory 41, 338–339. (doi:10.1109/18.370086)

Cover, T. M. & Thomas, J. A. 2006 Elements of information theory, 2nd edn. New York, NY: Wiley.

Fisher, R. A. 1922 On the mathematical foundations of theoretical statistics. Phil. Trans. R. Soc. Lond. A 222, 309–368. (doi:10.1098/rsta.1922.0009)

Galloway, K., Justh, E. W. & Krishnaprasad, P. S. 2007 Motion camouflage in a stochastic setting. In Proc. 46th IEEE Conf. Decision and Control, pp. 1652–1659.

Ghose, K., Horiuchi, T., Krishnaprasad, P. S. & Moss, C. 2006 Echolocating bats use a nearly time-optimal strategy to intercept prey. PLoS Biol. 4, 865–873, e108. (doi:10.1371/journal.pbio.0040108)

Hofbauer, J. & Sigmund, K. 1998 Evolutionary games and population dynamics. Cambridge, UK: Cambridge University Press.

Hofbauer, J. & Sigmund, K. 2003 Evolutionary game dynamics. Bull. Am. Math. Soc. 40, 479–519. (doi:10.1090/S0273-0979-03-00988-1)

Isaacs, R. P. 1965 Differential games: a mathematical theory with applications to warfare and pursuit, control and optimization. New York, NY: Wiley.

Justh, E. W. & Krishnaprasad, P. S. 2005 Natural frames and interacting particles in three dimensions. In Proc. 44th IEEE Conf. Decision and Control, pp. 2841–2846. (http://arxiv.org/abs/math/0503390)

Justh, E. W. & Krishnaprasad, P. S. 2006 Steering laws for motion camouflage. Proc. R. Soc. A 462, 3629–3643. (doi:10.1098/rspa.2006.1742)

Ku Bull. Math. Biol. 41, 803–812. (doi:10.1007/BF02462377)

Maynard Smith, J. 1982 Evolution and the theory of games. Cambridge, UK: Cambridge University Press.

Maynard Smith, J. & Price, G. R. 1973 The logic of animal conflict. Nature 246, 15–18. (doi:10.1038/246015a0)

Mizutani, A. K., Chahl, J. S. & Srinivasan, M. V. 2003 Motion camouflage in dragonflies. Nature 423, 604. (doi:10.1038/423604a)

Nachbar, J. 1990 Evolutionary selection dynamics in games: convergence and limit properties. Int. J. Game Theory 19, 59–89. (doi:10.1007/BF01753708)

Nahin, P. J. 2007 Chases and escapes: the mathematics of pursuit and evasion. Princeton, NJ; Oxford, UK: Princeton University Press.

Nash, J. 1950 Non-cooperative games. PhD thesis, Princeton University.

Rao, C. R. 1945 Information and accuracy attainable in the estimation of statistical parameters. Bull. Calcutta Math. Soc. 37, 81–91.

Reddy, P. V. 2007 Steering laws for pursuit. MS thesis, University of Maryland.

Reddy, P. V., Justh, E. W. & Krishnaprasad, P. S. 2006 Motion camouflage in three dimensions. In Proc. 45th IEEE Conf. Decision and Control, pp. 3327–3332. (http://arxiv.org/abs/math/0603176)

Reddy, P. V., Justh, E. W. & Krishnaprasad, P. S. 2007 Motion camouflage with sensorimotor delay. In Proc. 46th IEEE Conf. Decision and Control, pp. 1660–1665.

Shahshahani, S. 1979 A new mathematical technique for the study of linkage and selection. Mem. Am. Math. Soc. 17, 1–34.

Shneydor, N. A. 1998 Missile guidance and pursuit. Chichester, UK: Horwood.

Sigmund, K. 1986 A survey of replicator equations. In Complexity, language and life: mathematical approaches, ch. 4 (eds J. L. Casti & A. Karlqvist), pp. 88–104. Berlin, Germany: Springer Verlag.

Srinivasan, M. V. & Davey, M. 1995 Strategies for active camouflage of motion. Proc. R. Soc. B 259, 19–25. (doi:10.1098/rspb.1995.0004)

Taylor, P. D. & Jonker, L. B. 1978 Evolutionarily stable strategies and game dynamics. Math. Biosci. 40, 145–156. (doi:10.1016/0025-5564(78)90077-9)

Weibull, J. W. 1995 Evolutionary game theory. Cambridge, MA: MIT Press.