

Fault Tolerance and Reliable Data Placement

Zach Miller

University of Wisconsin-Madison

zmiller@cs.wisc.edu

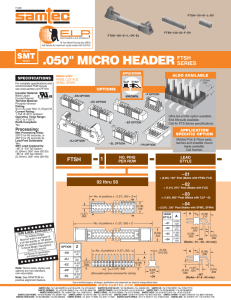

Fault Tolerant Shell (FTSH)

A grid is a harsh environment.

FTSH to the rescue!

The ease of scripting with very precise error semantics.

Exception-like structure allows scripts to be both succinct and safe.

A focus on timed repetition simplifies the most common form of recovery in a distributed system.

A carefully-vetted set of language features limits the "surprises" that haunt system programmers.

Simple Bourne script…

#!/bin/sh

cd /work/foo

rm -rf data

cp -r /fresh/data .

What if ‘/work/foo’ is unavailable?

Getting Grid Ready…

#!/bin/sh

ok=0

for attempt in 1 2 3

do

# Test the exit status of cd directly

if cd /work/foo

then

ok=1

break

fi

echo "cd failed, trying again..."

sleep 5

done

# The flag records whether any attempt succeeded

if [ "$ok" -ne 1 ]

then

echo "couldn't cd, giving up..."

exit 1

fi

Or with FTSH

#!/usr/bin/ftsh

try 5 times

cd /work/foo

rm -rf bar

cp -r /fresh/data .

end

Or with FTSH

#!/usr/bin/ftsh

try for 3 days or 100 times

cd /work/foo

rm -rf bar

cp -r /fresh/data .

end

Or with FTSH

#!/usr/bin/ftsh

try for 3 days every 1 hour

cd /work/foo

rm -rf bar

cp -r /fresh/data .

end
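For comparison, the time-bounded form (`try for 3 days every 1 hour`) can be approximated in plain Bourne shell. This is a minimal sketch, not how FTSH is implemented: `retry_for` and `refresh_data` are hypothetical helpers, the sketch assumes `date +%s` prints seconds since the epoch on the target system, and it does not cancel a command that hangs.

```shell
#!/bin/sh
# retry_for TOTAL_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Hypothetical helper (not part of FTSH): reruns COMMAND every
# INTERVAL_SECONDS until it succeeds or TOTAL_SECONDS have elapsed.
# Assumes `date +%s` prints seconds since the epoch.
retry_for() {
    rf_total=$1
    rf_interval=$2
    shift 2
    rf_start=$(date +%s)
    while :
    do
        if "$@"
        then
            return 0
        fi
        rf_now=$(date +%s)
        # Give up if the next attempt would start after the deadline.
        if [ $((rf_now - rf_start + rf_interval)) -gt "$rf_total" ]
        then
            return 1
        fi
        sleep "$rf_interval"
    done
}

# Group the steps in one function so a failure anywhere reruns all of them.
refresh_data() {
    cd /work/foo && rm -rf bar && cp -r /fresh/data .
}

# Example call (3 days = 259200 s, 1 hour = 3600 s):
#   retry_for 259200 3600 refresh_data
```

Even this small helper needs explicit deadline arithmetic and a wrapper function, which is the bookkeeping the FTSH `try` block hides.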

Exponential Backoff Example

#

# command_wrapper /path/to/command max_attempts max_time each_time initial_delay

#

# zmiller@cs.wisc.edu 2003-08-02

#

# Assumption: the starting delay is initial_delay, the fifth argument

delay=$5

try for $3 hours or $2 times

try 1 time for $4 hours

$1 $6 $7 $8 $9 $10 $11 $12

catch

echo "ERROR: $1 $6 $7 $8 $9 $10 $11 $12"

echo "sleeping for $delay seconds"

sleep $delay

delay=$delay .mul. 2

failure

end

catch

echo ERROR: all attempts failed... returning failure

exit 1

end
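The doubling-delay pattern in this example can also be written in plain Bourne shell. The sketch below is an illustration under stated assumptions, not a translation of the FTSH script: `backoff_retry` is a hypothetical helper, and it bounds only the number of attempts, not the total or per-attempt time.

```shell
#!/bin/sh
# backoff_retry MAX_ATTEMPTS INITIAL_DELAY COMMAND [ARGS...]
# Hypothetical helper: retries COMMAND up to MAX_ATTEMPTS times,
# doubling the sleep between attempts after each failure.
backoff_retry() {
    bo_max=$1
    bo_delay=$2
    shift 2
    bo_n=1
    while [ "$bo_n" -le "$bo_max" ]
    do
        if "$@"
        then
            return 0
        fi
        echo "ERROR: $* (attempt $bo_n of $bo_max)" >&2
        # Sleep only if another attempt will follow.
        if [ "$bo_n" -lt "$bo_max" ]
        then
            echo "sleeping for $bo_delay seconds" >&2
            sleep "$bo_delay"
            bo_delay=$((bo_delay * 2))
        fi
        bo_n=$((bo_n + 1))
    done
    echo "ERROR: all attempts failed... returning failure" >&2
    return 1
}

# Example call: up to 5 attempts, waiting 1, 2, 4, 8 seconds between them.
#   backoff_retry 5 1 /path/to/command
```

Doubling the delay keeps a struggling server from being hit at a fixed rate by every waiting client.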

Another quick example…

hosts="mirror1.wisc.edu mirror2.wisc.edu mirror3.wisc.edu"

forany h in ${hosts}

echo "Attempting host ${h}"

wget http://${h}/some-file

end

echo "Got file from ${h}"

File transfers may be better served by Stork
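A rough Bourne-shell counterpart of the `forany` example is sketched below. `try_any` and `fetch_from` are hypothetical names, and trying the hosts strictly in the order given is an assumption of this sketch rather than a statement about FTSH.

```shell
#!/bin/sh
# fetch_from HOST: stand-in for the real transfer command.
fetch_from() {
    wget "http://$1/some-file"
}

# try_any HOST...
# Hypothetical helper: tries each host in order and stops at the first
# one that works, leaving its name in $chosen.
try_any() {
    for h in "$@"
    do
        echo "Attempting host $h"
        if fetch_from "$h"
        then
            chosen=$h
            return 0
        fi
    done
    return 1
}

# Example call:
#   try_any mirror1.wisc.edu mirror2.wisc.edu mirror3.wisc.edu &&
#       echo "Got file from $chosen"
```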

FTSH Summary

All the usual shell constructs

Redirection, loops, conditionals, functions, expressions, nesting, …

And more

Logging

Timeouts

Process Cancellation

Complete parsing at startup

File cleanup

Used on Linux, Solaris, Irix, Cygwin, …

FTSH Summary

Written by Doug Thain

Available under GPL license at:

http://www.cs.wisc.edu/~thain/research/ftsh/

Outline

Introduction

FTSH

Stork

DiskRouter

Conclusions

A Single Project..

LHC (Large Hadron Collider)

Comes online in 2006

Will produce 1 exabyte of data by 2012

Accessed by ~2000 physicists, 150 institutions, 30 countries

And Many Others..

Genomic information processing applications

Biomedical Informatics Research Network (BIRN) applications

Cosmology applications (MADCAP)

Methods for modeling large molecular systems

Coupled climate modeling applications

Real-time observatories, applications, and data-management (ROADNet)

The Same Big Problem..

Need for data placement:

Locate the data

Send data to processing sites

Share the results with other sites

Allocate and de-allocate storage

Clean up everything

Do these reliably and efficiently

Stork

A scheduler for data placement activities in the Grid

What Condor is for computational jobs, Stork is for data placement

Stork comes with a new concept:

“Make data placement a first class citizen in the Grid.”

The Concept

A job seen as stage-in, execute the job, stage-out breaks down into individual jobs:

Allocate space for input & output data

Stage-in

Execute the job

Release input space

Stage-out

Release output space

The Concept

Data placement jobs: allocate space for input & output data, stage-in, release input space, stage-out, release output space

Computational jobs: execute the job

The Concept

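DAG specification:

DaP A A.submit

DaP B B.submit

Job C C.submit

…..

Parent A child B

Parent B child C

Parent C child D, E

…..

[Figure: DAGMan reads the DAG specification (nodes A to F) and sends computational jobs to the Condor job queue and data placement (DaP) jobs to the Stork job queue.]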

Why Stork?

Stork understands the characteristics and semantics of data placement jobs.

Can make smart scheduling decisions, for reliable and efficient data placement.

Failure Recovery and Efficient Resource Utilization

Fault tolerance

Just submit a bunch of data placement jobs, and then go away..

Control number of concurrent transfers from/to any storage system

Prevents overloading

Space allocation and de-allocation

Make sure space is available

Support for Heterogeneity

Protocol translation using Stork memory buffer.

Protocol translation using Stork disk cache.

Flexible Job Representation and Multilevel Policy Support

[

Type = "Transfer";

Src_Url = "srb://ghidorac.sdsc.edu/kosart.condor/x.dat";

Dest_Url = "nest://turkey.cs.wisc.edu/kosart/x.dat";

……

……

Max_Retry = 10;

Restart_in = "2 hours";

]

Run-time Adaptation

Dynamic protocol selection

[

dap_type = "transfer";

src_url = "drouter://slic04.sdsc.edu/tmp/test.dat";

dest_url = "drouter://quest2.ncsa.uiuc.edu/tmp/test.dat";

alt_protocols = "nest-nest, gsiftp-gsiftp";

]

[

dap_type = "transfer";

src_url = "any://slic04.sdsc.edu/tmp/test.dat";

dest_url = "any://quest2.ncsa.uiuc.edu/tmp/test.dat";

]

Run-time Adaptation

Run-time Protocol Auto-tuning

[

link = "slic04.sdsc.edu - quest2.ncsa.uiuc.edu";

protocol = "gsiftp";

bs = 1024KB; //block size

tcp_bs = 1024KB; //TCP buffer size

p = 4;

]


DiskRouter

A mechanism for high performance, large scale data transfers

Uses hierarchical buffering to aid in large scale data transfers

Enables application-level overlay network for maximizing bandwidth

Supports application-level multicast

Store and Forward

[Figure: a transfer from A to C passing through a DiskRouter at B, shown with and without DiskRouter]

Improves performance when bandwidth fluctuation between A and B is independent of the bandwidth fluctuation between B and C
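A small worked example shows why buffering at B helps when the two links fluctuate independently. The numbers and the model are assumptions made up for illustration: time is split into equal intervals, each link moves a fixed amount per interval, the buffer at B is unbounded, and data arriving at B can be forwarded in the same interval.

```shell
#!/bin/sh
# Hypothetical per-interval bandwidths (arbitrary units), chosen so that
# when one link is fast the other is slow.
ab="10 2 10 2"   # A -> B
bc="2 10 2 10"   # B -> C

# Without buffering at B, each interval is limited by the slower link.
direct_total() {
    total=0
    set -- $bc
    for a in $ab
    do
        b=$1; shift
        if [ "$a" -lt "$b" ]; then total=$((total + a)); else total=$((total + b)); fi
    done
    echo "$total"
}

# With store and forward, B keeps what it cannot send yet and drains the
# backlog whenever the B -> C link has spare capacity.
buffered_total() {
    total=0
    buffered=0
    set -- $bc
    for a in $ab
    do
        b=$1; shift
        buffered=$((buffered + a))
        if [ "$buffered" -lt "$b" ]; then sent=$buffered; else sent=$b; fi
        buffered=$((buffered - sent))
        total=$((total + sent))
    done
    echo "$total"
}

echo "without buffering: $(direct_total)"
echo "with buffering at B: $(buffered_total)"
```

With these made-up numbers the direct transfer delivers 8 units to C and the buffered transfer delivers 24, because the backlog stored at B fills the intervals in which the second link is fast.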

DiskRouter Overlay Network

[Figure: nodes A and B connected by a 90 Mb/s path; a DiskRouter node C is then added]

Add a DiskRouter node C, which is not necessarily on the path from A to B, to enforce use of an alternative path.

Data Mover/Distributed Cache

Source

Destination

DiskRouter Cloud

Source writes to the closest DiskRouter and Destination receives the data from its closest DiskRouter


Conclusions

Regard data placement as a first-class citizen.

Introduce a specialized scheduler for data placement.

Introduce a high performance data transfer tool.

End-to-end automation, fault tolerance, run-time adaptation, multilevel policy support, reliable and efficient transfers.

Future work

Enhanced interaction between Stork, DiskRouter and higher level planners

co-scheduling of CPU and I/O

Enhanced authentication mechanisms

More run-time adaptation

You don’t have to FedEx your data anymore..

We deliver it for you!

For more information

Stork:

• Tevfik Kosar

• Email: kosart@cs.wisc.edu

• http://www.cs.wisc.edu/condor/stork

DiskRouter:

• George Kola

• Email: kola@cs.wisc.edu

• http://www.cs.wisc.edu/condor/diskrouter