De Novo What Description Optimality criteria

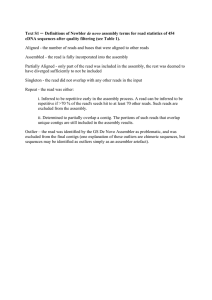

advertisement

OPTIMALITY CRITERIA for De Novo Transcriptome Assemb(lers|ly) What Number of raw reads used Identification of poor reads Trimming of reads Number of contigs Number of singletons N50 of contigs Description Optimality criteria How many of the raw reads given to the assembler does it use for assembly? [NOTE: This is conflated with the 'number of singletons' issue, as some assemblers count single‐sequence clusters as clusters and others do not.] Does the assembler identify and remove from consideration low quality reads? [NOTE: Some assemblers may classify a read as low quality if it appears to be part of a repeat element.] Does the assembler trim reads for quality or for known 'contaminant' sequence (adapters, poly(A/T)) during assembly to avoid illegal joins? How many contigs does the assembler produce? [NOTE: For some assemblers there is a distinction between clusters (=reads that likely come from the same transcription unit) and contigs (=assembled reads, that may represent different splicing isoforms or allelic forms of a transcript.] How many singletons are identified? more reads used = better either use mapping specified by assembler, or map reads back to the assembly using a standard tool to identify verifiable criteria for low quality read removal How long is the median contig size? This can be done for both all contigs and also for contigs greater than a given length (say 100 bases) to avoid and perhaps highlight the effects of lots of 'shrapnel' contigs. Mean contig length and What is the distribution of contig lengths? SD This assesses the likely completeness of the transcriptome (and also reports Number of contigs on the original RNA quality). greater than 1 kb Maximum contig length What is the maximal contig length? trimming is probably a good thing number of contigs should be LESS than the number of transcription units expected in the genome (e.g. ~20‐25,000 for a metazoan) and APPROACH the number of transcripts expected in the stage/tissue surveyed (e.g. about 10‐12,000 for a metazoan adult tissue) singletons should be a small percentage of the total number of input reads in a high‐density dataset this should approach the expected median length for full length transcripts in the species studied (e.g. for C. elegans it is ~1 kb); comparing all contigs N50 and contigs >100 bases N50, there shouldn't be much reduction this should approach the expected mean length for full length transcripts in the species studied (e.g. for C. elegans it is ~1.2 kb) the better assembler will have more contigs > 1kb Total span of contigs What is the total length of the contigs produced? Mean length of singletons Mean quality of singletons Singleton mean length is often much shorter than the mean length of the input reads: singletons may be poor sequence or fragmented transcripts. The longest contig should not usually exceed about 5 kb. The longest contigs should have 'credible' blast matches to NR protein/UniRef100 (i.e. they should match only to genes that have long transcripts, and not to odd collections of different genes each with short transcripts). the total span should approach the expected span for the organism studied (e.g. for metazoans from 25 to 40 Mb) mean length of singletons should be less than the mean length of reads Singleton mean quality is often much less than the mean quality of the input reads, indicating that singletons may be poor sequence. mean quality of singletons should be less than the mean quality of reads We do not expect to generate many huge contigs (though there are genes with huge transcripts). Some assemblers may generate large contigs by generating 'illegal' chimaeras. Optimality Criteria for de novo Transcriptome Assembly Mark Blaxter, Sujai Kumar, Stephen Bridgett September 2010 page 1 Time taken Number of unique bases in contigs Number of novel bases in contigs compared to other assemblies Shared bases from different assemblies Heterozygosity Mapping of reads Comparison to external cognate reference transcripts Comparison to external reference 'complete' proteome Comparison to a reference conserved proteome set Chimaera checking An assembler that completes in a shorter wall clock time permits the user to run the assembly with different parameter sets in a reasonable timeframe. The assembly should NOT result in lots of contigs that objectively could be coassembled. Self‐clustering should result in a minimal reduction in the extent of sequence. Different assemblers will yield different spans of assembly. The assembler that produces the greatest extent of unique sequence is likely to have accessed the greatest proportion of the transcriptome. This can be done by doing a co‐clustering between two assemblies and identifying the clusters that result that have contributions from only one of the input assemblies. Contigs that result from different assemblies but that have the same sequence are likely to be of more believable quality. This can be done by doing a co‐clustering between two assemblies and identifying the clusters that result that have contributions from both of the input assemblies. The nature of the input sample should allow assessment of the 'sanity' of assemblies based on the expected number of haploid genomes in the mix. Thus if there is one diploid organism as input, the maximum number of alleles at a particular base should be 2. An excess of alleles at a position will suggest over‐assembly of reads. Mapping back of reads using an independent mapping algorithm will assess the 'sanity' of the derived contigs. Surprisingly, it is possible for assemblers to generate contigs that have zero reads mapping back to them! If there are existing transcripts from the species of interest (or a very close relative) a good assembly will efficiently cover these independently acquired data. These reference sequences can be submitted mRNAs or Sanger ESTs. Reads can be mapped back using a standard reference mapper. If there is a good reference proteome from a genome project for a related organism, we can map the contigs to this and assess the coverage of the proteome by the contigs. Thus for a dipteran, we could use the D. melanogaster 'refseq' protein set derived from the genome. By defining core protein sets that we expect to be present in all members of the group from which the target species derives (e.g. all Metazoa) we can ask whether the assembly contains representatives of these 'essential' genes. Matching is done using BLAST, with a reasonably high cutoff (E‐value 1e‐12). Long contigs may result from chimaeric joining of reads that derive from different genes. This can be checked by taking, for all contigs greater than 1 kb in length, the first 500 bases and the last 500 bases and comparing them to a reference proteome set (we would recommend the reference conserved protein set). Both ends of each contig should match the same conserved protein (or conserved protein group) quicker is better (but speed is not all) an all against all blast clustering should reveal very few clusters of contigs that are near‐identical the assembly with the most novel bases is likely to be better [this gives an assessment of the number of putatively well assembled bases in each assembly] based on the expected number of haploid genomes input, the better assembly will not display allele counts exceeding the input genome count the best assembler will have the minimum number of 'naked' bases when reads are mapped back. the better assembler will leave fewer bases of the cognate reference naked the better assembler will generate contigs that match to a greater proportion of the reference proteome, and will cover a greater number of residues of the reference proteome the better assembler will produce contigs that match to a greater proportion of the reference conserved proteome, and will cover a greater number of residues of the reference conserved proteome the better assembler will have a low percentage of possible chimaeras > Optimality Criteria for de novo Transcriptome Assembly Mark Blaxter, Sujai Kumar, Stephen Bridgett September 2010 page 2 Optimality Criteria for de novo Transcriptome Assembly Mark Blaxter, Sujai Kumar, Stephen Bridgett September 2010 page 3