Enabling technologies for a new TELL DAQ interface module Guido Haefeli EPFL, Lausanne

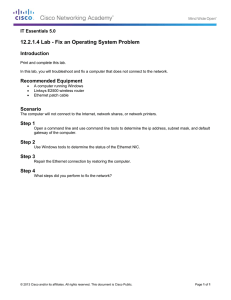

1st LHCb Collaboration Upgrade Workshop, Edinburgh, 11-12 January 2007

Outline
• The current TELL1
• 10 Gigabit Ethernet for FPGAs
• FPGA technology and its trends
• TELL10 or TELL5 outline
• Conclusions

The current TELL1
1) Digitization (VELO)
2) Synchronization (TTC)
3) Data compression (factor 10)
4) Buffering
5) Ethernet and IP formatting (framer), physical IF
[Diagram: input 24 x 1.28 Gbit/s = 30 Gbit/s; data compression ~10; output 4 x 1 Gbit/s = 4 Gbit/s]

Interface to the input data “O-Rx”
• The TELL1 input data is received on 24 optical links running at 1.28 Gbit/s data rate.
• Two 12-way optical receivers are employed.
• De-serialisation (DES) is implemented on the “SERDES” TLK2501.
• Data is transmitted to the processor FPGAs on parallel data buses running at 80 MHz.
• Each PP-FPGA receives 8 Gbit/s of input data.
[Diagram: 2 x 12 links into the 1.6 GHz DES stage, 80 MHz parallel buses, 8 Gbit/s to each of the four PP-FPGAs]

Processor FPGA “PP-FPGA”
• Input data receiver FPGA, performs:
– Synchronization
– Zero suppression (common mode suppression, clusterization, hit address generation, …)
• 4 identical FPGAs per board of the type first generation Altera Stratix, 25K Logic Elements, 2 Mbit RAM, 780-pin.
– Memory and logic resources 80% used.
– Processing clock frequency 120 MHz, slowest speed grade to minimize FPGA cost.
– IO usage 80%, 20% used by the Level 1 buffer interface.
– Embedded DSP blocks (multiply accumulators) only 30% used.
• Summary:
– Total of 100K Logic Elements
– Total of 8 Mbit RAM
– 120 MHz processing frequency
Processing is very logic and memory demanding!

Synchronization and Link FPGA “SyncLink-FPGA”
• SyncLink FPGA performs:
– Data linking; provides the interface to the processor FPGAs at 4 Gbit/s bandwidth.
– Data buffering with QDR SRAM, 4 Gbit/s read and write.
– Provides interconnection to the Gigabit Ethernet card at 4 Gbit/s.
– TTCrx interface.
• One FPGA first generation Altera Stratix (25K Logic Elements), 1020-pin.
– 50% logic and memory resources used
– No DSPs used
– IO 70% used.
• Summary:
– Total of 25K Logic Elements
– Total of 2 Mbit RAM
– 120 MHz processing frequency

Network interface “GBE”
• 4-way Gigabit Ethernet implemented on a mezzanine card.
• 4 x 1 Gbit/s over copper
• MAC and PHY on mezzanine card
• Parallel “FIFO like” interface between FPGA and MAC.

ECS and TTC interface
• CCPC and GlueCard on board, 10/100 Ethernet connection for ECS.
• I2C, JTAG, Local processor bus available via GlueCard
• TTCrx on board connected to the SyncLink-FPGA

10 Gigabit Ethernet, Infiniband, 100 Gbit Ethernet
• Optical 10 Gigabit Ethernet has been a standard since 2002. Equipment is becoming “in fashion” and cheaper (5 KCHF per port).
• Copper 10 Gigabit Ethernet is standardized now (2006) as 10GBASE-T, or IEEE 802.3an-2006, but there is no final cable specification yet. Maybe this goes faster than optical.
• Infiniband may be an alternative to Ethernet: chips with one, and soon two, 10 Gbit/s copper ports are available (PCI-e → Infiniband, Mellanox ConnectX); requires new cabling.
• 100 Gigabit Ethernet is the next generation Ethernet (decided by IEEE 2006). It is not yet commercialised, but standardisation is ongoing and demand exists. First equipment expected by 2009-2010.

10 Gigabit Ethernet for FPGAs
[Diagram: FPGA with serial transceivers (MAC), XAUI interface, “XENPAK” standard module (10 Gigabit Ethernet PHY and optical transceiver, i.e. the 10 Gigabit Ethernet optical interface module)]

XENPAK, XPAK, XFP module
• Standard modules for different link lengths
– Optical 10GBASE-SR, 300 m, available (still expensive, 5 KCHF)
• Used in high end server NIC cards or uplinks for Gigabit Ethernet switches

Copper 10 Gigabit Ethernet
• Copper 10 Gigabit Ethernet exists (6 Watt/XENPAK)!
• Copper will maybe become cheap!

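The link figures quoted in these slides pair a payload rate with a higher serial rate (1.28 Gbit/s data on a 1.6 GHz de-serialiser for TELL1, and four 3.125 Gbit/s lanes behind a 10 Gbit/s XENPAK). Both pairs are consistent with 8b/10b line coding, where every 8 data bits travel as 10 line bits. The sketch below only illustrates that arithmetic; the function names are hypothetical, and the assumption that 8b/10b is the coding in use is not stated in the slides themselves.

```python
def line_rate_gbaud(payload_gbps, data_bits=8, code_bits=10):
    """Serial line rate needed to carry payload_gbps, assuming 8b/10b-style coding."""
    return payload_gbps * code_bits / data_bits


def payload_gbps(line_gbaud, lanes=1, data_bits=8, code_bits=10):
    """Aggregate payload carried by `lanes` serial lanes, each running at line_gbaud."""
    return lanes * line_gbaud * data_bits / code_bits


if __name__ == "__main__":
    # TELL1 optical link: 1.28 Gbit/s data rate (slides quote a 1.6 GHz DES stage).
    print(f"TELL1 link line rate: {line_rate_gbaud(1.28):.3f} Gbaud")
    # XAUI towards a XENPAK module: 4 lanes at 3.125 Gbaud.
    print(f"XAUI payload:         {payload_gbps(3.125, lanes=4):.3f} Gbit/s")
```

The same ratio explains why an FPGA transceiver rated at 3.125 GHz is enough for one XAUI lane, and why four such transceivers are needed per 10 Gigabit Ethernet port.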
Altera Stratix history
• First generation Stratix I:
– Introduced 2002 (used for TELL1)
– 80K Logic Elements (LE)
– 8 Mbit memory
– 20 serial high speed transceivers (3.125 GHz)
– System speed (120 MHz for TELL1) (slowest speed grade)
• Second generation Stratix II (90 nm):
– Introduced 2004
– 180K Logic Elements (LE)
– 9 Mbit memory
– 20 serial high speed transceivers (6.375 GHz)
– System speed 50% faster than Stratix I
• Third generation Stratix III (65 nm):
– Introduced 2006 (mass production 2008), price for 140K LEs 700 CHF at 1000 pieces
– 340K Logic Elements (LE)
– 21 Mbit memory
– 20? (not available yet) serial high speed transceivers (6.375 GHz?)
– System speed 25% faster than Stratix II

Xilinx Virtex history
• Virtex 2 Pro (130 nm):
– Introduced 2002
– 100K Logic Cells (LCs)
– 8 Mbit memory
– 2 PowerPCs
– 20 serial high speed transceivers (3.125 GHz)
• Virtex 4 (90 nm):
– Introduced 2004
– 200K Logic Cells (LCs)
– 10 Mbit memory
– 2 PowerPCs
– 24 serial high speed transceivers (6.5 GHz)
– System speed (500 MHz maximal internal clocking)
• Virtex 5 (65 nm):
– Introduced 2006 (mass production 2008), price for 330K LCs is 3100 at 1000 pieces
– 330K Logic Cells (LCs)
– 15 Mbit memory
– 24 serial high speed transceivers (3.125 GHz)
– System speed (550 MHz maximal internal clocking)

FPGA trends
• FPGAs are replacing ASICs and DSPs; their market is growing fast!
• Every 2 years a new generation of FPGA, 20-30% faster and with 50% more logic.
• Hardcore functionality and chip types with DSP blocks, high speed transceivers, PCI-e, Gigabit Ethernet MAC, microprocessors.
• Serial interfaces are in fashion: PCI-e, XAUI, …
• More and more modular Intellectual Property (IP) cores available.
• Low power consumption is important for the industry, but faster and bigger devices consume more power.
• But constant number of high speed transceivers.
• But constant number of maximal IO pins.
• But cost per high end chip is constant!

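To put numbers on the generation-to-generation trend, the short sketch below recomputes the growth in logic and memory from the largest-device figures listed in the two history slides. The table layout and the helper name `growth` are illustrative choices, not part of the original talk; only the device figures come from the slides.

```python
# (name, year introduced, logic in K LE or K LC, memory in Mbit), as listed in the slides.
STRATIX = [
    ("Stratix I", 2002, 80, 8),
    ("Stratix II", 2004, 180, 9),
    ("Stratix III", 2006, 340, 21),
]
VIRTEX = [
    ("Virtex 2 Pro", 2002, 100, 8),
    ("Virtex 4", 2004, 200, 10),
    ("Virtex 5", 2006, 330, 15),
]


def growth(family):
    """Return (new device, logic ratio, memory ratio) for each successive generation."""
    return [
        (new[0], new[2] / old[2], new[3] / old[3])
        for old, new in zip(family, family[1:])
    ]


if __name__ == "__main__":
    for family in (STRATIX, VIRTEX):
        for name, logic_ratio, memory_ratio in growth(family):
            print(f"{name:12s} logic x{logic_ratio:.2f}  memory x{memory_ratio:.2f}")
```

On these figures the largest device in each family grows by roughly 1.65x to 2.25x in logic per two-year step, while the transceiver count stays at 20 to 24, which matches the "constant number of high speed transceivers" point above.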
New TELL1 task and performance
• Same tasks as current TELL1
– Synchronization to TTC
– Data pre-processing to achieve data compression by a factor 10!
– Data buffering for zero suppression and DAQ interface.
• No trigger functionality, no board to board interconnect.
Definition:
– TELL5: 5 times current TELL1 data bandwidth
– TELL10: 10 times current TELL1 data bandwidth
– TELL40: 40 times current TELL1 data bandwidth

Compare high end FPGA to TELL1 processor
• TELL1 PP-FPGA
– 25K Logic Elements (LE)
– 2 Mbit memory
– System speed 120 MHz
• TELL10 PP-FPGA
– 340K Logic Elements (LE)
– 21 Mbit memory
– System speed 320 MHz (max on chip clock speed 600 MHz)
Processing power x 36

Receiver
• Use high speed optical links:
– 2.56 Gbit/s data rate, 3.2 GHz links
– De-serializer 4 x 2.56 Gbit/s to 32-bit@320MHz, e.g. PMC Sierra PM8358 Quad PHY SERDES, Broadcom BCM8011
• Input data bandwidth:
– TELL1: 24 x 1.28 Gbit/s = 30 Gbit/s
– TELL10: 328 Gbit/s →
• Use 32 de-serializers
• 1024-bit@320MHz parallel input distributed to 4 FPGAs
– TELL40: 1311 Gbit/s →
• Use 128 de-serializers
• 4096-bit@320MHz parallel input distributed to 4 FPGAs

Receiver (2)
[Diagram: receiver (Rx) assembled from "4 x Rcv" blocks; labels: 328 Gbit/s, 10 Gbit/s, TELL10, TELL40]
• Possible now or soon with 10 Gbit/s
• Higher integration on chips needed, future (100 Gbit/s)?

Network interface
• Assumption:
– Use 10 Gigabit Ethernet
– Design for XENPAK PHY and optical or copper interconnect
• Required bandwidth:
– TELL1: 4 Gbit/s
– TELL10: 40 Gbit/s →
• Possible: with XAUI interface and serial links (4x4@3.2GHz), XGMII 4 x 32-bit@320MHz
• 4 x XENPAK PHY modules
• 4 x 10-Gbit/s MAC on FPGA
– TELL40: 160 Gbit/s →
• Needs 16 x 10 Gigabit Ethernet connections; space and power requirements too high, and IO not available yet.

Memory trends
• SRAM and SDRAM memory with sufficient bandwidth and size are available already now.
– QDRII+ SRAM, 72 Mbit, 32-bit@800Mbit/s
– SO-DIMM DDRII, 4 GByte, 64-bit@800Mbit/s
If more bandwidth is required, multiple channels can be implemented.

TELL10 outline with current technology
[Diagram labels:]
• 8 x 4-channel SERDES
• 256-bit parallel interconnection
• 4 x 64-bit parallel interconnection
• Gigabit MAC as IP core on FPGA
• 4 x 10 Gigabit Ethernet uses a total of 16 high speed transceivers
• 4 x XENPAK optical 10 Gigabit transceivers

TELL10 cost estimation today
• 32 x 150 CHF
• 4 x 3 KCHF
• 1 x 3 KCHF
• 4 x 5 KCHF (XENPAK optical 10 Gigabit transceivers)
• 5 KCHF PCB, ECS, connectors, fabrication
• Total: 45 KCHF

TELL5 “Single chip board”
• Number of input links adapted to the possible processing power of the FPGA
• Only one large FPGA, with sufficient transceivers and IO pins (size adapted to needs)
• 1-4 XENPAK optical 10 Gigabit transceivers
• Less dense PCB, can ease fabrication and changes
• Less complex design motivates following new technology (FPGAs)

Summary
• 10 Gigabit Ethernet (also Infiniband) is standardized and commercialized already today. Standardized PHY modules leave the choice between an optical or a copper interface.
• TELL5 (single FPGA) is a very attractive architecture with many advantages:
– Reduces complexity of the board, which leads to smaller development cost and a shorter design phase.
– Can gain a maximum from new generation FPGAs (less complex design motivates following technology).
– A single FPGA board can reach processing power and DAQ bandwidth of at least 5 times the current TELL1.
• TELL10 is possible now or soon; cost, power consumption and complexity are issues!
• TELL40 needs 100 Gigabit/s equipment and is not in range yet.
• What about firmware development for integration of System on Programmable Chip, microprocessor on FPGA? This has to be addressed for a new development.

Cisco XENPAK modules
• CISCO XENPAK-10GB-CX4 (used for Infiniband): The Cisco 10GBASE-CX4 Module supports link lengths of up to 15 meters on CX4 cable.
• CISCO XENPAK-10GB-LX4: The Cisco 10GBASE-LX4 Module supports link lengths of 300 meters on standard Fiber Distributed Data Interface (FDDI) grade multimode fiber (MMF). To ensure that the specifications reported in Table 1 are met, the transmitter output should be coupled through a mode conditioning patch cord.
• CISCO XENPAK-10GB-SR: The Cisco 10GBASE-SR Module supports a link length of 26 meters on standard FDDI grade MMF. Up to 300-meter link lengths are possible when using 2000 MHz/km MMF (OM3).
• CISCO XENPAK-10GB-LR: The Cisco 10GBASE-LR Module supports a link length of 10 kilometers on standard single-mode fiber (SMF) (G.652).
• CISCO XENPAK-10GB-ER: The Cisco 10GBASE-ER Module supports a link length of up to 40 kilometers on SMF (G.652).
• CISCO XENPAK-10GB-ZR: The Cisco 10GBASE-ZR Module supports link lengths of up to about 80 kilometers on SMF. This interface is not part of the 10 GbE standard but is built according to Cisco optical specifications reported in Table 3.

Different transceiver module specs
• The 300-pin MSA converts between a 10 Gbit/s serial optical signal and 16 parallel 622 Mbit/s electrical signals, and currently accounts for most shipments.
• The XENPAK MSA was co-founded in March 2001 by Agere Systems and has more than 25 member companies. It provides a smaller form factor, since it uses four channels running at 3.125 Gbit/s on the electrical side. Users have limited development efforts due to the market slump, but demand for smaller modules is now picking up.
But, although XENPAK is designed for the heat dissipation necessary with high-power, long-reach telecom lasers, it also provides the standard for shorter-reach 10 Gbit Ethernet (10GbE) transceivers, for which it is larger than desired. MSAs have been proposed that are compatible with XENPAK’s four-wire 10 Gbit attachment unit interface (XAUI) and 70-pin electrical connector, but with smaller form factors for space-constrained applications to move to 10 Gbit/s.
• The XPAK MSA group (www.xpak.org) was formed in March 2002 by Intel, Infineon Technologies and Picolight. In August XPAK made available Revision 2.0 (“build-to” specification). In September membership grew to 21.
• The X2 MSA (www.x2msa.org) was formed July 2002 by component suppliers Agere Systems and Agilent Technologies and subsequently supported by vendors JDS Uniphase, Mitsubishi Electric, NEC, OpNext, Optillion and Tyco Electronics.
Shipping from first-half 2003, XPAK and X2 transceivers are initially focused on shorter-reach 10km links (comprising 80% of 10 GbE port applications) and second-generation applications that do not need XENPAK’s thermal capacity (though the heat sink can be adapted to different 10 Gbit/s applications). So, although OEMs wanting to launch products immediately are going with XENPAK, it is expected that XPAK and X2 will grow faster than XENPAK implementations.
• The XFP (10 Gigabit Small Form-Factor Pluggable) serial interface module group (www.xfpmsa.org) was founded in March 2002 by 10 networking, system, optical module, semiconductor and connector companies, including Broadcom, Brocade, Emulex, Finisar, JDSU, Velio, Maxim Integrated Products, ONI Systems, Sumitomo Electric, ICS, and Tyco Electronics. Unlike XENPAK, X2 and XPAK, XFP has a 10 Gbit/s serial electrical interface (XFI) that converts serial 9.95-10.7 Gbit/s electrical signals into external serial 9.95-10.7 Gbit/s optical or electrical signals. This eliminates mux/demux serial-to-parallel conversion logic chips inside the module and allows the serial 10 Gbit/s physical-layer IC (PHY) to be moved on to the PCB (away from optics generated heat) and everything up to the XFI serial interface to be integrated into the CMOS media-access controller chip.
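
As a closing cross-check of the TELL10 and TELL40 sizing quoted in the main slides, the sketch below recomputes the input bandwidth, parallel bus width, processing-power factor and cost total from the per-item figures. All function and constant names are hypothetical. Reading "Processing Power x 36" as the logic ratio times the clock ratio is an assumption that happens to reproduce the quoted number. The cost items are summed exactly as listed, without guessing what the unlabeled lines refer to.

```python
LINK_GBPS = 2.56          # data rate per optical link (Receiver slide)
LINKS_PER_DESER = 4       # each de-serializer handles 4 links
BITS_PER_DESER = 32       # and presents them as a 32-bit bus
BUS_CLOCK_MHZ = 320       # parallel bus clock


def input_bandwidth_gbps(n_deser):
    """Total optical input bandwidth for n_deser quad de-serializers."""
    return n_deser * LINKS_PER_DESER * LINK_GBPS


def bus_width_bits(n_deser):
    """Width of the parallel bus leaving n_deser de-serializers."""
    return n_deser * BITS_PER_DESER


def bus_bandwidth_gbps(n_deser):
    """Bandwidth of that parallel bus at BUS_CLOCK_MHZ."""
    return bus_width_bits(n_deser) * BUS_CLOCK_MHZ / 1000


def processing_power_factor(le_new=340, le_old=25, mhz_new=320, mhz_old=120):
    """Assumed reading of the x36 figure: logic ratio times clock ratio."""
    return (le_new / le_old) * (mhz_new / mhz_old)


# Cost items in CHF, in the order listed on the cost slide (labels as given there).
COST_ITEMS_CHF = [32 * 150, 4 * 3000, 1 * 3000, 4 * 5000, 5000]

if __name__ == "__main__":
    for name, n in (("TELL10", 32), ("TELL40", 128)):
        print(f"{name}: {input_bandwidth_gbps(n):.1f} Gbit/s in, "
              f"{bus_width_bits(n)}-bit bus, {bus_bandwidth_gbps(n):.1f} Gbit/s on the bus")
    print(f"Processing power factor: {processing_power_factor():.1f}")
    print(f"Cost total: {sum(COST_ITEMS_CHF)} CHF")
```

The link-side and bus-side bandwidths agree for both board variants, and the itemised costs sum to just under the quoted 45 KCHF.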