Reading CampusGrid e-Science Institute - Edinburgh High Throughput Computing Week


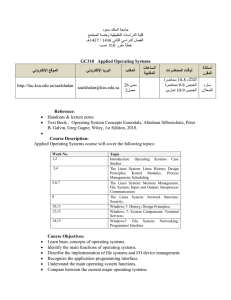

29 November 2007
© University of Reading 2007 www.reading.ac.uk

Presenter
Christopher Chapman
Systems Programmer (ITNG)
School of Systems Engineering
University of Reading

Overview
• Designing the CampusGrid
• Current Implementation
• Success Stories
• The Future – CampusGrid 2.0

Background
• Started as a School of Systems Engineering activity, then needed to become campus wide
• Condor Implementation
• Different Implementations
  – Diskless Condor: worked but was restricted by memory
  – Dual booting Windows/Linux
• Problems
  – Limited nodes
  – Only available overnight
  – Rebooting interfered with scheduled maintenance
  – Not scalable

Designing the CampusGrid
• Requirements
  – Needs to be scalable
  – Needs to be Linux based
  – Requires minimal changes to infrastructure
  – Must not impact normal PC operation/maintenance
  – Needs to be deployable through existing mechanisms
• Solution
  – Virtual instance of Linux running under Windows
  – Network configuration based on host
  – Only active when no one is logged into Windows
  – Packaged for Microsoft SMS deployment

Virtualisation
• VMware
• Virtual PC
• Cooperative Linux (coLinux)

Virtualisation – VMware
• Potential benefits
  – Well established
  – Support for Linux including performance additions
  – Easily modifiable configuration file
  – Easy to deploy
  – Results in fully native Linux install
• Potential challenges
  – Requires server version to run as a service
  – Hard to pass information between host and guest
  – Not free (at the time!)

Virtualisation – Virtual PC
• Potential benefits
  – Free - included in Campus Agreement
  – Easily modifiable configuration file
  – Straightforward to deploy
  – Results in fully native Linux install
• Potential challenges
  – Requires server version to run as a service
  – Hard to pass information between host and guest
  – No official Linux support (at the time!)

Virtualisation – coLinux
• Potential benefits
  – Free
  – Easily modifiable configuration file
  – Straightforward to deploy
  – Easy to pass information between host and guest
  – Runs as a service
  – Designed for Linux
• Potential challenges
  – Requires custom built Linux install
  – Requires 3rd party network drivers
  – Only virtualises disk and network, no graphics

And the winner?
COOPERATIVE LINUX

Cooperative Linux
• coLinux kernel runs in a privileged mode
• PC switches between host OS state and coLinux kernel state
• coLinux has full control of the physical memory management unit (MMU)
• Almost the same performance and functionality that can be expected from regular Linux on the same hardware
• Can run a number of different Linux flavours

Network configuration
• Hosts use a public class B network
• Guests mirror the host's IP but for a private class B network, e.g.
  HOST = 134.225.217.32
  GUEST = 10.216.217.32
  – Guest MAC address based on host's MAC address
  – No DHCP
  – Private IP addresses are registered with DNS
  – Each subnet has a second gateway (multi-netting)

Nonintrusive
1. coLinux starts when a user logs out
2. Condor starts accepting jobs
3. coLinux shuts down when a user logs in
4. Condor is shut down, nicely evicting any jobs

Current Implementation
• Campus wide
• coLinux running Fedora Core 3
• Private network
• Condor
• NGS certificate authentication or Kerberos
• Shared file system - NFS
• Deployed using SMS
• Around 400 nodes

Successes to date
• TRACK - Feature detection, tracking and statistical analysis for meteorological and oceanographic data.
  – Processing around 2000 jobs a day
• Computing Mutual Information - Face Verification Using Gabor Wavelets
  – Condor cut processing from 105 days to 20 hours
• Bayes Phylogenies - inferring phylogenetic trees using Bayesian Markov Chain Monte Carlo (MCMC) or Metropolis-coupled Markov chain Monte Carlo (MCMCMC) methods.
  – Implementation of MPI on Condor
• Analysing chess moves

The Future – CampusGrid 2.0
• coLinux running Linux (version to be decided)
• Private network
• NGS affiliate status
• VDT software stack
• NGS certificate authentication or Kerberos
  – Maybe even ShibGrid
• Shared file system - NFS
• Nodes updated from a central server
• Deployed using SMS
• More nodes

For further information
E-mail
Christopher Chapman c.d.chapman@reading.ac.uk
Jon Blower jdb@mail.nerc-essc.ac.uk
Websites
http://www.resc.rdg.ac.uk/
http://wiki.sse.rdg.ac.uk/wiki/ITNG:Campus_Grid
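
Appendix sketch: guest addressing

The guest addressing rule from the Network configuration slide (HOST = 134.225.217.32, GUEST = 10.216.217.32) can be illustrated with a few lines of Python. This is only a sketch, not the CampusGrid deployment code. It assumes the guest keeps the host's last two octets and swaps the first two for a fixed private prefix, 10.216, which is inferred from the single example on the slide; the real rule may differ. The names `GUEST_PREFIX` and `guest_ip_for_host` are hypothetical.

```python
import ipaddress

# Assumption: fixed private prefix inferred from the one example on the slide.
GUEST_PREFIX = (10, 216)


def guest_ip_for_host(host_ip: str) -> str:
    """Return the private guest address that mirrors a public host address.

    The host's last two octets are kept and the first two are replaced
    with GUEST_PREFIX (hypothetical rule, see the assumption above).
    """
    host = ipaddress.IPv4Address(host_ip)  # raises ValueError if malformed
    guest_bytes = bytes(GUEST_PREFIX) + host.packed[2:]
    return str(ipaddress.IPv4Address(guest_bytes))


if __name__ == "__main__":
    print(guest_ip_for_host("134.225.217.32"))
```

Because the mapping is deterministic, each guest can work out its own address from its host with no DHCP server involved, which fits the "No DHCP" point on the slide.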