Exploitation of UKLight in High Performance Computing Applications Peter Coveney


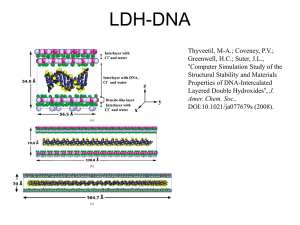

Exploitation of UKLight in High Performance Computing Applications
Peter Coveney
Centre for Computational Science, University College London
ESLEA technical collaboration meeting, Edinburgh, 20/06/2006
Peter Coveney (P.V.Coveney@ucl.ac.uk)

Centre for Computational Science
“Advancing science through computers”
We are concerned with many aspects of theoretical and computational science, from chemistry and physics to materials, life sciences, informatics, and beyond. The Centre comprises chemists, physicists, mathematicians, computer scientists and biologists.

Projects and people using and/or planning to use UKLight
• Lattice-Boltzmann modelling of Complex Fluids: G. Giupponi & R. Saksena
• Simulated Pore Interactive Computing Environment (SPICE): S. Jha
• Materials science: M.A. Thyveetil & J. Suter
• Application Hosting Environment (AHE): R. Saksena and S. Zasada

Centre for Computational Science
• We need UKLight for:
1. Transfer of large data-sets
2. Combined compute, visualisation and steering
3. Metacomputing and Coupled Model Applications
• We need policies to enable advance reservation and co-scheduling for much of this (items 2 and 3)
• Strong synergies with many EPSRC/BBSRC/OMII/EU projects run by PVC

Lattice Boltzmann methods
• Coarse-grained lattice gas automaton
  Continuum fluid dynamicists see it as a numerical solver for the BGK approximation to the Boltzmann equation
• Lattice-Boltzmann computer codes are simple, algorithmically efficient and readily parallelisable, but numerical stability can be a problem.
• Can simulate:
• non-equilibrium processes such as self-assembly of amphiphilic fluids into equilibrium liquid-crystalline cubic mesophases.
• flow in porous media: industrial applications like hydrocarbon recovery

LB3D: Three dimensional Lattice-Boltzmann simulations
• LB3D code is written in Fortran90 and parallelized using MPI
• Scales linearly on all available resources (CSAR, HPCx, Lemieux, Linux/Itanium)
• Fully steerable
• Simulations require supercomputers
• Data produced during a single large scale simulation can range from hundreds of gigabytes to terabytes
• High end visualization hardware and parallel rendering software (e.g. VTK) needed for data analysis
• Remote visualization allows monitoring of current simulations
• Avoiding need to post-process data
Figure: 3D datasets showing snapshots from a simulation of spinodal decomposition: a binary mixture of water and oil phase separates. ‘Blue’ areas denote high water densities and ‘red’ visualizes the interface between both fluids.
J. Chin and P. V. Coveney, “Chirality and domain growth in the gyroid mesophase”, Proc. R. Soc. A, 21 June 2006

LB3D and UKLight
Figure: transfer bandwidth plotted against time of day
• Data transfer between NCSA and the CCS storage facility at UCL in London using UKLight.
• Around 0.6 Terabytes transferred in less than one night using gridftp with up to 30 streams opened at the same time.
• The plateaux indicate the maximum bandwidth available, which was limited by a 100 Mbit/s network card connecting two machines at UCL. The same transfer would have taken days on a normal network.
G. Giupponi, J. Harting, P.V. Coveney, "Emergence of rheological properties in lattice Boltzmann simulations of gyroid mesophases", Europhysics Letters, 73, 533-539 (2006).
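As a rough check on the transfer figures above, the following sketch computes the ideal time needed to move about 0.6 TB at the 100 Mbit/s card limit. It assumes decimal units (1 TB = 10^12 bytes) and ignores all protocol overhead. The function name `transfer_hours` is illustrative, and the 10 Mbit/s rate used for a shared "normal" network is a hypothetical value chosen only for comparison, not a number from the slides.

```python
def transfer_hours(size_bytes: float, rate_bits_per_s: float) -> float:
    """Ideal transfer time in hours, ignoring protocol overhead."""
    return size_bytes * 8 / rate_bits_per_s / 3600


DATA_BYTES = 0.6e12   # ~0.6 TB, decimal units assumed
NIC_LIMIT = 100e6     # 100 Mbit/s card reported as the bottleneck
SLOW_RATE = 10e6      # hypothetical sustained rate on a shared network

for label, rate in (("100 Mbit/s card limit", NIC_LIMIT),
                    ("hypothetical 10 Mbit/s", SLOW_RATE)):
    hours = transfer_hours(DATA_BYTES, rate)
    print(f"{label}: {hours:.1f} h ({hours / 24:.1f} days)")
```

Under these assumptions the transfer needs on the order of half a day at the card limit, broadly in line with the overnight transfer described, and several days at the hypothetical slower rate.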
SPICE
Simulated Pore Interactive Computing Environment: Using Grid computing to understand DNA translocation across protein nanopores
• Translocation is critical for an understanding of many processes in molecular biology, including infectious diseases
• Long standing physics problem of semi-flexible polymer motion in a confined geometry
• Technical applications: artificial pores (similar to natural pores) for high-throughput DNA screening
• Computing the Free Energy Profile (FEP) is extremely challenging but yields maximal insight, understanding and control of the translocation process

SPICE: Grid Computing Using Novel Algorithms
Novel Algorithm: Steered Molecular Dynamics (SMD) to “pull DNA through the pore”. Jarzynski's Equation (JE) to compute the equilibrium free energy profile from such non-equilibrium pulling.
SMD+JE: Need to determine “optimal” parameters before running simulations at the optimal values.
Requires: interactive simulations and distributing many large, parallel simulations
Interactive “Live coupling”: uses visualization to steer simulation

SPICE: Grid Infrastructure
SPICE uses a “Grid-of-Grids”, federating the resources of the UK National Grid Service and US TeraGrid

SPICE: Grid Computing Using Novel Algorithms
Steering large simulations introduces new challenges and new solutions: “effective command, control and communication” between scientist(s) and simulation
Step I: Understand structural features using static visualization
Step II: Interactive simulations for dynamic and energetic features
- Steered simulations: bidirectional communication. Qualitative + Quantitative (SMD+JE)
- Haptic interaction: use a haptic device to feel feedback forces
Step III: Simulations to compute “optimal” parameter values: 75 simulations on 128/256 processors each.
Step IV: Use computed “optimal” values to calculate full FEP along the cylindrical axis of the pore.

SPICE: Grid Infrastructure
High-end systems are required to provide real-time interactivity.
“Live coupling”: interactive simulations and visualization to steer the simulation.
Advanced networks provide schedulable capacity and high QoS.
Significant performance enhancement using optically switched light-paths.
Visualization (I, II): Prism (UCL)
Simulation (II, III, IV): NGS + TeraGrid
Sim–Viz connection: UKLight/GLIF
• Global Lambda Integrated Facility (GLIF)
Required for qualitative + quantitative information.
Require 256 processors (or more) of HPC to compute fast enough to provide interactivity.
Steady-state data stream (up & down) ~ Mb/s, BUT interactive simulations perform better when using optically switched networks between simulation and visualization.

Experimental setup
Figure labels: Newton (NAMD); UKLight; dual-homed Linux PC (Nistnet); point-to-point GigE; workstation (VMD)
• Nistnet emulation package used to introduce artificial latency and bandwidth limitation to simulate different lengths of light path.
• Also introduces packet loss to simulate a congested link

SPICE: UKLight preliminary figures
• Testing NAMD + UKLight: QoS is crucial
• Quasi-interactive mode (interactive mode but no data exchange): 20% slowdown relative to non-interactive mode
• Interactive mode: performance sensitive to packet loss (PL)
  0.01% PL leads to a 16% performance degradation
  0.1% PL leads to a 75% performance degradation
• Ongoing further tests through 26 June using Newton.
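To give a feel for the latencies a network emulator has to inject when standing in for light paths of different lengths, here is a minimal sketch of propagation delay in optical fibre. The refractive index of 1.468 is a typical textbook value and an assumption here, as are the example path lengths; none of these numbers come from the slides, and switching or queueing delay is ignored.

```python
C_VACUUM_KM_S = 299_792.458   # speed of light in vacuum, km/s
FIBRE_INDEX = 1.468           # assumed refractive index of the fibre core


def one_way_delay_ms(path_km: float, index: float = FIBRE_INDEX) -> float:
    """Propagation delay in milliseconds over path_km of fibre."""
    speed_km_s = C_VACUUM_KM_S / index
    return path_km / speed_km_s * 1000.0


# Hypothetical path lengths, chosen only to show the scale of the effect.
for km in (100, 1000, 6000):
    delay = one_way_delay_ms(km)
    print(f"{km:>5} km: one-way {delay:6.2f} ms, round trip {2 * delay:6.2f} ms")
```

The delay grows linearly with path length, at roughly 5 microseconds per kilometre under the assumed index.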
Grand Challenge Problem: Human Arterial Tree
Cardiovascular disease accounts for about 40% of deaths in USA;
Strong relationship between blood flow pattern and formation of arterial disease such as atherosclerotic plaques;
Disease develops preferentially in separated and recirculating flow regions such as vessel bifurcations;
Blood flow interactions can occur
- Between different scales, e.g. large-scale flow features coupled to cellular processes such as platelet aggregation;
- At similar scales in different regions of vascular system; at the largest scale coupled through pulse wave from heart to arteries;
Objective of current project

Metacomputing: Nektar
• Understanding this kind of interaction is critical:
• Surgical intervention (e.g. bypass grafts) alters wave reflections
• Modification of local wave influences wall stress
• Hybrid approach:
• 3D detailed CFD computed at arterial bifurcations;
• Waveform coupling between bifurcations modelled with a reduced set of 1D equations (sectional area, velocity and pressure information).
• Computational challenges: Enormous problem size and memory requirement
• 55 largest arteries in human body, 27 bifurcations; Memory consumption ca. 7 TB for full resolution
• Does not fit into any single supercomputer available to open research community.
Solution: Grid computing using ensembles of supercomputers on the TeraGrid and UK machines

Simulation/Performance Results
Figure labels: NCSA, SDSC, PSC, TACC

Simulating & Visualizing Human Arterial Tree
Figure labels: USA; Computation; Visualization; ANL; Viz servers; SC05, Seattle, WA; UK; Viewer client; Flow data (link labels)

Metacomputing: Vortonics
•Physical challenges: Reconnection & Dynamos
•Vortical reconnection governs establishment of steady-state in Navier-Stokes turbulence
•And others..
•Mathematical challenges:
•Identification of vortex cores, and discovery of new topological invariants associated with them
•Discovery of new and improved analytical solutions of Navier-Stokes equations for interacting vortices
•Computational challenges: Enormous problem sizes, memory requirements, and long run times
•Algorithmic complexity scales as cube of Re
•Substantial postprocessing for core identification
•Largest present runs and most future runs require geographically distributed domain decomposition (GD3)
•Is GD3 on Grids a sensible approach?

Run Sizes to Date / Performance
•Multiple Relaxation Time Lattice Boltzmann (MRTLB) model for Navier-Stokes equations
•600,000 SUPS/processor when run on one multiprocessor
•3D lattice sizes up to 2×10^9 through SC05 across six sites on TG/NGS
•NCSA, SDSC, ANL, TACC, PSC, CSAR (UK)
•GBs of data injected into network at each timestep. Strongly bandwidth limited.
•Effective SUPS/processor
•Reduced by factor approximately equal to number of sites
•Therefore SUPS approximately constant as problem grows in size

sites   kSUPS/Proc
1       600
2       300
4       149
6       75

Discussion / Performance Metric
• We are aiming for lattice sizes that cannot reside at one Supercomputer Center.
• Bell et al., “Generate versus Transfer?”
• If data can be regenerated locally, don’t send it over the grid! (10^5 ops/byte)
• Thought experiment:
• Enormous lattice, local to SC Centre, by swapping n portions to disk.
• If we cannot exceed this performance, not worth using the Grid for GD3
• Make the very optimistic assumption that disk access time is not limiting
• Clearly total SUPS constant, since it is one single multiprocessor
• Therefore SUPS/processor degrades by 1/n, so SUPS is constant
• We can do that now. That is the scaling that we see now. GD3 is a win!
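The scaling argument above can be checked against the reported kSUPS/Proc figures with a few lines of arithmetic. This sketch compares each reported value with the idealised 600/n model from the thought experiment. The names `ideal_ksups_per_proc` and `slowdown_factor` are illustrative, and the only data used are the four site counts and rates quoted above.

```python
SINGLE_SITE_KSUPS = 600.0
# Reported kSUPS per processor, keyed by number of sites.
MEASURED = {1: 600.0, 2: 300.0, 4: 149.0, 6: 75.0}


def ideal_ksups_per_proc(n_sites: int) -> float:
    """Per-processor rate if it degrades exactly as 1/n."""
    return SINGLE_SITE_KSUPS / n_sites


def slowdown_factor(n_sites: int) -> float:
    """Observed slowdown relative to the single-site rate."""
    return SINGLE_SITE_KSUPS / MEASURED[n_sites]


for n, measured in MEASURED.items():
    print(f"{n} site(s): measured {measured:5.0f}, "
          f"1/n model {ideal_ksups_per_proc(n):5.0f}, "
          f"slowdown x{slowdown_factor(n):.2f}")
```

With these numbers the 1/n model matches the reported values closely up to four sites, while the six-site entry corresponds to a slowdown of 8 rather than 6. The slides do not say what accounts for the difference.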
• And things should only get better…
• Improvements in store:
• UDP with added reliability (UDT) in MPICH-G2 will improve bandwidth
• Multithreading in MPICH-G2 will overlap communication and computation to hide latency and bulk data transfers. Better programming model…

Metacomputing: LB3D
•Lattice Boltzmann Simulation of the Gyroid Mesophase
•Physical challenges:
•Self-assembly and defect dynamics of ternary amphiphilic mixtures
•Rheology of self-assembled mesophases
•Computational challenges: Enormous problem sizes, memory requirements, and long run times
•Large lattice sizes exceed memory available on a single supercomputer
•MPICH-G2 enabled LB3D for geographically distributed domain decomposition (GD3)
•Computational steering to identify parameter sets corresponding to system states of interest, e.g., with multiple gyroidal domains
•Transfer check-points for job migration and analysis between sites. Data-sets are of order 10 GB. During the TeraGyroid experiment (2003/2004), systems with 1024^3 lattice sites had checkpoint files of ~0.5 TB on PSC.
•Requirement for high-bandwidth, dedicated networks like UKLight

Materials Science: Clay-Polymer Nanocomposites
• Above image: 10M atom system with z axis expanded and aspect ratio adjusted for easier viewing
• Only possible to analyze the system efficiently with parallel rendering software
• Large data sets requiring visualization; powerful machine required

Real-time Visualisation of LAMMPS over UKLight
• Data from LAMMPS is streamed from supercomputer to visualisation resource/desktop
• Testing the connection
• Collect time taken to receive data for model sizes up to 10 million atoms.
• Network emulator NIST Net is used to emulate production network on UKLight connection
• Latency and packet loss can be controlled using NIST Net to make an accurate comparison of performance.
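The measurement described above, timing how long it takes to receive a frame of a given model size, could be sketched as follows. This is not the code used with LAMMPS: a local socket pair stands in for the UKLight link, the payload is zero-filled, and 12 bytes per atom (three 32-bit coordinates) is an assumption. The names `send_frame` and `time_receive` are illustrative.

```python
import socket
import threading
import time

BYTES_PER_ATOM = 12  # assumption: three float32 coordinates per atom


def send_frame(sock: socket.socket, n_atoms: int) -> None:
    """Send one zero-filled frame, then close to signal end of data."""
    try:
        sock.sendall(bytes(n_atoms * BYTES_PER_ATOM))
    finally:
        sock.close()


def time_receive(n_atoms: int) -> tuple[int, float]:
    """Return (bytes received, seconds elapsed) for one frame."""
    sender, receiver = socket.socketpair()
    worker = threading.Thread(target=send_frame, args=(sender, n_atoms))
    start = time.perf_counter()
    worker.start()
    received = 0
    while True:
        chunk = receiver.recv(1 << 16)
        if not chunk:          # empty read: sender has closed
            break
        received += len(chunk)
    elapsed = time.perf_counter() - start
    worker.join()
    receiver.close()
    return received, elapsed


for atoms in (10_000, 100_000, 1_000_000):
    size, seconds = time_receive(atoms)
    print(f"{atoms:>9} atoms: {size / 1e6:6.2f} MB in {seconds * 1e3:7.2f} ms")
```

On a real link the same loop would run against a TCP connection to the simulation host, with the emulator's latency and loss settings varied between runs.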
Application Hosting Environment
• V1.0.0 released 31 March 2006; in OMII distribution by SC06
• Designed to provide scientists with application specific services to utilize grid resources in a transparent manner
• Exposes applications as Web Services
• Operates unintrusively over multiple administrative domains
• Lightweight hosting environment for running unmodified “legacy” applications (e.g. NAMD, LB3D, LAMMPS, DL_POLY, CASTEP, VASP) on grid resources: NGS, TeraGrid, DEISA …
• Planned: Host Meta-computing Applications and Coupled Model Applications
• Planned: Interface the AHE client with HARC resource co-scheduler and ESLEA Control Plane Software to co-schedule network, compute and visualization resources.
J. Maclaren and M. McKeown, HARC: A Highly-Available Robust Co-scheduler, submitted to HPDC and AHM 06.

Architecture of the AHE

Global Grid Infrastructure
Figure labels: UK NGS (Leeds, Manchester, Oxford, RAL); HPCx; US TeraGrid (SDSC, NCSA, PSC); Starlight (Chicago); Netherlight (Amsterdam); UKLight; DEISA; AHE. Legend: Computation, Network PoP, Visualization.
All sites connected by production network
Resource Co-allocation with HARC and ESLEA Control Plane Software

CONCLUSIONS
Benefits of UKLight in computational science
- Fixed latency, no jitter
- High bandwidth for data transfers
- QoS: Certainty of having what you need when you need it (albeit not scalable today)
- Provide virtual networks a la UCLP
Problems
- Necessary but not sufficient
- Policies and configurations of machines not designed to accommodate grid computing

Acknowledgements
• RealityGrid & RealityGrid Platform Grants (GR/R67699)
• ESLEA Grant (GR/T04465)
• Colin Greenwood
• Peter Clarke
• Nicola Pezzi
• Matt Harvery