Tutorial 2: Client Java tutorial


This document is designed to provide a tutorial for using the OMII_1 Client Distribution’s Java

API to write programs that run an application installed on an OMII_1 service provider.

This tutorial is targeted at developers with a basic to intermediate level of Java expertise, who

are familiar with running basic jobs from the OMII_1 Client Distribution using the command

line. It is assumed that developers have overall knowledge of the OMII_1 architecture,

including each of the four services provided by the OMII_1 service provider.

Example 1: Single Job

Example 2: Two Jobs

Example 3: n Parallel Jobs

Appendix A: Single Job - SmallApp.java

Appendix B: Two Sequential Jobs - SmallAppSeq2.java

Appendix C: Two Parallel Jobs - SmallAppPar2.java

Appendix D: n Parallel Jobs - SmallAppParN.java

Appendix E: OMIIUtilities.java

Appendix F: OMII Compilation and Execution Scripts

Appendix G: OMII Work-SmallApp.xml

Equivalent examples in Globus for Tutorial 2 can be found here.

Document Scope

This tutorial will focus on the client-side aspects of using Java to implement simple, typical

grid workflow models to drive the execution of jobs on a service provider. It will deal with how

to use the client API to utilise the four services contained within a service provider installation

to facilitate obtaining an allocation, uploading input data, executing a number of jobs, and

downloading output data.

Pre-requisites

The prerequisites for the tutorial are:

1. The installation of an OMII_1 Client (Windows or Linux). This will be used as a platform to

submit jobs from.

2. Access to an OMII_1 Server which has the OMII Base, Extension and Services installed.

● An open, credit-checked account on the OMII_1 Server. (See Creating a new account).

● A working and tested GRIATestApp application (installed by default as part of the OMII_1 services installation). See How to install the OMII Services.

Scenario Background

The simple scenario used by this tutorial is one that represents a class of problem that is

commonly solved using grid software: a user has a set of data that he wants processed by an

application, and he wishes to use the computational and data resources provided by another

user on another machine to expedite this process. This may involve running the application

many times over this data to produce output for each element in the data set.

Using an OMII_1 approach, this process involves the following steps, described in a task-oriented form:

1. Ensure application is installed on the service provider: Since the OMII_1

Distribution uses a static application model the user needs to ensure that the

application they wish to use is installed on the service provider.

2. Obtain account on the service provider: This is required before anything can be

done by the client on the server.

3. Obtain resource allocation: This involves determining the requirements of the

entire process and submitting them as a request for resources to the service provider.

If approved, an allocation is sent back to the client that provides a context within which

applications can be run.

4. Upload input data: Data to be processed by the application is uploaded to input

data stager area(s) on the server.

5. Execute the application on the input data: Execute the application on each

element in the input data (held in the input data stager areas). Output is held in output

data stager area(s). A single run of an application on a set of input data that produces

output data is referred to as a job.

6. Download the output produced by the application: Data produced by the

application from the input data is downloaded from the output data stager areas on the

server.

7. Finish the allocation: The client informs the server that they no longer require the

allocation.

Note that the first step is a manual one achieved by interaction between the client user and a

service provider administrator, who must inspect and approve the application before installing

it on their server. The second step is initiated using the OMII_1 Client software as a request

to a service provider who must approve, and possibly credit check, the user’s request. Upon

success of the request, the user receives an account URI that they can use to access the

functionality provided by the server itself. Taking the nature of steps 1-2 into account, it is

steps 3-7 that we can automate into a Java workflow.

An interesting point to note is that when a job is submitted to the service provider, the job may

be run on the machine local to the service provider or it may be redistributed to a cluster

manager (e.g. PBS, Condor), depending on how the service-provider is configured. You will

have no control over which platform your job is running on. The benefit of this is that you will

not have to worry about writing different submission scripts for jobs running on different

cluster resource manager platforms.

Example Implementations

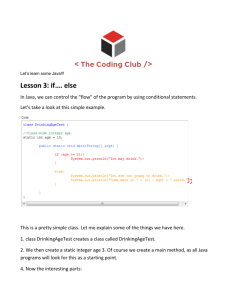

This section will look at how to write Java clients that use the OMII_1 Client API to run jobs,

and how to set up an environment in which to compile and run those clients. This section will

make reference to each of the Appendices.

The examples shown in this tutorial use the GRIATestApp application preinstalled in any

standard installation of the OMII_1 Server. The GRIATestApp is an application implemented

in Perl which inputs one or more text files, sorts the words in each file into alphabetical order

and outputs the same number of text files. On the service provider, the application wrapper

script for the GRIATestApp accepts input and produces output in zipped file format.
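The examples in this tutorial read their input from a local file named input.zip (or input<n>.zip). As a minimal sketch of how such a file might be prepared, the following uses only the standard java.util.zip classes. The class name MakeInputZip, the entry name words.txt and the sample text are illustrative assumptions and not part of the OMII_1 Client Distribution; the exact archive layout the wrapper script expects is not specified here.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

// Hypothetical helper, not part of the OMII Client: packs one text file into a zip.
class MakeInputZip {

    // Writes a zip archive with a single entry holding the given text.
    static void writeZip(OutputStream out, String entryName, String text) throws IOException {
        try (ZipOutputStream zip = new ZipOutputStream(out)) {
            zip.putNextEntry(new ZipEntry(entryName));
            zip.write(text.getBytes(StandardCharsets.UTF_8));
            zip.closeEntry();
        }
    }

    // Convenience wrapper that returns the archive as a byte array.
    static byte[] zipBytes(String entryName, String text) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        writeZip(buffer, entryName, text);
        return buffer.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        // Assumed sample content: a few unsorted words for the application to sort
        byte[] archive = zipBytes("words.txt", "pear apple mango banana\n");
        Files.write(Paths.get("input.zip"), archive);
        System.out.println("Wrote input.zip (" + archive.length + " bytes)");
    }
}
```

Running this in the tutorial directory leaves an input.zip alongside the example classes, which is where the examples look for it.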

Environment - Setting Up

Perform the following steps to set up a development environment for this tutorial:

● In the OMII Client install directory (e.g. /home/user1/OMIICLIENT) create a subdirectory, for example, tutorial.

● Copy the Java examples given in Appendices A-D into this directory under their respective file names.

● Copy the Work-SmallApp.xml file given in Appendix G into the tutorial directory.

● Depending on whether the client platform is Linux or Windows, copy the appropriate CompileEx and RunEx scripts (held in Appendix F) into the tutorial directory.

Compilation

For these tutorials, a script like CompileEx can be used to compile the examples. Windows

and Linux versions of this script are given in Appendix F. For example, on Linux you can use

it to compile the first example using:

$ ./CompileEx.sh SmallApp

You do not need to specify the .java suffix for the Java source file.

Execution

Running the compiled examples can be done using a script like RunEx, which can also be

found in Appendix F for Windows and Linux. For example, on Linux, to run the first example

(note no .class suffix):

$ ./RunEx.sh SmallApp

The RunEx script uses a Java class installed as part of the OMII Client installation

(CreateRequirementsFile.class) that automatically generates an OMII Client Requirements

XML file. For more details about this class, see the OMII Client Command Line tutorial.

Example 1: Single Job

Preparation

Firstly, we need to define the overall structure for our class. For conciseness, a few simple

convenience functions that perform simple XML and allocation handling have been delegated

into a separate class, OMIIUtilities.java, which is shown in Appendix E. These functions will

be explained as they are encountered in this tutorial. Our example class, SmallApp, extends

this class to be able to use these functions. The entire source for this class can be found in

Appendix A:

class SmallApp extends OMIIUtilities {

public static StateRepository repository = null;

public static final String serviceProviderAccount =

"http://<host>:<port>/axis/services/AccountService#<account_conv_id>";

...

}

The serviceProviderAccount constant should be changed to reflect the open account on a

service provider you wish the client to use for this tutorial.

The class first defines a StateRepository variable, which will hold open account conversations and the conversational state of an allocation and its associated job and data conversations. The serviceProviderAccount constant holds the account this example will use; the value for an open, credit-checked account that the client has with the service provider can be taken from an OMII Client Accounts.xml file.

Next, we define the main() function within this class.

public static void main(String[] args) throws IOException, GRIARemoteException,

UserAbort, NoSuchConversation, InterruptedException {

...

}

Note that we declare the exceptions that may be thrown within this function. Ideally, each of these exceptions would be caught and handled at the appropriate point within the code, but they have been relegated to a ‘throws’ clause on main() for the sake of brevity. If this throws clause is omitted, the compiler will report the points in the code where these exceptions should be caught.

Within main, some initial preparation is necessary in order for us to tender a set of

requirements. The main() function first calls setupLogging(), a function inherited from the OMIIUtilities class, which simply initializes logging for both Java’s LogManager and log4j. The more important configuration is that of log4j, whose properties can be changed in the OMII Client’s conf/ subdirectory if required for debugging purposes.

Initialising the Repository and Validating Accounts

The client workflow can now be implemented. The first part of this workflow creates our

StateRepository; in this case we will use a MemoryStateRepository which stores all

allocation conversation state non-persistently in memory. The account held in

serviceProviderAccount is then imported into this repository:

repository = new MemoryStateRepository();

repository.importAccountConversation(new URL(serviceProviderAccount), "Test");

AccountConversation[] accounts = repository.getAccountConversations();

We could import many accounts here if we wished. As an alternative, we could have used a

FileStateRepository here for persistent storage of conversation state. For example:

repository = new FileStateRepository("<repository_filename>.state");

Test is a local client reference that we can use to refer to this account, but since this Java

client does not need to refer to this account offline, we do not need to use it. To ensure that

the account is contactable on the server, we do the following:

for (int i = 0; i < accounts.length; i++)

accounts[i].getAccountStatus();

This loop checks all client accounts that exist within the repository by asking the server’s

Account Service for the status of each account (since a repository can hold multiple

accounts). If an account is not open or is in some way inaccessible, this check will cause our

client to fail.

Obtaining an Allocation

Now that we have initialized logging and have a repository with a valid account, we can

tender our requirements to our service provider that holds that account. The requirements

are held in a standard OMII Client Requirements file, which is imported into a

ResourceAllocationType using the OMIIUtilities functions xmlParse() (to load the file

into the XML Document Object Model format), and getRequirements() (that takes this

XML Document and deserialises it into a ResourceAllocationType). The

getRequirements() function also syntactically validates the XML file and throws a

RuntimeException if not valid:

ResourceAllocationType requirements =

getRequirements(xmlParse("Requirements-SmallApp.xml"));

The Requirements-SmallApp.xml is created automatically by the RunEx script before the Java

client is run.

Next, we use the OMIIUtilities function getAllocation() to send our requirements to all

service providers represented by accounts in the repository (in our case only one), and return

an allocation:

AllocationConversation allocation =

getAllocation(repository, requirements, "SmallAppTest");

SmallAppTest represents the client’s local allocation name, although since we have direct

access to the allocation via its AllocationConversation, we do not need to use it to

reference this allocation.

The function getAllocation() invokes an OMII Client helper that in general performs the

following tasks:

● Sends requirements to the Resource Allocation Service of all service providers represented by accounts held in repository.

● Receives offers from those service providers who make one.

● Displays offers to the user in a graphical user interface, allowing the user to select the offer that best meets their requirements.

● Confirms the selected offer with the service provider and receives an allocation in return.

● Returns the allocation to the client Java application as an AllocationConversation.

The client can now use this returned AllocationConversation to create data stage areas

and jobs.

Initialise Data Stager Areas & Upload Input Data

Next, we need to upload our input data. Before we can do this, we need to initialize a data

stage area on the server to hold it. Also, following program execution, we need to download

the output data which will also be held in a data stage area. The locations for input and

output staging are required by a job when it is started, so we need to define both before we

run the job:

DataConversation DSinput = allocation.newData("input.zip");

DataConversation DSoutput = allocation.newData("output.zip");

The names ‘input.zip’ and ‘output.zip’ are local names we will use on the client to refer to

these areas later. We use the same name as the files they represent for clarity. Now we

upload the input data to the Data Service for storage:

DataHandler handler = new DataHandler(new FileDataSource("input.zip"));

DSinput.save(handler);

Execute the Application on the Input Data

The next stage involves initializing a new job, then running it. We previously obtained our

allocation by sending a list of overall job requirements to the service provider for tendering.

Now, we need to inform the service provider of the requirements for an individual job, within

the constraints of the overall list of allocation limits. These are held locally in a Work

requirements XML file which is parsed, and marshalled into a JobSpecType:

JobSpecType work = getJobSpec(xmlParse("Work-SmallApp.xml"));

It should be noted that the requirements of all individual jobs cannot exceed the overall limits

for the allocation. Therefore the following must be true:

Number_of_jobs * Work_Requirements <= Obtained_Allocation_Limits
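This inequality can be checked on the client before any job is started. The following is a minimal sketch that assumes a requirement can be reduced to a single numeric quantity (for example, CPU seconds); a real Requirements or Work file holds several quantities, and each would need the same check. AllocationBudget and fits are hypothetical names, not part of the OMII Client API.

```java
// Hypothetical helper, not part of the OMII Client API.
class AllocationBudget {

    // True when numberOfJobs jobs, each needing perJob units, fit within limit units.
    static boolean fits(int numberOfJobs, long perJob, long limit) {
        if (numberOfJobs < 0 || perJob < 0 || limit < 0) {
            throw new IllegalArgumentException("quantities must be non-negative");
        }
        // multiplyExact throws ArithmeticException instead of silently overflowing
        return Math.multiplyExact((long) numberOfJobs, perJob) <= limit;
    }

    public static void main(String[] args) {
        // Assumed figures: each job needs 300 units, the allocation grants 600
        System.out.println(fits(2, 300, 600)); // prints "true"
        System.out.println(fits(3, 300, 600)); // prints "false"
    }
}
```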

Next, we initialize the job within our allocation with the application we wish to run and a local

name as arguments:

JobConversation JSjob = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

Again, SmallTestApp is a local client reference that we do not need, since in this case we

have direct access to the job conversation.

A job conversation is returned, which we can use to start the job and monitor its progress

later. We now start the job, passing the input and output data conversations we created

earlier (as arrays, since there may be multiple inputs and outputs) and the job’s requirements

to the service provider:

JSjob.startJob(work, new DataConversation[] { DSinput },

new DataConversation[] { DSoutput });

On the service provider, the input is staged to the job, and the application run. We then

simply wait for the job to finish by using the job conversation to check whether it is active:

while (JSjob.stillActive())

System.out.print(".");
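The loop above re-checks the job as fast as the client can call stillActive(). Whether that call itself blocks or throttles is not stated here, so the following is only a sketch of one possible refinement: pausing between checks. A java.util.function.BooleanSupplier stands in for the job conversation, and JobPoller, waitUntilDone and the pause length are hypothetical names and values, not part of the OMII Client API.

```java
import java.util.function.BooleanSupplier;

// Hypothetical helper, not part of the OMII Client API.
class JobPoller {

    // Calls stillActive until it returns false, sleeping between checks.
    // Returns the number of checks that reported the job as active.
    static int waitUntilDone(BooleanSupplier stillActive, long pauseMillis)
            throws InterruptedException {
        int activeChecks = 0;
        while (stillActive.getAsBoolean()) {
            activeChecks++;
            System.out.print(".");
            Thread.sleep(pauseMillis);
        }
        System.out.println();
        return activeChecks;
    }

    public static void main(String[] args) throws InterruptedException {
        // Stand-in for a job that reports active for its first three checks
        int[] remaining = {3};
        int checks = waitUntilDone(() -> remaining[0]-- > 0, 10);
        System.out.println("Job finished after " + checks + " active checks");
    }
}
```

In a real client the supplier would wrap the call to stillActive(); if that method declares checked exceptions, a small custom functional interface that declares them would be needed in place of BooleanSupplier.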

Download the Output Produced by the Application

Upon completion, the job stages its output to the output stage area we defined earlier.

Downloading this output is simply accomplished by the following:

System.out.println("\nDownloading results (output.zip)");

DSoutput.read(new File("output.zip"));

We request the output from the Data Service via the output stage area data conversation.

Finish the Allocation

After we have run all required jobs and downloaded the results, we can signal the service

provider that we no longer require our allocation and all the associated resources we

acquired. This is accomplished as follows:

Conversation[] children = allocation.getChildConversations();

for (int i = 0; i < children.length; i++)

children[i].finish();

allocation.finishResourceAllocation();

As mentioned previously, the state of our entire allocation is held in a client repository in the

form of client-server conversations. This ‘clean-up’ process effectively cycles through each

conversation entry in this repository associated with our allocation, ‘finishing’ each one and

removing it from the repository before finally finishing our allocation. Note that the account

conversation, of which the allocation is a child node, remains.
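Because the example declares its exceptions on main(), an exception thrown part-way through the workflow would skip this clean-up. One possible arrangement, sketched below with stand-in types, is to place the clean-up in a finally block. Finishable, CleanupSketch and runThenCleanUp are hypothetical and only model the finish step; they are not OMII Client classes.

```java
import java.util.ArrayList;
import java.util.List;

// Stand-in for an OMII conversation; only the finish step is modelled here.
interface Finishable {
    void finish();
}

// Hypothetical sketch, not part of the OMII Client API.
class CleanupSketch {

    // Runs the workflow, then finishes every child and finally the allocation,
    // even when the workflow throws.
    static void runThenCleanUp(Runnable workflow, List<Finishable> children,
                               Finishable allocation) {
        try {
            workflow.run();
        } finally {
            for (Finishable child : children) {
                child.finish();
            }
            allocation.finish();
        }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        List<Finishable> children = new ArrayList<>();
        children.add(() -> log.add("data finished"));
        children.add(() -> log.add("job finished"));
        try {
            // Simulated failure in the middle of the workflow
            runThenCleanUp(() -> { throw new IllegalStateException("job failed"); },
                           children, () -> log.add("allocation finished"));
        } catch (IllegalStateException e) {
            log.add("caught: " + e.getMessage());
        }
        // prints [data finished, job finished, allocation finished, caught: job failed]
        System.out.println(log);
    }
}
```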

For reference, the Globus equivalent for running a single job using the Globus Java CoG

(Community Grids) Kit is given here.

Example 2: Two Jobs

Managing two jobs is much the same, but from a workflow perspective, can be accomplished

in one of two ways: running them sequentially or in parallel. For this section, we will focus on

the sequential execution of two jobs.

As before, the initial stages of creating a repository, accessing our account(s), tendering for

offers and accepting an allocation are the same. However, since we are running more than

one job, we would normally need to ensure that our list of requirements (in our client

Requirements XML file) correctly reflects our increased needs. In this case, however, the

Requirements file generated by our RunEx.sh script (Appendix F) is sufficient to run all

examples in this tutorial.

This sequential example will use the output from the first job as the input to the second (see

Appendix B). Again, the serviceProviderAccount constant must be changed to reflect

an open service provider account. Compared to the previous example, we need an additional

data stager to hold this intermediate output/input data, and we need to start a second job after

the first has finished. The data stagers, therefore, will be created thus:

DataConversation DSinput1 = allocation.newData("input1.zip");

DataConversation DSoutput1 = allocation.newData("output1.zip");

DataConversation DSoutput2 = allocation.newData("output2.zip");

The first job is initialized, started and checked for completion exactly as before:

JobConversation JSjob1 = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

JSjob1.startJob(work, new DataConversation[] { DSinput1 },

new DataConversation[] { DSoutput1 });

while (JSjob1.stillActive())

System.out.print(".");

The second job is set up and checked in a similar way, but simply uses DSoutput1 as the

input to the job:

JobConversation JSjob2 = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

JSjob2.startJob(work, new DataConversation[] { DSoutput1 },

new DataConversation[] { DSoutput2 });

while (JSjob2.stillActive())

System.out.print(".");

Note that we explicitly wait for the first job to finish before beginning the second: we cannot

simply depend on the OMII server to begin the second job when its input is ready from the

first job. Also, optionally this script could be made more efficient by moving the initialization of

the second job (the second newJob call) to immediately after the first job’s startJob. This

would mean that the second job is being initialized while the first job is running. However, if

the first job were to fail this would leave an initialized job unused.

Following the second job, the output can be downloaded and the allocation finished as before.

For reference, the Globus equivalent for running two sequential jobs using the Globus Java

CoG (Community Grids) Kit is given here.

Example 3: n Parallel Jobs

Handling multiple jobs in parallel is again similar to the first example (see Appendix D; Appendix C gives a fixed two-job version).

Again, the serviceProviderAccount constant must be changed to reflect an open

service provider account. We first initialise the input and output stagers and hold their

conversations in arrays. Assuming NUM_JOBS is a constant referring to the desired number

of jobs:

DataConversation[] DSinput = new DataConversation[NUM_JOBS];

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

DSinput[inCount] = allocation.newData("input" + String.valueOf(inCount+1) + ".zip");

}

DataConversation[] DSoutput = new DataConversation[NUM_JOBS];

for (int outCount = 0; outCount < NUM_JOBS; outCount++) {

DSoutput[outCount] = allocation.newData("output" + String.valueOf(outCount+1)

+ ".zip");

}

Each data stager is given a unique local name – input<n>.zip or output<n>.zip, depending on

its intended use. We use filenames as the local name, since it reflects the nature of the data,

but the name could be anything. Then, assuming input files input<1..NUM_JOBS>.zip exist

they are uploaded into the input stagers:

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

DataHandler handler = new DataHandler(new FileDataSource("input" +

String.valueOf(inCount+1) + ".zip"));

DSinput[inCount].save(handler);

}

Next, we initialise the jobs, and then start them in parallel, passing each job their

corresponding input and output stager areas:

JobConversation[] JSjob = new JobConversation[NUM_JOBS];

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

JSjob[inCount] = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

}

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

JSjob[inCount].startJob(work, new DataConversation[] { DSinput[inCount] },

new DataConversation[] { DSoutput[inCount] });

}

Another approach would have been to initialise and start each job within a single loop; we

separate each part of the process for clarity.

At this point, all the jobs are executing. We then check each job until there are no longer any jobs still running:

boolean stillRunningJob;

do {

stillRunningJob = false;

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

if (JSjob[inCount].stillActive()) stillRunningJob = true;

}

} while (stillRunningJob);

Next, we just download the results:

for (int outCount = 0; outCount < NUM_JOBS; outCount++) {

System.out.println("Downloading results (output" + String.valueOf(outCount+1)

+ ".zip)");

DSoutput[outCount].read(new File("output" + String.valueOf(outCount+1) + ".zip"));

}

There are more efficient ways to achieve this. One way would be to monitor all jobs, and

when one is finished, download the results immediately. The results would then be available

client-side (hopefully) soon after they become available on the server. At the moment,

downloading of all results does not occur until the slowest job has completed.
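The alternative described above, downloading each result as soon as its job is seen to have finished, could be sketched as follows. The jobs and the download step are represented by stand-ins (BooleanSupplier and IntConsumer) so that the sketch runs without a service provider; EagerDownload, collect and the pause length are hypothetical and not part of the OMII Client API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;
import java.util.function.IntConsumer;

// Hypothetical sketch, not part of the OMII Client API.
class EagerDownload {

    // Polls every job; the first time a job is seen inactive, its result is
    // downloaded straight away. Returns the job indices in download order.
    static List<Integer> collect(BooleanSupplier[] stillActive, IntConsumer download,
                                 long pauseMillis) throws InterruptedException {
        boolean[] downloaded = new boolean[stillActive.length];
        List<Integer> order = new ArrayList<>();
        while (order.size() < stillActive.length) {
            for (int i = 0; i < stillActive.length; i++) {
                if (!downloaded[i] && !stillActive[i].getAsBoolean()) {
                    download.accept(i);
                    downloaded[i] = true;
                    order.add(i);
                }
            }
            if (order.size() < stillActive.length) {
                Thread.sleep(pauseMillis); // pause before the next round of checks
            }
        }
        return order;
    }

    public static void main(String[] args) throws InterruptedException {
        // Stand-ins: job 0 stays active for three checks, job 1 for one check
        int[] remaining = {3, 1};
        BooleanSupplier[] jobs = {
            () -> remaining[0]-- > 0,
            () -> remaining[1]-- > 0
        };
        List<Integer> order = collect(jobs,
                i -> System.out.println("Downloading output" + (i + 1) + ".zip"), 10);
        System.out.println("Download order: " + order); // prints "Download order: [1, 0]"
    }
}
```

Here the shorter job's output is fetched while the longer job is still running, rather than after it.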

Finally, we finish the allocation as before.

For reference, the Globus equivalent for running n parallel jobs using the Globus Java

CoG (Community Grids) Kit is given here.

Appendix A: Single Job - SmallApp.java

import java.io.*;
import java.net.*;
import javax.activation.*;
import org.gria.client.*;
import org.gria.client.helpers.*;
import uk.ac.soton.ecs.iam.gria.client.*;
import org.gria.serviceprovider.commontypes.messaging.*;

class SmallApp extends OMIIUtilities {

public static StateRepository repository = null;

public static final String serviceProviderAccount =

"http://<host>:<port>/axis/services/AccountService#<account_conv_id>";

public static void main(String[] args) throws IOException, GRIARemoteException,

UserAbort, NoSuchConversation, InterruptedException {

System.out.println("Small App - GRIATestApp Java demonstration");

System.out.println("\n-- Setting up logging --\n");

setupLogging();

System.out.println("\n-- Beginning GRIATestApp client demo --\n");

System.out.println("Creating memory (non persistent) repository");

repository = new MemoryStateRepository();

repository.importAccountConversation(new URL(serviceProviderAccount), "Test");

AccountConversation[] accounts = repository.getAccountConversations();

// Getting account status ensures a known client race condition does not occur

for (int i = 0; i < accounts.length; i++)

accounts[i].getAccountStatus();

System.out.println("Retrieving allocation requirements");

ResourceAllocationType requirements =

getRequirements(xmlParse("Requirements-SmallApp.xml"));

System.out.println("Tendering requirements to service providers\n");

AllocationConversation allocation = getAllocation(repository, requirements,

"SmallAppTest");

System.out.println("\nCreating input/output data staging areas");

DataConversation DSinput = allocation.newData("input.zip");

DataConversation DSoutput = allocation.newData("output.zip");

System.out.println("Uploading input data (input.zip)");

DataHandler handler = new DataHandler(new FileDataSource("input.zip"));

DSinput.save(handler);

System.out.println("Retrieving individual job requirements");

JobSpecType work = getJobSpec(xmlParse("Work-SmallApp.xml"));

System.out.println("Initialising job");

JobConversation JSjob = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

System.out.println("Starting job");

JSjob.startJob(work, new DataConversation[] { DSinput },

new DataConversation[] { DSoutput });

System.out.println("Waiting for job to finish");

while (JSjob.stillActive())

System.out.print(".");

System.out.println("\nDownloading results (output.zip)");

DSoutput.read(new File("output.zip"));

System.out.println("Cleaning up allocation");

Conversation[] children = allocation.getChildConversations();

for (int i = 0; i < children.length; i++)

children[i].finish();

allocation.finishResourceAllocation();

System.out.println("Small App complete");

System.exit(0); // Force us to exit, even if swing thread is still running

}

}

Appendix B: Two Sequential Jobs - SmallAppSeq2.java

import java.io.*;
import java.net.*;
import javax.activation.*;
import org.gria.client.*;
import org.gria.client.helpers.*;
import uk.ac.soton.ecs.iam.gria.client.*;
import org.gria.serviceprovider.commontypes.messaging.*;

class SmallAppSeq2 extends OMIIUtilities {

public static StateRepository repository = null;

public static final String serviceProviderAccount =

"http://<host>:<port>/axis/services/AccountService#<account_conv_id>";

public static void main(String[] args) throws IOException, GRIARemoteException,

UserAbort, NoSuchConversation, InterruptedException {

System.out.println("Small App - GRIATestApp Java demonstration");

System.out.println("\n-- Setting up logging --\n");

setupLogging();

System.out.println("\n-- Beginning GRIATestApp client demo --\n");

System.out.println("Creating memory (non persistent) repository");

repository = new MemoryStateRepository();

repository.importAccountConversation(new URL(serviceProviderAccount), "Test");

AccountConversation[] accounts = repository.getAccountConversations();

// Getting account status ensures a known client race condition does not occur

for (int i = 0; i < accounts.length; i++)

accounts[i].getAccountStatus();

System.out.println("Retrieving allocation requirements");

ResourceAllocationType requirements =

getRequirements(xmlParse("Requirements-SmallApp.xml"));

System.out.println("Tendering requirements to service providers\n");

AllocationConversation allocation = getAllocation(repository, requirements,

"SmallAppTest");

System.out.println("\nCreating input/output data staging areas");

DataConversation DSinput1 = allocation.newData("input1.zip");

DataConversation DSoutput1 = allocation.newData("output1.zip");

DataConversation DSoutput2 = allocation.newData("output2.zip");

System.out.println("Uploading input data (inputn.zip)");

DataHandler handler1 = new DataHandler(new FileDataSource("input1.zip"));

DSinput1.save(handler1);

System.out.println("Retrieving individual job requirements");

JobSpecType work = getJobSpec(xmlParse("Work-SmallApp.xml"));

System.out.println("Initialising job 1");

JobConversation JSjob1 = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

System.out.println("Starting job 1");

JSjob1.startJob(work, new DataConversation[] { DSinput1 },

new DataConversation[] { DSoutput1 });

System.out.println("Waiting for job 1 to finish");

while (JSjob1.stillActive())

System.out.print(".");

System.out.println("\nInitialising job 2");

JobConversation JSjob2 = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

System.out.println("Starting job 2");

JSjob2.startJob(work, new DataConversation[] { DSoutput1 },

new DataConversation[] { DSoutput2 });

System.out.println("Waiting for job 2 to finish");

while (JSjob2.stillActive())

System.out.print(".");

System.out.println("\nDownloading results (output2.zip)");

DSoutput2.read(new File("output2.zip"));

System.out.println("Cleaning up allocation");

Conversation[] children = allocation.getChildConversations();

for (int i = 0; i < children.length; i++)

children[i].finish();

allocation.finishResourceAllocation();

System.out.println("Small App complete");

System.exit(0); // Force us to exit, even if swing thread is still running

}

}

Appendix C: Two Parallel Jobs - SmallAppPar2.java

import java.io.*;
import java.net.*;
import javax.activation.*;
import org.gria.client.*;
import org.gria.client.helpers.*;
import uk.ac.soton.ecs.iam.gria.client.*;
import org.gria.serviceprovider.commontypes.messaging.*;

class SmallAppPar2 extends OMIIUtilities {

public static StateRepository repository = null;

public static final String serviceProviderAccount =

"http://<host>:<port>/axis/services/AccountService#<account_conv_id>";

public static void main(String[] args) throws IOException, GRIARemoteException,

UserAbort, NoSuchConversation, InterruptedException {

System.out.println("Small App - GRIATestApp Java demonstration");

System.out.println("\n-- Setting up logging --\n");

setupLogging();

System.out.println("\n-- Beginning GRIATestApp client demo --\n");

System.out.println("Creating memory (non persistent) repository");

repository = new MemoryStateRepository();

repository.importAccountConversation(new URL(serviceProviderAccount),

"Test");

AccountConversation[] accounts = repository.getAccountConversations();

// Getting account status ensures a known client race condition does not occur

for (int i = 0; i < accounts.length; i++)

accounts[i].getAccountStatus();

System.out.println("Retrieving allocation requirements");

ResourceAllocationType requirements =

getRequirements(xmlParse("Requirements-SmallApp.xml"));

System.out.println("Tendering requirements to service providers\n");

AllocationConversation allocation = getAllocation(repository,

requirements,

"SmallAppTest");

System.out.println("\nCreating input/output data staging areas");

DataConversation DSinput1 = allocation.newData("input1.zip");

DataConversation DSinput2 = allocation.newData("input2.zip");

DataConversation DSoutput1 = allocation.newData("output1.zip");

DataConversation DSoutput2 = allocation.newData("output2.zip");

System.out.println("Uploading input data (inputn.zip)");

DataHandler handler1 = new DataHandler(new FileDataSource("input1.zip"));

DSinput1.save(handler1);

DataHandler handler2 = new DataHandler(new FileDataSource("input2.zip"));

DSinput2.save(handler2);

System.out.println("Retrieving individual job requirements");

JobSpecType work = getJobSpec(xmlParse("Work-SmallApp.xml"));

System.out.println("Initialising job");

JobConversation JSjob1 = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

JobConversation JSjob2 = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

System.out.println("Starting jobs");

JSjob1.startJob(work, new DataConversation[] { DSinput1 },

new DataConversation[] { DSoutput1 });

JSjob2.startJob(work, new DataConversation[] { DSinput2 },

new DataConversation[] { DSoutput2 });

System.out.println("Waiting for jobs to finish");

while (JSjob1.stillActive() || JSjob2.stillActive())

System.out.print(".");

System.out.println("\nDownloading results (outputn.zip)");

DSoutput1.read(new File("output1.zip"));

DSoutput2.read(new File("output2.zip"));

System.out.println("Cleaning up allocation");

Conversation[] children = allocation.getChildConversations();

for (int i = 0; i < children.length; i++)

children[i].finish();

allocation.finishResourceAllocation();

System.out.println("Small App complete");

System.exit(0); // Force us to exit, even if the Swing thread is still running

}

}
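
The wait loop in the listing above queries both jobs and prints a dot on every pass. The source does not say whether stillActive() pauses internally; if it returns immediately, the loop polls the service as fast as it can. The following is a minimal sketch of the same wait with a pause between rounds. It is not part of the OMII client API: BooleanSupplier is a stand-in for a call such as JSjob1.stillActive(), and PollingSketch, waitForAll and fakeJob are hypothetical names used only for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.BooleanSupplier;

// Hypothetical sketch: BooleanSupplier stands in for a status call such as
// JSjob1.stillActive(); none of these names belong to the OMII/GRIA client API.
class PollingSketch {

    // Polls until no job reports itself active, pausing between rounds.
    // Returns the number of polling rounds performed. If the thread is
    // interrupted while pausing, the wait stops early.
    static int waitForAll(List<BooleanSupplier> jobs, long pauseMillis) {
        int rounds = 0;
        boolean anyActive;
        do {
            anyActive = false;
            for (BooleanSupplier job : jobs) {
                // Query every job in every round, as the listings do
                if (job.getAsBoolean()) anyActive = true;
            }
            rounds++;
            if (anyActive) {
                System.out.print(".");
                try {
                    Thread.sleep(pauseMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // restore the flag
                    return rounds;
                }
            }
        } while (anyActive);
        System.out.println();
        return rounds;
    }

    // Simulated job that reports "active" for a fixed number of status checks
    static BooleanSupplier fakeJob(int activeChecks) {
        int[] remaining = { activeChecks };
        return () -> remaining[0]-- > 0;
    }

    public static void main(String[] args) {
        List<BooleanSupplier> jobs = Arrays.asList(fakeJob(2), fakeJob(4));
        System.out.println("Polling rounds: " + waitForAll(jobs, 10));
    }
}
```

With the two simulated jobs above, the loop runs five rounds: four in which at least one job is still active, and a final round in which both report inactive. A suitable pause for a real service provider is a judgement call and is not specified by the source.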

Appendix D: n Parallel Jobs - SmallAppParN.java

import java.io.*;

import java.net.*;

import javax.activation.*;

import org.gria.client.*;

import org.gria.client.helpers.*;

import uk.ac.soton.ecs.iam.gria.client.*;

import org.gria.serviceprovider.commontypes.messaging.*;

class SmallAppParN extends OMIIUtilities {

public static StateRepository repository = null;

public static final int NUM_JOBS = 2;

public static final String serviceProviderAccount =

"http://<host>:<port>/axis/services/AccountService#<account_conv_id>";

public static void main(String[] args) throws IOException, GRIARemoteException,

UserAbort, NoSuchConversation, InterruptedException {

System.out.println("Small App - GRIATestApp Java demonstration");

System.out.println("\n-- Setting up logging --\n");

setupLogging();

System.out.println("\n-- Beginning GRIATestApp client demo --\n");

System.out.println("Creating memory (non persistent) repository");

repository = new MemoryStateRepository();

repository.importAccountConversation(new URL(serviceProviderAccount),

"Test");

AccountConversation[] accounts = repository.getAccountConversations();

// Getting account status ensures a known client race condition does not occur

for (int i = 0; i < accounts.length; i++)

accounts[i].getAccountStatus();

System.out.println("Retrieving allocation requirements");

ResourceAllocationType requirements =

getRequirements(xmlParse("Requirements-SmallApp.xml"));

System.out.println("Tendering requirements to service providers\n");

AllocationConversation allocation = getAllocation(repository,

requirements,

"SmallAppTest");

DataConversation[] DSinput = new DataConversation[NUM_JOBS];

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

System.out.println("Creating input data staging area " +

String.valueOf(inCount+1));

DSinput[inCount] = allocation.newData("input" +

String.valueOf(inCount+1) + ".zip");

}

DataConversation[] DSoutput = new DataConversation[NUM_JOBS];

for (int outCount = 0; outCount < NUM_JOBS; outCount++) {

System.out.println("Creating output data staging area " +

String.valueOf(outCount+1));

DSoutput[outCount] = allocation.newData("output" +

String.valueOf(outCount+1) + ".zip");

}

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

System.out.println("Uploading input data (input" +

String.valueOf(inCount+1) + ".zip)");

DataHandler handler = new DataHandler(new FileDataSource("input" +

String.valueOf(inCount+1) + ".zip"));

DSinput[inCount].save(handler);

}

System.out.println("Retrieving individual job requirements");

JobSpecType work = getJobSpec(xmlParse("Work-SmallApp.xml"));

JobConversation[] JSjob = new JobConversation[NUM_JOBS];

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

System.out.println("Initialising job " + String.valueOf(inCount+1));

JSjob[inCount] = allocation.newJob("http://omii.org/GRIATestApp",

"SmallTestApp");

}

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

System.out.println("Starting job " + String.valueOf(inCount+1));

JSjob[inCount].startJob(work,

new DataConversation[] { DSinput[inCount] },

new DataConversation[] { DSoutput[inCount] });

}

System.out.println("Waiting for jobs to finish");

boolean stillRunningJob;

do {

stillRunningJob = false;

for (int inCount = 0; inCount < NUM_JOBS; inCount++) {

if (JSjob[inCount].stillActive()) stillRunningJob = true;

}

System.out.print(".");

} while (stillRunningJob);

System.out.println("\n");

for (int outCount = 0; outCount < NUM_JOBS; outCount++) {

System.out.println("Downloading results (output" +

String.valueOf(outCount+1) + ".zip)");

DSoutput[outCount].read(new File("output" +

String.valueOf(outCount+1) + ".zip"));

}

System.out.println("Cleaning up allocation");

Conversation[] children = allocation.getChildConversations();

for (int i = 0; i < children.length; i++)

children[i].finish();

allocation.finishResourceAllocation();

System.out.println("Small App complete");

System.exit(0); // Force us to exit, even if the Swing thread is still running

}

}
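
The listing above expects one input<n>.zip file per job in the working directory, numbered from 1 to NUM_JOBS, and only opens them after an allocation has been obtained. As a minimal sketch, a client could check that these files exist before tendering, so that a missing file is reported before any allocation is made. InputCheckSketch and missingInputs are hypothetical names, not part of the tutorial's listings or the OMII client API; only the file naming convention is taken from the listing.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the tutorial's listings or the OMII client API.
class InputCheckSketch {

    // Returns the names of the input<n>.zip files (n = 1..numJobs)
    // that are not present as regular files in dir.
    static List<String> missingInputs(File dir, int numJobs) {
        List<String> missing = new ArrayList<String>();
        for (int i = 0; i < numJobs; i++) {
            // Same 1-based naming as the listing: input1.zip, input2.zip, ...
            String name = "input" + (i + 1) + ".zip";
            if (!new File(dir, name).isFile()) missing.add(name);
        }
        return missing;
    }

    public static void main(String[] args) {
        // 2 matches the NUM_JOBS value used in the listing
        List<String> missing = missingInputs(new File("."), 2);
        if (missing.isEmpty())
            System.out.println("All input files present");
        else
            System.out.println("Missing input files: " + missing);
    }
}
```

In SmallAppParN such a check would sit before the call to getAllocation, with NUM_JOBS passed as the second argument; where exactly to place it is a design choice rather than something the source prescribes.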

Appendix E: OMIIUtilities.java

import org.w3c.dom.*;

import javax.xml.parsers.*;

import java.io.*;

import java.util.logging.*;

import org.apache.log4j.PropertyConfigurator;

import org.gria.client.*;

import org.gria.client.helpers.*;

import uk.ac.soton.ecs.iam.gria.client.helpers.*;

import uk.ac.soton.ecs.iam.gria.client.swing.*;

import org.exolab.castor.xml.Unmarshaller;

import org.gria.serviceprovider.commontypes.messaging.*;

class OMIIUtilities {

// Logging

public static void setupLogging() {

LogManager logger = LogManager.getLogManager();

java.util.logging.Logger root = logger.getLogger("");

if (root != null)

root.setLevel(Level.SEVERE);

else

System.out.println("Note: Failed to find root logger");

// And again, this time for log4j

PropertyConfigurator.configure(new File("../conf",

"log4j.properties").toString());

System.out.println("Logger initialised");

}

// XML Document handling

// Given a filename which points to an XML file, return an XML Document object

// of that file

public static Document xmlParse(String xmlFile) throws IOException {

Document doc;

try {

DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();

factory.setNamespaceAware(true);

DocumentBuilder docBuilder = factory.newDocumentBuilder();

doc = docBuilder.parse(new File(xmlFile));

} catch (Exception ex) {

throw new RuntimeException("Your '" + xmlFile +

"' file is not correct.", ex);

}

return doc;

}

// Given an XML document, return the main document element as a Resource

// Allocation type

public static ResourceAllocationType getRequirements(Document doc) {

try {

return (ResourceAllocationType) Unmarshaller.unmarshal(

ResourceAllocationType.class, doc.getDocumentElement());

} catch (Exception ex) {

throw new RuntimeException("New requirements doc is not valid!",

ex);

}

}

// Given an XML document, return the main document element as a JobSpec type

public static JobSpecType getJobSpec(Document doc) {

try {

return (JobSpecType) Unmarshaller.unmarshal(JobSpecType.class,

doc.getDocumentElement());

} catch (Exception ex) {

throw new RuntimeException("Work requirements doc is not valid!",

ex);

}

}

public static AllocationConversation getAllocation(StateRepository rep,

ResourceAllocationType req, String name) {

try {

AllocationHelper helper =

new AllocationHelperImpl(rep, new SwingInputHandler());

return (AllocationConversation) helper.getBestAllocation(req,

name);

} catch (Exception ex) {

throw new RuntimeException(

"Problem getting allocations from service provider(s)!", ex);

}

}

}
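
The first half of setupLogging() above raises the threshold of the java.util.logging root logger to SEVERE. Because loggers without a level of their own inherit it from their parent, this quietens every such logger in the client. The following is a minimal sketch of that effect using only the JDK; LoggingLevelSketch, quietRootLogger and the logger name "sketch.demo" are hypothetical and chosen only for illustration. The log4j half of setupLogging() is not covered here.

```java
import java.util.logging.Level;
import java.util.logging.LogManager;
import java.util.logging.Logger;

// Hypothetical sketch of the java.util.logging half of setupLogging().
class LoggingLevelSketch {

    // Raise the root logger's threshold so that only SEVERE records pass
    static void quietRootLogger() {
        Logger root = LogManager.getLogManager().getLogger("");
        if (root != null)
            root.setLevel(Level.SEVERE);
        else
            System.out.println("Note: Failed to find root logger");
    }

    public static void main(String[] args) {
        quietRootLogger();

        // "sketch.demo" is an arbitrary name; the logger has no level of its
        // own, so it inherits SEVERE from the root logger
        Logger log = Logger.getLogger("sketch.demo");
        System.out.println("INFO loggable:   " + log.isLoggable(Level.INFO));
        System.out.println("SEVERE loggable: " + log.isLoggable(Level.SEVERE));

        log.info("This record is dropped");
        log.severe("This record is still published");
    }
}
```

To see more client-side detail while debugging, the level passed to setLevel could be lowered, for example to Level.INFO; that is a suggestion, not something the source prescribes.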

Appendix F: OMII Compilation and Execution Scripts

Linux

CompileEx.sh

#!/bin/sh

export OMII_CLIENT_HOME=..

export MY_CLASSPATH=.:../lib:

for j in `ls ../lib/*.jar`; do

MY_CLASSPATH=$j:$MY_CLASSPATH

done

echo "Compiling the sample GRIATestApp client..."

javac -classpath $MY_CLASSPATH $1.java

RunEx.sh

#!/bin/sh

export OMII_CLIENT_HOME=..

export MY_PARAMS=-Dgria.config.dir=../conf

export MY_CLASSPATH=.:../lib:

for j in `ls ../lib/*.jar`; do

MY_CLASSPATH=$j:$MY_CLASSPATH

done

echo Create Requirements.xml file...

rm -f Requirements-SmallApp.xml

java -cp .. CreateRequirementsFile http://omii.org/GRIATestApp Requirements-SmallApp.xml

echo "Running the sample GRIATestApp client..."

java -cp $MY_CLASSPATH $MY_PARAMS $1

Windows

CompileEx.bat

@echo OFF

setlocal enabledelayedexpansion

set OMII_CLIENT_HOME=..

set MY_CLASSPATH=.;..\lib;

for %%j in (..\lib\*.jar) do set MY_CLASSPATH=!MY_CLASSPATH!;%%j

echo Compiling the sample GRIATestApp client...

echo.

javac -classpath %MY_CLASSPATH% %1.java

endlocal

RunEx.bat

@echo OFF

setlocal enabledelayedexpansion

set OMII_CLIENT_HOME=..

set MY_PARAMS=-Dgria.config.dir=../conf

set MY_CLASSPATH=.;..\lib;

for %%j in (..\lib\*.jar) do set MY_CLASSPATH=!MY_CLASSPATH!;%%j

echo Create Requirements.xml file...

del /Q Requirements-SmallApp.xml

java -cp .. CreateRequirementsFile http://omii.org/GRIATestApp Requirements-SmallApp.xml

echo Running the sample GRIATestApp client...

java -cp %MY_CLASSPATH% %MY_PARAMS% %1

endlocal
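
Each of the scripts above builds its classpath from the current directory, the ../lib directory and every .jar file found in ../lib. The following is a minimal sketch of that assembly in Java, for readers who want to see what the loops produce. ClasspathSketch and buildClasspath are hypothetical names. The sketch appends the jars in sorted order, whereas the Linux scripts prepend each jar and the Windows scripts append them in directory order, so the ordering here is an assumption.

```java
import java.io.File;
import java.util.Arrays;

// Hypothetical sketch of the classpath assembly performed by the scripts.
class ClasspathSketch {

    // Returns "." + libDir + every .jar directly inside libDir, joined with
    // the platform's path separator (":" on Linux, ";" on Windows)
    static String buildClasspath(File libDir) {
        StringBuilder cp = new StringBuilder(".");
        cp.append(File.pathSeparator).append(libDir.getPath());

        File[] jars = libDir.listFiles((dir, name) -> name.endsWith(".jar"));
        if (jars != null) {        // null if libDir is missing or not a directory
            Arrays.sort(jars);     // listFiles() does not guarantee any order
            for (File jar : jars)
                cp.append(File.pathSeparator).append(jar.getPath());
        }
        return cp.toString();
    }

    public static void main(String[] args) {
        // "../lib" is the library directory used by the scripts
        System.out.println(buildClasspath(new File("../lib")));
    }
}
```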

Appendix G: OMII Work-SmallApp.xml

<?xml version="1.0" encoding="UTF-8"?>

<spec xmlns:ns1="http://www.gria.org/org/gria/serviceprovider/commontypes/messaging">

<ns1:work>

<ns1:std-CPU-seconds>200</ns1:std-CPU-seconds>

</ns1:work>

<ns1:min-physical-memory>

<ns1:bytes>10000</ns1:bytes>

</ns1:min-physical-memory>

<ns1:max-output-volume>

<ns1:bytes>10000</ns1:bytes>

</ns1:max-output-volume>

<ns1:num-processors>1</ns1:num-processors>

<ns1:arguments>-cputime=30</ns1:arguments>

</spec>
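
The elements of the job specification above live in the namespace bound to the ns1 prefix, which is why xmlParse in Appendix E switches on namespace awareness before parsing. The following is a minimal sketch that reads individual values back out of this file using only the JDK's DOM API. It works on an in-memory copy of the specification rather than on Work-SmallApp.xml, and JobSpecReadSketch, parse and value are hypothetical names; the tutorial itself hands the document to Castor's Unmarshaller instead.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

// Hypothetical sketch: reads values from the job specification with plain DOM.
class JobSpecReadSketch {

    // Namespace URI bound to the ns1 prefix in Work-SmallApp.xml
    static final String NS =
        "http://www.gria.org/org/gria/serviceprovider/commontypes/messaging";

    // In-memory copy of the job specification shown in this appendix
    static final String SPEC =
        "<?xml version=\"1.0\" encoding=\"UTF-8\"?>"
      + "<spec xmlns:ns1=\"" + NS + "\">"
      + "<ns1:work><ns1:std-CPU-seconds>200</ns1:std-CPU-seconds></ns1:work>"
      + "<ns1:min-physical-memory><ns1:bytes>10000</ns1:bytes></ns1:min-physical-memory>"
      + "<ns1:max-output-volume><ns1:bytes>10000</ns1:bytes></ns1:max-output-volume>"
      + "<ns1:num-processors>1</ns1:num-processors>"
      + "<ns1:arguments>-cputime=30</ns1:arguments>"
      + "</spec>";

    // Namespace-aware parse, wrapping any failure as xmlParse does
    static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            return factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception ex) {
            throw new RuntimeException("Job specification is not well-formed", ex);
        }
    }

    // Text of the first element with this local name in NS, or null if absent
    static String value(Document doc, String localName) {
        NodeList nodes = doc.getElementsByTagNameNS(NS, localName);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) {
        Document doc = parse(SPEC);
        System.out.println("std-CPU-seconds: " + value(doc, "std-CPU-seconds"));
        System.out.println("num-processors:  " + value(doc, "num-processors"));
        System.out.println("arguments:       " + value(doc, "arguments"));
    }
}
```

Looking elements up by namespace URI and local name, rather than by the literal ns1: prefix, keeps the lookup working if the file is rewritten with a different prefix for the same namespace.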

1.2 P1 © University of Southampton, Open Middleware Infrastructure Institute