“How to Connect Campus Grids to the NGS”

http://www.ngs.ac.uk

http://www.grid-support.ac.uk

Neil Geddes

Director, GOSC

The UK's National Grid Service is a project to deploy and operate a grid infrastructure for computing and data access across the UK. This development will be a cornerstone of the development of the UK's "e-Infrastructure" over the coming decade. The goals, current status and plans for the National Grid Service and the Operations Support Centre will be described.

Outline

• Overview of GOSC and NGS

• Services & Getting Access

• Joining the NGS

– How and why

• The Future

– Roadmap for the future

• Summary

GOSC

The Grid Operations Support Centre is a distributed “virtual centre” providing deployment and operations support for the NGS and the wider UK e-Science programme.

- started October 2004

GOSC Roles

UK Grid Services

National Services

Authentication, authorization, certificate management, VO management, security, network monitoring, help desk + support centre.

support@grid-support.ac.uk

NGS Services

Job submission, simple registry, data transfer, data access and integration, resource brokering, monitoring and accounting, grid management services, workflow, notification, operations centre.

NGS core-node Services

CPU, (meta-) data storage, key software

Services coordinated with others (e.g. OMII, NeSC, LCG, EGEE):

Integration testing, compatibility and validation tests, user management, training

Administration:

Security

Policies and acceptable use conditions

SLAs, SLDs

Coordinate deployment and Operations

The National Grid Service:

Towards the UK's e-infrastructure

• The UK's National Grid Service is a project to deploy and operate a grid infrastructure for computing and data access across the UK.

– Learn what it means

– Learn how to do it

• This development will be a cornerstone of the development of the UK's "e-Infrastructure" over the coming decade

NGS “Today”

Interfaces

Projects

e-Minerals

e-Materials

Orbital Dynamics of Galaxies

Bioinformatics (using BLAST)

GEODISE project

UKQCD Singlet meson project

Census data analysis

MIAKT project

e-HTPX project

RealityGrid (chemistry)

OGSI::Lite

Users

Leeds

Oxford

UCL

Cardiff

Southampton

Imperial

Liverpool

Sheffield

Cambridge

Edinburgh

QUB

BBSRC

CCLRC

Nottingham

…

If you need something else, please say!

EGEE Resources: Feb 2005

Country providing resources

Country anticipating joining

In EGEE:

113 sites, 30 countries

>10,000 cpu

~5 PB storage

Includes non-EGEE sites:

• 9 countries

• 18 sites

Enabling Grids for E-sciencE

• HEP Applications

• Biomed Applications

– imaging, drug discovery

– MRI simulation

– protein sequence analysis

• Generic Applications

– Earth Observation, Seismology, Hydrology, Climate, Geosciences

– Computational Chemistry

– Astrophysics

• Applications “behind the corner”

– R-DIG

– BioDCV

INFSO-RI-508833

Applications

[Chart: vertical axis 0 to 12000; series LCG/CondorG, LCG/Original, NorduGrid, Grid3; horizontal axis weekly dates from 6/24/2004 to 3/31/2005]

GOSC Management Board - NGS Status

EGEE Third Conference, Athens, 19.04.2005

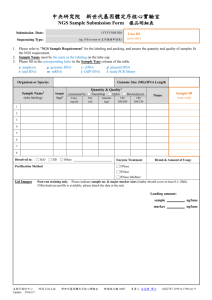

NGS core nodes:

Need UK e-Science certificate (1-2 days)

Apply through NGS web site (1-2 weeks)

Service and Access

What we provide and how to get permission

NGS Core Services

• Globus Toolkit version 2

• Job submission, File transfer, Shell

• Storage Resource Broker

• Oracle (9i)

• OGSA-DAI

• Certificate Authority

• Information Services (MDS/GIIS)

• MyProxy server

• Integration tests and database

• Cluster monitoring

• LCG-VO

In testing:

• VOMS

• EDG Resource Broker

• Portal(s)
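As a rough illustration of how the Globus Toolkit 2 services listed above appear from a user's side, the sketch below assembles command lines for creating a proxy credential, running a job and copying a file. `grid-proxy-init`, `globus-job-run` and `globus-url-copy` are standard GT2 client tools; the host name `grid-compute.example.ac.uk`, the file paths and the helper function names are placeholders chosen for this example, not real NGS endpoints. The commands are only built and printed, never executed.

```python
"""Sketch: build (not run) typical Globus Toolkit 2 client commands.

Assumptions: the host name and paths are placeholders, not real NGS
endpoints; the helper function names are illustrative only.
"""
import shlex

# Hypothetical gatekeeper host; substitute a node you have access to.
EXAMPLE_HOST = "grid-compute.example.ac.uk"


def proxy_command():
    """Create a short-lived proxy from the user's X.509 certificate."""
    return ["grid-proxy-init"]


def job_command(host, executable, *args):
    """Run an executable on a remote gatekeeper."""
    return ["globus-job-run", host, executable, *args]


def copy_command(source_url, dest_url):
    """Transfer a file between two URLs (e.g. gsiftp:// and file://)."""
    return ["globus-url-copy", source_url, dest_url]


def as_shell(command):
    """Render a command list as a shell-safe string for display."""
    return " ".join(shlex.quote(part) for part in command)


if __name__ == "__main__":
    steps = [
        proxy_command(),
        job_command(EXAMPLE_HOST, "/bin/hostname"),
        copy_command(
            f"gsiftp://{EXAMPLE_HOST}/tmp/result.dat",
            "file:///tmp/result.dat",
        ),
    ]
    for step in steps:
        print(as_shell(step))
```

Keeping each command as a list rather than a single string means it could later be passed to `subprocess.run` without shell quoting problems.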

Gaining Access

• All access is through digital X.509 certificates

• From UK e-Science CA or recognized peer

National HPC services NGS Partner Sites

• Data nodes at RAL + Manchester

• Compute nodes at Oxford + Leeds

• Compute nodes at Cardiff + Bristol

• Free at point of use

• Apply through NGS web site

• Accept terms and conditions of use

• Light-weight peer review

– 1-2 weeks

• To do: project or VO-based application and registration

• Must apply separately to research councils

• Digital certificate and conventional (username/password) access supported

Affiliate Sites

• Access approved projects/VOs
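Since all access is through X.509 certificates from the UK e-Science CA or a recognized peer, a site needs some way to decide whether a certificate subject comes from an accepted authority. The sketch below shows the idea on the slash-separated distinguished names (DNs) that Globus tools print. The trusted prefix `/C=UK/O=eScience` and the sample DNs are assumptions made for illustration, not an authoritative list. A production service verifies the certificate chain cryptographically; comparing strings is only a way to show the policy.

```python
"""Sketch: check a certificate subject DN against trusted prefixes.

Assumptions: DNs use the slash-separated form printed by Globus tools;
the trusted prefix and sample DNs are illustrative, not an authoritative
list of accepted certificate authorities.
"""

# Assumed prefix for UK e-Science CA subjects; peers would be added here.
TRUSTED_PREFIXES = [
    [("C", "UK"), ("O", "eScience")],
]


def parse_dn(dn):
    """Split '/C=UK/O=eScience/CN=A User' into [(key, value), ...]."""
    parts = []
    for component in dn.strip().split("/"):
        if not component:
            continue  # skip the empty piece before the leading slash
        key, sep, value = component.partition("=")
        if not sep:
            raise ValueError(f"malformed DN component: {component!r}")
        parts.append((key.strip(), value.strip()))
    return parts


def is_trusted(dn, prefixes=TRUSTED_PREFIXES):
    """True if the DN starts with one of the trusted prefixes."""
    parsed = parse_dn(dn)
    return any(parsed[: len(prefix)] == prefix for prefix in prefixes)


if __name__ == "__main__":
    for sample in (
        "/C=UK/O=eScience/OU=Example/CN=a user",  # hypothetical subject
        "/C=XX/O=Other/CN=someone else",          # hypothetical subject
    ):
        print(sample, "->", "accepted" if is_trusted(sample) else "rejected")
```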

Joining the NGS

How, Why and

The Vision Thing

How to Join

Resource providers join the NGS by

• Defining level of service commitments through SLDs

• Adopting NGS acceptable use and security policies

• Running compatible middleware

– as defined by NGS Minimum Software Stack

– and verified by compliance test suite

• Supporting monitoring and accounting

Two levels of membership

1. Affiliation

• a.k.a. connect to NGS

2. Partnership

How to Join - Steps 1 to 8

1. Ask

• Grid Support Centre, Stephen Pickles

• Process currently limited only by available effort

2. Get assigned a buddy

• added to rollout mailing list

• read the joining guide: http://www.ngs.ac.uk/man/documents/NGS_Partner_joining_procedure_0.4.pdf

3. Install the minimum software stack

• On the resource, or on a gateway

• http://www.ngs.ac.uk/man/documents/NGS_Minimum_software_stack_0.7.pdf

4. Pass monitoring tests for 7 days

5. Agree to security and acceptable use policies

• security contact + part of campus security

• developing operational security with GridPP, EGEE, OSG

6. Define a Service Level

7. Set up service level monitoring

8. Approval by GOSC Board

– Get representation on Technical Board

– Process took 6-12 months for core sites

– Process took 3-6 months for Cardiff + Bristol

– Process should take less than 3 months for Lancaster
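Step 4 above asks a joining site to pass monitoring tests for 7 days. The sketch below shows one way such a criterion could be checked from a record of daily results. It assumes the requirement means seven consecutive calendar days on which every test passed, and the record format (a mapping from date to pass/fail) is hypothetical; the NGS monitoring tools may define both differently.

```python
"""Sketch: has a site passed its monitoring tests on 7 consecutive days?

Assumptions: "pass monitoring tests for 7 days" is read as seven
consecutive calendar days with every test passing; the record format
is hypothetical.
"""
from datetime import date, timedelta

REQUIRED_DAYS = 7


def longest_passing_run(daily_results):
    """Return the longest run of consecutive passing days.

    daily_results maps a date to True (all tests passed) or False.
    A day with no entry breaks the run, as a failure would.
    """
    best = current = 0
    previous = None
    for day in sorted(daily_results):
        consecutive = (
            previous is not None and day - previous == timedelta(days=1)
        )
        if daily_results[day]:
            # Extend the run only if yesterday was recorded and passed.
            current = current + 1 if consecutive and current else 1
            best = max(best, current)
        else:
            current = 0
        previous = day
    return best


def ready_to_join(daily_results, required=REQUIRED_DAYS):
    """True once the site has a long enough unbroken passing run."""
    return longest_passing_run(daily_results) >= required


if __name__ == "__main__":
    start = date(2005, 4, 1)  # arbitrary example start date
    results = {start + timedelta(days=i): True for i in range(7)}
    print("ready:", ready_to_join(results))
```

Treating a missing day as a break in the run is a deliberately conservative choice: a site that was not tested on a given day has not demonstrated that it was available.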

Why the NGS

• Common tools, procedures and interfaces

– Reduce total cost of ownership for providers

– Lower threshold for users

• Early adopter system for UK research grids

– technology evaluation (in production)

– technology choices

– pool expertise

– drive interface standards and requirements

• both a voice and a target

– etc.

Why Join

• (your) Users increasingly want resources as services and not as complicated bits of kit

– common interfaces across a range of facilities

• Funders of regional and national facilities want common interfaces to lower barriers to access

– e.g. HECToR

• By joining you leverage the national expertise in running these services

– technical advice and support

– security procedures and incident response

– tools to help monitor and patch

• All of the above required in any TCO calculation

– Get it at lower cost by joining the NGS

• Members get a say in the technical decisions …

The Future

More Vision and

Vision Meets Reality

Regional and Campus grids

HPCx + HECToR

UK e-Infrastructure

Users get common access, tools, information, nationally supported services, through NGS

Community Grids

VRE, VLE, IE

Integrated internationally

LHC

ISIS TS2

Maintaining Compatibility

• Operating a production grid means valuing robustness and reliability over fashion.

– bug uncovered in OpenSSH in October 2004

• NGS cares about:

– alignment/compatibility with leading international Grid efforts

– special requirements of UK e-Science community

– easy migration/upgrade paths

– proven robustness/reliability

– based on standards or standards-track specifications

• NGS cannot support everything

• Everyone wants service-oriented grids

– but still settling out: WS-I, WS-I+, OGSI, WSRF, GT3, GT4, gLite

• Caution over OGSI/WSRF has led to wide convergence on GT2 for production grids and hence some inter-Grid compatibility

– but there are potentially divergent forces at work

• Significant changes to NGS Minimum Software Stack will require approval by NGS Management Board on conservative time scales

Strategic Framework

• GOSC/NGS UK e-Science project

– support other UK (e-)science projects

• International Compatibility

– EGEE

• European infrastructure (and possible funding)

• LHC at most UK universities

– only user group who want to build the grid

– GridPP committed to common w/s plan in 2005

• GEANT

– Others

• TeraGrid – US cyberinfrastructure $$$ (unlikely to pay us)

• Open Science Grid – will develop compatibility with LCG

• RoW e.g. China

– Want to use other software, but must be EGEE compatible

– Also driven by user requirements

– Sets framework for relationship with OMII and others

• Other factors

– JISC and Shibboleth

Process for Moving Forward

1. New developments evaluated by ETF

– must be likely to have longer-term support

2. User requests treated on case by case basis

3. NGS Technical Board considers against needs

• user demand

• new functionality

• improved functionality

• improved security/performance/manageability

4. Proposal brought to GOSC Board

• Prepared by GOSC “executive”

• N.Geddes, S.Pickles, A.Richards, S.Newhouse

Current Roadmap

• April 2005 Extensions of NGS membership

• July 2005 First assessment of NGS WS infrastructure

Outline plans for 2005/2006 NGS AAA devt.

Migration and/or interoperability of GT2/GT4

• Oct 2005 Deployment of Resource Brokering and VO mgmt

Client (compute element) compatibility with EGEE

Decision on support for GT4 infrastructure

• Jan 2006 Evaluation of Shibboleth interoperability solutions

• April 2006 Approval of Shibboleth interoperability plans

• July 2006 Interoperability with Shibboleth infrastructure

• Oct 2006 Technology refresh for core NGS nodes

The beginning …

– Backup slides to follow …

EDS is Transforming Clients to Agile Enterprise

– Virtualised Computing Platform

EDS Services Transition Roadmap

Step 6: Grid

Agility

Drivers

• Standards

• Visibility

• Quality

• Security

• Efficiency

Reduce Risk

Step 5: Utility Service

Improve Scalability, Service Quality/Levels, Productivity & more

Improve Utilisation

Step 4: Virtual Service Suite

Step 3: Automated Operations & Managed Storage

Reduce TCO

Step 2: Consolidate (Server, Network, Storage, etc.)

Step 1: Migrate & Manage (Regional Facilities)

© 2004 Electronic Data Systems Corporation. All rights reserved.

The GOSC Board

Director, GOSC (Chair): Neil Geddes

Technical Director, GOSC: Stephen Pickles

Collaborating Institutions

• CCLRC: Prof. Ken Peach

• Leeds: Prof. Peter Dew

• Oxford: Prof. Paul Jeffreys

• Manchester: Mr. Terry Hewitt

• Edinburgh/NeSC: Prof. Malcolm Atkinson

• UKERNA: Dr. Bob Day

• London College: tbd

ETF Chair: Dr. Stephen Newhouse

GridPP Project Leader: Prof. Tony Doyle

OMII Director: Dr. Alistair Dunlop

EGEE UK+I Federation Leader: Dr. Robin Middleton

HEC Liaison: Mr. Hugh Pilcher-Clayton

Also invited

• e-Science User Board Chair: Prof. Jeremy Frey

• Director, e-Science Core Programme: Dr. Anne Trefethen