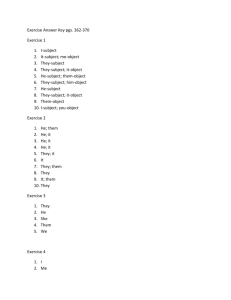

MAS.632 Conversational Computer Systems, MIT OpenCourseWare
