The UK National Grid Service Andrew Richards – CCLRC, RAL

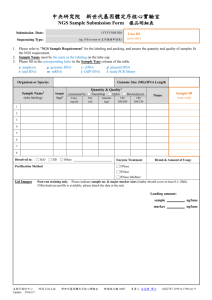

http://www.ngs.ac.uk

History

Pre NGS
• L2G – Level 2 Grid deployed Easter 2003
  – contains members from each of the ten UK e-Science Centres and CCLRC plus Centres of Excellence
• Requirement for dedicated resources

Rollout of NGS
• NGS Evaluation and Installation: May 2003 to December 2003
• Pre-Production use started April 2004 (‘Early Bird Users’)
• Full Production started September 2004 (All Hands Meeting 2004 – Nottingham)

Funding
– JISC funded £1.06M Hardware (2003-2006)
  • Oxford, White Rose Grid (Leeds) - Compute Cluster
  • Manchester – Data Cluster
  • E-Science Centre funded 420k for additional Data Cluster
– JISC funded £900k staff effort including 2.5SY at CCLRC e-Science Centre
  • The e-Science Centre leads and coordinates the project for the JISC funded clusters

Objectives
• Build on recent advances in grid technology to offer a stable, highly available, production quality grid service
• Interoperability with other grids such as those deployed by the Enabling Grids for eScience in Europe (EGEE) project and the LHC Computing Grid (LCG)
• To provide the broad user community with access to large scale resources through the grid interface
• To meet users’ evolving requirements for a production grid service by working with the user community, through the User Group established by the Core Programme
• To cooperate effectively with the Engineering Task Force and other teams

NGS Today

NGS “Tomorrow”

GOSC Timeline
[Figure: timeline chart covering Q2 2004 to Q3 2006. Items shown: NGS WS Service; NGS Expansion (Bristol, Cardiff…); NGS Production Service; NGS WS Service 2; OGSA-DAI; NGS Expansion; WS plan; WS2 plan. Milestones: OMII release; gLite release 1; EGEE gLite alpha release; EGEE gLite release; OMII Release. Labels: Grid Operation Support Centre; Web Services based National Grid Infrastructure; UK e-Infrastructure.]

NGS Hardware

Compute Cluster
• 64 dual CPU Intel 3.06 GHz (1MB cache) nodes
• 2GB memory per node
• 2x 120GB IDE disks (1 boot, 1 data)
• Gigabit network
• Myrinet M3F-PCIXD-2
• Front end (as node)
• Disk server (as node) with 2x Infortrend 2.1TB U16U SCSI Arrays (UltraStar 146Z10 disks)
• Software:
  – PGI compilers
  – Intel Compilers, MKL
  – PBSPro
  – TotalView Debugger
  – RedHat ES 3.0

Data Cluster
• 20 dual CPU Intel 3.06 GHz nodes
• 4GB memory per node
• 2x 120GB IDE disks (1 boot, 1 data)
• Gigabit network
• Myrinet M3F-PCIXD-2
• Front end (as node)
• 18TB Fibre SAN (Infortrend F16F 4.1TB Fibre Arrays (UltraStar 146Z10 disks))
• Software:
  – PGI compilers
  – Intel Compilers, MKL
  – PBSPro
  – TotalView Debugger
  – Oracle 9i RAC
  – Oracle Application server
  – RedHat ES 3.0

NGS Software

NGS Database Service
• Database Service on CCLRC – RAL and Manchester Sites
• Oracle 9i RAC (hold license for 10g if/when required)
• Using OCFS
• Oracle Application Server
• 5 node Clustered Database
• Single node Database for SRB
• Use of Transparent Application Failover (TAF)

NGS SRB
• Single instance Oracle 9i for MCAT at CCLRC – RAL, duplicated at Manchester
• SRB Version 3.2.1, using GSI authentication mechanisms
• SRB vaults at each of the NGS core sites
• Single NGS zone for core nodes
  – Option to use Federation for other zones

Monitoring
• BDII
• Nagios
• Ganglia
• GITS Testing

User Support
• http://www.grid-support.ac.uk
• support@grid-support.ac.uk

NGS Portal

User Registration

‘Early Bird’ Users April 2004

Example Users

Projects
• e-Minerals
• e-Materials
• Orbital Dynamics of Galaxies
• Bioinformatics (using BLAST)
• GEODISE project
• UKQCD Singlet meson project
• Census data analysis
• MIAKT project
• e-HTPX project
• RealityGrid (chemistry)

Users
• Leeds
• Oxford
• UCL
• Cardiff
• Southampton
• Imperial
• Liverpool
• Sheffield
• Cambridge
• Edinburgh
• QUB
• BBSRC
• CCLRC

User Registration (Process)
• User applies (via website)
• Application QC
• Application submitted to Peer Review Panel
• Approved?
  – Accepted (user notified): user added to NGS VO (includes SRB account), then to the NGS-USER and NGS-ANNOUNCE mailing lists
  – Rejected (user notified)

User Example
• GODIVA: Diagnostics Study of Oceanography Data
• EVE: Excitations and Visualisation Project
• Integrative Biology: Simulation of Strain in Soft Tissue under Gravity

Production Status

TODAY
• 4 new core nodes operational
• 125 Users registered (25 since 1 September ’04)
• Grid enabled – Globus v2 (VDT distribution v1.2) at present
• BDII information service (GLUE + MDS Schemas)
• Data Services – Oracle and SRB
• Growing base of user applications
• MyProxy and CA services provided by UK Grid Support Centre
• VO Management Software – LCG-VO
• User support: Helpdesk

Next…
• Other Middleware [gLite/OMII etc…]
• NGS Portal
• Resource Broker
• SRB production service

Contact Information
• NGS Grid Coordinator: Andrew Richards, a.j.richards@rl.ac.uk
• NGS Website: http://www.ngs.ac.uk
• Helpdesk (via Grid Support Centre): support@grid-support.ac.uk
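
Appendix (illustrative sketch, not part of the original slides): the user registration process described in the slides can be read as a short decision flow. The Python below is a minimal sketch of one plausible ordering of those steps, assuming that the Peer Review Panel decision is the only branch point. The function name `register_user` and its `panel_approves` parameter are hypothetical and do not correspond to any actual NGS software.

```python
def register_user(panel_approves: bool) -> list[str]:
    """Return the ordered steps one application passes through.

    Assumption: the step order is reconstructed from the flattened
    flowchart in the slides; only the panel decision branches.
    """
    steps = [
        "user applies (via website)",
        "application QC",
        "application submitted to peer review panel",
    ]
    if panel_approves:
        # Accepted path: notification, VO membership, mailing lists.
        steps += [
            "accepted (user notified)",
            "user added to NGS VO (includes SRB account)",
            "user added to NGS-USER and NGS-ANNOUNCE mailing lists",
        ]
    else:
        # Rejected path: the applicant is notified and the flow ends.
        steps.append("rejected (user notified)")
    return steps


if __name__ == "__main__":
    for step in register_user(panel_approves=True):
        print(step)
```

Calling `register_user(panel_approves=False)` instead returns the three common steps followed by the rejection notice.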