The National Grid Service: An Overview


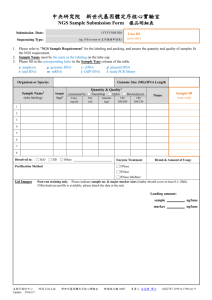

Stephen Pickles <stephen.pickles@manchester.ac.uk>
University of Manchester
Technical Director, GOSC
Towards an NGS User Induction Course, NeSC, Edinburgh, 8 December 2004

Outline
• Context
  – UK e-Science Programme
  – NGS and GOSC
  – ETF, EGEE, OMII, TeraGrid...
• Core Services
  – Globus, SRB, OGSA-DAI,...
• Operations Infrastructure
• Web sites
• Current Status

Context
• UK e-Science Programme
• Grid Operations Support Centre
• National Grid Service
• Relationships to
  – EGEE
  – Engineering Task Force (ETF)
  – Open Middleware Infrastructure Institute (OMII)

UK e-Science Programme
[Diagram: key components of the UK e-Science Programme, including the UK Grid Operations Support Centre and the NGS]

GOSC
The Grid Operations Support Centre is a distributed "virtual centre" providing deployment and operations support for the UK e-Science programme.

GOSC Roles
UK Grid Services:
• National Services: authentication, authorisation, certificate management, VO management, security, network monitoring, help desk + support centre
• NGS Services: job submission, simple registry, data transfer, data access and integration, resource brokering, monitoring and accounting, grid management services, workflow, notification, operations centre
• NGS core-node Services: CPU, (meta-)data storage, key software
• Integration testing, compatibility and validation tests, user management, training
• Services to be coordinated with others (e.g. OMII, NeSC, LCG)
• Administration: policies and acceptable use conditions; SLAs, SLDs; coordinate deployment and operations

One Stop Shop
[Screenshots of the GOSC web site: "Click for help"; authentication, authorisation, certificate management, VO management, security; Helpdesk; FAQ]

GOSC does not...
• Run a repository
• Develop software (much)
  – contribute to developments to influence/adapt, cf. "I've got one of those you can have. You just need to …"
• (Training: Edinburgh/NeSC are part of GOSC)
• Provide support for Access Grid
  – use the Access Grid Support Centre instead: http://www.agsc.ja.net/
• Do extensive user hand-holding and application support
  – need the e-Science Centres
  – priorities will be driven by users

National Grid Service

NGS - A production Grid
[Map: National Grid Service and Level-2 Grid sites, including Leeds, Manchester, DL, Oxford and RAL]

NGS "Today"
Interfaces
• OGSI::Lite
• WSRF::Lite
Projects
• e-Minerals, e-Materials, Orbital Dynamics of Galaxies, Bioinformatics (using BLAST), GEODISE project, UKQCD Singlet meson project, Census data analysis, MIAKT project, e-HTPX project, RealityGrid, ConvertGrid (ESRC), Integrative Biology
Users
• Leeds, Oxford, UCL, Cardiff, Southampton, Imperial, Liverpool, Sheffield, Cambridge, Edinburgh, QUB, BBSRC, CCLRC, Manchester

"Tomorrow": GOSC Timeline
[Timeline chart, 2004 to 2006. NGS items: NGS Production Service, WS plan, NGS WS Service, OGSA-DAI, WS2 plan, NGS WS Service 2, NGS Expansion (Bristol, Cardiff…), leading to a Web Services-based National Grid Infrastructure. External milestones: OMII releases; EGEE gLite alpha release, gLite release 1 and a later EGEE gLite release.]

http://www.ngs.ac.uk
• Core nodes:
  – need a UK e-Science certificate (1-2 days)
  – apply through the NGS web site (1-2 weeks)

Gaining Access
NGS core nodes
• data nodes at RAL and Manchester
• compute nodes at Oxford and Leeds
• free at point of use
• apply through the NGS web site
• light-weight peer review: 1-2 weeks
• all access is through digital X.509 certificates
  – from the UK e-Science CA
  – or a recognised peer
National HPC services
• HPCx
• CSAR
• must apply separately to the research councils
• digital certificate and conventional (username/password) access supported

[Diagram: GOSC and the NGS core nodes (Manchester, Leeds, RAL, Oxford), surrounded by other sites: HPCx, CSAR, universities (U of A, U of B, U of C, U of D), a commercial provider and a PSRE]
• NGS Core Nodes: host core services; coordinate integration, deployment and support; free-to-access resources for all VOs; monitored interfaces and services
• NGS Partner Sites: integrated with NGS; some services/resources available for all VOs; monitored interfaces and services
• NGS Affiliated Sites: integrated with NGS; support for some VOs; monitored interfaces (+ security etc.)

Joining the NGS
Resource providers join the NGS by
• adopting the NGS acceptable use and security policies
• running compatible middleware
  – as defined by the NGS Minimum Software Stack
  – and verified by the compliance test suite
• supporting monitoring and accounting
Two levels:
• NGS affiliates
• NGS partners
  – also provide significant resources or services to NGS users
More later.

The EU Grid Infrastructure (EGEE)
• Start from LCG2
• "Harden" middleware
• Expand applications
• €32 from EU
  – 50% deployment/operations
  – lots at CERN, matched by PP
  – UK + I: training; GOC development + operations; regional deployment + support (T2 coordinators)

LCG
• Used for batch production now
  – worldwide de facto standards
• Currently trying to interface analysis software
  – on top of gLite from EGEE
• Need to move out of the physics departments

GridPP
• UK contribution to LCG
• GridPP1 (2001-2004)
  – 33% deployment/operations
  – 33% middleware development
  – 33% applications
• GridPP2 (2004-2007)
  – also supports current users
  – 60% deployment/operations
  – 15% middleware development
  – 25% applications
  – LCG2 -> EGEE
• Not just PPARC funding
  – universities support LHC and benefit from grid experience
• You should know/meet these people

Grid Operations Centre
• Responsibilities in EGEE
• UK-focused screen
• UKERNA work to be integrated

OMII

Managing middleware evolution
• Core of GOSC built around experience in deploying and running the National Grid Service (NGS)
  – support service
• Important to coordinate and integrate this with deployment and operations work in EGEE, LCG and similar projects
  – e.g. EGEE: low-level services, CA, GOC, CERT...
• Focus on deployment and operations, NOT development.
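The "Joining the NGS" slide amounts to a checklist: every resource provider must meet the same baseline (policies, compliant middleware, monitoring and accounting), and partners additionally provide significant resources or services. As an illustration only, that two-level rule can be sketched in Python. The class, field and function names are hypothetical, and collapsing each requirement into a single boolean is a deliberate simplification of what the compliance test suite and policy review actually involve.

```python
from dataclasses import dataclass
from enum import Enum


class NGSLevel(Enum):
    """Membership levels named on the "Joining the NGS" slide."""
    NOT_ELIGIBLE = "not eligible"
    AFFILIATE = "NGS affiliate"
    PARTNER = "NGS partner"


@dataclass
class ResourceProvider:
    # Hypothetical fields: one flag per requirement listed on the slide.
    name: str
    adopts_aup_and_security_policies: bool  # NGS acceptable use and security policies
    passes_compliance_tests: bool           # Minimum Software Stack, checked by the compliance test suite
    supports_monitoring: bool
    supports_accounting: bool
    provides_significant_resources: bool    # the extra commitment that distinguishes partners


def ngs_level(site: ResourceProvider) -> NGSLevel:
    """Classify a site against the joining requirements (illustrative only)."""
    meets_baseline = (
        site.adopts_aup_and_security_policies
        and site.passes_compliance_tests
        and site.supports_monitoring
        and site.supports_accounting
    )
    if not meets_baseline:
        return NGSLevel.NOT_ELIGIBLE
    # Partners meet the same baseline as affiliates, plus significant resources.
    if site.provides_significant_resources:
        return NGSLevel.PARTNER
    return NGSLevel.AFFILIATE


if __name__ == "__main__":
    campus = ResourceProvider("Hypothetical campus cluster", True, True, True, True, False)
    print(campus.name, "->", ngs_level(campus).value)  # Hypothetical campus cluster -> NGS affiliate
```

Modelling the levels as an enum keeps the point of the slide visible: partner status is the affiliate baseline plus one extra commitment, not a separate set of rules.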
[Diagram for "Managing middleware evolution": EGEE… and other software sources; prototypes & specifications; ETF; software with proven capability & realistic deployment experience; OMII "gold" services; NGS; UK campus and other grids; operations; feedback & future requirements; deployment/testing/advice]

(Grid) Engineering Task Force
• Originally built the UK Level-2 Grid using hardware resources volunteered by the UK e-Science Centres
  – very heterogeneous
  – exposed the need for dedicated resources
• 0.5 FTE at each e-Science centre
• Now primarily conducting pre-deployment evaluation and testing for NGS
• Currently evaluating:
  – Condor (nearly complete)
  – Globus Toolkit version 4
  – Innergrid and Outergrid
  – OMII distribution
  – gLite from EGEE (awaiting release candidate, January '05)
• Also doing portal work for NGS, linked to JISC VRE developments
• UDDI work complete

NGS Core Services - Globus
• Globus Toolkit version 2
  – GT 2.4.3 from VDT 1.2
• Job submission (GRAM)
• File transfer (GridFTP)
• Shell (GSI-SSH)
• Information Services (MDS/GIIS/GRIS)
  – information providers from the GLUE schema
• More from Steve Pickering later today

NGS Core Services - SRB
• Storage Resource Broker from SDSC
• Location-transparent access to storage
• Metadata catalog
• Replica management
• Clients on compute nodes
• Servers on data nodes
• More from Andy Richards later today

NGS Core Services - Oracle
• Oracle 9i database
• Only on data nodes
• Populated by users/data providers
• Infrastructure maintained by NGS database administrators
• Used directly or via OGSA-DAI
• More from Matt Ford later today

NGS Core Services - OGSA-DAI
• Open Grid Services Architecture (OGSA)
• Database Access and Integration (DAI)
• Developed by the UK e-Science projects OGSA-DAI and DAIT
• OGSA-DQP (Distributed Query Processor)
• Experimental service based on OGSI/GT3, on the Manchester data node only
  – will consider WS-I and WSRF flavours when in final release
• Uses Oracle underneath
• Early users from e-Social Science (ConvertGrid)
• More from Matt Ford later today

NGS Core Services - other
Operated by GOSC for NGS and the UK e-Science programme.
In production:
• Certificate Authority
• Information Services (MDS/GIIS)
• MyProxy server
• Integration tests and database
• Cluster monitoring
• LCG-VO
In testing:
• VOMS
• EDG Resource Broker
In development:
• Accounting
• Portal (see Dharmesh's demonstration, Friday)

NGS Organisation
• Operations Team
  – led by Andy Richards
  – representatives from all NGS core nodes
  – meets weekly by Access Grid
  – day-to-day operational and deployment issues
  – reports to the Technical Board
• Technical Board
  – led by Stephen Pickles
  – representatives from all sites and GOSC
  – meets bi-weekly by Access Grid
  – deals with policy issues and high-level technical strategy
  – sets medium-term goals and priorities
  – reports to the Management Board
• Management Board (a.k.a. Steering Committee)
  – meets quarterly
  – representatives from funding bodies, partner sites and major stakeholders
  – sets long-term priorities

Web Sites
• NGS: http://www.ngs.ac.uk
• GOSC: http://www.grid-support.ac.uk
• CSAR: http://www.csar.cfs.ac.uk
• HPCx: http://www.hpcx.ac.uk

Google search for "Grid Support"
[Screenshots]

Production Status
TODAY
• 4 JISC-funded core nodes operational
• 136 users registered (36 since 1 September '04)
• Grid-enabled
  – Globus v2 (VDT distribution v1.2) at present
• BDII information service (GLUE + MDS schemas)
• Data services
  – Oracle, SRB and OGSA-DAI
• Growing base of user applications
• MyProxy and CA services provided by GOSC
• VO management software
  – LCG-VO
• User support: Helpdesk
Next…
• NGS Portal
• Resource Broker
• SRB production service
• Accounting
• EGEE VOMS
• Move from user- to project/VO-based registration
• Other middleware [gLite/OMII etc…]

Helpdesk
http://www.grid-support.ac.uk
support@grid-support.ac.uk
[Chart: UKGSC queue totals for the month prior to 10:11 AM, 26/11/2004]
• Certification is still the dominant query type
• "General" contains GGUS-related queries from the EGEE/GGUS helpdesk
  – (a separate queue for this new traffic is to be created)

User registrations so far…
[Chart: number of registered NGS users (y-axis, 0 to 160) against date (x-axis, 04 April 2004 to 10 December 2004), showing NGS User Registrations with a linear trend line]

Recent Developments
• NGS newsletter
• OMII helpdesk
• Resource Broker (LCG) available for early adopters
• TeraGrid
  – UK certificates accepted on TeraGrid
  – looking at INCA monitoring with Jenny Schopf
• Trial of accounting software from the MCS project
  – using the GGF Usage Record draft standard (as EGEE does)
• Meeting with Open Science Grid's iGOC
• Collect user exemplars
  – agreed form of acknowledgement

Other Developments
• VO server (LCG/EGEE) being trialled by RealityGrid
  – also CCLRC e-Science
• EGEE VOMS now available
• EGEE meeting in Den Haag
• Security = NGS + GridPP + EGEE
• UKERNA developments on security and network monitoring
• Negotiations with vendors on software licences
  – NAG: positive discussions
    • if user A has licence X, they can use any other site with licence X
    • to be formalised
  – Gaussian
    • need a commercial licence ($20k-30k)
  – Matlab
    • currently have a workable solution (binaries), but the next release will break it
    • MathWorks aware, but no solution yet

The Last Slide
• We are in the game of providing a service
  – built on leading (sometimes bleeding) edge academic stuff!
• The challenge
  – not the latest and greatest grid
  – not what any given user wants
• The solution
  – want to make it work
    • for our researchers
    • for our institutions
  – and maintain compatibility with EGEE, TeraGrid
  – and accommodate OMII
  – and expand, bringing in more partners
• Sign people/users up (to the vision)
  – "get out more"
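The licence-sharing principle reported from the NAG negotiations under "Other Developments" (if user A has licence X, they can use any other site with licence X) is simple enough to state as code. The Python sketch below is a hypothetical reading of that principle, not the agreed terms, which the slide says are still to be formalised; the function name and the site names are invented for the example.

```python
from __future__ import annotations


def sites_open_to(user_licences: set[str], site_licences: dict[str, set[str]], package: str) -> set[str]:
    """Return the sites where a user may run `package` under the proposed rule.

    Hypothetical reading of the NAG principle: a user who holds a licence
    for the package may use any site that also holds a licence for it.
    """
    if package not in user_licences:
        # No personal licence, so the rule grants nothing.
        return set()
    return {site for site, held in site_licences.items() if package in held}


if __name__ == "__main__":
    # Hypothetical sites and the licences each one holds.
    sites = {"site-a": {"NAG"}, "site-b": {"NAG", "Gaussian"}, "site-c": set()}
    print(sorted(sites_open_to({"NAG"}, sites, "NAG")))  # ['site-a', 'site-b']
```

Keeping the check per package mirrors the wording on the slide: holding a NAG licence says nothing about access to Gaussian, which needs its own commercial licence.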