Document 13346322

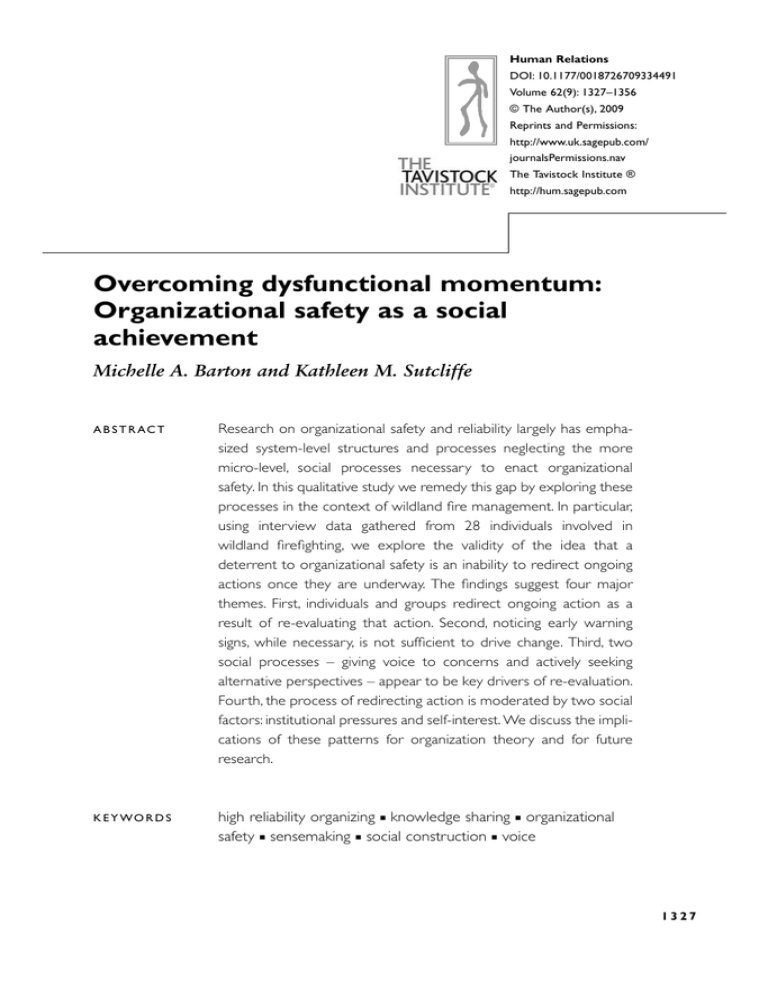
