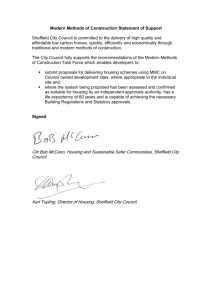

‘Other’ Experiments’ Requirements

Dan Tovey, University of Sheffield

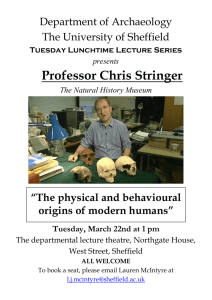


Background

‘Other’ experiments are defined as non-LHC and non-US-collider experiments. They cover ANTARES, CALICE, CRESST, H1, LC-ABD, MICE, MINOS, NA48, phenoGrid, SNO, UKDMC, ZEUS, etc.

Bids for funding under GridPP1 were unsuccessful. Since then, non-Grid requirements (e.g. for Tier-1/A resources) have been fed into GridPP via the EB (DRT rep.).

Several EoI-style bids were contributed to the GridPP2 proposal (ANTARES, CALICE, MICE, phenoGrid, UKDMC, ZEUS). These were developed into full Proforma-2 task definitions:
• ANTARES
• CALICE
• MICE
• phenoGrid
• UKDMC

The phenoGrid bid was successful → 1.0 FTE for 3 years awarded to Durham. The others were rejected in favour of a ‘generic GridPP portal’.

ANTARES

Neutrino telescope being built in the Mediterranean. Raw data rate of 1 TB/week: would benefit from Grid data processing. Opportunity for evangelism/outreach to new communities.

Task definition:
• interface ANTARES data and calibration databases with existing Grid data-handling resources;
• establish appropriate authentication schemes (certification, etc.);
• enable access to reconstructed data by other collaborators;
• include ANTARES data in fast, global astrophysics alert systems.

Also proposed Grid running of the CORSIKA shower Monte Carlo (a simple executable, suitable for the generic portal?).

Requested 1.0 FTE/yr for 3 years.

CALICE

Major project to define and build calorimetry for a future linear collider. 4 TB of data (10⁸ events) from the 2004-2006 beam tests, to be analysed and simulated (10⁹ events) using the Grid.

Proposed milestones/deliverables:
• Month 3: CALICE VO and workload-management system in place, with acceptance at the Tier-1 and at least one Tier-2 site.
• Month 6: CALICE GEANT4 Monte Carlo running in the EGEE/LCG Grid environment.
• Month 15: Pre-production test run of around 10⁷ events based on the first beam-test parameters.
• Month 18: Prototype release of book-keeping and user tools.
• Month 21: CALICE VO acceptance at all planned Tier-2 sites.
• Month 24: Start of production of the first large GEANT4 Monte Carlo dataset.
• Month 27: Completed book-keeping and user tools in place, allowing generation of multiple datasets by non-experts.

Requested 0.5 FTE/yr for 3 years (ICL proposed, shared with BaBar/GANGA).

MICE

Flagship UK-based proof-of-principle experiment on muon cooling for a neutrino factory/muon collider. Identified four areas where e-Science will be key:
• Data distribution from a Tier-0 centre via the Grid: assumed to be RAL in this case!
• Apparatus monitoring, control and calibration: remote monitoring developed in collaboration with GridCC (Grid-enabled Remote Instruments with Distributed Control and Computation), unique in GridPP.
• Data persistency: adopt LCG tools.
• Grid job submission and monitoring: same requirements as a typical LHC experiment, so adopt CMS/LCG tools.

Requested 1.0 FTE/yr for 3 years at Brunel, assisted by existing effort at ICL and an ICL e-Science studentship.

phenoGrid

Proposal from UK phenomenologists to Grid-enable HERWIG++. The project will form part of the Generator Services sub-project of LCG, and also includes a training aspect.

Selected milestones/deliverables:
• 0-6 months: Familiarisation with the ThePEG structure and the HERWIG++ code. Ensuring the code runs on all platforms supported by LCG and the LHC experiments. Modifications needed so that the program can be used on the Grid. Example jobs and scripts allowing simulation jobs to be submitted via the Grid. Training of HERWIG and phenoGrid collaboration members.
• 7-12 months: Development of the program and interfaces so that it can be used with GANGA and in the EDG framework.
• 13-18 months: Development of interfaces to LCG services, e.g. POOL storage and passing of events to detector simulation.
• 19-24 months: Development of tools allowing simulation of large event samples, using the Grid for distributed parallel computing.
• 25-30 months: Validation and testing of interfaces, and use of HERWIG++ via the Grid → tuning of new physics models in the program.

Requested 1.0 FTE/yr for 3 years (Success! Awarded to Durham).

UKDMC

UK Dark Matter Collaboration detectors are increasing in size/total data rate by a factor >10 within the next year, to ~1-5 TB/year. A further factor-10 increase is proposed within 3-5 years (ZEPLIN-MAX).

Proposal: interface the existing reconstruction software (ZAP/UNZAP) and G4 simulations to the Grid for distributed running (UK, UCLA, ITEP, etc.), making use of existing EDG and LCG tools.

Selected deliverables:
• Month 12: Prototype interfaces between G4/FLUKA and ZAP/UNZAP and the EDG/LCG tools, making full use of existing GridPP1 developments (e.g. GANGA).
• Month 24: Fully functioning online UKDMC detector conditions database and Replica Catalogue, accessible by the prototype UKDMC software interfaces.
• Month 36: Fully functioning UKDMC software framework permitting submission of G4, FLUKA and ZAP/UNZAP reconstruction jobs to multiple Grid-enabled Collaboration computing resources.

Requested 1.0 FTE/yr for 3 years.

ZEUS

Did not submit a Proforma-2: information taken from the GridPP2 proposal.

Proposed to Grid-enable the existing software framework FUNNEL for distributed Monte Carlo job submission. Also a distributed physics analysis tool: ZEUS already uses the dCache system for data handling and LSF for resource brokerage on a 40-node farm at DESY → extend this across ZEUS institutes worldwide.

Selected milestones:
• 12 months: Commissioned testbed tailored to ZEUS needs;
• 18 months: Authentication strategy established;
• 24 months: I/O layers adapted to suit the FUNNEL system;
• During 3rd year: Development of resource-management concepts and monitoring tools;
• 3 years: Roll-out of a production version of the Grid-enabled FUNNEL system to the Collaboration.
However, there is a potential problem with the interface between the existing framework and the EDG/LCG tools → would need careful design. Is the same problem likely to occur with any generic GridPP portal?

LC-ABD

Linear Collider Accelerator and Beam Delivery system collaboration. Did not submit a Proforma-2: information taken from the GridPP2 proposal.

Need to perform high-statistics simulations of physical interactions between matter (spoilers, collimators, etc.) and beam particles. Propose to give the accelerator codes a Grid interface (GUI portal or otherwise):
• Interface to the Resource Broker
• Grid-based metadata catalogue for storage of results

Generic Portal

The result of the GridPP2 PRSC process is a proposal to develop a generic GridPP portal for ‘other’ experiments and for dissemination purposes.

Clearly a vital project if GridPP is to reach out beyond a limited set of experiments → the middleware must be of general use to experiments with little or no spare manpower to tailor their own code to Grid use.

Possible use case for simple submission of generic executable jobs:
• User submits a job to a web-based GridPP portal, specifying the local executable, libraries, steering files, input-file metadata and desired location of the output data files. Requires a generic portal.
• Tool queries the experiment-specific Grid-based metadata catalogue to find the location (SE) of the input files. Requires a simple, flexible, generic Grid-based metadata catalogue application that can easily be adapted to the needs of a wide range of experiments.
• Tool submits jobs to the local CE (or more sophisticated scheduling?).
• Tool copies the output data files to the specified location and notifies the user (by e-mail) that the job has finished.

Problems with interfacing to existing experiment-specific software frameworks (FUNNEL etc.)?

Conclusions

So far, progress with Grid use by ‘other’ experiments has been poor due to a lack of available manpower. GridPP2 has not (so far) provided a solution to this problem!
Opportunities for evangelising some large international collaborations through UK involvement have been lost.

Nevertheless, the generic GridPP portal project should certainly help some, although possibly not all, experiments.
• Cautious optimism!

Caveat: the needs of individual ‘other’ experiments must be considered carefully and taken into account when defining the portal's functionality → otherwise we risk designing a generic tool of use to no one!

In any case, non-Grid use of central computing facilities (RAL and elsewhere) must still be supported.
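The generic-portal use case listed earlier (web submission, metadata-catalogue lookup, execution on a CE, output copy and e-mail notification) can be sketched as a thin driver in Python. This is a minimal sketch under stated assumptions, not the proposed GridPP portal: all names (`JobRequest`, `MetadataCatalogue`, `run_portal_request`) are hypothetical, the catalogue is an in-memory stand-in for a Grid-based metadata catalogue, running one job per input file is an assumption, and CE submission, data transfer and e-mail are passed in as callables rather than modelled with any real middleware API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class JobRequest:
    """What a user would fill in on the (hypothetical) web portal form."""
    executable: str
    libraries: List[str]
    steering_files: List[str]
    input_query: Dict[str, str]   # metadata used to select input files
    output_location: str          # where the output files should end up
    user_email: str


class MetadataCatalogue:
    """Toy generic catalogue mapping free-form metadata to (LFN, SE) pairs.

    Each experiment chooses its own metadata keys; none are hard-coded here.
    """

    def __init__(self) -> None:
        self._records: List[Tuple[Dict[str, str], str, str]] = []

    def register(self, lfn: str, se: str, **metadata: str) -> None:
        self._records.append((dict(metadata), lfn, se))

    def query(self, **criteria: str) -> List[Tuple[str, str]]:
        # Return every file whose metadata matches all requested key/value pairs.
        return [(lfn, se) for meta, lfn, se in self._records
                if all(meta.get(key) == value for key, value in criteria.items())]


def run_portal_request(
    request: JobRequest,
    catalogue: MetadataCatalogue,
    submit: Callable[[JobRequest, str, str], str],
    copy_output: Callable[[str, str], str],
    notify: Callable[[str, str], None],
) -> List[str]:
    """Drive the portal steps; the Grid back ends are injected as callables."""
    # Look up the input files (and their storage elements) in the catalogue.
    inputs = catalogue.query(**request.input_query)
    if not inputs:
        raise LookupError(f"no input files match {request.input_query}")
    # Submit one job per input file (stand-in for submission to a local CE).
    job_ids = [submit(request, lfn, se) for lfn, se in inputs]
    # Copy each job's output to the requested location, then notify the user.
    outputs = [copy_output(job_id, request.output_location) for job_id in job_ids]
    notify(request.user_email,
           f"{len(outputs)} job(s) finished; output in {request.output_location}")
    return outputs


if __name__ == "__main__":
    # Stub back ends: nothing here talks to a real Grid service.
    cat = MetadataCatalogue()
    cat.register("lfn:/exp/run001.dat", "se1.example.org", detector="test", run="001")
    req = JobRequest("reco.exe", ["libreco.so"], ["steer.txt"], {"detector": "test"},
                     "se2.example.org:/user/output", "user@example.org")
    print(run_portal_request(
        req, cat,
        submit=lambda r, lfn, se: "job-" + lfn.rsplit("/", 1)[-1],
        copy_output=lambda job, loc: f"{loc}/{job}.out",
        notify=lambda to, msg: print(f"mail to {to}: {msg}"),
    ))
```

Injecting the back ends keeps the driver independent of any single experiment's framework. In this sketch, that is one possible way to approach the interfacing concern raised for FUNNEL and similar frameworks: an experiment with its own submission layer would only need to supply its own `submit` callable.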