The PCAV Cluster caffeine February 6, 2013

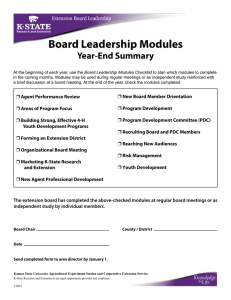

advertisement

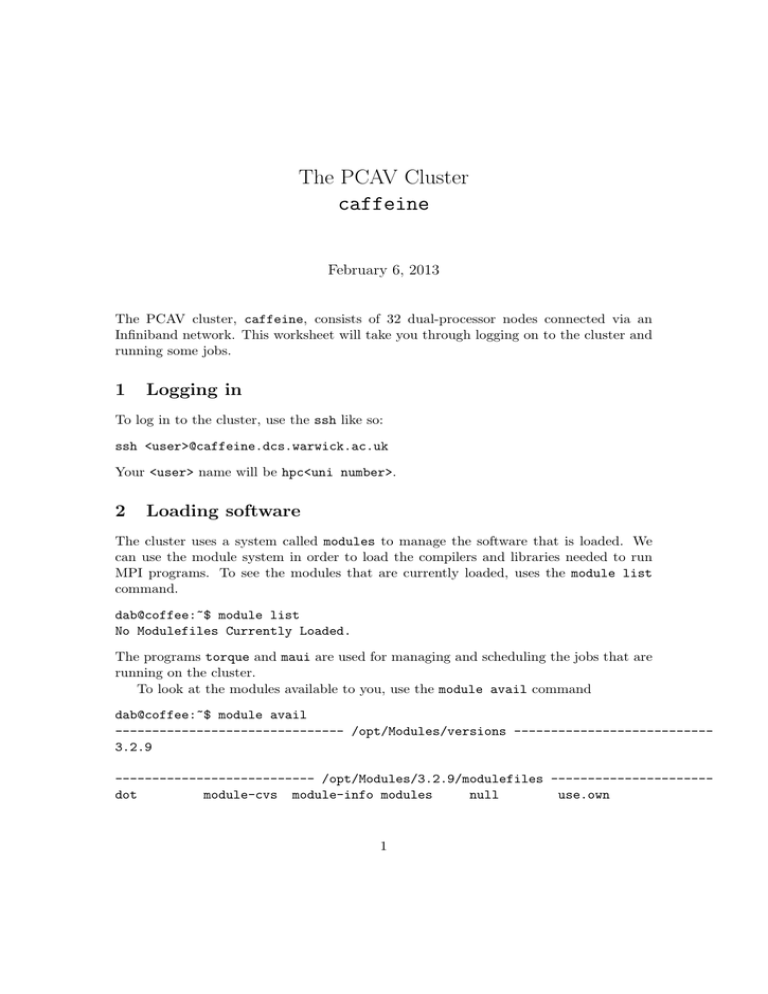

The PCAV cluster, caffeine, consists of 32 dual-processor nodes connected via an InfiniBand network. This worksheet takes you through logging on to the cluster and running some jobs.

1 Logging in

To log in to the cluster, use ssh like so:

    ssh <user>@caffeine.dcs.warwick.ac.uk

Your <user> name will be hpc<uni number>.

2 Loading software

The cluster uses a system called modules to manage the software that is loaded. We can use the module system to load the compilers and libraries needed to run MPI programs. To see the modules that are currently loaded, use the module list command.

    dab@coffee:~$ module list
    No Modulefiles Currently Loaded.

The programs Torque and Maui are used for managing and scheduling the jobs that run on the cluster.

To look at the modules available to you, use the module avail command:

    dab@coffee:~$ module avail
    ------------------------------- /opt/Modules/versions --------------------------
    3.2.9
    --------------------------- /opt/Modules/3.2.9/modulefiles ---------------------
    dot  module-cvs  module-info  modules  null  use.own
    ----------------------------- /opt/etc/modules/compilers -----------------------
    gnu/gnu64-4.4
    -------------------------------- /opt/etc/modules/mpi --------------------------
    gnu/ompi-1.6.2  gnu/ompi-1.6.2-dev
    ------------------------------- /opt/etc/modules/tools -------------------------
    elf/0.8.13   silo/4.8     valgrind/ompi-1.6.2/3.8.1
    hdf5/1.8.9   unwind/1.1   wmtools/ompi-1.6.2/1.2.2
    wmtools/ompi-1.6.2/1.2.3
    ------------------------------- /opt/etc/modules/java --------------------------
    jdk/1.7.0_07

To load the MPI libraries and begin developing programs, we need to load the gnu/ompi-1.6.2 module. To do this, use the module load command followed by the name of the module:
    dab@coffee:~$ module load gnu/ompi-1.6.2
    dab@coffee:~$

Note that nothing is printed when the module loads successfully, but if we check the currently loaded modules, gnu/ompi-1.6.2 will be listed.

Task 1
Check the currently loaded modules and make sure that the module gnu/ompi-1.6.2 is loaded.

Now that we have loaded the MPI libraries, we can compile our programs as normal.

3 Running Your Program

Everything we have done so far has happened on the head node of the cluster, coffee. When we run our programs, we want them to run on the compute nodes. To do this, we need to submit a job to the queue. Jobs in the queue can either be interactive, or run in batch mode with no user input required.

3.1 Interactively

To submit a job to the queue, we use the qsub command, followed by a few parameters:

• -V – this ensures that all environment variables are passed through from the head node to the compute nodes. If you do not set this option, MPI will not work!
• -I – this specifies that we want an interactive job.
• -l nodes=4:ppn=2 – this is how we specify how many processors we want to request. Here we are requesting 4 nodes and 2 processors per node, for a total of 8 processors.

Let's look at how this works:

    dab@coffee:~$ qsub -V -I -l nodes=4:ppn=2
    qsub: waiting for job 175919.taz to start
    qsub: job 175919.taz ready

    dab@caf02:~$

We are now inside an interactive job, running on the compute node caf02. From here, we can run our MPI programs using the mpirun command we have seen previously. One useful thing to note is that if you do not pass mpirun the -n argument with a number of processes, it will automatically start as many processes as you have processors (8 in this example).

To get out of the interactive job, just type exit.

    dab@caf02:~$ exit
    logout

    qsub: job 175919.coffee completed

Running interactive jobs like this is a great way to test your code, since you can watch it execute.
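The nodes=4:ppn=2 request is simple arithmetic (4 nodes × 2 processors per node = 8 processors), and from inside a job you can check what the scheduler actually allocated. The sketch below is a minimal example under two assumptions: that Torque writes one line per allocated processor to the file named by $PBS_NODEFILE, and that the request uses the simple nodes=N:ppn=P form from this worksheet. The function names requested_procs and allocated_procs are hypothetical helpers, not commands installed on the cluster.

```shell
#!/bin/bash
# Hypothetical helpers (not installed on the cluster): compare the processors
# requested with -l nodes=N:ppn=P against what Torque allocated.

# Print N*P for a simple "nodes=N:ppn=P" spec; ppn defaults to 1 if absent.
# Anything after a ',' (e.g. ",walltime=00:10:00") is ignored.
requested_procs() {
    local spec=$1
    local nodes=${spec#nodes=}      # strip leading "nodes=", e.g. "4:ppn=2"
    nodes=${nodes%%[:,]*}           # keep text before the first ':' or ','
    local ppn=1
    case $spec in
        *ppn=*)
            ppn=${spec##*ppn=}      # keep text after "ppn=", e.g. "2"
            ppn=${ppn%%[:,]*}       # drop any trailing ",walltime=..."
            ;;
    esac
    echo $(( nodes * ppn ))
}

# Print the number of processor slots listed in a node file.
# Assumes one line per slot, which is how Torque writes $PBS_NODEFILE.
allocated_procs() {
    local nodefile=${1:-$PBS_NODEFILE}
    local count
    count=$(wc -l < "$nodefile")
    echo $(( count ))               # arithmetic strips any padding from wc
}
```

After sourcing these functions inside the interactive job, requested_procs nodes=4:ppn=2 prints 8, and allocated_procs should print the same number if the full request was granted. Running mpirun -n "$(allocated_procs)" followed by your program is then an explicit version of leaving out -n.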
3.2 Batch Mode

Running in interactive mode has a few limitations, mainly in the amount of time you have and the number of processors you can request. This is why we need batch jobs. To submit a batch job, simply leave out the -I option.

One option that is useful when submitting a batch job is -l walltime=00:10:00, which requests a specific amount of wall time in the format HH:mm:ss. Try to keep your walltimes as short as possible: jobs that ask for less time are easier for the scheduler to fit in, so they tend to start sooner.

A batch job consists of a script, which is used to run your application. A simple script file looks like this:

    # submit.pbs -- Simple job submission
    #PBS -V -l nodes=4:ppn=2,walltime=00:10:00

    # Change to the directory where we submitted the job from
    cd "$PBS_O_WORKDIR"
    mpirun ./helloMPI

Note how we have put the scheduler options on the second line of the script, after #PBS. This means we do not have to type them on the command line when we submit, so we can just type:

    dab@taz:~$ qsub submit.pbs
    175920.coffee

Note that the qsub command prints the job id, which we can use to check on our jobs.

4 Managing Jobs

• To look at the jobs currently in the queue, use the qstat command.

    dab@coffee:~$ qstat
    Job id                    Name             User            Time Use S Queue
    ------------------------- ---------------- --------------- -------- - -----
    172364.coffee             ...gReplace.rep8 csufak                 0 R hpsg

  The S column of the output contains the current status of the job: R means running, C means completed, and E means ending.

• To remove a job from the queue, use the qdel command and pass the job id as an argument, for example qdel 175919.
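On a busy cluster it can help to list only the jobs in one state. The sketch below is a hypothetical helper, jobs_in_state (not a Torque command), that reads qstat's default output on standard input and prints the ids of jobs whose S column matches its argument. It assumes the layout shown above: two header lines, then six whitespace-separated columns (Job id, Name, User, Time Use, S, Queue), with no spaces inside any field.

```shell
#!/bin/bash
# Hypothetical helper (not a Torque command): print the ids of jobs whose
# state (the S column of qstat's default output) equals the first argument.
# Assumes two header lines followed by six whitespace-separated columns:
# Job id, Name, User, Time Use, S, Queue.
jobs_in_state() {
    # NR > 2 skips the header and dashed separator lines;
    # $5 is the S column and $1 is the job id.
    awk -v state="$1" 'NR > 2 && $5 == state { print $1 }'
}
```

For example, qstat | jobs_in_state R prints the ids of running jobs; for the qstat listing above it would print 172364.coffee. An id printed this way can be passed to qdel if the job is yours.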