Optimisation of Grid Enabled

advertisement

Optimisation of Grid Enabled Storage at Small Sites

Greig A Cowan

University of Edinburgh, UK

Jamie K Ferguson, Graeme A Stewart

University of Glasgow, UK

Abstrat

Grid enabled storage systems are a vital part of data proessing in the grid environment. Even

for sites primarily providing omputing yles, loal storage ahes will often be used to buer input

les and output results before transfer to other sites. In order for sites to proess jobs eÆiently it is

neessary that site storage is not a bottlenek to Grid jobs.

dCahe and DPM are two Grid middleware appliations that provide disk pool management of

storage resoures. Their implementations of the storage resoure manager (SRM) webservie allows

them to provide a standard interfae to this managed storage, enabling them to interat with other

SRM enabled Grid middleware and storage devies. In this paper we present a omprehensive set of

results showing the data transfer rates in and out of these two SRM systems when running on 2.4 series

Linux kernels and with dierent underlying lesystems.

This benhmarking information is very important for the optimisation of Grid storage resoures at

smaller sites, that are required to provide an SRM interfae to their available storage systems.

1 Introdution

1.1 Small sites and Grid omputing

The EGEE projet [1℄ brings together sientists

and engineers from 30 ountries to order to reate a Grid infrastruture that is onstantly available for sienti omputing and analysis.

The aim of the Worldwide LHC Computing

Grid (WLCG) is to use the EGEE developed

software to onstrut a global omputing resoure that will enable partile physiists to store

and analyse partile physis data that the Large

Hadron Collider (LHC) and its experiments will

generate when it starts taking data in 2007. The

WLCG is based on a distributed Grid omputing model and will make use of the omputing

and storage resoures at physis laboratories and

institutes around the world. Depending on the

level of available resoures, the institutes are organised into a hierarhy starting with the Tier-0

entre at CERN (the loation of the LHC), multiple national laboratories (Tier-1 entres) and

numerous smaller researh institutes and Universities (Tier-2 sites) within eah partiipating

ountry. Eah Tier is expeted to provide a ertain level of omputing servie to Grid users one

the WLCG goes into full prodution.

The authors' host institutes form part of SotGrid [2℄, a distributed WLCG Tier-2 entre, and

it is from this point of view that we approah the

subjet of this paper. Although eah Tier-2 is

unique in its onguration and operation, similarities an be easily identied, partiularly in

the area of data storage:

1. Typially Tier-2 sites have limited hardware

resoures. For example, they may have one

or two servers attahed to a few terabytes of

disk, ongured as a RAID system. Additional storage may be NFS mounted from

another disk server whih is shared with

other non-Grid users.

2. No tape storage.

3. Limited manpower to spend on administering and onguring a storage system.

The objetive of this paper is to study the onguration of a Grid enabled storage element at

a typial Tier-2 site. In partiular we will investigate how hanges in the disk server lesystems and le transfer parameters aet the data

transfer rate when writing into the storage element. We onentrate on the ase of writing to

the storage element, as this is expeted to be the

most stressful operation on the Tier-2's storage

resoures, and indeed testing within the GridPP

ollaboration bears this out [3℄. Sites will be able

to use the results of this paper to make informed

deisions about the optimal setup of their storage resoures without the need to perform extensive analysis on their own.

Although in this paper we onentrate on partiular SRM [4℄ grid storage software, the results

will be of interest in optimising other types of

grid storage at smaller sites.

This paper is organised as follows. Setion 2

desribes the grid middleware omponents that

were used during the tests. Setion 3 then goes

on to desribe the hardware, whih was hosen

to represent a typial Tier-2 setup, that was used

during the tests. Setion 3.1 details the lesystem formats that were studied and the Linux kernel that was used to operate the disk pools. Our

testing method is outlined in Setion 4 and the

results of these tests are reported and disussed

in Setion 5. In Setion 6 we present suggestions

of possible future work that ould be arried out

to extend our understanding of optimisation of

Grid enabled storage elements at small sites and

onlude in Setion 7.

2 Grid middleware omponents

2.1 SRM

The use of standard interfaes to storage resoures is essential in a Grid environment like

the WLCG sine it will enable interoperation of

the heterogeneous olletion of storage hardware

that the ollaborating institutes have available

to them. Within the high energy physis ommunity the storage resoure manager (SRM) [4℄

interfae has been hosen by the LHC experiments as one of the baseline servies [5℄ that

partiipating institutes should provide to allow

aess to their disk and tape storage aross the

Grid. It should be noted here that a storage element that provides an SRM interfae will be referred to as `an SRM'. The storage resoure broker (SRB) [6℄ is an alternative tehnology developed by the San Diego Superomputing Center

[7℄ that uses a lient-server approah to reate a

logial distributed le system for users, with a

single global logial namespae or le hierarhy.

This has not been hosen as one of the baseline

servies within the WLCG.

2.2 dCahe

dCahe [8℄ is a system jointly developed by

DESY and FNAL that aims to provide a mehanism for storing and retrieving huge amounts

of data among a large number of heterogeneous

server nodes, whih an be of varying arhitetures (x86, ia32, ia64). It provides a single

namespae view of all of the les that it manages and allows aess to these les using a variety of a protools, inluding SRM. By onneting

dCahe to a tape bakend, it beomes a hierarhial storage manager. However, this is not of

partiular relevane to Tier-2 sites who do not

typially have tape storage. dCahe is a highly

ongurable storage solution and an be easily

deployed at Tier-2 sites where DPM is not suÆiently exible.

2.3 Disk pool manager

Developed at CERN, and now part of the gLite

middleware set, the disk pool manager (DPM)

is similar to dCahe in that is provides a single namespae view of all of the les stored on

the multiple disk servers that it manages and

provides a variety of methods for aessing this

data, inluding SRM. DPM was always intended

to be used primarily at Tier-2 sites, so has an emphasis on ease of deployment and maintenane.

Consequently it laks some of the sophistiation

of dCahe, but is simpler to ongure and run.

2.4 File transfer servie

The gLite le transfer servie (FTS) [9℄ is a grid

middleware omponent that aims to provide reliable le transfer between storage elements that

provide the SRM or GridFTP [10℄ interfae. It

uses the onept of hannels [11℄ to dene unidiretional data transfer paths between storage

elements, whih usually map to dediated network pipes. There are a number of transfer parameters that an be modied on eah of these

hannels in order to ontrol the behaviour of the

le transfers between the soure and destination

storage elements. We onern ourselves with two

of these: the number of onurrent le transfers (Nf ) and the number of parallel GridFTP

streams (Ns ). Nf is the number of les that

FTS will simultaneously transfer in any bulk le

transfer operation. Ns is number of simultaneous GridFTP hannels that are opened up for

eah of these les.

2.5 Installation

Setions 2.2 and 2.3 desribed the two disk pool

management appliations that are suitable for

use at small sites. Both dCahe (v1.6.6-5) and

DPM (v1.4.5) are available as part of the 2.7.0

release of the LCG software stak and have been

sequentially investigated in the work presented

here. In eah ase, the LCG YAIM [12℄ installation mehanism was used to reate a default instane of the appliation on the available test mahine (See Setion 3). For dCahe,

PostGreSQL v8.1.3 was used, obtained from the

PostGreSQL website [13℄. Other than installing

the SRM system in order that they be fully operational, no onguration options were altered.

3 Hardware onguration

In order for our ndings to be appliable to existing WLCG Tier-2 sites, we hose test hardware

representative of Tier-2 resoures.

1. Single node with a dual ore Xeon CPU.

This operated all of the relevant servies

(i.e. SRM/nameserver and disk pool aess) that were required for operation of the

dCahe or DPM.

2. 5TB RAID level-5 disk with a 64K stripe.

Partitioned into three 1.7TB lesystems.

3. Soure DPM for the transfers was a sufiently high performane mahine that it

was able to output data aross the network

suh that it would not at as a bottlenek

during the tests.

4. 1Gb/s network onnetion between the

soure and destination SRMs, whih were

on the same network onneted via a Netgear GS742T swith. During the tests,

there was little or no other traÆ on the

network.

5. No rewalling (no iptables module loaded)

between the soure and destination SRMs.

3.1 Kernels and lesystems

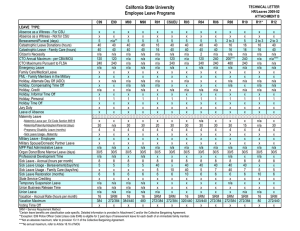

Table 1 summarises the ombinations of Linux

kernels that we ran on our storage element and

the disk pool lesystems that we tested it with.

As an be seen four lesystems, ext2 [14℄, ext3

[15℄, jfs [16℄, xfs [17℄, were studied. Support for

the rst 3 lesystems is inluded by default in the

Sienti Linux [18℄ 305 distribution. However,

support for xfs is not enabled. In order to study

the performane of xfs a CERN ontributed rebuild of the standard Sienti Linux kernel was

used. This diers from the rst kernel only with

the addition of xfs support.

Note that these kernel hoies are in keeping

with the `Tier-2' philosophy of this paper { Tier2 sites will not have the resoures available to reompile kernels, but will instead hoose a kernel

whih inludes support for their desired lesystem.

In eah ase, the default options were used

when mounting the lesystems.

Kernel

ext2

2.4.21

2.4.21+ern xfs

Y

N

Filesystem

ext3

jfs

xfs

Y

N

Y

N

N

Y

Table 1: Filesystems tested for eah Linux kernel/distribution.

4 Method

The method adopted was to use FTS to transfer 30 1GB les from a soure DPM to the test

SRM, measuring the data transfer rate for eah

of the lesystem-kernel ombinations desribed

in Setion 3.1 and for dierent values of the FTS

parameters identied in Setion 2.4. We hose

to look at Nf ; Ns 2 (1; 3; 5; 10). Using FTS enabled us to reord the number of suessful and

failed transfers in eah of the bathes that were

submitted. A 1GB le size was seleted as being representative of the typial lesize that will

be used by the LHC experiments involved in the

WLCG.

Eah measurement was repeated 4 times to

obtain a mean. Any transfers whih showed

anomalous results (e.g., less than 50% of the

bandwidth of the other 3) were investigated and,

if neessary, repeated. This was to prevent failures in higher level omponents, e.g., FTS from

adversely aeting the results presented here.

5 Results

5.1 Transfer Rates

Table 2 shows the transfer rate, averaged over

Ns , for dCahe for eah of the lesystems. Similarly 3 shows the rate averaged over Nf .

Nf

1

3

5

10

ext2

157

207

209

207

Filesystem

ext3

jfs

xfs

146

176

162

165

137

236

246

244

156

236

245

247

Table 2: Average transfer rates per lesystem

for dCahe for eah Nf .

Ns

1

3

5

10

ext2

217

191

189

183

Filesystem

ext3

jfs

xfs

177

159

155

158

234

214

208

207

233

219

217

215

Table 3: Average transfer rates per lesystem

for dCahe for eah Ns .

Table 4 shows the transfer rate, averaged over

Ns , for DPM for eah of the lesystems. Similarly 5 shows the rate averaged over Nf .

The following onlusions an be drawn:

1. Transfer rates are greater when using modern high performane lesystems like jfs and

xfs than the older ext2,3 lesystems.

2. Transfer rates for ext2 are higher than ext3,

beause it does not suer a journalling overhead.

3. For all lesystems, having more than 1 simultaneous le transferred improves the average transfer rate substantially. There appears to be little dependene of the average transfer rate on the number of les in a

multi-le transfer (for the range of Nf studied).

4a. With dCahe, for all lesystems, single

stream transfers ahieve a higher average

transfer rate than multistream transfers.

4b. With DPM, for ext2,3 single stream transfers ahieve a higher average transfer rate

than multistream transfers. For xfs and jfs

multistreaming has little eet on the rate.

4. In both ases, there appears to be little dependene of the average transfer rate on the

number of streams in a multistream transfer

(for the range of Ns studied).

Nf

1

3

5

10

Filesystem

ext2

214

297

300

282

ext3

jfs

xfs

192

252

261

253

141

357

368

379

204

341

354

356

Table 4: Average transfer rates per lesystem

for DPM for eah Nf .

Ns

1

3

5

10

ext2

Filesystem

293

264

264

272

ext3

jfs

xfs

277

209

237

234

289

313

303

339

310

323

307

317

Table 5: Average transfer rates per lesystem

for DPM for eah Ns .

5.2 Error Rates

Table 6 shows the average perentage error rates

for the transfers obtained with both SRMs.

5.2.1

dCahe

With dCahe there were a small number of transfer errors for ext2,3 lesytems. These an be

traed bak to FTS anelling the transfer of a

single le. It is likely that the high mahine load

generated by the I/O traÆ impaired the performane of the dCahe SRM servie. No errors

were reported with xfs and jfs lesystems whih

an be orrelated with the orrespondingly lower

load that was observed on the system ompared

to the ext2,3 lesystems.

5.2.2

DPM

With DPM all of the lesystems an lead to errors in transfers. Similarly to dCahe these errors generally our beause of a failure to orretly all srmSetDone() in FTS. This an be

traed bak to the DPM SRM daemons being

SRM

dCahe

DPM

ext2

0.21

0.05

Filesystem

ext3

jfs

xfs

0.05

0.10

0

1.04

0

0.21

Table 6: Perentage observed error rates for different lesystems and kernels with dCahe and

DPM.

badly aeted by the mahine load generated

by the high I/O traÆ. In general it is reommended to separate the SRM daemons from the

atual disk servers, partiularly at larger sites.

Note that the error rate for jfs was higher,

by some margin, than for the other lesystems.

However, this was mainly due to one single transfer whih had a very high error rate and further

testing should be done to see if this repeats.

5.3 Comment on FTS parameters

The poorer performane of multiple parallel

GridFTP streams relative to a single stream

transfer observed for dCahe and for DPM with

ext2,3 an be understood by onsidering the I/O

behaviour of the destination disk. With multiple

parallel streams, a single le is split into setions

and sent down separate TCP hannels to the destination storage element. When the storage element starts to write this data to disk, it will have

to perform many random writes to the disk as

dierent pakets arrive from eah of the dierent

streams. In the single stream ase, data from a

single le arrives sequentially at the storage element, meaning that the data an be written

sequentially on the disk, or at least with signiantly fewer random writes. Sine random writes

involve physial movement of the disk and/or the

write head, it will degrade the write performane

relative to a sequential write aess pattern.

In fat multiple TCP streams are generally

beneial when performing wide area network

transfers, in order to maximise the network

throughput. In our ase as the soure and destination SRMs were on the same LAN the eet

of multistreams was generally detrimental.

It must be noted that a systemati study was

not performed for the ase of Nf > 10. However, initial tests show that if FTS is used to

manage a bulk le transfer on a hannel where

Nf is initially set to a high value, then the

destination storage element experienes a high

load immediately after the rst bath of les has

been transferred, ausing a orresponding drop

in the observed transfer rate. This eet an be

seen in Figure 1, where there is an initial high

data transfer rate, but this redues one the rst

bath of Nf les has been transferred. It is likely

that the eet is due to the post-transfer negotiation steps of the SRM protool ourring simultaneously for all of the transferred les. The

resulting high load on the destination SRM node

auses all subsequent FTS transfer requests to

time out, resulting in the transfers failing. It

must be noted that our use of a 1GB test le for

Figure 1: Network prole of destination dCahe

node with jfs pool lesystem during an FTS

transfer of 30 1GB les. Throughout the transfer, Nf = 15. Final rate was 142Mb/s with 15

failed transfers.

Figure 2: Network prole of destination dCahe

node with jfs pool lesystem during an FTS

transfer of 30 1GB les. Nf = 1 at the start

of the transfer, inreasing up to Nf = 15 as

the transfer progressed. Final transfer rate was

249Mb/s with no failed transfers.

all transfers will exaerbate this eet. Figure 2

shows how this eet disappears when the start

times of the le transfers are staggered by slowly

inreasing Nf from Nf = 1 to 15, indiating that

higher le transfer rates as well as fewer le failures ould be ahieved if FTS staggered the start

times of the le transfers. Improvements ould

be made if multiple nodes were used to spread

the load of the destination storage element. If

available, separate nodes ould be used to run

the disk server side of the SRM and the namespae servies.

6 Future Work

The work desribed in this paper is a rst step

into the area of optimisation of grid enabled storage at Tier-2 sites. In order to fully understand

and optimise the interation between the base

operating system, disk lesystem and storage appliations, it would be interesting to extend this

researh in a number of diretions:

1. Using non-default mount options for eah of

the lesystems.

2. Repeat the tests with SL 4 as the base oper-

ating system (whih would also allow testing

of a 2.6 Linux kernel).

3. Investigate the eet of using a dierent

stripe size for the RAID onguration of the

disk servers.

4. Vanilla installations of SL generally ome

with unsuitable default values for the TCP

read and write buer sizes. In light of

this, it will be interesting to study the how

hanges in the Linux kernel-networking tuning parameters hange the FTS data transfer rates. Initial work in this area has shown

that improvements an be made.

5. It would be interesting to investigate the

eet of dierent TCP implementations

within the Linux kernel. For example, TCPBIC [19℄, westwood [20℄, vegas [21℄ and

web100 [22℄.

6. Charaterise the performane enhanement

that an be gained by the addition of extra

hardware, partiularly the inlusion of extra

disk servers.

When the WLCG goes into full prodution, a

more realisti use ase for the operation of an

SRM will be one in whih it is simultaneously

writing (as is the ase here) and reading les

aross the WAN. Suh a simulation should also

inlude a bakground of loal le aess to the

storage element, as is expeted to our during

periods of end user analysis on Tier-2 omputing

lusters. Preliminary work has already started

in this area where we have been observing the

performane of existing SRMs within SotGrid

during simultaneous read and writing of data.

7 Conlusion

This paper has presented the results of a omparison of le transfer rates that were ahieved when

FTS was used to opy les between Grid enabled

storage elements that were operating with dierent destination disk pool lesystems and a 2.4

Linux kernel. Both dCahe and DPM were onsidered as destination disk pool managers providing an SRM interfae to the available disk.

In addition, the dependene of the le transfer

rate on the number of onurrent les (Nf ) and

the number of parallel GridFTP streams (Ns )

was investigated.

In terms of optimising the le transfer rate

that ould be ahieved with FTS when writing

into a harateristi Tier-2 SRM setup, the results an be summarised as follows:

1. Pool lesystem: jfs or xfs.

2. FTS parameters: Low value for Ns , high

value for Nf (staggering the le transfer

start times). In partiular, for dCahe

Nf = 1 was identied as giving optimal

transfer rate.

It is not possible to make a single reommendation on the SRM appliation that sites should

use based on the results presented here. This deision must be made depending upon the available hardware and manpower resoures and onsideration of the relative features of eah SRM

solution.

Extensions to this work were suggested, ranging from studies of the interfae between the kernel and network layers all the way up to making hanges to the hardware onguration. Only

when we understand how the hardware and software omponents interat to make up an entire

Grid enabled storage element will we be able to

give a fully informed set of reommendations to

Tier-2 sites regarding the optimal SRM onguration. This information is required by them in

order that they an provide the level of servie

expeted by the WLCG and other Grid projets.

8 Aknowledgements

The researh presented here was performed using SotGrid [2℄ hardware and was made possible

with funding from the Partile Physis and Astronomy Researh Counil through the GridPP

projet [23℄. Thanks go to Paul Millar and David

Martin for their tehnial assistane during the

ourse of this work.

Referenes

[1℄ Enabling Grids for E-sieneE

http://www.eu-egee.org/

[2℄ SotGrid, the Sottish Grid Servie

http://www.sotgrid.a.uk

[3℄ GridPP Servie Challenge Transfer tests

http://wiki.gridpp.a.uk/wiki

/Servie Challenge Transfer Tests

[4℄ The SRM ollaboration

http://sdm.lbl.gov/srm-wg/

[5℄ LCG Baseline Servies Group Report

http://ern.h/LCG/peb/bs/BSReportv1.0.pdf

[6℄ The SRB ollaboration

http://www.sds.edu/srb/index.php/Main Page

[7℄ The San Diego Superomputing Center

http://www.sds.edu

[8℄ dCahe ollaboration

http://www.dahe.org

[9℄ gLite FTS

https://uimon.ern.h/twiki/bin/view/

LCG/FtsRelease14

[10℄ Data transport protool developed by the

Globus Alliane

http://www.globus.org/grid software

/data/gridftp.php

[11℄ P. Kunszt, P. Badino, G. MCane,

The gLite File Transfer Servie: Middleware Lessons Learned from the Servie Challenges, CHEP 2006 Mumbai.

http://indio.ern.h

/ontributionDisplay.py?ontribId=20

&sessionId=7&onfId=048

[12℄ LCG generi installation manual

http://grid-deployment.web.ern.h/griddeployment/doumentation/LCG2Manual-Install/

[13℄ PostGreSQL Database website

http://www.postgresql.org/

[14℄ R.Card, T. Ts'o, S. Tweedie (1994). "Design and implementation of the seond extended lesystem." Proeedings of the First

Duth International Symposium on Linux.

ISBN 90-367-0385-9.

[15℄ Linux ext3 FAQ

http://batleth.sapientisat.org/projets/FAQs/ext3-faq.html

[16℄ Jfs lesystem homepage

http://jfs.soureforge.net/

[17℄ Xfs lesystem homepage

http://oss.sgi.om/projets/xfs/index.html

[18℄ Sienti Linux homepage

http://sientilinux.org

[19℄ A TCP variant for high speed long distane

networks

http://www.s.nsu.edu/faulty/rhee

/export/bitp/index.htm

[20℄ TCP Westwood details

http://www.s.ula.edu/NRL/hpi/tpw/

[21℄ TCP Vegas details

http://www.s.arizona.edu/protools/

[22℄ TCP Web100 details

http://www.hep.ul.a.uk/ytl/tpip/web100/

[23℄ GridPP - the UK partile physis Grid

http://gridpp.a.uk