FEA of Slope Failures with a Stochastic Distribution of

advertisement

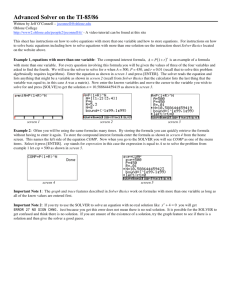

FEA of Slope Failures with a Stochastic Distribution of Soil Properties Developed and Run on the Grid William Spencer1, Joanna Leng2, Mike Pettipher2 1 School of Mechanical Aerospace and Civil Engineering, University of Manchester 2 Manchester Computing, University of Manchester Abstract This paper presents a case study about how one user developed and ran codes on a grid, in this case in the form of the National Grid Service (NGS). The user had access to the core components of the NGS which consisted of four clusters which are configured, unlike most other U.K. academic hpc services, to be used primarily through grid technologies, in this case globus. This account includes how this code was parallelised, its performance and the issues involved in selecting and using the NGS for the development and running of these codes. A general understanding of the application area, computational geotechnical engineering, and the performance issues of these codes are required to make this clear. 1. Introduction The user is investigating the stochastic modelling of heterogeneous soils in geotechnical engineering using the Finite Element Analysis (FEA) method. Here thousands of realisations of soil properties are generated to match statistical characteristics of real soil, so that margins of design reliability can be assessed. The user wished to understand what performance benefits they could gain from parallelising their code. To do this the user needed to develop a parallel version of the code. The production grid service philosophy of the NGS seemed appropriate to run the multiple realisations necessary. To do this the user had to test and run the code through grid technologies, which in this case meant globus. 2. Scientific Objectives Engineers characterise material property, such as the strength of steel, by a single value, in order to simplify subsequent calculations. By choosing a single value, any inherent variation in the material is ignored. In soil, widely differing properties are seen over a small spatial distance, invalidating this assumption. In this case the model is a 3D representation of a soil slope or embankment. The slope fails when a volume of soil breaks away from the rest of the slope and moves en-masse downhill due to gravity, Figure 1. This process is modelled using a FEA with an elastic-perfectly plastic Tresca model to simulate a clay soil. Previous studies in this field, e.g. [5], have been limited to 2D analysis with modest scope. These 2D models are flawed in their representation of material variability and failure modes, thus the novel extension to 3D provides a much greater understanding of the problem. Figure 1: Example of random slope failure contours of displacement Stochastic analysis was performed using the Monte Carlo framework to take account of spatial variation. A spatially correlated random field of property values is generated using the LAS method [2]. This mimics the natural variability of shear strength within the ground; this is mapped onto a cubiodal FE mesh. Figure 2 shows a 3D random field, typical of measured values for natural soils [1]. FEA is then performed in which the slope is progressively loaded, until it fails. This process is then repeated with a different random field for each realisation. After many realisations the results are collated allowing the probability of failure to be derived for any slope loading. A further limitation is placed on the mesh resolution in order to preserve accuracy, the maximum element size is 0.5m cubed. 500 realisations were necessary to gain an accurate understanding of the slope failures reliability. same stress return method; however in this solver the factorisation and the loading are inherently linked. Other stress return methods are not available for the Tresca soil model used. 4.1 Comparison of direct and iterative solvers Figure 2: 3D random field 3. Strategy for Parallelisation Two approaches were investigated, one that uses a serial solver, but achieves parallelism by task farming realisations to different processors; and the other that uses a parallel solver and task farming. Both codes were adapted from their original forms [4]. Once developed both codes were analysed to discover which would be most appropriate for full scale testing. A serial version, using a direct solver, was developed on a desktop platform. The second, a simple parallel version that uses an iterative element by element solver, was developed and optimised on a local HPC facility, comprising SGI Onyx 300 platform with 32 MIPS based processors. The limiting factor preventing further work on the Onyx was time to solution, with serial solutions taking six times longer than on the user’s desktop. In order to allow larger analyses to be conducted 100000 CPU hour were applied for, and allocated, on the NGS. The NGS consists of 4 clusters of Pentium 3 machines, each processor running approximately 1.5 times faster than the user’s desktop and 10 times faster than the Onyx processors. 4. Performance This is a plasticity problem that uses many loading iterations to distribute stresses and update the plasticity of elements. The viscoplastic strain method used requires hundreds of iterations but no updates to the stiffness matrix. This allows the time consuming factorisation of the stiffness matrix to be decoupled from the loading iterations. The iterative solver uses the It is the normal expectation that the iterative solver would outperform the serial direct solver in a parallel environment. Indeed the iterative solver showed good speedup when run on increasing numbers of processors, as expected by Smith [4]. The plasticity analysis discussed here does not fit this generalisation. Consideration of the mathematical procedures adopted in this iterative solver and in the direct solver lead to the observation that the direct solver takes advantage of the unchanging mesh. Table I compares timings for codes with both solvers, the iterative and the direct, for one realisation only. The code with the direct solver was run on 1 processor while the code with the iterative solver was run on 8 processors. The table demonstrates the efficiency of the direct solver for this problem in the ratio of timings for the two solvers in terms of wall time and so demonstrating the algorithmic efficiency of the direct solver for this particular analysis. When the use of task farming for multiple realisations is studied, the direct solver becomes even more competitive; assuming efficient task farming over 8 processors, then this is demonstrated by the ratio of CPU time. The serial solver strategy is an order of magnitude faster than the parallel solver. The minimum desired mesh depth for this problem is 60 elements, with 500 realisations and 16 sets of parameters being needed for a minimal analysis. The user found that feasibly only 32 processors were available for use on the NGS. Based on extrapolation of Table I a rough estimate of wall clock time can be calculated; task-farming with the direct solver requires 5.8 days while task-farming with the iterative solver requires 57.9 days. Clearly the direct solver combined with running realisations in parallel is the only reasonable choice. 4.2 Memory requirements The only major drawback is that the direct solver consumes much more memory than the iterative solver. The resources for the direct solver are greater as it needs to form and save the entire stiffness matrix. The required memory increases with the number of degrees of freedom squared. Whereas the iterative solver has a total memory requirement that grows with Number of Elements in y Direction (length of slope) 1 3 5 15 30 Direct Solver on Parallel Solver Wall Time CPU Time 1 processor on 8 processors (column A) (column B) Time (s) per Realisation Ratio of B/A 27.7 111.7 4.0 32.3 110.9 245.0 2.2 17.7 200.9 392.6 2.0 15.6 592.4 982.4 1.7 13.3 1092.5 1601.1 1.5 11.7 Table I: Comparison of direct and iterative solver run times. the number of degrees of freedom divided by the number of processors used. Thus the direct solver is limited to the amount of memory locally available to the CPU, which on the two main NGS nodes is 1 Gb. This gives an absolute limit to the number of elements that can be solved; In this case it is fortunate that the maximum size of mesh is large enough to give a meaningful solution. The results in Table I, show that the iterative solver is increasingly competitive the larger the problem gets. Combined with the memory limitation of the direct solver a further increase in mesh size could only be achieved using the iterative solver. 5. Experimentation The validity of the final code was proven by comparing it with the results of the previously studied 2D version [5] and those of well established deterministic analytical results. In both cases the results compared very favourably, providing a check on both the 3D FEA code and the 3D random field generator. Further to the validation, full scale analyses are currently being undertaken. Preliminary results [6] show that use of the more realistic 3D model has a significant effect on the reliability of the slope. These results have interesting implications for the design of safe and efficient slopes, showing that a ‘safe’ slope designed by traditional methods can either be very conservative or risky, if the effects of soil variability are not considered. 6. Suitability and Use of the National Grid Service (NGS) The use of commodity clusters is ideal for this code’s final form, as its fundamental nature does not require the ultra fast inter-processor communication or shared memory of some proprietary hardware. The very large memory requirements of the direct solver made the individual per processor memory limit the constraining factor.. For the alternate iterative solver version high speed interconnections would go some way to speeding it up. The NGS is configured to be used through globus. In this work globus was used for several purposes from interactive login, to file transfer and job submission. The user is based as the School of Engineering at the University of Manchester and it is worth noting that this school has a policy of only supporting Microsoft windows on their local desktop machines and globus is not part of the routine configuration. Globus was not installed locally but instead the user started a session on a local Onyx and started their globus session from there. Once the user had his code working on one of the NGS nodes he transferred and compiled a copy on each of the other nodes. Job submission was performed using each of these executables. The code is designed to run in a particular environment with set directories and input and out put files. The user developed a number of scripts to automate the set up of this configuration and these were run by hand before a job was run. With time and confidence the user could completely automate the process. The jobs were submitted from the Onyx using scripts to write and execute pbs, these were configured to provide a wide range of job submission types and then reused or adapted ad hoc. The initial scripts were quite simple with just a globus-job-run command. These saved the user typing out complex commands. More sophisticated scripts were developed as the user became confident. These scripts set environment variables and used the Resource Allocation Language file for job submission. The user monitored the load on all the nodes so that he could submit jobs on the node with the lowest load. A small amount of work was needed to get the codes that had previously been running on the Onyx to run on the NGS machines. The compiler on the NGS nodes was stricter in its implementation of standards. The method adopted for editing the code was to do so on a desktop windows machine in a FORTRAN editor and then transfer the file via the local Onyx to the NGS node, whence it was compiled. This did take some extra time in transferring files to and fro but in general the reduction in execution time from running tests on NGS more than made up for it. Debugging was achieved through printing output to file and by visualization when the code ran to completion, but incorrectly. While totalview is available on the nodes the user was not aware of this tools functionality or how to use this profiling tool. Overall developing the code on the grid was no more painful than in any other environment, the only downside being the lack of processor availability when the service is busy; either requiring the use of a different NGS node or the local Onyx. 7. Discussion and Conclusions It should be noted that prior to this project on the NGS this user had no experience or understanding of e-Science and the grid. He was not familiar with hpc and had little practice in using HPC services. Initially it was daunting to use a service like the NGS where the policies and practices were different to the user’s local HPC service. The user took some time and support to learn how to get the best out of the service but in the end was happy with both the service and the computational results. The main value of using the NGS for this user was to allow the execution of code requiring large amounts of CPU time. Large volumes of CPU time for in house machines are often hard to come by because they are in high demand. At present the NGS is moderately loaded, and has powerful computers, allowing such large analyses to be run in a timely fashion. Generally a run requiring 32 CPU’s was started within 24 hours. It also (usually) allowed virtually on demand access to run small debug or test programs, most useful in code development. The NGS has been in full production since September 2004 and currently has over 300 active users. This user applied for resources near the beginning of the service, autumn 2004. The loading of the service has increased steadily and with this the monitoring of the use of resources has become more critical [3]. As the number of users increases further and the resources become scarcer it is expected that the policy of the NGS will develop. Given the very large allocation of time given to this user (100000 hours), this has not been of severe detriment, but may constrain other users with smaller allocations. In its present form the ideal solution to filling the desired number of CPU hours would be to harness the spare CPU time of the university’s public clusters via distributed grid application such as BOINC [7] or Condor [8]. Short of this the use of the NGS provides a ready to run and largely trouble free resource, with good support services. The future of this particular application is no doubt in a fully parallel implementation, with the iterative solver. This is the only computational approach that will deal with the demands of the science, which will require the analysis of higher resolution and longer slopes. Little optimisation or profiling was used on any of the codes. It is expected that improvements to efficiency and speed would be introduced particularly to the iterative solver version. Further development of the tangent stiffness method making it applicable to this problem would dramatically improve the performance of the iterative solver, at the expense of considerable research time. Acknowledgment The authors would like to acknowledge the use of the UK National Grid Service in carrying out this work. References [1] K. Hyunki, “Spatial Variability in Soils: Stiffness and Strength”, PhD thesis, Georgia Institute of Technology, August 2005, pp 15-16. [2] G.A. Fenton and E.H. Vanmarcke, "Simulation of random fields via local average subdivision." J. of Engineering Mechanics, ASCE, 116(8), Aug 1990, pp 1733-1749. [3] NGS (National Grid Service); http://www.ngs.ac.uk/, last accessed 21/2/06. [4] I.M. Smith and D.V. Griffiths, ”Programming the finite element method” third edition, John Wiley & Son, Nov 1999. [5] M.A. Hicks and K. Samy, “Reliability-based characteristic values: a stochastic approach to Eurocode 7”, Ground Engineering, Dec 2002, pp 30-34. [6] W. Spencer and M.A. Hicks, “3D stochastic modelling of long soil slopes”, 14th ACME conference, Belfast, April 2006, pp 119-122. [7] Berkley open infrastructure for network computing; http://boinc.berkeley.edu/, last accessed 2/4/06. [8] Condor for creating computational grids; http://www.cs.wisc.edu/pkilab/condor/, last accessed 4/7/06