An Optimal Family of Exponentially Accurate One-Bit Sigma-Delta Quantization Schemes Percy Deift

advertisement

An Optimal Family of Exponentially Accurate One-Bit

Sigma-Delta Quantization Schemes

Percy Deift∗

C. Sinan Güntürk∗

Felix Krahmer∗†

January 21, 2010

Abstract

Sigma-Delta modulation is a popular method for analog-to-digital conversion of

bandlimited signals that employs coarse quantization coupled with oversampling. The

standard mathematical model for the error analysis of the method measures the performance of a given scheme by the rate at which the associated reconstruction error

decays as a function of the oversampling ratio λ. It was recently shown that exponential accuracy of the form O(2−rλ ) can be achieved by appropriate one-bit Sigma-Delta

modulation schemes. By general information-entropy arguments r must be less than

1. The current best known value for r is approximately 0.088. The schemes that were

designed to achieve this accuracy employ the “greedy” quantization rule coupled with

feedback filters that fall into a class we call “minimally supported”. In this paper, we

study the minimization problem that corresponds to optimizing the error decay rate

for this class of feedback filters. We solve a relaxed version of this problem exactly

and provide explicit asymptotics of the solutions. From these relaxed solutions, we

find asymptotically optimal solutions of the original problem, which improve the best

known exponential error decay rate to r ≈ 0.102. Our method draws from the theory

of orthogonal polynomials; in particular, it relates the optimal filters to the zero sets

of Chebyshev polynomials of the second kind.

1

Introduction

Conventional Analog-to-Digital (A/D) conversion systems consist of two basic steps: sampling and quantization. Sampling is the process of replacing the input function (x(t))t∈R by a

sequence of its sample values (x(tn ))n∈Z . The sampling instances (tn ) are typically uniform,

i.e., tn = nτ for some τ > 0. It is well known that this process incurs no loss of information

if the signal is bandlimited and τ is sufficiently small. More precisely, if supp x

b ⊂ [−Ω, Ω],

1

then it suffices to pick τ ≤ τcrit := 2Ω , and the sampling theorem provides a recipe for perfect

∗

†

Courant Institute of Mathematical Sciences, New York University, New York, NY, USA.

Hausdorff Center for Mathematics, Universität Bonn, Bonn, Germany.

1

reconstruction via the formula

x(t) = τ

X

x(nτ )ϕ(t − nτ ),

(1)

n∈Z

where ϕ is any sufficiently localized function such that

1, |ξ| ≤ Ω,

ϕ(ξ)

b =

0, |ξ| ≥ 2τ1 ,

(2)

which we shall refer to as the admissibility condition for ϕ. Here, x

b and ϕ

b denote the

Fourier transform of x and ϕ, respectively. In this paper, we shall work with the following

normalization of the Fourier transform on R:

Z ∞

b

f (ξ) :=

f (t)e−2πiξt dt.

−∞

The value ρ := 1/τ is called the sampling rate, and ρcrit := 1/τcrit = 2Ω is called the critical

(or Nyquist) sampling rate. The oversampling ratio is defined as

λ :=

ρ

ρcrit

.

(3)

We shall assume in the rest of the paper that the value of Ω is arbitrary but fixed.

The next step, quantization, is the process of discretization of the amplitude, which

involves replacing each x(tn ) by another value qn suitably chosen from a fixed, typically

finite set A (the alphabet). The resulting sequence (qn ) forms the raw digital representation

of the analog signal x.

The standard approach to recover an approximation x̃ to the original analog signal x is

to use the reconstruction formula (1) with x(nτ ) replaced by qn , which produces an error

signal e := x − x̃ given by

X

e(t) = τ

x(nτ ) − qn ϕ(t − nτ ).

(4)

n∈Z

The quality of this approximation can then be measured by a variety of functional norms on

the error signal. In this paper we will use the norm kekL∞ which is standard and arguably

the most meaningful.

Traditionally, A/D converters have been classified into two main families: Nyquist-rate

converters (λ ≈ 1) and oversampling converters (λ 1). When τ = τcrit , the set of

functions {ϕ(· − nτ ) : n ∈ Z} forms an orthogonal system. Therefore, a Nyquist-rate

converter necessarily has to keep |x(nτ ) − qn | small for each n in order to achieve small

overall reconstruction error; this requires that the alphabet A forms a fine net in the range

of the signal. On the other hand, oversampling converters are not bound by this requirement

because as τ decreases (i.e., λ increases), the kernel of the operator

X

Tτϕ : (cn ) 7→

cn ϕ(· − nτ )

(5)

n∈Z

2

gets “bigger” in a certain sense, and small error can be achieved even with very coarse alphabets A. The extreme case is a one-bit quantization scheme, which uses A = {−1, +1}. For

the circuit engineer, coarse alphabets mean low-cost analog hardware because increasing the

sampling rate is cheaper than refining the quantization. For this reason, oversampling data

converters, in particular, Sigma-Delta (Σ∆) modulators (see section 2.1 below) have become

more popular than Nyquist-rate converters for low to medium-bandwidth signal classes, such

as audio [12]. On the other hand, the mathematics of oversampled A/D conversion is highly

challenging as the selection problem of (qn ) shifts from being local (i.e., the qn ’s are chosen

independently for each n) to a more global one; a quantized assignment qn ∈ A should be

computed based not only on the current sample value x(tn ), but also taking into account

sample values x(tk ) and assignments qk in neighboring positions. Many “online” quantization

applications, such as A/D conversion of audio signals, require causality, i.e., only quantities

that depend on prior instances of time can be utilized. Other applications, such as digital

halftoning, may not be strictly bound by the same kind of causality restrictions although

it is still useful to process samples in some preset order. In both situations, the amount of

memory that can be employed in the quantization algorithm is one of the limiting factors

determining the performance of the algorithm.

This paper is concerned with the approximation theory of oversampled, coarse quantization, in particular, one-bit quantization of bandlimited functions. Despite the vast engineering literature on the subject (e.g., see [12]), and a recent series of more mathematically

oriented papers (e.g., [5, 15, 7, 8, 9, 1, 2]), the fundamental question of how to carry out

optimal quantization remains open. After the pioneering work of Daubechies and DeVore on

the mathematical analysis and design of Σ∆ modulators [5], more recent work showed that

exponential accuracy in the oversampling ratio λ can be achieved by appropriate one-bit Σ∆

modulation schemes [7]. The best achievable error decay rate for these schemes was O(2−rλ )

with r ≈ 0.076 in [7]. Later, with a modification, this rate was improved to r ≈ 0.088 in [11].

It is known that any one-bit quantization scheme has to obey r < 1, whereas it is not known

if this upper bound is tight [4, 7]. This paper improves the best achievable rate further to

r ≈ 0.102 by designing an optimal family of Sigma-Delta modulation schemes within the

class of so-called “minimally supported” recursion filters. These schemes were introduced in

[7] together with a number of accompanying open problems. The main results of this paper

(see Theorems 4.1, 5.5, 5.6) are based on the solution of one of these problems, namely,

the optimization of the minimally supported recursion filters that are used in conjunction

with the greedy rule of quantization. One of our main results is that the supports of these

optimal filters are given by suitably scaled zero sets of Chebyshev polynomials of the second

kind, and we use this result to derive our improved exponent for the error bound.

The paper is organized as follows. In Section 2, we review the basic mathematical theory

of Σ∆ modulation as well as the constructions and methods of [7] which will be relevant to

this paper, such as the family of minimally supported recursion filters, the greedy quantization rule, and fundamental aspects of the error analysis leading to exponentially decaying

error bounds. As we explain, the discrete optimization problem introduced in [7] plays a

key role in the analysis. In Section 3, we introduce a relaxed version of the optimization

3

problem, which is analytically tractable. This relaxed problem is solved in Section 4 and

analyzed asymptotically in Section 5 as the order of the reconstruction filter goes to infinity.

Section 5 also contains the construction of asymptotically optimal solutions to the discrete

optimization problem, which yields the exponential error decay rate mentioned above. Finally, we extend our results to multi-level quantization alphabets in Section 6. We also

collect, separately in the Appendix, the properties and identities for Chebyshev polynomials

which are used in the proofs of our results.

2

2.1

Background on Σ∆ modulation

Noise shaping and feedback quantization

Σ∆ modulation is a generic name for a family of recursive quantization algorithms that

utilize the concept of “noise shaping”. (The origin of the terminology Σ∆, or alternatively

∆Σ, goes back to the patent application of Inose et al [10] and refers to the presence of

certain circuit components at the level of A/D circuit implementation; see also [12, 13].)

Let (yn ) be a general sequence to be quantized (for example, yn = x(nτ )), (qn ) denote the

quantized representation of this sequence, and (νn ) be the quantization error sequence (i.e.,

the “noise”), defined by ν := y − q. Noise shaping is a quantization strategy whose objective

is to arrange for the quantization noise ν to fall outside the frequency band of interest, which,

in our case is the low-frequency band. Note that the effective error we are interested in is

e = Tτϕ (ν), hence one would like ν to be close to the kernel of Tτϕ . It is useful to think of

Tτϕ (ν) as a generalized convolution of the sequence ν and the function ϕ (sampled at the

scale τ ). Let us introduce the notation

ν ~τ ϕ := Tτϕ (ν).

(6)

Note that (a ∗ b) ~τ ϕ = a ~τ (b ~τ ϕ) and a ~τ (ϕ ∗ ψ) = (a ~τ ϕ) ∗ ψ, etc., where a and b are

sequences on Z, ϕ and ψ are functions on R, and ∗ denotes the usual convolution operation

(on Z and R). By taking the Fourier transform of (5) one sees that the kernel of Tτϕ consists

of sequences ν that are spectrally disjoint from ϕ, i.e., “high-pass” sequences, since ϕ is a

“low-pass” function, as apparent from (2). Thus, arranging for ν to be (close to) a high-pass

sequence is the primary objective of Σ∆ modulation.

High-pass sequences have the property that their Fourier transforms vanish at zero frequency (and possibly

a neighborhood). For a finite high-pass sequence s, the Fourier

P in 2πinξ

transform sb(ξ) :=

sn e

has a factor (1 − e2πiξ )m for some positive integer m, which

m

means that s = ∆ w for some finite sequence w. Here, ∆ denotes the finite difference

operator defined by

(∆w)n := wn − wn−1 .

(7)

The quantization error sequence ν = y − q, however, need not be finitely supported, and

therefore νb need not be defined as a function (since ν is bounded at best). Nevertheless, this

spectral factorization can be used to model more general high-pass sequences. Indeed, a Σ∆

4

modulation scheme of order m utilizes the difference equation

y − q = ∆m u

(8)

to be satisfied for each input y and its quantization q, for an appropriate auxiliary sequence

u (called the state sequence). This explicit factorization of the quantization error is useful

if u is a bounded sequence, as will be explained in more detail in the next subsection.

In practice, (8) is used as part of a quantization algorithm. That is, given any sequence

(yn )n≥0 , its quantization (qn )n≥0 is generated by a recursive algorithm that satisfies (8). This

is achieved via an associated “quantization rule”

qn = Q(un−1 , un−2 , . . . , yn , yn−1 , . . . ),

(9)

together with the “update rule”

un =

m

X

k−1

(−1)

k=1

m

un−k + yn − qn ,

k

(10)

which is a restatement of (8). Typically one employs the initial conditions un = 0 for n < 0.

In the electrical engineering literature, such a recursive procedure for quantization is

called “feedback quantization” due to the role qn plays as a (nonlinear) feedback term via

(9) for the difference equation (8). Note that if y and q are given unrelated sequences and

u is determined from y − q via (8), then u would typically be unbounded for m ≥ 1. Hence

the role of the quantization rule Q is to tie q to y in such a way as to control u.

A Σ∆ modulator may also arise from a more general difference equation of the form

y−q =H ∗v

(11)

where H is a causal sequence (i.e., Hn = 0 for n < 0) in `1 with H0 = 1. If H = ∆m g with

g ∈ `1 , then any (bounded) solution v of (11) gives rise to a (bounded) solution u of (8) via

u = g ∗ v. Thus (11) can be rewritten in the canonical form (8) by a change of variables.

Nevertheless, there are significant advantages to working directly with representations of the

form (11), as explained in Section 2.3 below.

2.2

Basic error estimates

Basic error analysis of Σ∆ modulation only relies on the boundedness of solutions u of (8)

for given y and q, and not on the specifics of the quantization rule Q that was employed.

It is useful, however, to consider arbitrary quantizer maps M : y 7→ q that satisfy (8) for

some m and u, where u may or may not be bounded. Note that if u is a solution to (8) for

a given triple (y, q, m), then ũ := ∆u is a solution for the triple (y, q, m−1). Hence a given

map M can be treated as a Σ∆ modulator of different orders. Formally, we will refer to the

pair (M, m) as a Σ∆ modulator of order m.

As indicated above, in order for a Σ∆ modulator (M, m) to be useful, there must be a

bounded solution u to (8). (Note that, up to an additive constant, there can be at most one

5

such bounded solution once m > 0.) Moreover, one would like this to be the case for all

input sequences y in a given class Y, such as Yµ = {y : ||y||`∞ ≤ µ} for some µ > 0. In this

case, we say that (M, m) is stable for the input class Y. Clearly, if (M, m) is stable for Y,

then (M, m−1) is stable for Y as well. To any quantizer map M and a class of inputs Y, we

assign its maximal order m∗ (M, Y) via

m∗ (M, Y) := sup m : ∀y ∈ Y, ∃u ∈ `∞ such that y − M(y) = ∆m u .

(12)

Note that both 0 and ∞ are admissible values for m∗ (M, Y). With this notation, (M, m) is

stable for the class Y if and only if m ≤ m∗ (M, Y).

Stability is a crucial property. Indeed, it was shown in [5] that a stable m-th order scheme

with bounded solution u results in the error bound

kekL∞ ≤ kuk`∞ kϕ(m) kL1 τ m ,

(13)

where ϕ(m) denotes the mth order derivative of ϕ. The proof of (13) employs repeated

summation by parts, giving rise to the commutation relation

(∆m u) ~τ ϕ = u ~τ (∆m

τ ϕ),

(14)

where ∆τ is the finite difference operator at scale τ defined by (∆τ ϕ)(·) := ϕ(·) − ϕ(· − τ ).

The left hand side of (14) is simply equal to the error signal e by definition. On the other

hand, the right hand side of this relation immediately leads to the error bound (13). As u

is not uniquely determined by the equation y − M(y) = ∆m u, it is convenient to introduce

the notation

U (M, m, y) := inf kuk`∞ : y − M(y) = ∆m u ,

(15)

and for an input class Y

U (M, m, Y) := sup U (M, m, y).

(16)

y∈Y

We are interested in applying (13) to sequences y that arise as samples yn = x (nτ ) of a

bandlimited signal x as above. To compare the error bounds for different values of τ , one

could consider the class Y(x) = {y = (yn )n∈Z : yn = x(nτ ) for some τ } and work with the

constant U (M, m, Y(x) ). However, it is difficult to estimate U (M, m, Y(x) ) in a way that

accurately reflects the detailed nature of the signal x. Instead, we note that for kxkL∞ ≤ µ,

one has Y(x) ⊂ Yµ , where Yµ = {y = (yn )n∈Z : ||y||`∞ ≤ µ} as defined above. This leads to

the bound

kekL∞ ≤ U (M, m, Yµ )kϕ(m) kL1 τ m .

(17)

In (17), the reconstruction kernel ϕ is restricted by the τ -dependent admissibility condition (2). However, if a reconstruction kernel ϕ0 is admissible in the sense of (2) for τ = τ0 =

1/ρ0 , then it is admissible for all τ < τ0 . This allows one to fix ρ0 = (1+)ρcrit for some small

(m)

> 0, and set ϕ = ϕ0 . Now Bernstein’s inequality1 implies that kϕ0 kL1 ≤ (πρ0 )m kϕ0 kL1

1

If fb is supported in [−A, A], then kf 0 kLp ≤ 2πAkf kLp for 1 ≤ p ≤ ∞.

6

since ϕ

b0 is supported in [− ρ20 , ρ20 ]. This estimate allows us to express the error bound naturally as a function of the oversampling ratio λ defined in (3), as follows:

Let and ϕ0 be fixed as above, let M be a given quantizer function, and let m be a positive

integer. Then for any given Ω-bandlimited function x with kxkL∞ ≤ µ, the quantization error

e = eλ , as expressed in (4) in terms of τ = λρ1crit , can be bounded in terms of λ:

keλ kL∞ ≤ U (M, m, Yµ )kϕ0 kL1 π m (1 + )m λ−m .

(18)

As the constants are independent of λ, this yields an O(λ−m ) bound as a function of λ.

Note that any dependency of the error on the original bandwidth parameter Ω has now been

effectively absorbed into the fixed constant kϕ0 kL1 . In fact, this quantity need not even

depend on Ω; a reconstruction kernel ϕ∗0 can be designed once and for all corresponding to

Ω = 1, and then employed to define ϕ0 (t) := Ωϕ∗0 (Ωt), which is admissible and has the same

L1 -norm as ϕ∗0 .

2.3

Exponentially accurate Σ∆ modulation

The O(λ−m ) error decay rate derived in the previous section is based on using a fixed

Σ∆ modulator. It is possible, however, to improve the bounds by choosing the modulator

adaptively as a function of λ. In order to obtain an error decay rate better than polynomial,

one needs an infinite family ((Mm , m))∞

1 of stable Σ∆ modulation schemes from which

the optimal scheme Mmopt (i.e., one that yields the smallest bound in (18)) is selected as a

function of λ. This point of view was first pursued systematically in [5]. Finding a stable Σ∆

modulator is in general a non-trivial matter as m increases, and especially so if the alphabet

A is a small set. The extreme case is one-bit Σ∆ modulation, i.e., when card(A) = 2. The

first infinite family of arbitrary-order, stable one-bit Σ∆ modulators was also constructed in

[5]. In the one-bit case, one may set A = {−1, +1} to normalize the amplitude, and choose

µ ≤ 1 when defining the input class Y = Yµ . The optimal order mopt and the size of the

resulting bound on keλ kL∞ depend on the constants U (Mm , m, Yµ ).

There are a priori lower bounds on U (M, m, Yµ ) for any 0 < µ ≤ 1 and any quantizer

M. Indeed, as shown in [7], one obtains a super-exponential lower bound on U (M, m, Yµ )

by considering the average metric entropy of the space of bandlimited functions, as follows.

Define the quantity

Um (Y) := inf U (M, m, Y)

(19)

M

and let

Xµ := {x : supp x

b ∈ [−1/2, 1/2], kxkL∞ ≤ µ}.

(20)

Then, as shown in [6, 7], one has for any one-bit quantizer

sup{keλ kL∞ : x ∈ Xµ } &µ 2−λ ,

(21)

which yields, when used with (18), the result that Um (Yµ ) &µ (mc)m for some absolute

constant c > 0 [7]. Here by A &s B, we mean that A ≥ CB for some constant C that may

depend on s, but not on any other input variables of A and B.

7

For the family of Σ∆ modulators constructed in [5], which we will denote by (Dm )∞

m=1 , the

2

best upper bounds on U (Dm , m, Yµ ) are of order exp(cm ), resulting in the order-optimized

error bound keλ kL∞ . exp(−c(log λ)2 ), which is substantially larger than the exponentially

small lower bound of (21). The first construction that led to exponentially accurate Σ∆

modulation was given later in [7]. The associated modulators, here denoted by (Gm )∞

m=1 ,

satisfy the bound

U (Gm , m, Yµ ) . (ma)m ,

(22)

for a = a(µ) > 0. Substituting (22) into (18) and using the elementary inequality

min mm α−m . e−α/e

m

(23)

with α = λ/(aπ(1 + )), one obtains the order-optimized error bound

sup{keλ kL∞ : x ∈ Xµ } . 2−rλ ,

(24)

where r = r(µ) = (aπe(1 + ) log 2)−1 . On the other hand, the smallest achievable value of a

is shown to be 6/e, which corresponds to the largest achievable value of r = rmax ((Gm )∞

m=1 ) ≈

(6π log 2)−1 ≈ 0.076. Observe from (21) that it is impossible to achieve an exponent r > 1.

In [7], the Gm were given in the form (11) where the causal sequences H, g ∈ `1 with

H0 = g0 = 1 depend on m, and are related via

H = ∆m g.

(25)

Define h := δ (0) − H, where δ (0) denotes the Kronecker delta sequence supported at 0. Then

(11) can be implemented recursively as

vn = (h ∗ v)n + yn − qn .

(26)

If q is chosen so that the resulting v is bounded, i.e., if this new scheme is stable, then

u := g ∗ v is a bounded solution of (8), and

kuk`∞ ≤ kgk`1 kvk`∞ .

(27)

The significance of the more general form (11) giving rise to (26) is the following: If

||h||1 + ||y||∞ ≤ 2,

(28)

qn := sign ((h ∗ v)n + yn ) .

(29)

then the greedy quantization rule

leads to a solution v with ||v||∞ ≤ 1, provided the initial conditions satisfy this bound.

However, for the canonical form (8), the condition (28) clearly fails for all m > 1.

For kyk∞ ≤ µ ≤ 1, a filter h will satisfy (28) and hence lead to a stable P

scheme when

khk1 ≤ 2 − µ. Note that (25) together with the fact that g ∈ `1 implies that

hi = 1 and

hence khk1 ≥ 1.

8

In view of the preceding considerations, we are led, for each m to the following minimization problem:

Minimize kgk1 subject to δ (0) − h = ∆m g, khk1 ≤ 2 − µ.

(30)

It was shown in [7] that if a sequence of filters h(m) satisfy the feasibility conditions in (30)

for the corresponding m ∈ N, the diameter of the support set of h(m) must grow at least

quadratically in m.

The minimization problem (30) was not solved in [7]; rather the author introduced a class

of feasible filters h = h(m) which were effective in the sense that they lead to an exponential

error bound with rate constant r as above. These filters h(m) are sparse, i.e., they contain

only a few non-zero entries. Indeed, each h(m) has exactly m non-zero entries, which can be

shown to be the minimal support size for which h(m) can satisfy the feasibility conditions. We

shall call such filters minimally supported. Note that if h has finite support and kgk1 < ∞,

then g has finite support. We make the following formal definition:

Definition 2.1. We say that a filter h = δ (0) − ∆m g, for a finitely supported g, has minimal

support if |supp h| = m.

The goal of this paper is to find optimal filters within the class of filters with minimal

support.

2.4

Filters with minimal support

As the filter δ (0) − h arises as the m-th order finite difference of the vector g, its entries have

to satisfy m moment conditions. This implies that the support size of h is at least m, as

advertised above.

For filters h with minimal support

h=

m

X

dj δ (nj ) ,

(31)

j=1

the moment conditions lead to explicit formulae for the entries dj in terms of the support

{nj }m

j=1 of h, where 1 ≤ n1 < n2 < · · · < nm [7]. Here the condition that n1 ≥ 1 follows

from the strict causality of h.

Indeed, one finds

m

Y

0

ni

dj =

.

(32)

n − nj

i=1 i

Here the notation 0 , and analogously 0 , indicates that the singular terms are excluded

from the product, or the sum respectively. By definition, if m = 1, one has d1 = 1.

The condition khk1 ≤ 2 − µ then takes the form

Q

P

m

m Y

X

0

j=1

ni

≤ 2 − µ.

|n

−

n

|

i

j

i=1

9

(33)

Furthermore, explicit computations lead to the identity

Qm

j=1 nj

kgk1 =

.

m!

(34)

In this notation, minimization problem (30) takes the form

Qm

Minimize

j=1

nj

m!

over {n = (n1 , . . . , nm ) ∈ Nm : (33) holds and 1 ≤ n1 < · · · < nm } (35)

For µ = 1, Problem (35) has a solution only for m = 1, and we find h = δ (1) , but for

µ < 1, the problem has a nontrivial solution for all m. That is, we can find nj , j = 1, . . . , m,

that satisfy (33). In particular, for nj (σ) = 1 + σ(j − 1), one easily sees that

lim

σ→∞

m

m Y

X

0

j=1

ni (σ)

= 1.

|ni (σ) − nj (σ)|

i=1

(36)

So for every µ < 1, n(σ) satisfies constraint (33) for all σ large enough.

Furthermore, any minimizer n of problem (35) must satisfy n1 = 1. Indeed, otherwise

nj > 1 for all j and we can define ñ by ñj = nj − 1 ≥ 1 for all j = 1, . . . , m. Calculate

m

m Y

X

0

j=1

and

m

m

m

m

X Y0 ni − 1

X Y0

ñi

ni

=

<

≤2−µ

|ñ

−

ñ

|

|n

−

n

|

|n

−

n

|

i

j

i

j

i

j

i=1

j=1 i=1

j=1 i=1

Qm

j=1

ñj

m!

Qm

<

j=1

m!

nj

.

(37)

(38)

So n cannot be a minimizer.

Hence, we can fix n1 ≡ 1, which reduces problem (35) to minimizing

η(n) :=

m

Y

nj

(39)

j=2

over the set {n = (n2 , . . . , nm ) ∈ Nm−1 |1 < n2 < · · · < nm } under the constraint

m

m Y

X

0

j=1

ni

≤ γ,

|n

−

n

|

i

j

i=1

(40)

where again n1 ≡ 1. The factor m! in the denominator has been absorbed into the definition

of η to simplify the notation. Furthermore, we have set γ = 2 − µ, as the considerations that

follow make sense for arbitrary γ > 1 and not only for γ ≤ 2.

Notational Remark: All quantities in the derivations below depend on m. We will suppress this dependence unless it is relevant in a particular argument.

10

3

The relaxed minimization problem for optimal filters

The variables nj correspond to the positions of the nonzero entries in the vector h, so they

are constrained to positive integer values. We will first consider the relaxed minimization

problem without this constraint; this will eventually enable us to draw conclusions about

the original problem. Thus the variables nj ∈ N will be replaced by relaxed variables

xj ∈ R+ . Furthermore, it turns out to be convenient to replace the index set {1, . . . , m} by

{0, . . . , m − 1}.

The relaxed minimization problem is specified as follows: Minimize

η(x) :=

m−1

Y

xj

(41)

j=1

over the set D = {x ∈ Rm−1 |1 < x1 < x2 < · · · < xm−1 } under the constraint

f (x) :=

m−1

X m−1

Y0

j=0 i=0

xi

≤ γ,

|xi − xj |

(42)

where x0 ≡ 1.

Observe that f is defined and smooth in the open domain D. The following monotonicity

property for f is important in making inferences from the relaxed to the discrete minimization

xj

problem. Let r(x) be given by rj (x) = xj−1

, j = 1, . . . , m − 1, and set F (r) = f (x) for x

such that r = r(x).

Lemma 3.1. The function F (r) is strictly decreasing in each variable rj .

Proof. A simple calculation shows that

F (r) =

m−1

X m−1

Y0

j=0 i=0

m−1

XY

Y

xi

1

=

|xi − xj |

r r · · · rj − 1 i>j 1 −

j=0 i<j i+1 i+2

1

1

rj+1 rj+2 ···ri

,

(43)

from which the monotonicity is immediate.

Definition 3.2. If x, y ∈ D and 1 ≤

y1

x1

≤ ··· ≤

ym−1

,

xm−1

we say that y is subordinate to x.

Clearly, y is subordinate to x if and only if rj (x) ≤ rj (y) for j = 1, . . . , m − 1, so

Lemma 3.1 is equivalent to the following:

Corollary 3.3. If y is subordinate to x and x 6= y, then f (y) < f (x).

If x is a minimizer of the constraint optimization problem (41), (42), then f (x) = γ.

Indeed, for a proof by contradiction, assume that x is a minimizer and f (x) < γ. Then for

t ∈ [0, 1), we can define x̃j (t) = (1 − t)xj + tx0 . Since f ◦ x̃ is continuous in t and

f (x̃(0)) = f (x) < γ,

11

(44)

there exists t > 0 such that f (x̃(t)) < γ. However, the function

η (x̃(t)) =

m−1

Y

((1 − t)xj + tx0 ))

(45)

j=0

is decreasing in t, so

η (x̃(t)) < η (x) ,

(46)

and x cannot be a minimizer. Hence we can replace constraint (42) by the equality

f (x) =

m−1

X m−1

Y0

j=0 i=0

xi

= γ.

|xi − xj |

(47)

As we now show, this equation defines a smooth manifold within D. It is enough to verify

that ∇f 6= 0. Note first that

∂

xk

1

1

= −xj

.

∂xk |xk − xj |

xk − xj |xk − xj |

Now calculate for j 6= k using this fact

m−1

m−1

xi

1

xi

1

∂ Y0

Y0

=

−xj

∂xk i=0 |xi − xj |

|xi − xj |

xk − xj |xk − xj |

i=0

(48)

(49)

i6=k

= −

η(x) (−1)j

bj ,

xk xk − xj

where we set from now on

bj (x) =

m−1

Y0

i=0

1

.

xi − xj

(50)

(51)

j

Note that (−1) bj (x) is always positive.

Furthermore, for j = k,

m−1

m−1

X

0 η(x) (−1)k

xi

∂ Y0

= −

bk (x).

∂xk i=0 |xi − xk |

x

k xk − xl

l=0

(52)

Hence

m−1

X0

∂f

1

1

= − η(x)

(−1)k bk (x) + (−1)j bj (x) .

∂xk

xk

xk − xj

j=0

For k = m − 1, all terms in the sum are positive. Hence

12

(53)

∂f

< 0

∂xm−1

(54)

and so {x|f (x) = γ} is a manifold within D.

We now show that the infimum of η subject to (47) is attained in D. Let η0 =

inf

η(x) and let x(n) ∈ D ∩ {f = γ} be chosen such that lim η(x(n) ) = η0 . As before,

n→∞

x∈D,f (x)=γ

(n)

we set x0

≡ 1.

We first show that x(n) is bounded. Define M := sup η x(n) . Then for each n,

n∈N

(n)

kx(n) k∞ = |xm−1 | ≤ η x(n) ≤ M,

(n)

(55)

(n)

as, for each i, 1 ≤ xi ≤ xm−1 . Since M < ∞, it follows that x(n) is bounded. We conclude

that x(n) must have a convergent subsequence x(nk ) → x(∞) .

Now x(∞) cannot lie on the boundary of D. Indeed, for any 0 ≤ j 6= k ≤ m − 1, we have

(n)

γ = f (x

(n)

)≥

m−1

Y0

(n)

xi

(n)

i=0 |xi

−

(n)

xj |

≥

1

(n)

M m−2 |xj

(n)

− xk |

,

(56)

(n)

which implies that |xj − xk | ≥ γM1m−2 > 0. It follows that x(n) stays away from the

boundary of D, which implies that x(∞) ∈ D. Thus, problem (41), (47) must have at least

one minimizer xmin = x(∞) in D. Note that a priori, there can be more than one minimizer.

As {x|f (x) = γ} is a manifold within D, every minimizer xmin = (x1 , . . . , xm−1 ) of the

constrained optimization problem given by (41) and (47) solves the associated Lagrange

multiplier equations, i.e., there exists ν = ν(xmin ) ∈ R such that

ν∇η(xmin ) + ∇f (xmin ) = 0,

f (xmin ) = γ.

Combined with (53) and the relation

(57), (58) take the explicit form

m−1

X

0

j=0

∂

η(y)

∂yk

=

1

η(y),

yk

(57)

(58)

the Lagrange multiplier equations

1

(−1)k bk (xmin ) + (−1)j bj (xmin ) = ν,

xk − xj

(59)

f (xmin ) = γ

(60)

for k = 1, . . . , m − 1 and x0 ≡ 0 as before.

Note that any critical point xcrit of the minimization problem for η on D solves equations

(59), (60) for some ν. In the following section, we will show that in fact η has a unique critical

point in D.

13

4

Solution of the relaxed minimization problem

Theorem 4.1. The minimum value of η on the manifold {f = γ} in D is given by

η = ηmin =

sinh(2mβ)

(2 sinh β)2m−1 cosh β

(61)

where β = β(m, γ) is the unique positive solution of the equation

cosh ((2m − 1)β)

= γ.

cosh β

(62)

The minimum value ηmin is attained at the unique point xmin = (x1 , . . . , xm−1 ), where

xj = 1 +

1

(1 + zj ) ,

2 sinh2 β

j = 1, . . . , m − 1.

(63)

π , j = 1, . . . , m − 1, are the zeros of the Chebyshev polynomial of the

Here zj = cos m−j

m

second kind of degree m − 1.

Proof. The minimization problem (41), (47) assumes its minimum in D, so there must be

at least one critical point xcrit = (x1 , . . . , xm−1 ) with 1 < x1 < · · · < xm−1 .

To prove uniqueness, we will express the associated Lagrange multiplier problem as a

nonlinear matrix equation and then show using a rank argument, which is established by

Proposition 4.2, that the equation can have only the solution given by (63).

As in (59), xcrit = (x1 , . . . , xm−1 ) must satisfy

m−1

X

0

j=0

1

(−1)k bk (xcrit ) + (−1)j bj (xcrit ) = ν(xcrit ),

xk − xj

for k = 1, . . . , m − 1 and, again, bj (xcrit ) =

m−1

Q0

i=0

(64)

1

.

xi −xj

In matrix notation, the statement reads

B(xcrit )v = ν(xcrit )e,

(65)

where e = (1, 1, . . . , 1)T ∈ Rm−1 , v = (1, −1, 1, −1, . . . )T ∈ Rm and the matrix-valued

function B : Rm−1 → R(m−1)×m is given by

m−1

P0 b1 (y)

b2 (y)

bm−1 (y)

b0 (y)

···

y1 −y0

y1 −yj

y1 −y2

y1 −ym−1

j=0

m−1

P

b0 (y)

0 b2 (y)

b1 (y)

bm−1 (y)

y2 −y0

·

·

·

y2 −y1

y2 −yj

y2 −ym−1

,

B(y) =

(66)

j=0

.

.

.

.

...

.

.

.

.

.

.

.

.

m−1

P0 bm−1 (y)

b0 (y)

b1 (y)

b2 (y)

···

ym−1 −y0

ym−1 −y1

ym−1 −y2

ym−1 −yj

j=0

14

where y = (y1 , . . . , ym−1 ) and as before y0 ≡ 1.

For given y = (y1 , . . . , ym−1 ) let py (s) be a polynomial such that

p0y (s)

=

m−1

Y

(s − yj ).

(67)

j=1

For definiteness, we normalize py (0) = 0. Let Γ be a positively oriented circle in C of radius

R large enough to enclose all yj ’s, including y0 ≡ 1. We now calculate the integral

I

1

py (z)

Ik =

dz, k = 1, . . . , m − 1

(68)

2πi

(z − yk )(z − y0 )p0y (z)

Γ

in two different ways.

Firstly, letting R → ∞, we see that Ik = m1 . Secondly, we compute the integral using the

residues at yj , 0 ≤ j ≤ m − 1. For the residue Rj at yj , j 6= k, we obtain

Rj = (−1)m−1

bj (y)

p(yj ).

yj − yk

(69)

At zk , we have a double root in the denominator of the integrand in (68), so

0 m−1

Q

(yk − yi )

m−1

i=0

p (z) 0

X i6=j,k

py (yk )

y

Rk = m−1

− py (yk )

= m−1

2

Q

Q

m−1

j=0

Q

(z − yi ) (yk − yi )

j6=k

(yk − yi )

i=0

i=0

i6=k

i6=k

(70)

i=0

i6=k

z=yk

m−1

= (−1)

m−1

X

0 bk (y)

py (yk )

y

−

y

j

k

j=0

(71)

Summing the residues, we conclude that for k = 1, . . . , m − 1:

m−1

(−1)m−1 X0

1

=

(bk (y)py (yk ) + bj (y)py (yj ))

m

y

−

y

j

k

j=0

(72)

or equivalently

(−1)m

B(y)py =

e,

(73)

m

where py = (py (y0 ), py (y1 ), . . . , py (ym−1 ).

The normalization py (0) = 0 plays no role in the above calculation, and so (73) also holds

for py + e. Hence, the vector e lies in the kernel of B(y) for any y. In Proposition 4.2, we

will show that dim Ker B(y) = 1, and hence Ker B(y) is spanned by e. In particular, this

shows that ν(xcrit ) 6= 0: Otherwise, v would be collinear to e, which is impossible.

15

Specifying y = xcrit , one obtains

B(xcrit )pxcrit

(−1)m

e,

=

m

(74)

and it follows that

B(xcrit ) [m(−1)m ν(xcrit )pxcrit ] = ν(xcrit )e.

(75)

By (65), v also solves (75), and thus

v − m(−1)m ν(xcrit )pxcrit = c e

(76)

q(s) = m(−1)m ν(xcrit )pxcrit (s) + c.

(77)

for some constant c.

Set

Then q is a polynomial of degree m with critical points at the xj , j = 1, . . . , m − 1 such

that q(xj ) = (−1)j , j = 1, . . . , m − 1. As q cannot have any more critical points and must

be monotonic for x > xm−1 , then ultimately, it will change sign and there is a unique point

xm > xm−1 such that q(xm ) = −q(xm−1 ) = (−1)m . Hence the polynomial u given by

xm − 1

m

u(s) = (−1) q

(s + 1) + 1

(78)

2

has the equi-oscillation property, and we conclude by Proposition A.1 that u(s) = Tm (s).

That implies, that if zm , j = 1, . . . , m − 1, are the extrema of Tm – i.e., the zeros of the

Chebyshev polynomials of the second kind of degree m − 1 – then the extrema of q are given

by

xm − 1

xj =

(1 + zj ) + 1.

(79)

2

Thus all critical points xcrit = (x1 , . . . , xm−1 ) are given by

xj = xj (K) := 1 + K(1 + zj )

(80)

for some constant K. It follows from Lemma 3.1 that f (x(K)) is strictly monotonic in K,

i.e., different values of K correspond to different values of γ. This proves that η has a unique

critical point on {f = γ} in D, and, in particular, that xmin is unique and given by (63).

We now compute K = K(m, γ). The calculation uses several facts about Chebyshev

polynomials and their roots, which we have collected in the Appendix. Let β = β(m, γ) > 0

be defined through the relation

1

K=

.

(81)

2 sinh2 β

16

Noting that zi = −zm−i , we obtain from Lemma A.2

f (x) =

m−1

Y

i=1

=

m−1 m−1

1 + K(1 + zi ) X Y 1 + K(1 + zi )

+

K |zi − z0 |

K |zi − zj |

j=1 i=0

i6=j

m−1 m−1

Y

2

m

"

(82)

i=1

m−1

m−1

1 + K(1 + zi ) X 2m−1 (1 − zj ) Y 1 + K(1 + zi )

+

K

m

K

j=1

i=0

m−1 m−1

Y

i6=j

# "

m−1

X

1

1 + zm−j

=

+ 1 − zm−i

1+

m i=1 K

1 + K(1 − zm−j )

j=1

#

"

#

"

m−1 m−1

1 X

1 + zj

2m−1 Y 1

1+

.

=

+ 1 − zi

m i=1 K

K j=1 1 + K1 − zj

Now 1 +

1

K

2

(83)

#

(84)

(85)

= 1 + 2 sinh2 (β) = cosh(2β) and

m−1 1

2m−1 Y 1

1 0

sinh(2mβ)

1

+ 1 − zi = 2 Tm 1 +

= 2 Tm0 (cosh(2β)) =

.

m i=1 K

m

K

m

m sinh(2β)

(86)

Furthermore, differentiating (162), we obtain for z = cosh(τ )

m−1

X

j=1

Tm00 (z)

1

m coth(mτ ) − coth(τ )

=

= 0

.

z − zj

Tm (z)

sinh τ

(87)

Hence

m−1

1 + zj

1 X

1+

K j=1 1 + K1 − zj

m−1

X

1 + cosh(2β)

= 1 + (cosh(2β) − 1)

−1 +

cosh(2β) − zj

j=1

= 1 − (m − 1)(cosh(2β) − 1) + (cosh2 (2β) − 1)

m−1

X

j=1

(88)

(89)

1

cosh(2β) − zj

m coth(2mβ) − coth(2β)

sinh 2β

= m (1 − cosh(2β) + sinh(2β)coth(2mβ)) .

= 1 − (m − 1)(cosh(2β) − 1) + sinh2 (2β)

17

(90)

(91)

(92)

Combining (92) and (86) yields

sinh(2mβ) − cosh(2β) sinh(2mβ) + sinh(2β) cosh(2mβ)

sinh(2β)

sinh(2mβ) − sinh((2m − 2)β)

=

2 sinh(β) cosh(β)

2 cosh((2m − 1)β) sinh(β)

=

2 sinh(β) cosh(β)

cosh((2m − 1)β)

,

=

cosh(β)

γ = f (x) =

(93)

(94)

(95)

(96)

which proves (62). As cosh((2m−1)β)

is strictly monotonic in β, β > 0 is uniquely determined

cosh(β)

from γ. Of course, this fact also follows from the uniqueness of K proved above.

Now finally, using (86), we write

ηmin =

m−1

Y

i=0

(1 + K(1 + zi )) = K

m−1

m−1

Y

i=0

1

+ 1 + zi

K

=

sinh(2mβ)

(2 sinh(β))2m−1 cosh(β)

(97)

It remains to show that B(xcrit ) has rank m − 1. We will show, more generally, that

B(y) has rank m − 1 for an arbitrary y = (y1 , . . . , ym−1 ), as long as yi 6= yj for i 6= j in

{0, 1, . . . , m − 1}. As before we set y0 ≡ 1. The proof of Proposition 4.2 below goes through

without this restriction on y0 , but this more general fact is of no consequence for the results

in this paper.

Factor out bj (y) from the j-th column, j = 0, . . . m − 1 and extend the resulting matrix

to an m × m square matrix B̃(y) by adding a row that is the negative of the sum of all the

other rows, as follows.

m−1

P0 1

1

1

1

···

y0 −y1

y0 −y2

y0 −ym−1

l=0 y0 −yl

m−1

P0 1

1

1

1

y1 −y0

···

y

−y

y

−y

y

−y

1

1

2

1

m−1

l

l=0

m−1

P

B̃(y) =

(98)

0

1

1

1

1

·

·

·

y2 −y1

y2 −yl

y2 −ym−1

y2 −y0

l=0

..

..

..

..

...

.

.

.

.

m−1

P0

1

1

1

1

···

ym−1 −y0

ym−1 −y1

ym−1 −y2

ym−1 −yl

l=0

Clearly, rank B̃(y) = rank B(y). We prove that B̃(y) has rank m − 1 by explicitly showing

18

that B̃(y) is similar to the Jordan block

J =

0

0

0

..

.

1

0

0

..

.

0

1

0

..

.

···

···

···

...

.

(99)

Proposition 4.2. For m ≥ 2, B̃(y) has the Jordan decomposition

B̃(y) = P (y)JP (y)−1 ,

(100)

where

b0 (y)

b0 (y)(y0 − ym−1 )

b1 (y)

b1 (y)(y1 − ym−1 )

b2 (y)(y2 − ym−1 )

b (y)

P (y) = 2 .

..

..

.

bm−2 (y) bm−2 (y)(ym−2 − ym−1 )

bm−1 (y)

0

m−1

m−1

.

···

m−1 )

b0 (y) (y0 −y

(m−1)!

···

m−1 )

b1 (y) (y1 −y

(m−1)!

m−1

m−1 )

···

b2 (y) (y2 −y

(m−1)!

..

..

.

.

(ym−2 −ym−1 )m−1

· · · bm−2 (y)

(m−1)!

···

0

(101)

Here the bj (y)’s are defined as in (51).

Proof. The matrix P (y) is of the form D1 V D2 , where D1 , D2 are invertible diagonal matrices

and V is a Vandermonde matrix. Hence, P (y) is invertible and the proof of (100) is equivalent

to showing that B̃(y)P (y) = P (y)J, that is, for 0 ≤ j, n ≤ m − 1,

m−1

X

k=0

k6=j

m−1

bk (y) (yk − ym−1 )n X bj (y) (yj − ym−1 )n

(yj − ym−1 )n−1

+

= bj (y)

,

yj − yk

n!

y

n!

(n

−

1)!

j − yl

l=0

(102)

l6=j

1

where (−1)!

= 0.

The proof is based on the counterclockwise integral defined for all t ∈ C

I

1

(z − t)n

Jm,n (t) =

dz, 0 ≤ n ≤ m − 1

m−1

Q

2πi

(z − yi )

Γ

(103)

i=0

over a circle Γ of radius R large enough that it encloses all yj ’s. Letting R → ∞, we see

(0)

that Jm,n = δn−(m−1) independent of t. On the other hand, note that the residue at yk is

(−1)m−1 bk (y)(yk − t)n . Hence

(0)

δn−(m−1)

m−1

= Jm,n = (−1)

m−1

X

k=0

19

bk (y)(yk − t)n .

(104)

Now

b (y)

k

yk −yj

for j 6= k

∂bk

= m−1

P bj (y)

∂yj

l=0 yl −yj

(105)

for j = k

l6=j

Hence, differentiating (104) with respect to yj , leads to the identity

m−1

X

k=0

k6=j

m−1

X bj (y)

bk (y)

(yk − t)n +

(yl − t)n + bl (y)n(yj − t)n−1 = 0.

yk − yj

yl − yj

l=0

(106)

l6=j

Letting t → ym−1 , one obtains (102).

5

Asymptotics for the relaxed and the discrete minimization problem

In the following proposition, we evaluate the dependence on m of the solution x = x(m) of

(m)

the relaxed minimization problem. For any fixed j, we show that xj converges as m → ∞,

and we compute the limit.

Proposition 5.1.

(a) For K = K(m, γ) as in (80)

2(m − 1)2

2m2

−

1

≤

K

≤

.

(cosh−1 γ)2

(cosh−1 γ)2

(b) Set σ :=

π2

2.

(cosh−1 γ )

(107)

Then for all m and all 1 ≤ j ≤ m − 1,

(m)

xj

≤ 1 + σj 2 .

(108)

(c) For any fixed j ≥ 1,

(m)

lim xj

m→∞

= 1 + σj 2 .

(109)

(d)

lim

m→∞

1/m

η(x(m) )

1

σ

=

= 2.

−1

2

2

m

π

(cosh γ)

(110)

Proof. We first provide bounds on β defined in (62). For a lower bound, write

γ=

cosh(2m − 1)β)

= cosh(2mβ) − sinh(2mβ) tanh β ≤ cosh(2mβ).

cosh β

20

(111)

For an upper bound, we have

γ=

cosh(2m − 1)β)

= cosh((2m − 2)β) + sinh((2m − 2)β) tanh β ≥ cosh((2m − 2)β). (112)

cosh β

We obtain the bounds

1

1

cosh−1 γ ≤ β ≤

cosh−1 γ.

2m

2m − 2

This implies the upper bound for K

K=

(113)

2m2

1

1

≤

.

≤

2β 2

2 sinh2 β

(cosh−1 γ)2

(114)

For the lower bound on K, we have by an elementary estimate

K=

1

1

2(m − 1)2

≥

−

1

≥

− 1.

2β 2

2 sinh2 β

(cosh−1 γ)2

This proves (a).

Also

(m)

xj

= 1 + 2K sin

2

jπ

2m

4m2

≤1+

(cosh−1 γ)2

jπ

2m

2

= 1 + σj 2 ,

(115)

(116)

which proves (b).

From (107)

lim

m→∞

and so

lim

m→∞

(m)

xj

K(m, γ)

2

=

,

2

m

(cosh−1 γ)2

2

=1+

lim 2m2 sin2

(cosh−1 γ)2 m→∞

jπ

2m

(117)

= 1 + σj 2 ,

(118)

which proves (c).

Finally, from (113) we see that

lim 2mβ(m, γ) = cosh−1 γ,

m→∞

(119)

and hence from (61)

1/m

1

sinh(2mβ)

lim

m→∞ m2

(2 sinh β)2m−1 cosh β

1/m

1

2 sin β sinh (2mβ)

= lim

m→∞

cosh β

4m2 sinh2 β

K(m, γ)

1

=

= lim

,

2

m→∞

2m

(cosh−1 γ)2

(η(x))1/m

lim

=

m→∞

m2

which proves (d). This completes the proof of the Proposition.

21

(120)

(121)

(122)

Motivated by the above proposition, let us define

wj := 1 + σj 2 ,

j ≥ 1...,

(123)

and an associated vector sequence w(m) := (w1 , . . . , wm−1 ), m ≥ 2. We know that for each

(m)

j ≥ 1, xj → wj as m → ∞. The following lemma provides a nonasymptotic relation

between x(m) and w(m) which will be utilized in proving Proposition 5.3 and Theorem 5.5.

Lemma 5.2. w(m) is subordinate to x(m) .

(m)

Proof. By Definition 3.2, we need to show that for 0 ≤ j ≤ m − 2 and w0

(m)

wj+1

(m)

(m)

≡ x0

≡ 1,

(m)

≥

xj+1

wj

(m)

,

(124)

xj

that is,

2

(1 + σ(j + 1) ) 1 + 2K sin

2

jπ

2m

2

≥ (1 + σj ) 1 + 2K sin

2

(j + 1)π

2m

,

(125)

or equivalently

(j + 1)π

jπ

2

2

σ(2j + 1) − 2K sin

− sin

2m

2m

(j + 1)π

jπ

2

2

2

2

− j sin

≥ 0.

+ 2σK (j + 1) sin

2m

2m

(126)

We show that both these summands are nonnegative.

2

By Proposition 5.1(a), K ≤ 2mπ2 σ , and so for the first summand, it is sufficient to show

that

4m2

(j + 1)π

jπ

2

2

(2j + 1) − 2 sin

− sin

≥ 0.

(127)

π

2m

2m

Indeed, by standard trigonometric identities

π (j + 1)π

jπ

4m2

4m2

(2j + 1)π

2

2

sin

−

sin

=

sin

sin

≤ 2j + 1, (128)

π2

2m

2m

π2

2m

2m

which proves (127).

On the other hand, the positivity of the second summand follows from the fact that the

function sin(y)

is decreasing on [0, π2 ]. This completes the proof of (126) and hence the proof

y

of the Lemma.

The above results for the relaxed minimization problem allow us to draw conclusions for

(m)

our original problem with the constraint that the filter locations nj are all integers.

(m)

(m)

(m)

(m)

With n1 ≡ x0 ≡ 1, we seek an integer sequence n(m) = (n2 , . . . , nm ) such that

n(m) is subordinate in the sense of Definition 3.2 to x(m) := (1 + K(1 + zj ))m−1

j=1 , the solution

22

of the relaxed minimization problem (41), (42). By Corollary 3.3, f (n(m) ) ≤ f (x(m) ) ≤ γ,

(m)

so n(m) satisfies (40). Note that for the nj ’s we use the original index set j = 1, . . . , m of

(m)

Section 2.4, while for the xj ’s we retain the labels j = 0, . . . , m − 1. In this section, we

(m)

(m)

work with the specific integer sequence n(m) = (n2 , . . . , nm ) defined recursively by

'

&

(m)

(m)

(m) xj

, j = 1, . . . , m − 1,

(129)

nj+1 = nj

(m)

xj−1

(m)

(m)

where n1 ≡ x0 ≡ 1 as above and dse denotes the smallest integer greater or equal to s.

This sequence is minimal amongst all integer sequences subordinate to x(m) in the sense that

(m)

km

2

if k = (k2 , . . . , km ) is any integer sequence such that 1 ≤ k(m)

≤ · · · ≤ (m)

, then kj ≥ nj

x1

(m)

x1 ,

for all j = 2, . . . , m. Indeed one has k2 ≥

(m)

assuming by induction kj ≥ nj , one obtains

&

(m)

kj+1 = dkj+1 e ≥ xj

kj

which implies

'

&

(m)

(m) nj

≥ xj

(m)

xj−1

xm−1

(m)

k2 ≥ dx1 e

(m)

= n2 , and

'

(m)

(m)

xj−1

= nj+1 .

(130)

We will next derive an asymptotic formula, analogous to Proposition 5.1(c), for the limit

(m)

of nj as m → ∞.

Proposition 5.3. Let σ > 54 . Then, for all j ≥ 1,

(m)

lim nj

m→∞

= 1 + dσe(j − 1)2 .

(131)

Proof. We will prove the statement by induction on j. The case j = 1 holds by definition.

Assume that (131) holds for some j ≥ 1.

(m)

We first consider the case when σ is an integer. Since nj is integer valued and dσe = σ,

(m)

(131) is equivalent to saying that nj = 1 + σ(j − 1)2 for all sufficiently large m. Now by

Lemma 5.2 (see (124)) we have

(m)

2

2

1 + σj ≥ (1 + σ(j − 1) )

xj

(m)

xj−1

(m)

=

(m)

(m) xj

nj

(m)

xj−1

(m)

(132)

(m)

(m)

which, together with (129), implies 1 + σj 2 ≥ nj+1 . Since nj+1 ≥ xj and xj → 1 + σj 2

(m)

(see Proposition 5.1(c)), we obtain nj+1 → 1 + σj 2 , which completes the induction step.

Now assume σ > 45 is noninteger. By Proposition 5.1(c) and the induction hypothesis,

we have

(m)

1 + σj 2

(m) xj

2

)

(133)

=

(1

+

dσe(j

−

1)

lim nj

(m)

m→∞

1 + σ(j − 1)2

xj−1

23

Since the function d·e is continuous at every noninteger, our problem reduces to showing

that

1 + σj 2

dσej 2 < (1 + dσe(j − 1)2 )

< 1 + dσej 2

(134)

1 + σ(j − 1)2

for all j ≥ 1 and σ > 45 , for then we have

'

&

'

&

(m)

(m)

x

x

(m) j

(m)

(m) j

= lim nj

= lim nj+1 ,

1 + dσej 2 = lim nj

(m)

(m)

m→∞

m→∞

m→∞

xj−1

xj−1

(135)

which would complete the induction step.

To show (134), first note that

(1 + dσe(j − 1)2 )

1 + σj 2

(dσe − σ)(2j − 1)

− dσej 2 = 1 −

,

2

1 + σ(j − 1)

1 + σ(j − 1)2

(136)

which immediately yields the second inequality for all j ≥ 1 and noninteger σ > 0. The first

inequality in (134) is also immediate for j = 1, as the right hand side of (136) reduces to

1 − (dσe − σ), which is strictly positive. Now note that

1 + σ(t − 1)2 ≥ 2σt + 1 − 3σ, for all t ∈ R,

(137)

since the right hand side is the equation of the line tangent to the parabola 1 + σ(t − 1)2 at

t = 2. Now, for σ > 2, we have

(dσe − σ)(2j − 1)

2j − 1

2j − 1

<

≤

≤ 1, for all j ≥ 2.

1 + σ(j − 1)2

1 + 2(j − 1)2

4j − 5

On the other hand, for

5

4

(138)

< σ < 2, we have

3

3

(2j − 1)

j − 34

(dσe − σ)(2j − 1)

4

2

≤ 5

≤ 1, for all j ≥ 2.

<

1 + σ(j − 1)2

j − 11

1 + 54 (j − 1)2

2

4

(139)

Hence the first inequality in (134) holds for all j ≥ 1 and noninteger σ > 45 .

(m)

(m)

(m)

Remark: For j = 1 and j = 2, we have n1 = 1 and n2 = dx1 e so that the formula

(131) is actually valid for all σ > 0 because of Proposition 5.1(b) and (c). On the other

(m)

hand, for j = 3 and 1 < σ < 45 , we have n3 → 8 instead of 9. To see this, note that

(m)

n2 → 1 + dσe = 3, so that

(m)

(m) x2

(m)

x1

lim n2

m→∞

=

3(1 + 4σ)

∈ (7.5, 8).

1+σ

(m)

(140)

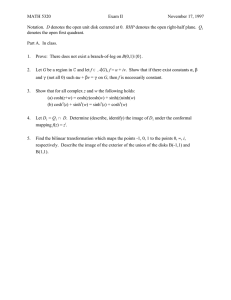

Similarly, for 1 < σ < 45 , n4 → 17 instead of 19. As shown in Figure 1, the pattern

becomes more complicated for higher values of j and the interval has to be subdivided. A

similar pattern is present also for 0 < σ < 1. We shall not explore this here; we again refer

to Figure 1.

24

8

6

4

j=2

(m)

lim xj−1

− − − m→∞

____ lim n(m)

j

2

m→∞

0

0

1

2

3

σ

4

5

6

7

30

20

j=3

10

(m)

lim xj−1

− − − m→∞

____ lim n(m)

j

m→∞

0

0

1

2

3

σ

4

5

6

7

60

40

j=4

20

(m)

lim xj−1

− − − m→∞

____ lim n(m)

j

m→∞

0

0

1

2

3

σ

4

5

6

7

400

300

200

j=9

100

lim xj−1

− − − m→∞

____ lim n(m)

j

(m)

m→∞

0

0

1

2

3

σ

4

5

(m)

(m)

Figure 1: Numerical comparison of lim xj−1 with lim nj

m→∞

m→∞

6

7

as a function of σ for j =

2, 3, 4, 9. For j = 2, these limits equal 1 + σ and 1 + dσe, respectively. For j ≥ 3, notice the

(m)

difference between the cases σ > 5/4 and σ < 5/4. In the first case, one has lim nj =

m→∞

1 + dσe(j−1)2 , whereas in the latter case this formula is no longer valid.

25

(m)

(m)

Definition 5.4. A sequence of integer vectors k(m) = (k2 , . . . , km ) of increasing length

m − 1, m = 2, 3 . . . , with f (k (m) ) ≤ γ is said to be asymptotically optimal if

lim

m→∞

!1/m

η k(m)

= 1,

η (x(m) )

(141)

where x(m) is the solution of (41), (42) as above.

The relevance of this definition lies in the fact that the quantity lim m12 (η(k(m) ))1/m

m→∞

controls the rate of exponential

decay for the error bound associated with the sequence of

filters defined by k(m) . The precise relation will be seen in Theorem 5.6 below. We will

use the following lemma to assess the asymptotic optimality of our minimal subordinate

construction n(m) defined in (129).

Theorem 5.5. If σ, defined in Proposition 5.1, is an integer, then the sequence n(m) , m =

2, 3, . . . , defined by (129), is both asymptotically optimal and subordinate to x(m) . If σ is not

an integer, no sequence of integer vectors can have both of these properties.

(m)

Proof. If σ is not an integer, then lim x1

m→∞

(m)

= 1+σ is not an integer, and so for any sequence

(m)

k(m) = (k2 , . . . , km ), m = 2, 3, . . . , of integer vectors subordinate to x(m) ,

lim sup

m→∞

η(k)

η(x)

(m)

(m)

1/m

≥ lim sup

m→∞

k2

(m)

x1

≥ lim

m→∞

dx1 e

(m)

x1

=

1 + dσe

> 1.

1+σ

(142)

Hence k(m) cannot be asymptotically optimal.

Now consider the case that σ is an integer. Then by Lemma 5.2, w(m) = (w1 , . . . , wm−1 )

(m)

(m)

is an integer sequence subordinate to x(m) . As in Proposition 5.3, one has xj ≤ nj+1 ≤ wj ,

as n(m) is the minimal integer sequence subordinate to x(m) .

Next, from Proposition 5.1(a) we see that

2K

4s

C1

≥ 2 1−

(143)

m2

π

m

2

for some constant C1 < ∞. Together with the elementary fact that sinx x ≥ 1 − C2 x2 for a

sufficiently large constant C2 , this implies that for 1 ≤ j ≤ m2/3 and some constant C3 < ∞

π2 j 2

jπ

C3

C1

(m)

2

2

2

≥ 1+σ 1 −

j 1 − C2

≥ (1+σj ) 1 − 2/3 .

xj = 1+2K sin

2m

m

4 m2

m

(144)

2/3

Then for 1 ≤ j ≤ m and some C4 < ∞

nj+1 (m)

wj

1

C4

≤ (m) ≤

≤ 1 + 2/3 .

C3

(m)

xj

xj

m

1 − m2/3

26

(145)

Now

&

'

(m)

x

1

nj+1 (m)

1

(m) j

= (m) nj

≤ (m)

(m)

(m)

xj

xj

xj

xj−1

!

(m)

(m) xj

nj

(m)

xj−1

+1

(m)

=

nj

(m)

xj−1

+

1

xj (m)

.

(146)

Combining (143) together with the elementary lower bound sinx x ≥ π2 for 0 ≤ x ≤ π2 , we

(m)

obtain xj ≥ C5 j 2 , j ≥ 1, for some constant C5 > 0. By repeated application of (146), one

then obtains for m2/3 < j ≤ m − 1 and some constant C6 < ∞

(m)

(m)

nj+1

(m)

xj

≤

nj

(m)

xj−1

(m)

j

X

nbm2/3 c + 1

1

1

C4

C6

+

+

≤ ··· ≤

≤ 1 + 2/3 + 2/3 .

(m)

2

2

C5 j

C5 l

m

m

xbm2/3 c

l=bm2/3 c+1

(147)

Thus there exists some constant C7 < ∞, such that for all 1 ≤ j ≤ m − 1,

We conclude that

C7

nj+1 (m)

≤ 1 + 2/3 .

(m)

xj

m

(148)

m

m−1

Y n(m)

η(n(m) )

C7

j+1

1≤

=

≤ 1 + 2/3

,

η(x(m) )

x (m)

m

j=1 j

(149)

which implies that

lim

m→∞

η(n(m) )

η(x(m) )

1/m

= 1,

(150)

and hence n(m) is asymptotically optimal.

Remark: For noninteger values of σ, we do not know if there are asymptotically optimal

integer vectors k(m) (which are necessarily

not subordinate to x(m) ) that are admissible, i.e.,

√

(m)

that satisfy f (k ) ≤ γ = cosh(π/ σ). At the same time, it is natural to ask how close our

construction n(m) is to being asymptotically optimal, i.e., the value of the limit, as m → ∞,

of (η(n(m) )/η(x(m) ))1/m . We shall not carry out an analysis here that is similar to Proposition 5.3 to find this limit, but instead provide our numerical findings in Figure 2.

Optimal exponential error decay for minimally supported filters

We are now ready to prove the promised improved exponential error decay estimate for the

Σ∆ modulators defined by n(m) .

Theorem 5.6. For all 1 < γ < 2 such that σ =

π2

2

(cosh−1 γ )

is an integer, all one-bit Σ∆

(m)

(m)

modulators corresponding to filters h(m) minimally supported at positions 1, n2 , . . . , nm are

stable for all input sequences y with kyk∞ ≤ µ = 2−γ. Furthermore, the family consisting of

27

0.7

0.6

0.5

0.4

0.3

¢1/m

1 ¡

η(x(m) )

m2

¢1/m

1 ¡

____ lim

η(n(m) )

m→∞ m2

0.2

− − − lim

m→∞

0.1

0

0

1

2

3

σ

4

5

6

7

2.5

2

1.5

1

____

lim

m→∞

0.5

0

1

2

3

σ

4

µ

5

η(n(m) )

η(x(m) )

6

¶1/m

7

1/m

1/m

with lim m12 η(x(m) )

= σ/π 2 ,

Figure 2: Numerical comparison of lim m12 η(n(m) )

m→∞

m→∞

as a function of σ. Top: plotted individually, bottom: their ratio.

∞

the one-bit Σ∆ modulators corresponding to the filters h(m) m=2 for all orders m gives rise

to exponential error decay: For any rate constant r < r0 := e2 σπln 2 , there exists a constant

C = C(r) such that

keλ k∞ ≤ C2−rλ .

(151)

Proof. Stability follows from the fact that n(m) is subordinate to x(m) , which satisfies the

stability condition f (x(m) ) ≤ γ.

Choose the reconstruction

ϕ0 such that the corresponding as introduced in Secp rkernel

0

. Now let g (m) be such that ∆m g (m) = δ (0) − h(m) , as in

tion 2.2 satisfies 1 + <

r

Section 2.3. Then from (18) and (27), we have the error bound

keλ k∞ ≤ kg (m) k1 kvk∞ kϕ0 k1 π m (1 + )m λ−m ,

(152)

where v solves (26).

Recall that our construction yields kvk∞ ≤ 1. Furthermore, by Theorem 5.5 and Proposition 5.1 (d), we have that

lim

m→∞

1/m

η(n(m) )

σ

1

=

= 2

−1

2

2

m

π

(cosh γ)

28

(153)

and hence by (34)

η(n(m) ) eσ m m

=

m (1 + o(1))m .

(154)

m!

π2

p r0Now consider m ≥ M (r) large enough to ensure that the (1 + o(1))-factor is less than

. Then (152) implies

r

kg (m) k1 =

keλ k∞ ≤ kϕ0 k1

eσ m

π

m

m

r m

0

r

λ−m .

(155)

As explained in Section 2.3, we choose, for each λ, the filter h(m) that leads to the minimal

error bound. Then by a slight variation of (23), we obtain

m

eσ m

π r

m r0

−m

m

λ .r exp − 2

λ = 2−rλ ,

(156)

keλ k∞ ≤ kϕ0 k1 min

m≥M (r)

π

r

e σ r0

which proves the theorem.

Remark: The smallest integer σ such that the stability constraint khk1 ≤ γ is satisfied

for some γ < 2 is σ = 6. In this case, Theorem 5.6 yields exponential error decay for any rate

constant r < r0 ≈ 0.102. This is the fastest error decay currently known to be achievable for

one-bit Σ∆ modulation. The previously best known bound for the achievable rate constant

was r0 ≈ 0.088 [11].

6

Multi-level quantization alphabets and the case of

small σ

In this paper we have primarily considered one-bit Σ∆ modulators, though our theory and

analysis is equally applicable to quantization alphabets that consist of more than two levels.

Let us consider a general alphabet A = AL with L levels such that consecutive levels are

separated by 2 units as before. For instance, A4 = {−3, −1, 1, 3} and A5 = {−4, −2, 0, 2, 4}.

The corresponding generalized stability condition for the greedy quantization rule is then

khk1 + kyk∞ ≤ L,

(157)

which still guarantees the bound kvk∞ ≤ 1 (see, e.g., [13, p. 104]). In terms of our filter

design and optimization problem, the parameter γ that bounds khk1 can be set as large as

L. The potential benefit of increased number of levels is that large values of γ correspond to

small values of σ, which in turn yields faster exponential error decay rates in the oversampling

ratio λ as given in (156). There is, however, also an increase in the number of bits spent per

sample which needs to be accounted for if a rate-distortion type performance analysis is to

be carried out.

As seen above (Theorem 5.5 and Figure 2), our analytical results are available for integer

values of σ only. We will evaluate the performance of multi-level quantization alphabets

29

L

(average) number of bits/sample B = log2 L

minimum integer value of σ

maximum input signal kyk∞

achievable error decay rate r0 = π(e2 σ ln 2)−1

coding efficiency r0 /B

2

1

6

0.058

0.102

0.102

3

4

5

12

1.585

2

2.322 3.585

4

3

2

1

0.490 0.851 0.335 0.408

0.153 0.204 0.306 0.613

0.097 0.102 0.132 0.171

Table 1: Comparison of exponential error decay rates and their coding efficiency for multilevel quantization alphabets.

based on these values first. For each

√ positive integer σ, we can find the minimum integer

value of L ≥ 2 such that cosh(π/ σ) < L. Alternatively, if L ≥ 2 is specified first, we find

the minimum integer value of σ that satisfies this condition. Given

such a (σ, L) pair, the

√

corresponding bound kyk∞ on the input signal is L − cosh(π/ σ). We also compute the

achievable exponential error decay rate r0 given by e2 σπln 2 , the average number of quantizer

bits per sample B := log2 L, and a coding efficiency figure given by r0 /B. The results are

tabulated in Table 1. It turns out that the smallest value of L which results in σ = 1 is

L = 12. This case also yields the highest coding efficiency figure given by 0.171. It also

yields a significantly more favorable range for the input signal compared to the one-bit case

(L = 2). As before, it is easy to check via Kolmogorov entropy bounds that the coding

efficiency is always bounded by 1. The cases L = 4 and L = 5 are also noteworthy for they

provide better overall performance, yet still with a small quantization alphabet.

It is natural to ask what can be said for the case of noninteger values of σ, especially as

σ → 0. For our minimal subordinate constructions, we employ the numerically computed

1/m

given in Figure 2 as a function of the continuous parameter

values of limm→∞ m12 η(n(m) )

σ. As in Theorem 5.6, the achievable error decay rate r0 is given by

r0 =

m2

1

lim

.

πe2 ln 2 m→∞ η(n(m) ) 1/m

(158)

On the other hand the smallest number of levels L of the quantizer which guarantees stability

for the greedy rule is given by

√

(159)

L := dcosh(π/ σ)e.

Finally, the coding efficiency as a function of σ is

r0

1

m2

√

= 2

lim

.

B

πe lndcosh(π/ σ)e m→∞ η(n(m) ) 1/m

(160)

We plot our numerical findings in Figure 3. The specific values reported in Table 1 are visible

at the integer values σ = 1, 2, 3, 4, 6. It is interesting that the coding efficiency figure 0.171

reported for σ = 1 seems to be the global maximum value achievable by our construction

over all values of σ. This is possibly the best performance achievable by minimally supported

30

0.25

coding efficiency

0.2

0.15

0.1

0.05

0

0

1

2

3

σ

4

5

6

7

Figure 3: Numerical computation of the coding efficiency formula given by (160) for the

minimal subordinate filters n(m) .

filters that are constrained by subordinacy. We do not know if this figure can be exceeded

without the subordinacy constraint. The case of arbitrary optimal filters (i.e., not necessarily

minimally supported) is also open, along with the case of quantization rules that are more

general than the greedy rule.

Acknowledgements

The work in this paper was supported in part by various grants and fellowships: National

Science Foundation Grants DMJ-0500923 (Deift), CCF-0515187 (Güntürk), Alfred P. Sloan

Research Fellowship (Güntürk), the Morawetz Fellowship at the Courant Institute (Krahmer), the Charles M. Newman Fellowship at the Courant Institute (Krahmer) and a NYU

GSAS Dean’s Student Travel Grant (Krahmer).

31

A

Some useful properties of Chebyshev polynomials

Recall that the Chebyshev Polynomials of the first and second kind in x = cos θ are given

by

sin(m + 1)θ

Tm (x) = cos mθ

and

Um (x) =

,

(161)

sin θ

respectively. The Chebyshev polynomials have, in particular, the following properties (see

[14], [3]):

• Tm0 (x) = mUm−1 (x),

• The zeros of Um−1 are zj = cos

m−j

π

m

, j = 1, . . . , m − 1,

• For m > 0, the leading coefficient of Tm is 2m−1 ,

• The Chebyshev polynomials satisfy the following identities

Tm (cosh τ ) = cosh(mτ ),

Um (cosh τ ) =

sinh(mτ )

,

sinh τ

(162)

• The Chebyshev polynomials satisfy the differential equation

(1 − x)2 Tm00 (x) − xTm0 (x) + m2 Tm (x) = 0.

(163)

We say that a polynomial p of degree m has the equi-oscillation property on [−1, 1]

(compare [3]) if it has m − 1 real critical points ζ1 , . . . , ζm−1 which satisfy

ζ0 := −1 < ζ1 < · · · < ζm−1 < ζm := 1

(164)

such that the associated values are alternating

p(ζj ) = (−1)m−j

(165)

for j = 0, . . . , m.

Note that if a polynomial has the equi-oscillation property then its leading coefficient is

positive. The Chebyshev polynomials of the first kind Tm have the equi-oscillation property

for all m. Indeed, the first two properties given above imply that the zj ’s are the critical

points of Tm , and a simple calculation shows that Tm (zj ) = (−1)m−j . The equi-oscillation

property in fact characterizes the Chebyshev polynomials of the first kind:

Proposition A.1. If p(s) is a polynomial of degree m in s with the equi-oscillation property

on [−1, 1], then p = Tm .

32

Proof. The proof follows ideas used in [3] to establish that, up to a constant, the Tm are the

unique monic polynomials with minimal L∞ norm.

Let p(s) = ap sm + . . . and q(s) = aq sm + . . . be two polynomials with the equi-oscillation

property. W.l.o.g. assume aq ≥ ap > 0. Let ζ1 < · · · < ζm−1 be the critical points of p in

[−1, 1] and set ζ0 = −1, ζm = 1.

Consider the polynomial r(s) = p(s) − aapq q(s) of degree (m − 1). Then r(ζm−j ) ≥ 0 for

all even j, and r(ζm−j ) ≤ 0 for all odd j. The proof that r ≡ 0 follows from the following

more general statement:

Claim: If t0 < t1 < · · · < tm ∈ R and a polynomial ρ of degree m − 1 satisfies

(−1)j ρ(ζj ) ≥ 0 for all j, then ρ ≡ 0.

We conclude that r = p − aapq q ≡ 0 by applying the claim to ρ = (−1)m r. Since p(1) =

q(1) = 1 implies that ap = aq , we see that p ≡ q.

Proof of Claim: The proof proceeds by induction in m. In the case m = 1, ρ(t0 ) ≥ 0

and ρ(t1 ) ≤ 0 implies that r ≡ 0. For the induction step, assume that the claim holds true

for m. Given a polynomial ρ of degree m with the property, it must have a zero z with

r(x)

. Note that ρ̃ is a polynomial of degree m − 1. If z > tm ,

tm ≤ z ≤ tm+1 . Define ρ̃(x) = z−x

j

then ρ̃(tj )(−1) ≥ 0 for 0 ≤ j ≤ m and hence ρ̃ ≡ 0 by the induction hypothesis. If z = tm

then ρ̃(tj )(−1)j ≥ 0 for 0 ≤ j ≤ m − 1, but clearly one also has ρ̃(tm+1 )(−1)m ≥ 0. Again

by the induction hypothesis ρ̃ ≡ 0.

The following lemma plays a useful role in solving the relaxed minimization problem.

Lemma A.2. Let zj , j = 1, . . . , m − 1, be the critical points of the Chebyshev polynomial of

the first kind Tm , as above, and set z0 ≡ −1. Then

m(−1)m−1

for k = 0

m−1

2m−1

Y

(zk − zi ) =

(166)

m(−1)m−1−k

i=0

for k > 0

i6=k

2m−1 (1−zk )

Proof. Recall that Tm has leading coefficient 2m−1 . We obtain

Tm0 (z)

m−1

= m2

m−1

Y

(z − zi ),

(167)

i=1

and

Tm00 (z)

m−1

= m2

m−1

X m−1

Y

j=1 i=1

i6=j

33

(z − zi ),

(168)

and hence for 1 ≤ k ≤ m − 1

Tm00 (zk ) = m2m−1

m−1

Y

(zk − zi )

(169)

i=1

i6=k

=

m−1

m2m−1 Y

(zk − zi ).

1 + zk i=0

(170)

i6=k

Thus for 1 ≤ k ≤ m − 1 one has Tm0 (zk ) = 0 and Tm (zk ) = (−1)m−k , and so (163) reads

(1 −

or

m−1 m−1

Y

2 m2

zk )

(zk

1 + zk i=0

i6=k

m−1

Y

i=0

i6=k

− zi ) + m2 (−1)m−k = 0,

(zk − zi ) =

(−1)m−k−1 m

.

2m−1 (1 − zk )

(171)

(172)

On the other hand, as z0 = cos(π), we have using (161)

m−1

Y

(z0 − zi ) =

i=1

1

1

sin(mθ)

(−1)m−1 m

1

0

T

U

(z

)

=

lim

.

(z

)

=

=

m 0

0

m2m−1 m

2m−1

2m−1 θ→π sin θ

2m−1

(173)

References

[1] J. J. Benedetto, A. M. Powell, and Ö. Yılmaz. Sigma-Delta (Σ∆) quantization and

finite frames. IEEE Trans. Inform. Theory, 52(5):1990–2005, 2006.

[2] B. Bodmann and V. I. Paulsen. Frame paths and error bounds for sigma-delta quantization. Appl. Comput. Harmon. Anal., 22, 2007.

[3] P. Borwein and T. Erdélyi. Polynomials and polynomial inequalities, volume 161 of

Graduate Texts in Mathematics. Springer-Verlag, New York, 1995.

[4] A. R. Calderbank and I. Daubechies. The pros and cons of democracy. IEEE Trans.

Inform. Theory, 48(6):1721–1725, 2002. Special issue on Shannon theory: perspective,

trends, and applications.

[5] I. Daubechies and R. DeVore. Reconstructing a bandlimited function from very coarsely

quantized data: A family of stable sigma-delta modulators of arbitrary order. Ann. of

Math, 158:679–710, 2003.

34