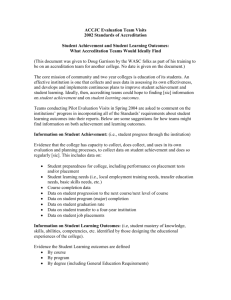

Assessing Student Learning: Victor Valley College
