Lectures TDDD10 AI Programming Multiagent Decision Making

advertisement

Lectures

AI Programming:

Introduction to RoboRescue

3Agents and Agents

4Multi-Agent and

5

Multi-Agent Decision Making

6Cooperation And Coordination

7

Cooperation And Coordination

8

Machine

9Knowledge

10

Putting It All

1

2

TDDD10 AI Programming

Multiagent Decision Making

Cyrille Berger

2 / 82

Lecture goals

Lecture content

Multi-agent decision in a

competitive environment

Learn about the concept of utility,

rational agents, voting and auctioning

3 / 82

Self-Interested

Social Choice

Auctions

Single Dimension Auctions

Combinatorial Auctions

4 / 82

Utilities and Preferences

Self-Interested Agents

Assume we have just two agents: Ag = {i,

Agents are assumed to be self-interested: they

have preferences over how the environment is

Assume Ω = {ω₁, ω₂, …}is the set of

“outcomes” that agents have preferences over

We capture preferences by utility

uᵢ = Ω→ ℝ

uⱼ = Ω→ ℝ

Utility functions lead to preference orderings

over outcomes: ω ⪰ ω’ means uᵢ(ω) ≥ uᵢ(ω’)

ω ⪲ ω’ means uᵢ(ω) > uᵢ(ω’)

6 / 82

Self-Interested Agents

What is utility?

If agents represent individuals or organizations then we

cannot make the benevolence assumption.

Utility is not money, but similar

Agents will be assumed to act to further there own

interests, possibly at expense of others.

Potential for conflict.

May complicate the design task enormously.

7 / 82

8 / 82

Multiagent Encounters (1/2)

Multiagent Encounters (2/2)

We need a model of the environment in which

these agents will act…

Examples of a state transformer

function

agents simultaneously choose an action to perform, and

as a result of the actions they select, an outcome in Ω will

result

the actual outcome depends on the combination of actions

assume each agent has just two possible actions that it

can perform, C (“cooperate”) and D (“defect”)

Environment behavior given by state

transformer function:

τ: Acⁱ ⨯ Acʲ → Ω

This environment is sensitive to actions of

both agents:

τ(D,D)=ω₁ τ(D,C)=ω₂ τ(C,D)=ω₃

Neither agent has any influence in this

environment:

τ(D,D)=ω₁ τ(D,C)=ω₁ τ(C,D)=ω₁

This environment is controlled by j

τ(D,D)=ω₁ τ(D,C)=ω₂ τ(C,D)=ω₁

9 / 82

Coordination game

Suppose we have the case where both agents can

influence the outcome, and they have utility

functions as follows:

10 / 82

Payoff Matrices

We can characterize the previous scenario

in a payoff matrix:

uᵢ(ω₁)=2 uᵢ(ω₂)=1 uᵢ(ω₃)=3 uᵢ(ω₄)=4

uⱼ(ω₁)=2 uⱼ(ω₂)=3 uⱼ(ω₃)=1 uⱼ(ω₄)=4

This environment is sensitive to actions of both agents:

τ(D,D)=ω₁ τ(D,C)=ω₂ τ(C,D)=ω₃ τ(C,C)=ω₄

With a bit of abuse of notation:

uᵢ(D,D)=2 uᵢ(D,C)=1 uᵢ(C,D)=3

uⱼ(D,D)=2 uⱼ(D,C)=3 uⱼ(C,D)=1

Agent i is the column player

Agent j is the row player

Then agent i’s preferences are:

C,C ⪰ᵢ C,D ≻ᵢ D,C ⪰ᵢ

“C” is the rational choice for i.

11 / 82

12 / 82

Disgrace of Gijón (World Cup 1982)

The Prisoner’s Dilemma

Two men are collectively charged with

a crime and held in separate cells, with

no way of meeting or communicating.

They are told that:

One game left: Germany uᵢ(≥3-0) = 2 uⱼ(≥3-0) =

uᵢ(2-0) = uᵢ(1-0) = 2 uⱼ(2-0) = uⱼ(1-0) =

uᵢ(a-a) = -1 uⱼ(a-a) =

uᵢ(0-a) = -1 uⱼ(0-a) = 2 (a >

Final score: Germany 1 - 0

if one confesses and the other does not, the confessor

will be freed, and the other will be jailed for three years

If both confess, then each will be jailed for two years

Both prisoners know that if neither

confesses, then they will each be jailed

for one year

13 / 82

The Prisoner’s Dilemma

Payoff matrix for prisoner’s dilemma:

14 / 82

Solution Concepts

How will a rational agent behave in

any given scenario?

Answered in solution concepts:

Top left: If both defect, then both get punishment for mutual

defection

Top right: If i cooperates and j defects, i gets sucker’s payoff

of 1, while j gets 4

Bottom left: If j cooperates and i defects, j gets sucker’s

payoff of 1, while i gets 4

Bottom right: Reward for mutual cooperation

15 / 82

dominant

Nash equilibrium

Pareto optimal

strategies that maximize social

16 / 82

Dominant Strategies (1/2)

Dominant Strategies (2/2)

Given any particular strategy (either C or D) of

agent i, there will be a number of possible

We say s₁ dominates s₂ if every outcome possible

by i playing s₁ is preferred over every outcome

possible by i playing s₂

A rational agent will never play a

dominated

So

in deciding

strategy

what to do, we can delete

dominated strategies

Unfortunately, there is not always a unique

undominated strategy

Rational action example:

Prisoner's Dilemna:

17 / 82

18 / 82

(Pure Strategy) Nash Equilibrium (1/2)

(Pure Strategy) Nash Equilibrium (2/2)

In general, we will say that two strategies s1

and s2 are in Nash equilibrium if:

Coordination game:

Neither agent has any incentive to deviate from

a Nash equilibrium

Prisoner's Dilemna:

under the assumption that agent i plays s₁, agent j can do no

better than play s₂; and

under the assumption that agent j plays s₂, agent i can do no

better than play s₁.

Unfortunately:

Not every interaction scenario has a Nash

equilibrium

Some interaction scenarios have more than one

Nash equilibrium

19 / 82

20 / 82

Pareto Optimality (1/2)

Pareto Optimality (2/2)

An outcome is said to be Pareto optimal (or Pareto

efficient) if there is no other outcome that makes one

agent better off without making another agent worse off.

If an outcome is Pareto optimal, then at least one agent

will be reluctant to move away from it (because this

agent will be worse off).

If an outcome ω is not Pareto optimal, then there is

another outcome ω’ that makes everyone as happy, if

not happier, than ω.

“Reasonable” agents would agree to move to ω’ in this

case. (Even if I don’t directly benefit from ω, you can

benefit without me suffering.)

Coordination game:

Prisoner's Dilemna:

21 / 82

Social Welfare (1/2)

The social welfare of an outcome ω is the sum of the

utilities that each agent gets from ω :

Think of it as the “total amount of utility in the

As a solution concept, may be appropriate when the

system”.

whole system (all agents) has a single owner (then

overall benefit of the system is important, not

individuals).

23 / 82

22 / 82

Social Welfare (2/2)

Coordination game:

Prisoner's Dilemna:

24 / 82

The Prisoner’s Dilemma

The Prisoner’s Dilemma

Solution

This apparent paradox is the fundamental

problem of multi-agent interactions.

It appears to imply that cooperation will not

occur in societies of self-interested agents.

Real world

D is a dominant

(D,D) is the only Nash

All outcomes except (D,D) are Pareto

(C,C) maximizes social

The individual rational action is defect

This guarantees a payoff of no worse than 2, whereas

cooperating guarantees a payoff of at most 1. So defection

is the best response to all possible strategies: both agents

defect, and get payoff = 2

But intuition says this is not the best outcome:

Surely they should both cooperate and each get payoff of 3!

25 / 82

The Iterated Prisoner’s Dilemma

One answer: play the game more than

once

If you know you will be meeting your

opponent again, then the incentive to

defect appears to evaporate

Cooperation is the rational choice in the

infinitely repeated prisoner’s dilemma

27 / 82

nuclear arms reduction (“why don’t I keep mine. . . ”)

free rider systems — public

television licenses.

The prisoner’s dilemma is

Can we recover

26 / 82

Backwards Induction

But…, suppose you both know that you will play

the game exactly n times

On round n - 1, you have an incentive to defect, to gain that

extra bit of payoff…

But this makes round n – 2 the last “real”, and so you have

an incentive to defect there, too.

This is the backwards induction problem.

Playing the prisoner’s dilemma with a fixed,

finite, pre-determined, commonly known

number of rounds, defection is the best strategy

28 / 82

Axelrod’s Tournament

Strategies in Axelrod’s Tournament

Suppose you play iterated prisoner’s

dilemma against a range of

What strategy should you choose, so as

opponents…

to maximize your overall payoff?

Axelrod (1984) investigated this

problem, with a computer tournament

for programs playing the prisoner’s

dilemma

RANDOM

ALLD: “Always defect” — the hawk

TIT-FOR-TAT:

On round u = 0, cooperate

On round u > 0, do what your opponent did on round u –

TESTER:

On 1st round, defect. If the opponent retaliated, then play

TIT-FOR-TAT. Otherwise intersperse cooperation and

defection.

JOSS:

As TIT-FOR-TAT, except periodically defect

29 / 82

Axelrod’s Tournament results

TIT-FOR-TAT won the first tournament

A second tournament was called

TIT-FOR-TAT won the second

tournament as well

31 / 82

30 / 82

Recipes for Success in Axelrod’s Tournament

Axelrod suggests the following rules

for succeeding in his tournament:

Don’t be envious:

Don’t play as if it were zero sum!

Be nice:

Start by cooperating, and reciprocate cooperation

Retaliate

Always punish defection immediately, but use “measured”

force — don’t overdo it

Don’t hold

Always reciprocate cooperation immediately

32 / 82

Competitive and Zero-Sum Interactions

Where preferences of agents are

diametrically opposed we have

strictly competitive scenarios

Zero-sum encounters are those

where utilities sum to zero:

uᵢ(ω) + uⱼ(ω) = 0

Matching Pennies

Players i and j simultaneously choose the

face of a coin, either “heads” or “tails”.

If they show the same face, then i wins,

while if they show different faces, then j

wins.

for all ω ∊ Ω

Zero sum implies strictly

Zero sum encounters in real life are

very rare, but people tend to act in

many scenarios as if they were zero

33 / 82

34 / 82

Mixed Strategies for Matching Pennies

Mixed Strategies

No pair of strategies forms a pure

strategy Nash Equilibrium: whatever

pair of strategies is chosen, somebody

will wish they had done something else.

The solution is to allow mixed

A mixed strategy has the form

play α₁ with probability p₁

play α₂ with probability p2₂

...

play αk with probability pk.

that p₁ + p₂ + … + pₖ = 1.

Nash proved that every finite game has a

Nash equilibrium in mixed strategies.

play “heads” with probability

play “tails” with probability

This is a Nash Equilibrium

35 / 82

36 / 82

Social Choice

Social choice theory is concerned with

group decision making.

Classic example of social choice theory:

voting.

Formally, the issue is combining

preferences to derive a social outcome.

Social Choice

38 / 82

Components of a Social Choice Model

Assume a set Ag = {1, …, n} of

voters.

These are the entities who expresses

preferences.

Voters make group decisions wrt a set

Ω = {ω₁, ω₂, …} of outcomes.

Think of these as the candidates.

If |Ω| = 2, we have a pair wise

election.

39 / 82

Preferences

Each voter has preferences over W: an

ordering over the set of possible

outcomes Ω.

Example, Suppose:

Ω = {gin, rum, brandy, whisky}

then we might have agent mjw with preference order:

ω(mjw) = (brandy, rum, gin, whisky)

meaning:

brandy >mjw rum >mjw gin >mjw whisky

40 / 82

Preference Aggregation

The fundamental problem of social choice

theory:

Given a collection of preference orders, one

for each voter, how do we combine these to

derive a group decision, that reflects as

closely as possible the preferences of voters?

variants of preference aggregation:

social welfare

social choice

Social Welfare Functions

Let П(Ω) be the set of preference

orderings over Ω.

A social welfare function takes the voter

preferences and produces a social

preference order:

We define ≻* as the outcome of a social

welfare function

whisky ≻* gin ≻* brandy ≻* rum ≻*

S ≻* M ≻* SD ≻* MP ≻* C ≻* V ≻* FP ≻*KD ≻* FI ≻*

41 / 82

Social Choice Functions

Sometimes, we just to select one of

the possible candidates, rather than

a social order.

This gives social choice functions:

Example: presidential election.

42 / 82

Voting Procedures: Plurality

Social choice function: selects a

single outcome.

Each voter submits

Each candidate gets one point for

every preference order that ranks them

Winner is the one with largest number

of points.

Example: Political elections in UK, France, USA...

If we have only two candidates, then

plurality is a simple majority election.

43 / 82

44 / 82

Anomalies with Plurality

Strategic Manipulation by Tactical Voting

Suppose your preferences

Suppose |Ag| = 100 and Ω = {ω₁,

ω₂, ω₃} with:

ω₁ ≻ ω₂ ≻ ω₃

while you believe 49% of voters have

40% voters voting for

30% of voters voting for

30% of voters voting for

ω₂ ≻ ω₁ ≻ ω₃

and you believe 49% have

ω₃ ≻ ω₂ ≻ ω₁

You may do better voting for w2, even though

this is not your true preference profile.

This is tactical voting: an example of

strategic manipulation of the vote.

Especially a problem in two legs

With plurality, ω₁ gets elected even

though a clear majority (60%)

prefer another candidate!

45 / 82

46 / 82

Condorcet’s Paradox

Applications of social choice theory

Suppose Ag = {1, 2, 3} and Ω = {ω₁, ω₂, ω₃}

Main application is for human choice

and decision making

Results aggregation

ω₁ ≻₁ ω₂ ≻₁ ω₃

ω₂ ≻₂ ω₃ ≻₂ ω₁

ω₃ ≻₃ ω₁ ≻₃ ω₂

For every possible candidate, there is another

candidate that is preferred by a majority of

This is Condorcet’s paradox: there are situations in

which, no matter which outcome we choose, a

majority of voters will be unhappy with the

outcome chosen.

47 / 82

aggregate the output of several search engines

48 / 82

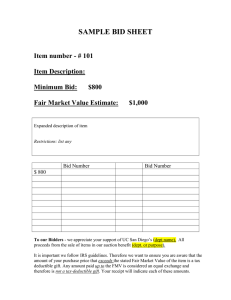

Application of auctions

With the rise of the Internet, auctions have

become popular in many e-commerce applications

(e.g. eBay)

Auctions

are an efficient tool for reaching

agreements in a society of self-interested

Auctions

For example, bandwidth allocation on a network, sponsor links

Auctions can be used for efficient resource

allocation within decentralized

computational

Frequently

utilized

systems

for solving multi-agent

and multi-robot coordination problems

For example, team-based exploration of unknown terrain

50 / 82

What is an Auction?

An auction takes place between an agent

known as the auctioneer and a collection

of agents known as the bidders

Limit Price

Each trader has a value or limit price

that they place on the good.

The goal of the auction is for the auctioneer to allocate

all goods to the bidders

The auctioneer desires to maximize the price and

bidders desire to minimize the price

Assume some initial

Exchange is the free alteration of

allocations of goods and money between

51 / 82

A buyer who exchanges more than their limit price for a

good makes a loss.

A seller who exchanges a good for less than their limit

price makes a loss.

Limit prices clearly have an effect on

the behavior of traders.

There are several models, embodying

different assumptions about the nature

of the good.

52 / 82

Limit Price

Winner's curse

Private value

Termed in the 1950s:

Common value

For example

Good has an value to me that is independent of what it is

worth to you.

Textbook gives the example of John Lennon’s last dollar bill.

The good has the same value to all of us, but we have

differing estimates of what it is.

Winner’s curse

Correlated value

Our values are related.

The more you are prepared to pay, the more I should be

prepared to pay.

Oil companies bid for drilling rights in the Gulf of Mexico

Problem was the bidding process given the uncertainties in estimating the

potential value of an offshore oil field

Competitive bidding in high risk situations, by Capen, Clapp and Campbell,

Journal of Petroleum Technology, 1971

An oil field had an actual intrinsic value of $10 million

Oil companies might guess its value to be anywhere from $5 million to $20

million

The company who wrongly estimated at $20 million and placed a bid at that

level would win the auction, and later find that it was not worth that much

In many cases the winner is the person who has

overestimated the most ⇒ “The Winner’s curse”

Bid Shading: Offer bid below a certain amount of the

valuation

53 / 82

Auction Parameters

Auction

One shot: Only one bidding

Ascending: Auctioneer begins at minimum price, bidders

increase bids

Descending: Auctioneer begins at price over value of good and

lowers the price at each round

Continuous:

Auctions may

Standard Auction: One seller and multiple buyers

Reverse Auction: One buyer and multiple sellers

Double Auction: Multiple sellers and multiple buyers

Combinatorial

54 / 82

Single versus Multi-dimensional

Single dimensional auctions

The only content of an offer are the price and

quantity of some specific type of good.

“I’ll bid $200 for those 2

Multi dimensional auctions

Offers can relate to many different aspects of

many different goods.

“I’m prepared to pay $200 for those two red chairs,

but $300 if you can deliver them tomorrow.”

Frequency ranges for cell

Buyers and sellers may have combinatorial valuations for

bundles of goods

55 / 82

56 / 82

English Auction

An example of first-price open-cry

ascending auctions

Protocol:

Single Dimension Auctions

Auctioneer starts by offering the good at a low price

Auctioneer offers higher prices until no agent is willing to pay

the proposed level

The good is allocated to the agent that made the highest offer

Properties

Generates competition between bidders (generates revenue for

the seller when bidders are uncertain of their valuation)

Dominant strategy: Bid slightly more than current bit, withdraw

if bid reaches personal valuation of good

Winner’s curse (for common value goods)

58 / 82

Dutch Auction

First-Price Sealed-Bid Auctions

First-price sealed-bid auctions are one-shot auctions:

Protocol:

Dutch auctions are examples of first-price opencry descending auctions

Protocol:

Auctioneer starts by offering the good at artificially high value

Auctioneer lowers offer price until some agent makes a bid equal

to the current offer price

The good is then allocated to the agent that made the offer

Properties

Items are sold rapidly (can sell many lots within a single day)

Intuitive strategy: wait for a little bit after your true valuation has

been called and hope no one else gets in there before you (no

general dominant strategy)

Winner’s curse also possible

59 / 82

Within a single round bidders submit a sealed bid for the

The good is allocated to the agent that made highest

Winner pays the price of highest

Often used in commercial auctions, e.g., public building contracts

Problem: the difference between the highest and second

highest bid is “wasted money” (the winner could have

offered less)

Intuitive strategy: bid a little bit less than your true

valuation (no general dominant strategy)

As more bidders as smaller the deviation should

60 / 82

Vickrey Auctions

Generalized first price auctions

Proposed by William Vickrey in 1961 (Nobel Prize in Economic

Sciences in 1996)

Vickrey auctions are examples of second-price sealed-bid

one-shot auctions

Protocol:

within a single round bidders submit a sealed bid for the good

good is allocated to agent that made highest bid

winner pays price of second highest bid

Used by Yahoo for “sponsored links” auctions

Introduced in 1997 for selling Internet advertising by

Yahoo/Overture (before there were only “banner ads”)

Advertisers submit a bid reporting the willingness to pay on a

per-click basis for a particular keyword

Cost-Per-Click (CPC)

Advertisers were billed for each “click” on sponsored

links leading to their page

Dominant strategy: bid your true valuation

if you bid more, you risk to pay too much

if you bid less, you lower your chances of winning while still having to pay

the same price in case you win

Antisocial behavior: bid more than your true valuation to

make opponents suffer (not “rational”)

For private value auctions, strategically equivalent to the

English auction mechanism

The links were arranged in descending order of bids, making

highest bids the most prominent

Auctions take place during each

However, auction mechanism turned out to be unstable!

Bidders revised their bids as often as

61 / 82

62 / 82

Generalized second price auctions

Introduced by Google for pricing

sponsored links (AdWords

Select)

Observation: Bidders generally do

not want to pay much more than the

rank below them

Therefore: 2nd price

Further

Combinatorial Auctions

Advertisers bid for keywords and keyword

combinations

Rank: CPC_BID X quality score

Price: with respect to lower ranks

http://www.chipkin.com/googleadwords-actual-cpccalculation/

After seeing Google’s success, Yahoo

also switched to second price

auctions in 2002

63 / 82

Combinatorial Auctions

Complements and Substitutes

In a combinatorial auction, the auctioneer puts

several goods on sale and the other agents

submit bids for entire bundles of goods

Given a set of bids, the winner determination

problem is the problem of deciding which of

the bids to accept

The value an agent assigns to a bundle of goods may

depend on the combination

Complements: The value assigned to a set is greater

than the sum of the values assigns to its elements

The solution must be feasible (no good may be allocated

to more than one agent)

Ideally, it should also be optimal (in the sense of

maximizing revenue for the auctioneer)

A challenging algorithmic problem

65 / 82

Example: „a pair of shoes” (left shoe and a right

Substitutes: The value assigned to a set is lower than

the sum of the values assigned to its elements

Example: a ticket to the theatre and another one to a football

match for the same night

In such cases an auction mechanism allocating one

item at a time is problematic since the best bidding

strategy in one auction may depend on the outcome

of other auctions

66 / 82

Optimal Winner Determination Algorithm

Protocol

One auctioneer, several bidders, and many items to be

Each bidder submits a number of package bids specifying

sold

the valuation (price) the bidder is prepared to pay for a

particular bundle

The auctioneer announces a number of winning bids

The winning bids determine which bidder obtains which

item, and how much each bidder has to pay

No item may be allocated to more than one bidder

Examples of package bids:

Agent 1: ({a, b}, 5), ({b, c}, 7), ({c, d}, 6)

Agent 2: ({a, d}, 7), ({a, c, d}, 8)

Agent 3: ({b}, 5), ({a, b, c, d}, 12)

Generally, there are 2n − 1 non-empty bundles for n items,

how to compute the optimal solution?

67 / 82

An auctioneer has a set of items M = {1,2,…,m} to sell

There are N={1,2,…,n} buyers placing bids

Buyers submit a set of package bids B = {B1,B2,…,Bn}

A package bid is a tuple B = [S, v(S)], where S ⊆ M is

a set of items (bundle) and vi(S) > 0 buyer’s i true

valuation (price)

xS,i ∈ {0, 1} is a decision variable for assigning

bundle S to buyer i

The winner determination problem (WDP) is to label the

bids as winning or losing (by deciding each xs,i so as to

maximize the sum of the total accepted bid price)

This is NP-Complete ! Can be solved with an integer

program solver, or heuristic search

68 / 82

Solving WDPs by Heuristic Search

Two ways of representing the state space:

Branch-on-items:

A state is a set of items for which an

allocation decision has already been made

Branching is carried out by adding a further

Branch-on-bids:

Branch-on-Items

Branching based on the

question: “What bid should this

item be assigned to?”

Each path in the search tree

consists of a sequence of

disjoint bids

Bids that do not share items with

each other

A path ends when no bid can be added

to it at each node are the sum

Costs

A state is a set of bids for which an

acceptance decision has already been made

Branching is carried out by adding a further

of the prices of the bids

accepted on the path

69 / 82

Problem with branch-on-items

70 / 82

Example of branch-on-items

What if the auctioneer's revenue can increase

by keeping items?

Example:

There is no bid for 1,

$5 bid for 2,

$3 bid for {1;2}

Bids: {1,2},

{2,3}, {3}, {1;3}

We add Dummy

Bids: {1}, {2}

Thus, better to keep 1 and sell 2 than selling

The auctioneer's possibility of keeping items can

be implemented by placing dummy bids of price

zero on those items that received no 1-item bids

(Sandholm 2002)

71 / 82

72 / 82

Branch-on-bids

Branch-on-bids - Example

Branching is based on the question:

“Should this bid be accepted or rejected?“

Binary tree

When branching on a bid, the children in

the search tree are the world where that bid

is accepted (IN), and the world where that

bid is rejected (OUT)

No dummy bids are needed

First a bid graph is constructed that

represents all constraints between the bids

Then, bids are accepted/rejected until all

bids have been handled

Bids: {1,2},

{2,3}, {3}, {1;3}

On accept: remove all constrained bids from the graph

On reject: remove bid itself from the graph

73 / 82

Heuristic Function

74 / 82

Auctions for Multi-Robot Exploration

For any node N in the search tree, let g(N) be the

revenue generated by bids that were accepted

according

The

heuristic

until

function

N

h(N) estimates for every node N

how much additional revenue can be expected ongoing

from N

An upper bound on h(N) is given by the sum over the

maximum contribution of the set of unallocated items A:

Tighter bounds can be obtained by solving the linear

program relaxation of the remaining items (Sandholm

2006)

75 / 82

Consider a team of mobile robots that has to visit a number

of given targets (locations) in initially partially unknown

Examples of such tasks are cleaning missions, spaceterrain

exploration, surveillance, and search and rescue

Continuous re-allocation of targets to robots is necessary

For example, robots might discover that they are separated by a blockage

from their target

To allocate and re-allocate the targets among themselves,

the robots can use auctions where they sell and buy targets

Team objective can be to minimize the sum of all path costs,

hence, bidding prices are estimated travel costs

The path cost of a robot is the sum of the edge costs along its

path, from its current location to the last target that it visits

76 / 82

Multi-Robot Exploration

General Exploration

Robot always follow a minimum cost path that visits all

allocated targets

Whenever a robot gains more information about the terrain, it

shares this information with the other robots

If the remaining path of at least one robot is blocked, then all

robots put their unvisited targets up for auction

The auction(s) close after a predetermined amount of time

Constraints: each robot wins at most one bundle and each target is

contained in exactly one bundle

After each auction, robots gained new targets or exchanged

targets with other robots

Then, the cycle repeats

Three robots exploring Mars. The robots’ task is to gather data around

the four craters, e.g. to visit the highlighted target sites. Source: N.

Kalra

77 / 82

78 / 82

Single-Round Combinatorial Auction

Parallel Single-Item Auctions

Protocol:

Protocol:

Every robot bids all possible bundles of targets

The valuation is the estimated smallest path cost needed

to visit all targets in the bundle (TSP)

A central auctioneer determines and informs the winning

robots within one round

Every robot bids on each target in parallel until

all targets are asigned

The valuation is the smallest path cost from the

robots current position to the target

Similar to Target Clustering

Optimal team performance:

Advantage:

Combinatorial auctions take all positive and negative

synergies between targets into account

Simple to implement and computation and

communication efficient

Minimization of the total path

Drawbacks:

Disadvantage:

Robots cannot bid on all possible bundles of targets

because the number of possible bundles is exponential in

the number of targets

To calculate costs for each bundle requires to calculate the

smallest path cost for visiting a set of targets (Traveling

Salesman Problem)

Winner determination is NP-hard

The team performance can be highly suboptimal

since it does not take any synergies between the

targets into account

79 / 82

80 / 82

Sequential Single-Item Auctions

Summary

Utilities and competitive strategies

Voting mechanism

We discussed English, Dutch, First-Price SealedBid, and Vickrey auctions

Generalized second price auctions have shown

good properties in practice, however, “truth

telling” is not a dominant strategy

Combinatorial auctions are a mechanism to

allocate a number of goods to a number of

agents

Protocol:

Targets are auctioned after the sequence T1, T2, T3, T4, …

The valuation is the increase in its smallest path cost that

results from winning the auctioned target

The robot with the overall smallest bid is allocated the

corresponding target

Finally, each robot calculates the minimum-cost path for

visiting all of its targets and moves along this path

Advantages:

Hill climbing search: some synergies between targets are

taken into account (but not all of them)

Simple to implement and computation and communication

efficient

Since robots can determine the winners by listening to the

bids (and identifying the smallest bid) the method can be

executed decentralized

Disadvantages:

Order of targets change the result

81 / 82

82 / 82