Automatic Speech Recognition for Marathi Isolated Words Web Site: www.ijaiem.org Email: ,

advertisement

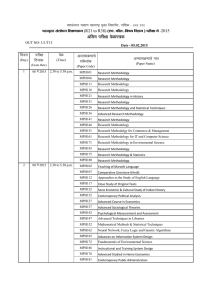

International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com Volume 1, Issue 3, November 2012 ISSN 2319 - 4847 Automatic Speech Recognition for Marathi Isolated Words Gajanan Pandurang Khetri1, Satish L. Padme2, Dinesh Chnadra Jain3 ,Dr. H. S. Fadewar4, Dr. B. R. Sontakke5 and Dr. Vrushsen P. Pawar6 1 Research scholar, department of Computer Science, Singhania University, Rajasthan, India 2 Assistant Librarian, Dr. Babasaheb Ambedkar Marathwada Universtiy, Aurangabad, Maharashtra, India 5 Research Scholar, Department of Computer Science, SRTM University, Nanded, Maharashtra, India 4 Assistant Professor, Department of Computational Science, Swami Ramanand Teerth Marathwada University, Nanded, Maharashtra, India 5 Associate Professor, Department of Mathematics, Pratisthan Mahavidyalaya, Paithan, Maharashtra, India 6 Assistant Professor and Head, Department of Computational Science, Swami Ramanand Teerth Marathwada University, Nanded, Maharashtra, India. ABSTRACT A biometric recognition system uses for law enforcement and legal purposes of secure entry in private and government institution and organization. In this paper, speech recognition is one of the techniques of biometrics, to collect the words convert to an acoustic signal. The use of isolators Marathi word feature extraction, to identify and verify each spoken word is a field that is being actively researched. The results from MFCC and VQ algorithm apply on IWAMSR databases, found easy access of end users. Keywords: Speech recognition, Marathi speech recognition, IWAMSR, MFCC and vector quantization. 1. INTRODUCTION Biometrics first came into extensive use for law-enforcement and legal purposes—identification of criminals and illegal aliens, security clearances for employees in sensitive jobs, paternity determinations, forensics, positive identifications of convicts and prisoners, and so on. Today, however, many civilian and private-sector applications are increasingly using biometrics to establish personal recognition [1]. Biometrics in its various forms provides the potential for reliable, convenient, automatic person recognition. Systems based on fingerprints, faces, hand geometries, iris images, vein patterns and voice signals are now all available commercially. While this is true, it is also true that the take-up of this technology has been slow. Biometrics applications act as a guard control, limiting access to something or somewhere; it is the something or the somewhere that in turn defines the potential merits of biometric-based solutions. Yet biometrics has not entered every-day life to any extent whatsoever. If it had, then conventional guards, physical keys and the allpervading passwords and PIN numbers essential to gain access to mobile phones, computer software, buildings and so on, all would be in decline. This lack of take-up is even more surprising given the rate at which passwords and PINs are forgotten, as indicated by the prevalence of the web-page rescue message “Forgotten your password?” In short, why has biometric technology not made PINs, passwords and many physical keys things of the past? The answer must lie in factors such as cost, accuracy and convenience of use [13]. The main used of speech recognition is collected words (captured by talker, microphone and telephone) convert to an acoustic signal. The use of isolated Marathi word feature extraction, to identify and verify each spoken word is a field that is being actively researched. Isolated speech recognition refers to the ability of the speech recognizer to identify and recognize a specific word in a stream of words [20]. Volume 1, Issue 3, November 2012 Page 69 International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com Volume 1, Issue 3, November 2012 ISSN 2319 - 4847 2. RELATED WORK Features extraction in ASR is the computation of a sequence of feature vectors which provides a compact representation of the given speech signal. Ursin [2] identified the most common operations required for front-end (features extraction) techniques. Those operations include; sampling with a preferred sampling rate between 16000 to 22000 times a second for speech processing corresponding to 16 kHz to 22 kHz. According to Martens [3], there are various speech features extraction techniques, including Linear Predictive Coding (LPC), Perceptual Linear Prediction (PLP) and MelFrequency Cepstral Coefficient (MFCC). However, MFCC has been the most frequently used technique especially in speech recognition and speaker verification applications. Feature extraction is usually performed in three main stages. The first stage is called the speech analysis or the acoustic front-end, which performs spectro-temporal analysis of the speech signal and generates raw features describing the envelope of the power spectrum of short speech intervals. The second stage compiles an extended feature vector composed of static and dynamic features. Finally, the last stage transforms these extended feature vectors into more compact and robust vectors that are then supplied to the recognizer. [3].The main objective of features extraction is to extract characteristics from the speech signal that are unique, discriminative, robust and computationally efficient to each word which are then used to differentiate between different words [2]. Based on a highly simplified model for speech production, the linear prediction coding (LPC) algorithm is one of the earliest standardized coders, which works at low bit-rate inspired by observations of the basic properties of speech signals and represents an attempt to mimic the human speech production mechanism [9]. In addition, Chetouani et al. [10] considered LPC as a useful method for feature extraction based on a modelization of the vocal tract. Another popular feature set is the set of perceptual linear prediction (PLP) coefficients, which was first introduced by Hermansky [11], who formulated PLP as a method for deriving a more auditory-like spectrum based on linear predictive (LP) analysis of speech, which is achieved by making some engineering approximations of the psychophysical attributes of the human hearing process [12]. PLP introduces an estimation of the auditory properties of human ear, and takes features of the human ear into account more specifically than many other acoustical front end techniques [11]. The most prevalent and dominant method used to extract spectral features is calculating Mel-Frequency Cepstral Coefficients (MFCC). MFCCs are one of the most popular feature extraction techniques used in speech recognition based on frequency domain using the Mel scale which is based on the human ear scale [10]. MFCCs being considered as frequency domain features are much more accurate than time domain features [14]. Huang et al. [14] also defined the Mel-Frequency Cepstral Coefficients (MFCC) as a representation of the real Cepstral of a windowed short-time signal derived from the Fast Fourier Transform (FFT) of that signal. The difference from the real Cepstral is that a nonlinear frequency scale is used, which approximates the behavior of the auditory system. According to Zoric [15], MFCC is an audio feature extraction technique which extracts parameters from the speech similar to ones that are used by humans for hearing speech, while at the same time, deemphasizes all other information. As MFCCs take into consideration the characteristics of the human auditory system, they are commonly used in the automatic speech recognition systems. MFCCs are extracted by transforming the speech signal which is the convolution between glottal pulse and the vocal tract impulse response into a sum of two components known as the cepstrum that can be separated by band pass linear filters [16]. MFCCs extraction involves a frame-based analysis of a speech signal where the speech signal is broken down into a sequence of frames. Each frame undergoes a sinusoidal transform (Fast Fourier Transform) in order to obtain certain parameter which then undergoes Mel-scale perceptual weighting and de-correlation. The result is a sequence of feature vectors describing useful logarithmically compressed amplitude and simplified frequency information [17]. According to Martens [3], MFCC features are obtained by first performing a standard Fourier analysis, and then converting the power-spectrum to a mel-frequency spectrum. Therefore, MFCC will be obtained by taking the logarithm of that spectrum and by computing its inverse Fourier transform. Quantization is the process of approximating continuous amplitude signals by discrete symbols, which can be quantization of a single signal value or parameter known as scalar quantization, or joint quantization of multiple signal values or parameters known as vector quantization [14]. VQ offers several unique advantages over scalar quantization, including the ability to exploit the linear and nonlinear dependencies among the vector components, and is highly versatile in the selection of multidimensional quantizer cell shapes. Due to these reasons, VQ results in lower distortion than scalar quantization [9]. According to Goldberg and Riek [18], VQ is a general class of methods that encode groups of data rather than individual samples of data in order to exploit the relation among elements in the group to represent the group as a whole more efficiently than each element by itself. Vector quantization (VQ) is not a method of producing clusters or partitions of a data set but rather an application of many of the clustering algorithms already presented with a main purpose to perform data compression [19]. Thus, it is lossy data compression method which maps k-dimensional vectors into a finite set of vectors [15]. Volume 1, Issue 3, November 2012 Page 70 International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com Volume 1, Issue 3, November 2012 ISSN 2319 - 4847 3. PREPROCESSING 3.1 Features extraction using MFCC algorithm According to Ursin [2], MFCC is considered as the standard method for feature extraction in speech recognition tasks. Perhaps the most popular features used for speech recognition today are the mel-frequency cepstral coefficients (MFCCs), which are obtained by first performing a standard Fourier analysis, and then converting the power-spectrum to a melfrequency spectrum. By taking the logarithm of that spectrum and by computing its inverse Fourier transform one then obtains the MFCC. The individual features of the MFCC seem to be just weakly correlated, which turns out to be an advantage for the creation of statistical acoustic models [3]. MFCC has generally obtained a better accuracy and a minor computational complexity with respect to alternative processing as compared to other features extraction techniques [4]. Mel-Frequency Cepstral Coefficient (MFCC) is a deconvolution algorithm applied in order to obtain the vocal tract impulse response from the speech signal. It transforms the speech signal which is the convolution between glottal pulse and the vocal tract impulse response into a sum of two components known as the cestrum. This computation is carried out by taking the inverse DFT of the logarithm of the magnitude spectrum of the speech frame [8] (1) Where, h ^ [n], u ^ [n] and x ^ [n] are the complex cestrum of h[n], u[n] and x[n] respectively. 3.2 Features training using VQ algorithm The training process of the VQ codebook applies an important algorithm known as the LBG VQ algorithm, which is used for clustering a set of L training vectors into a set of M codebook vectors. This algorithm is formally implemented by the following recursive procedure: [5], the following steps are required for the training of the VQ codebook using the LBG algorithm as described by Rabiner and Juang [6]. 1. Design a 1-vector codebook; this is the centroid of the entire set of training vectors. Therefore, no iteration is required in this step. 2. Double the size of the codebook by splitting each current codebook yn according to the following rule (2) (2) Where, n varies from 1 to the current size of the codebook, and _ is the splitting parameter, whereby _ is usually in the range of (0.01 _ _ _ 0.05). 3.3 Feature matching testing using euclidean distances measure The matching of an unknown Marathi word is performed by measuring the Euclidean distance between the feature vectors of the unknown Marathi word to the model of the known Marathi words in the database. The goal is to find the codebook that has the minimum distance measurement in order to identify the unknown word [7]. For example in the testing or identification session, the Euclidean distance between the features vector and codebook for each Marathi spoken word is calculated and the word with the smallest average minimum distance is picked as shown in the equation below (3). (3) Where, xi is the ith input features vector, yi is the ith features vector in the codebook, and d is the distance between xi and yi. Volume 1, Issue 3, November 2012 Page 71 International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com Volume 1, Issue 3, November 2012 ISSN 2319 - 4847 4. EXPERIMENTAL SETUP IWAMSR database created using three steps, one is talking person, talking condition, the transducers and transmission system. IWAMSR The first step contains five different speakers out of whom their speech samples were collected. Those five speakers include three male and two female speakers belonging to different ages, genders and races. Second step follow speaking condition in which the speech samples were collected from, which basically refer to the environment of the recording stage, finally third step is transducers and transmission system, speech samples were recorded and collected using a normal microphone. Database1 collects 63 unknown Marathi speech samples contain six speech samples collected from two different talkers, which are trained to produce the VQ codebook. Database2 collects 90 unknown Marathi speech samples contain one male talker and remaining was collected at tested against recording. Database3 collects 36 unknown Marathi speech samples contain nine speech samples from four different talkers who are male and female, which are trained to produce VQ codebook. The experiments were carried out Pentium IV processor with 3.0 GHz, hard disk drive is 200GB, 1GB Random Access Memory and talker. 5. EXPERIMENTAL RESULTS The feature matching stage was done for three different databases of IWAMSR. Figure 1, 2 shows three different databases of IWAMSR, each database contains number of unknown speech samples for testing purpose. database1, database2 and database3 contain 63, 90 and 36 unknown speech samples were tested and yielding an accuracy rate is near about 85.71%, 31.11% and 88.88%. Database1 of IWAMSR out of 63 unknown speech samples found 54 correct matching and remaining 9 speech samples are false matching. Database2 of IWAMSR out of 90 unknown speech samples found 28 correct matching and 62 speech samples are false matching. Database3 of IWAMSR out of 36 unknown speech samples found 32 correct matching and 4 speech samples are false matching. Table 1 shows, FAR (%) results on IWAMSR databases. Figure 1 Testing Results of various IWAMSR DATABASES Figure 2 Accuracy Rate in IWAMSR Databases Volume 1, Issue 3, November 2012 Page 72 International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com Volume 1, Issue 3, November 2012 ISSN 2319 - 4847 Table 1: FAR (%) Results on IWAMSR DATABASE IWAMSR Databases FAR (%) Database1 Database2 Database3 0.14 0.68 0.11 6. CONCLUSION FIWAMSR database1 has better performance than IWAMSR database2. Database3 found high recognition rate it main reason behind this database contains nine speech samples collected from different male and female talkers. This research has satisfy the fasten process of communication. Also, this research will satisfy the end users and easy access for that purpose. This system could be implemented in various Marathi businesses; Marathi organization Marathi based financial institution and Marathi academic institutions. References [1] Salil Prabhakar, Sharath Pankanti and Anil K. Jain, [2003], “Biometric Recognition: Security and Privacy Concerns,” IEEE Security and Privacy Magazine, pp. 33-42. J. S. Mason and J. D. Brand, “The Role of dynamics in Visual Speech Biometrics,” IEEE, 2002. [2] M. Ursin, “Triphone Clustering In Finnish Continuous Speech recognition,” Master Thesis, Department of Computer Science, Helsinki University of Technology, Finland, 2002. [3] J. P. Martens, “Continuous Speech Recognition over the Telephone,” Electronics and Information Systems, Ghent University, Belgium, 2000. [4] S. B. Davis and P. Mermelstein, “Comparison of Parametric Representations of Monosyllabic Word recognition In Continuously Spoken Sentences,” IEEE Transactions on Acoustics, Speech and Signal Processing, vol. 28, pp. 357- 366, 1980. [5] Y. Linde, A. Buzo and R. M. Gray, “An Algorithm for Vector Quantizer Design,” IEEE Transactions on Communications, vol. COM28, no 1, pp. 84-95, 1980. [6] L. Rabiner and B. H. Juang, “Fundamentals of speech recognition,” Prentice Hall, NJ, USA, 1993. [7] P. Franti, T. Kaukoranta and O. Nevalainen, “On the Splitting Method for Vector Quantization Codebook Generation,” Optical Engineering;, vol. 36, no. 11, pp. 3043-3051, 1997. [8] C. Becchetti and L. P. Ricotti, “Speech Recognition Theory and C++ Implementation,” John Wiley and Sons Ltd;, England, 1999. [9] C. C. Wai, “Speech Coding Algorithms Foundation and Evolution of Standardized Coders,” John Wiley and Sons Inc., NJ, USA, 2003 [10] M. Chetouani, B. Gas, J. L. Zarader and C. Chavy, “Neural Predictive Coding for Speech Discriminant Feature Extraction: The DFE-NPC,” ESANN'2002 proceedings – European Symposium on Artificial Neural Networks, Bruges, Belgium, pp. 275-280, 2002. [11] H. Hermansky, “Perceptual Linear Predictive (PLP) Analysis of Speech,” The Journal of the Acoustical Society of America, vol. 87, no. 4, pp. 1738–1752, 1990. [12] B. Milner, “A comparison of front -end Configurations for Robust Speech Recognition,” ICASSP ’2002, vol. 01, pp. 797–800, 2002. [13] J. S. Mason and J. D. Brand, “The Role of dynamics in Visual Speech Biometrics,” IEEE, 2002. [14] X. Huang, A. Acero and H. W. Hon, “Spoken Language Processing: A Guide to Theory, Algorithm, and System Development,” Prentice Hall, Upper Saddle River, NJ, USA, 2001. [15] G. Zoric, “Automatic Lip Synchronization by Speech Signal Analysis,” Master Thesis, Department of Telecommunications, Faculty of Electrical Engineering and Computing, University of Zagreb, Croatia, 2005. [16] O. Khalifa, S. Khan, M. R. Islam, M. Faizal and D. Dol, “Text Independent automatic Speaker Recognition,” 3rd International Conference on Electrical & Computer Engineering, Dhaka, Bangladesh, pp. 561-564, 2004. [17] C. R. Buchanan, “Informatics Research Proposal – Modeling the Semantics of sound,” http://www.inf.ed.ac.uk/teaching/courses/diss/projects/s0454291.pdf, retrieved in March 2005. [18] R. Goldberg and L. Riek, “A Practical Handbook of speech Coders,” CRC Press,USA, 2000. [19] A. R. Webb, “Statistical pattern Recognition,” John Wiley and Sons Ltd., England, 2002. [20] C. R. Spitzer, “The Avionics Handbook,” CRC Press, USA, 2001 AUTHOR Gajanan P. Khetri received the M.Sc (Information Technology) degree in Department of Computer Science and Information Technology from Dr. Babasaheb Ambedkar Marathwada University Aurangabad, Maharashtra (India) in 2008. He is currently Ph.D. student in Department of Computer Science, Singhania University, Pacheri Bari, Dist-Jhunjhunu, Rajasthan (India). Volume 1, Issue 3, November 2012 Page 73 International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: editor@ijaiem.org, editorijaiem@gmail.com Volume 1, Issue 3, November 2012 ISSN 2319 - 4847 Satish L.Padme received the MLIsc (Library and Information Science) Degree from Dr. Babasaheb Ambedkar Marathwada University, Aurangabad, Maharashtra, (India) He Cleared his NET in Library and Information Science .Now he doing his Ph.D. from Dr. Babasaheb Ambedkar Marathwada University, Aurangabad, Maharashtra, (India). He has published 15 research papers in conferences, seminars .Currently he has working as an Assistant Librarian in Dr. Babasaheb Ambedkar Marathwada University, Aurangabad, Maharashtra (India). Mr. Dinesh Chandra Jain received M.Tech (IT) degree from BU and B.E(Computer Science ) degree from RGPV –University Bhopal. He has published many Research Papers in reputed International Journal. He is a member of ISTE and many Engineering Societies. He is pursuing PhD in Computer Science from SRTM University-Nanded (India). Dr.Vrushsen Pawar received MS, Ph.D. (Computer) Degree from Dept. CS & IT, Dr. B. A. M. University, and PDF from ES, University of Cambridge, UK. Also Received MCA (SMU), MBA (VMU) degrees respectively. He has received prestigious fellowship from DST, UGRF (UGC), Sakaal foundation, ES London, ABC (USA) etc. He has published 90 and more research papers in reputed national international Journals & conferences. He has recognized Ph.D Guide from University of Pune, SRTM University & Singhania University (India) He is senior IEEE member and other reputed society member. Currently he has working, Associate Professor and Head of Department of Computational sciences in SRTM University, Nanded, MS, India. Volume 1, Issue 3, November 2012 Page 74