Document 13260402

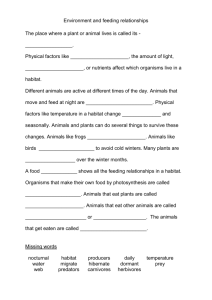

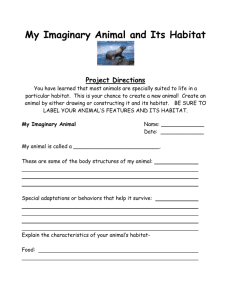
