

Uncovering Mental Representations with

Markov Chain Monte Carlo

Adam N. Sanborn

Indiana University, Bloomington

Thomas L. Griffiths

University of California, Berkeley

Richard M. Shiffrin

Indiana University, Bloomington

Abstract

A key challenge for cognitive psychology is the investigation of mental representations, such as object categories, subjective probabilities, choice utilities,

and memory traces. In many cases, these representations can be expressed

as a non-negative function defined over a set of objects. We present a behavioral method for estimating these functions. Our approach uses people as

components of a Markov chain Monte Carlo (MCMC) algorithm, a sophisticated sampling method originally developed in statistical physics. Experiments 1 and 2 verified the MCMC method by training participants on various category structures and then recovering those structures. Experiment 3

demonstrated that the MCMC method can be used to estimate the structures

of the real-world animal shape categories of giraffes, horses, dogs, and cats.

Experiment 4 combined the MCMC method with multidimensional scaling

to demonstrate how different accounts of the structure of categories, such as

prototype and exemplar models, can be tested, producing samples from the

categories of apples, oranges, and grapes.

Determining how people mentally represent different concepts is one of the central

goals of cognitive psychology. Identifying the content of mental representations allows us to

explore the correspondence between those representations and the world, and to understand

their role in cognition. Psychologists have developed a variety of sophisticated techniques

for estimating certain kinds of mental representations, such as the spatial or featural representations underlying similarity judgments, from behavior (e.g., Shepard, 1962; Shepard &

Arabie, 1979; Torgerson, 1958). However, the standard method used for investigating the

vast majority of mental representations, such as object categories, subjective probabilities,

choice utilities, and memory traces, is asking participants to make judgments on a set of

stimuli that the researcher selects before the experiment begins. In this paper, we describe a

novel experimental procedure that can be used to efficiently identify mental representations

that can be expressed as a non-negative function over a set of objects, a broad class that

includes all of the examples mentioned above.

Author note: The authors would like to thank Jason Gold, Rich Ivry, Michael Jones, Woojae Kim, Krystal Klein, Tania Lombrozo, Chris Lucas, Angela Nelson, Rob Nosofsky and Jing Xu for helpful comments. ANS was supported by a Graduate Research Fellowship from the National Science Foundation and TLG was supported by grant number FA9550-07-1-0351 from the Air Force Office of Scientific Research while completing this research. Experiments 1, 2, and 3 were conducted while ANS and TLG were at Brown University. Preliminary results from Experiments 2 and 3 were presented at the 2007 Neural Information Processing Systems conference.

The basic idea behind our approach is to design a procedure that produces samples

of stimuli that are not chosen before the experiment begins, but are adaptively selected to

concentrate in regions where the function in question has large values. More formally, for

any non-negative function f (x) over a space of objects X , we can define a corresponding

probability distribution p(x) ∝ f (x), being the distribution obtained when f (x) is normalized over X . Our approach provides a way to draw samples from this distribution. For

example, if we are investigating a category, which is associated with a probability distribution or similarity function over objects, this procedure will produce samples of objects

that have high probability or similarity for that category. We can also use the samples

to compute any statistic of interest concerning a particular function. A large number of

samples will provide a good approximation to the means, variances, covariances, and higher

moments of the distribution p(x). This makes it possible to test claims about mental representations made by different cognitive theories. For instance, we can compare the samples

that are produced with the claims about subjective probability distributions that are made

by probabilistic models of cognition (e.g., Anderson, 1990; Ashby & Alfonso-Reese, 1995;

Oaksford & Chater, 1998).

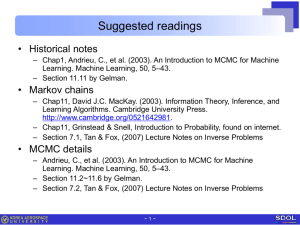

The procedure that we develop for sampling from these mental representations is

based on a method for drawing samples from complex probability distributions known as

Markov chain Monte Carlo (MCMC) (an introduction is provided by Neal, 1993). In this

method, a Markov chain is constructed in such a way that it is guaranteed to converge to a

particular distribution, allowing the states of the Markov chain to be used in the same way

as samples from that distribution. Transitions between states are made using a series of

local decisions as to whether to accept a proposed change to a given object, requiring only

limited knowledge of the underlying distribution. As a consequence, MCMC can be applied in contexts where other Monte Carlo methods fail. The first MCMC algorithms were

used for solving challenging probabilistic problems in statistical physics (Metropolis, Rosenbluth, Rosenbluth, Teller, & Teller, 1953), but they have become commonplace in a variety

of disciplines, including statistics (Gilks, Richardson, & Spiegelhalter, 1996) and biology

(Huelsenbeck, Ronquist, Nielsen, & Bollback, 2001). These methods are also beginning to

be used in modeling in cognitive psychology (Farrell & Ludwig, in press; Griffiths, Steyvers,

& Tenenbaum, 2007; Morey, Rouder, & Speckman, 2008; Navarro, Griffiths, Steyvers, &

Lee, 2006).

In standard uses of MCMC, the distribution p(x) from which we want to generate

samples is known – in the sense that it is possible to write down at least a function proportional to p(x) – but difficult to sample from. Our application to mental representations is

more challenging, as we are attempting to sample from distributions that are unknown to

the researcher, but implicit in the behavior of our participants. To address this problem, we


designed a task that will allow people to act as elements of an MCMC algorithm, letting us

sample from the distribution p(x) associated with the underlying representation f (x). This

task is a simple two-alternative forced choice. Much research has been devoted to relating

the magnitude of psychological responses to choice probabilities, resulting in mathematical

models of these tasks (e.g., Luce, 1963). We point out an equivalence between a model of

human choice behavior and one of the elements of an MCMC algorithm, and then use this

equivalence to develop a method for obtaining information about different kinds of mental

representations. This allows us to harness the power of a sophisticated sampling algorithm

to efficiently explore these representations, while never presenting our participants with

anything more exotic than a choice between two alternatives.

While our approach can be applied to any representation that can be expressed as

a non-negative function over objects, our focus in this paper will be on the case of object

categories. Grouping objects into categories is a basic mental operation that has been

widely studied and modeled. Most successful models of categorization assign a category to

a new object according to the similarity of that object to the category representation. The

similarity of all possible new objects to the category representation can be thought of as a

non-negative function over these objects, f (x). This function, which we are interested in

determining, is a consequence of not only category membership, but also of the decisional

processes that extend partial category membership to objects that are not part of the

category representation.

Our method is certainly not the first developed to investigate categorization. Researchers have invented many methods to investigate the categorization process: some determine the psychological space in which categories lie, others determine how participants

choose between different categories, and still others determine what objects are members of

a category. All of these methods have been applied in simple training experiments, but they

do not always scale well to the large stimulus spaces needed to represent natural stimuli.

Consequently, the majority of experiments that investigate human categorization models

are training studies. Most of these training experiments use simple stimuli with only a

few dimensions, such as binary dimensional shapes (Shepard, Hovland, & Jenkins, 1961),

lengths of a line (Huttenlocher, Hedges, & Vevea, 2000), or Munsell colors (Nosofsky, 1987;

Roberson, Davies, & Davidoff, 2000), though more complicated stimuli have also been used

(e.g., Posner & Keele, 1968). Research on natural perceptual categories has focused on exploring the relationships of category labels (Storms, Boeck, & Ruts, 2000; Rosch & Mervis,

1975; Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976), with a few studies examining

members of a perceptual category using simple parameterized stimuli (e.g., Labov, 1973).

It is difficult to investigate natural perceptual categories with realistic stimuli because

natural stimuli have a great deal of variability. The number of objects that can be distinguished by our senses is enormous. Our MCMC method makes it possible to explore the

structure of natural categories in relatively large stimulus spaces. We illustrate the potential

of this approach by estimating the probability distributions associated with a set of natural

categories, and asking whether these distributions are consistent with different models of

categorization. An enduring question in the categorization literature is whether people represent categories in terms of exemplars or prototypes (J. D. Smith & Minda, 1998; Nosofsky

& Zaki, 2002; Minda & Smith, 2002; Storms et al., 2000; Nosofsky, 1988; Nosofsky & Johansen, 2000; Zaki, Nosofsky, Stanton, & Cohen, 2003; E. E. Smith, Patalano, & Jonides,


1998; Heit & Barsalou, 1996). In an exemplar-based representation, a category is simply

the set of its members, and all of these members are used when evaluating whether a new

object belongs to the category (e.g., Medin & Schaffer, 1978; Nosofsky, 1986). In contrast, a

prototype model assumes that people have an abstract representation of a category in terms

of a typical or central member, and use only this abstract representation when evaluating

new objects (e.g., Posner & Keele, 1968; Reed, 1972). These representations are associated

with different probability distributions over objects, with exemplar models corresponding to

a nonparametric density estimation scheme that admits distributions of arbitrary structure

and prototype models corresponding to a parametric density estimation scheme that favors

unimodal distributions (Ashby & Alfonso-Reese, 1995). The distributions for different natural categories produced by MCMC could thus be used to discriminate between these two

different accounts of categorization.

The plan of the paper is as follows. The next section describes MCMC in general

and the Metropolis method and Hastings acceptance rules in particular. We then describe

the experimental task we use to connect human judgments to MCMC, and outline how

this task can be used to study the mental representation of categories. Experiment 1 tests

three variants of this task using simple one-dimensional stimuli and trained distributions,

while Experiment 2 uses one of these variants to show that our method can be used to

recover different category structures from human judgments. Experiment 3 applies the

method to four natural categories, providing estimates of the distributions over animal

shapes that people associate with giraffes, horses, cats, and dogs. Experiment 4 combines

the MCMC method with multidimensional scaling in order to explore the structure of

natural categories in a perceptual space. This experiment illustrates how the suitability of a

simple prototype model for describing natural categories could be tested by combining these

two methodologies. Finally, in the General Discussion we discuss how MCMC relates to

other experimental methods, and describe some potential problems that can be encountered

in using MCMC, together with possible solutions.

Markov chain Monte Carlo

Generating samples from a probability distribution is a common problem that arises

when working with probabilistic models in a wide range of disciplines. This problem is

rendered more difficult by the fact that there are relatively few probability distributions for

which direct methods for generating samples exist. For this reason, a variety of sophisticated

Monte Carlo methods have been developed for generating samples from arbitrary probability distributions. Markov chain Monte Carlo (MCMC) is one of these methods, being

particularly useful for correctly generating samples from distributions where the probability of a particular outcome is multiplied by an unknown constant – a common situation in

statistical physics (Newman & Barkema, 1999) and Bayesian statistics (Gilks et al., 1996).

A Markov chain is a sequence of random variables where the value taken by each

variable (known as the state of the Markov chain) depends only on the value taken by

the previous variable (Norris, 1997). We can generate a sequence of states from a Markov

chain by iteratively drawing each variable from its distribution conditioned on the previous

variable. This distribution expresses the transition probabilities associated with the Markov

chain, which are the probabilities of moving from one state to the next. An ergodic Markov

chain, having a non-zero probability of reaching any state from any other state in a finite and


aperiodic number of iterations, eventually converges to a stationary distribution over states.

That is, after many iterations the probability that a chain is in a particular state converges

to a fixed value, the stationary probability, regardless of the initial value of the chain. The

stationary distribution is determined by the transition probabilities of the Markov chain.
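
As an illustration of this convergence, the following minimal sketch iterates a small Markov chain from two different initial states and prints the resulting distributions over states. The three-state transition matrix and the function names are hypothetical, chosen only for the example.

```python
# Transition probabilities for a hypothetical three-state ergodic Markov chain.
# Row i gives the distribution over the next state when the current state is i.
TRANSITIONS = [
    [0.5, 0.4, 0.1],
    [0.2, 0.6, 0.2],
    [0.1, 0.3, 0.6],
]


def step(dist, transitions):
    """Advance a distribution over states by one iteration of the chain."""
    n = len(transitions)
    return [sum(dist[i] * transitions[i][j] for i in range(n)) for j in range(n)]


def distribution_after(initial, transitions, iterations):
    """Distribution over states after a given number of iterations."""
    dist = list(initial)
    for _ in range(iterations):
        dist = step(dist, transitions)
    return dist


# Chains started in different states end up with the same distribution.
from_state_0 = distribution_after([1.0, 0.0, 0.0], TRANSITIONS, 200)
from_state_2 = distribution_after([0.0, 0.0, 1.0], TRANSITIONS, 200)
print(from_state_0)
print(from_state_2)
```

Applying one further step to either result leaves it unchanged up to rounding, which is what it means for the distribution to be stationary.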

Markov chain Monte Carlo algorithms are schemes for constructing Markov chains

that have a particular distribution (the “target distribution”) as their stationary distribution. If a sequence of states is generated from such a Markov chain, the states towards the

end of the sequence will arise with probabilities that correspond to the target distribution.

These states of the Markov chain can be used in the same way as samples from the target

distribution, although the correlations among the states that result from the dynamics of

the Markov chain will reduce the effective sample size. The original scheme for constructing

these types of Markov chains is the Metropolis method (Metropolis et al., 1953), which was

subsequently generalized by Hastings (1970).

The Metropolis method

The Metropolis method specifies a Markov chain by splitting the transition probabilities into two parts: a proposal distribution and an acceptance function. A proposed state

is drawn from the proposal distribution (which can depend on the current state) and the

acceptance function determines the probability with which that proposal is accepted as the

next state. If the proposal is not accepted, then the current state is taken as the next state.

This procedure defines a Markov chain, with the probability of the next state depending

only on the current state, and it can be iterated until it converges to the stationary distribution. Through careful choice of the proposal distribution and acceptance function, we

can ensure that this stationary distribution is the target distribution.

A sufficient, but not necessary, condition for specifying a proposal distribution and

acceptance function that produce the appropriate stationary distribution is detailed balance,

with

$$p(x)\, q(x^* \mid x)\, a(x^*; x) = p(x^*)\, q(x \mid x^*)\, a(x; x^*), \tag{1}$$

where p(x) is the stationary distribution of the Markov chain (our target distribution),

q(x∗ |x) is the probability of proposing a new state x∗ given the current state x, and a(x∗ ; x)

is the probability of accepting that proposal. We can motivate detailed balance by first

imagining that we are running a Markov chain in which we draw a new proposed state from

a uniform distribution over all possible proposals and then accept the proposal with some

probability. The transition probability of the Markov chain is equal to the probability of

accepting this proposal. We would like for the states of the Markov chain to act as samples

from a target distribution. A different way to think of this constraint is to require the ratio

of the number of visits of the chain to state x to the number of visits of the chain to state x∗

to be equal to the ratio of the probabilities of these two states under the target distribution.

A sufficient, but not necessary, condition for satisfying this requirement is to enforce this

constraint for every transition probability. Formally we can express this equality between

ratios as

$$\frac{p(x)}{p(x^*)} = \frac{a(x; x^*)}{a(x^*; x)}. \tag{2}$$

Now, to generalize, instead of assuming that each proposal is drawn uniformly from the space

of parameters, we assume that each proposal is drawn from a distribution that depends on


the current state, q(x∗ |x). In order to correct for a non-uniform proposal we need to multiply

each side of Equation 2 by the proposal distribution. The result is equal to the detailed

balance equation.
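
To make explicit why detailed balance is sufficient, the short derivation below shows that a distribution satisfying Equation 1 is left unchanged by one step of the chain. It is a sketch for a discrete state space; the symbol $T(x^* \mid x)$ is our shorthand (not used elsewhere in the text) for the full transition probability, equal to $q(x^* \mid x)\, a(x^*; x)$ when $x^* \neq x$, with the remaining probability assigned to staying at $x$.

```latex
\begin{align*}
\sum_{x} p(x)\, T(x^* \mid x)
  &= \sum_{x} p(x^*)\, T(x \mid x^*)
     && \text{(detailed balance, applied term by term)} \\
  &= p(x^*) \sum_{x} T(x \mid x^*) \\
  &= p(x^*)
     && \text{(transition probabilities out of } x^* \text{ sum to one)}
\end{align*}
```

The term with $x = x^*$ satisfies detailed balance trivially, so the first step holds for every term in the sum.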

The Metropolis method specifies an acceptance function which satisfies detailed balance for any symmetric proposal distribution, with q(x∗ |x) = q(x|x∗ ) for all x and x∗ . The

Metropolis acceptance function is

$$a(x^*; x) = \min\left( \frac{p(x^*)}{p(x)},\, 1 \right), \tag{3}$$

meaning that proposals with higher probability than the current state are always accepted.

To apply this method in practice, a researcher must be able to determine the relative

probabilities of any two states. However, it is worth noting that the researcher need not

know p(x) exactly. Since the algorithm only uses the ratio p(x∗ )/p(x), it is sufficient to

know the distribution up to a multiplicative constant. That is, the Metropolis method can

still be used even if we only know a function f (x) ∝ p(x).
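
The following is a minimal sketch of the Metropolis method as described above, assuming a one-dimensional state and a Gaussian proposal centered on the current state (which is symmetric). The target function, its constant 7.0, the proposal width, and the burn-in length are all hypothetical choices made for illustration.

```python
import math
import random


def metropolis(f, x0, proposal_sd, n_steps, rng):
    """Run a Metropolis chain targeting p(x) proportional to f(x).

    Proposals are Gaussian perturbations of the current state, so the
    proposal distribution is symmetric: q(x* | x) = q(x | x*).
    """
    x = x0
    states = []
    for _ in range(n_steps):
        x_star = rng.gauss(x, proposal_sd)
        # Equation 3: accept with probability min(f(x*) / f(x), 1).
        accept_prob = min(f(x_star) / f(x), 1.0)
        if rng.random() < accept_prob:
            x = x_star
        states.append(x)  # a rejected proposal repeats the current state
    return states


def unnormalized_gaussian(x, mean=3.0, sd=1.0):
    """Hypothetical target, specified only up to a multiplicative constant."""
    return 7.0 * math.exp(-0.5 * ((x - mean) / sd) ** 2)


rng = random.Random(0)
chain = metropolis(unnormalized_gaussian, x0=-5.0, proposal_sd=1.0,
                   n_steps=20000, rng=rng)
samples = chain[2000:]  # discard early states before using the chain
sample_mean = sum(samples) / len(samples)
sample_var = sum((s - sample_mean) ** 2 for s in samples) / len(samples)
print(sample_mean, sample_var)
```

Because only the ratio f(x∗)/f(x) enters the acceptance step, replacing the constant 7.0 with any other positive value would leave the chain unchanged.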

Hastings acceptance functions

Hastings (1970) gave a more general form for acceptance functions that satisfy detailed

balance, and extended the Metropolis method to admit asymmetric proposal distributions.

The key observation is that Equation 1 is satisfied by any acceptance rule of the form

$$a(x^*; x) = \frac{s(x^*, x)}{1 + \dfrac{p(x)\, q(x^* \mid x)}{p(x^*)\, q(x \mid x^*)}}, \tag{4}$$

where s(x∗ , x) is symmetric in x and x∗ and 0 ≤ a(x∗ ; x) ≤ 1 for all x and x∗ . Taking

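$$s(x^*, x) =
\begin{cases}
1 + \dfrac{p(x)\, q(x^* \mid x)}{p(x^*)\, q(x \mid x^*)}, & \text{if } \dfrac{p(x^*)\, q(x \mid x^*)}{p(x)\, q(x^* \mid x)} \geq 1 \\[2ex]
1 + \dfrac{p(x^*)\, q(x \mid x^*)}{p(x)\, q(x^* \mid x)}, & \text{if } \dfrac{p(x^*)\, q(x \mid x^*)}{p(x)\, q(x^* \mid x)} \leq 1
\end{cases} \tag{5}$$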

yields the Metropolis acceptance function for symmetric proposal distributions, and generalizes it to

$$a(x^*; x) = \min\left( \frac{p(x^*)\, q(x \mid x^*)}{p(x)\, q(x^* \mid x)},\, 1 \right) \tag{6}$$

for asymmetric proposal distributions. With a symmetric proposal distribution, taking

s(x∗ , x) = 1 gives the Barker acceptance function

$$a(x^*; x) = \frac{p(x^*)}{p(x^*) + p(x)}, \tag{7}$$

where the acceptance probability is the probability of the proposed state divided by the summed probabilities of the proposed and the

current state under the target distribution (Barker, 1965). While the Metropolis acceptance function has been shown to result in lower asymptotic variance (Peskun, 1973) and

faster convergence to the stationary distribution (Billera & Diaconis, 2001) than the Barker

acceptance function, it has also been argued that neither rule is clearly dominant across all

situations (Neal, 1993).
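
As a numerical check on the two acceptance functions, the sketch below builds the transition probabilities they induce on a small discrete state space and measures how far each is from satisfying detailed balance (Equation 1). The four-state target, the uniform proposal, and all function names are hypothetical and serve only as an example.

```python
def metropolis_accept(p_star, p_cur):
    """Equation 3: min(p(x*) / p(x), 1)."""
    return min(p_star / p_cur, 1.0)


def barker_accept(p_star, p_cur):
    """Equation 7: p(x*) / (p(x*) + p(x))."""
    return p_star / (p_star + p_cur)


def transition_matrix(weights, accept):
    """Transition probabilities under a uniform (hence symmetric) proposal.

    weights holds an unnormalized target over a small discrete state space.
    """
    n = len(weights)
    q = 1.0 / n  # probability of proposing any particular state
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                matrix[i][j] = q * accept(weights[j], weights[i])
        matrix[i][i] = 1.0 - sum(matrix[i])  # rejected proposals stay put
    return matrix


def max_detailed_balance_gap(weights, matrix):
    """Largest violation of p(x) T(x -> x*) = p(x*) T(x* -> x)."""
    total = sum(weights)
    p = [w / total for w in weights]
    n = len(p)
    return max(abs(p[i] * matrix[i][j] - p[j] * matrix[j][i])
               for i in range(n) for j in range(n))


WEIGHTS = [1.0, 4.0, 2.0, 0.5]  # hypothetical unnormalized target
for name, rule in [("Metropolis", metropolis_accept), ("Barker", barker_accept)]:
    gap = max_detailed_balance_gap(WEIGHTS, transition_matrix(WEIGHTS, rule))
    print(name, gap)
```

Both gaps are zero up to rounding error. For any pair of states the Metropolis rule accepts at least as often as the Barker rule, since min(r, 1) ≥ r/(1 + r) for every ratio r > 0.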


Summary

Markov chain Monte Carlo provides a simple way to generate samples from probability

distributions for which other sampling schemes are infeasible. The basic procedure is to start

a Markov chain at some initial state, chosen arbitrarily, and then apply one of the methods

for generating transitions outlined above, proposing a change to the state of the Markov

chain and then deciding whether or not to accept this change based on the probabilities

of the different states under the target distribution. After allowing enough iterations for

the Markov chain to converge to its stationary distribution (known as the “burn-in”), the

states of the Markov chain can be used to answer questions about the target distribution

in the same way as a set of samples from that distribution.

An acceptance function from human behavior

The way that transitions between states occur in the Metropolis-Hastings algorithm

already has the feel of a psychological task: two objects are presented, one being the

current state and one the proposal, and a choice is made between them. We could thus

imagine running an MCMC algorithm in which a computer generates a proposed variation

on the current state and people choose between the current state and the proposal. In this

section, we consider how to lead people to choose between two objects in a way that would

correspond to a valid acceptance function.

From a task to an acceptance function

Assume that we want to gather information about a mental representation characterized by a non-negative function over objects, f (x). In order for people’s choices to act as an

element in an MCMC algorithm that produces samples from a distribution proportional to

f (x), we need to design a task that leads them to choose between two objects x∗ and x in

a way that corresponds to one of the valid acceptance functions introduced in the previous

section. In particular, it is sufficient to construct a task such that the probability with

which people choose x∗ is

$$a(x^*; x) = \frac{f(x^*)}{f(x^*) + f(x)}, \tag{8}$$

this being the Barker acceptance function (Equation 7) for the distribution p(x) ∝ f (x).

Equation 8 has a long history as a model of human choice probabilities, where it is

known as the Luce choice rule or the ratio rule (Luce, 1963). This rule has been shown

to provide a good fit to human data when participants choose between two stimuli based

on a particular property (Bradley, 1954; Clarke, 1957; Hopkins, 1954), though the rule

does not seem to scale with additional alternatives (Morgan, 1974; Rouder, 2004; Wills,

Reimers, Stewart, Suret, & McLaren, 2000). When f (x) is a utility function or probability

distribution, the behavior described in Equation 8 is known as probability matching and

is often found empirically (Vulkan, 2000). The ratio rule has also been used to convert

psychological response magnitudes into response probabilities in many models of cognition

such as the Similarity Choice Model (Luce, 1963; Shepard, 1957), the Generalized Context

Model (Nosofsky, 1986), the Probabilistic Prototype model (Ashby, 1992; Nosofsky, 1987),

the Fuzzy Logical Model of Perception (Oden & Massaro, 1978), and the TRACE model

(McClelland & Elman, 1986).


There are additional simple theoretical justifications for using Equation 8 to model

people’s response probabilities. One justification is to use the logistic function to model

a soft threshold on the log odds of two alternatives (cf. Anderson, 1990; Anderson &

Milson, 1989). The log odds in favor of x∗ over x under the distribution p(x) will be

Λ = log[p(x∗)/p(x)]. Assuming that people receive greater reward for a correct response than

an incorrect response, the optimal solution to the problem of choosing between the two

objects is to deterministically select x∗ whenever Λ > 0. However, we could imagine that

people place their thresholds at slightly different locations or otherwise make choices nondeterministically, such that their choices can be modeled as a logistic function on Λ with

the probability of choosing x∗ being 1/(1 + exp{−Λ}). An elementary calculation shows

that this yields the acceptance rule given in Equation 8.
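
For completeness, the calculation can be written out as follows, using only the definition of Λ given above and the fact that p(x) ∝ f(x), so that the normalizing constant cancels in the final step.

```latex
\begin{align*}
\frac{1}{1 + \exp\{-\Lambda\}}
  &= \frac{1}{1 + \exp\{-\log[p(x^*)/p(x)]\}} \\
  &= \frac{1}{1 + p(x)/p(x^*)} \\
  &= \frac{p(x^*)}{p(x^*) + p(x)}
   = \frac{f(x^*)}{f(x^*) + f(x)}
\end{align*}
```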

Another theoretical justification is to assume that log probabilities are noisy and

the decision is deterministic. The decision is made by sampling from each of the noisy log

probabilities and choosing the higher sample. If the additive noise follows a Type I Extreme

Value (i.e., Gumbel) distribution, then it is known that the resulting choice probabilities

are equivalent to the ratio rule (McFadden, 1974; Yellott, 1977).
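
This equivalence can be checked by simulation. The sketch below is a Monte Carlo illustration rather than a proof: it adds independent standard Gumbel noise to two log values, picks the larger, and compares the choice rate with the ratio rule. The values f(x∗) = 3 and f(x) = 1, the number of trials, and the function names are hypothetical.

```python
import math
import random


def gumbel_noise(rng):
    """Draw from a standard Gumbel (Type I Extreme Value) distribution."""
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    return -math.log(-math.log(u))


def noisy_choice_rate(f_star, f_cur, n_trials, rng):
    """Fraction of trials on which x* has the larger noisy log value."""
    wins = 0
    for _ in range(n_trials):
        noisy_star = math.log(f_star) + gumbel_noise(rng)
        noisy_cur = math.log(f_cur) + gumbel_noise(rng)
        if noisy_star > noisy_cur:
            wins += 1
    return wins / n_trials


f_star, f_cur = 3.0, 1.0  # hypothetical values of f(x*) and f(x)
rate = noisy_choice_rate(f_star, f_cur, 100000, random.Random(0))
ratio_rule = f_star / (f_star + f_cur)
print(rate, ratio_rule)
```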

The correspondence between the Barker acceptance function and the ratio rule suggests that we should be able to use people as elements in MCMC algorithms in any context

where we can lead them to choose in a way that is well-modeled by the ratio rule. We can

then explore the representation characterized by f (x) through a series of choices between

two alternatives, where one of the alternatives that is presented is that selected on the

previous trial and the other is a variation drawn from an arbitrary proposal distribution.

The result will be an MCMC algorithm that produces samples from p(x) ∝ f (x), providing

an efficient way to explore those parts of the space where f (x) takes large values.
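
The procedure just described can be sketched in code by replacing the participant with a simulated chooser. This is an illustration under stated assumptions, not the experimental software: the simulated participant follows the ratio rule of Equation 8 exactly, stimuli vary along a single dimension, proposals are Gaussian, and the category function, parameter values, and function names are all hypothetical.

```python
import math
import random


def simulated_choice(f, x_star, x, rng):
    """Stand-in for one forced-choice trial: True if x* is chosen.

    Assumes the simulated participant follows the ratio rule (Equation 8).
    """
    return rng.random() < f(x_star) / (f(x_star) + f(x))


def run_chain(f, x0, proposal_sd, n_trials, rng):
    """Chain of choices in which each trial pits a proposal against the
    stimulus chosen on the previous trial."""
    x = x0
    states = []
    for _ in range(n_trials):
        x_star = rng.gauss(x, proposal_sd)  # computer proposes a variation
        if simulated_choice(f, x_star, x, rng):
            x = x_star  # the chosen stimulus is carried to the next trial
        states.append(x)
    return states


def category_similarity(x, mean=10.0, sd=2.0):
    """Hypothetical f(x): similarity of stimulus x to a category."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2)


rng = random.Random(0)
chain = run_chain(category_similarity, x0=2.0, proposal_sd=2.0,
                  n_trials=20000, rng=rng)
samples = chain[2000:]  # drop early trials before summarizing
mean_estimate = sum(samples) / len(samples)
var_estimate = sum((s - mean_estimate) ** 2 for s in samples) / len(samples)
print(mean_estimate, var_estimate)
```

The chain never evaluates f(x) outside of the pairwise choice, mirroring the fact that the researcher only observes which of two stimuli was selected.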

Allowing a wider range of behavior

Probability matching, as expressed in the ratio rule, provides a good description of

human choice behavior in many situations, but motivated participants can produce behavior

that is more deterministic than probability matching (Vulkan, 2000; Ashby & Gott, 1988).

Several models of choice, particularly in the context of categorization, have been extended

in order to account for this behavior (Ashby & Maddox, 1993) by using an exponentiated

version of Equation 8 to map category probabilities onto response probabilities,

$$a(x^*; x) = \frac{f(x^*)^{\gamma}}{f(x^*)^{\gamma} + f(x)^{\gamma}}, \tag{9}$$

where each term on the right side of Equation 8 is raised to a constant power γ. The parameter

γ makes it possible to interpolate between probability matching and purely deterministic

responding: when γ = 1 participants probability match, and as γ → ∞ they choose

deterministically.

Equation 9 can be derived from an extension to the “soft threshold” argument given

above. If responses follow a logistic function on the log posterior odds with gain γ, the

probability of choosing x∗ is 1/(1 + exp{−γΛ}) where Λ is defined above. The case discussed

above corresponds to γ = 1, and as γ increases, the threshold moves closer to a step function

at Λ = 0. Carrying out the same calculation as for the case where γ = 1 in the general case

yields the acceptance rule given in Equation 9.


The acceptance function in Equation 9 contains an unknown parameter, so we will

not gain as much information about f (x) as when we make the stronger assumption that

participants probability match (i.e., fixing γ = 1). Substituting Equation 9 into Equation 1

and assuming a symmetric proposal distribution we can show that the exponentiated choice

rule is an acceptance function for a Markov chain with stationary distribution

$$p(x) \propto f(x)^{\gamma}. \tag{10}$$
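
The substitution can be written out as follows, as a sketch assuming a symmetric proposal, so that the q terms cancel from Equation 1, and f(x) > 0 for the states involved.

```latex
\begin{align*}
p(x)\, \frac{f(x^*)^{\gamma}}{f(x^*)^{\gamma} + f(x)^{\gamma}}
  &= p(x^*)\, \frac{f(x)^{\gamma}}{f(x)^{\gamma} + f(x^*)^{\gamma}} \\
p(x)\, f(x^*)^{\gamma} &= p(x^*)\, f(x)^{\gamma} \\
\frac{p(x)}{p(x^*)} &= \frac{f(x)^{\gamma}}{f(x^*)^{\gamma}}
\end{align*}
```

The last line holds for every pair of states exactly when p(x) is proportional to f(x) raised to the power γ.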

Thus, using the weaker assumptions of Equation 9 as a model of human behavior, we can

estimate f (x) up to a constant exponent. The relative probabilities of different objects will

remain ordered, but cannot be directly evaluated. Consequently, the distribution defined

in Equation 10 has the same peaks as the function produced by directly normalizing f (x),

and the ordering of the variances for the dimensions is unchanged, but the exact values of

the variances will depend on the parameter γ.
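
These consequences can be illustrated numerically. The sketch below computes the stationary distribution of a chain that uses the exponentiated choice rule on a small grid of stimulus values, for two values of γ, and reports the location of the peak and the variance. The grid, the Gaussian-shaped f(x), the uniform proposal, and the function names are hypothetical choices for the example.

```python
import math


def exponentiated_accept(f_star, f_cur, gamma):
    """Equation 9: f(x*)^gamma / (f(x*)^gamma + f(x)^gamma)."""
    return f_star ** gamma / (f_star ** gamma + f_cur ** gamma)


def stationary_distribution(f_values, gamma, iterations=2000):
    """Stationary distribution of a chain with uniform proposals on a grid,
    found by repeatedly applying the transition probabilities."""
    n = len(f_values)
    q = 1.0 / n
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                matrix[i][j] = q * exponentiated_accept(
                    f_values[j], f_values[i], gamma)
        matrix[i][i] = 1.0 - sum(matrix[i])  # rejected proposals stay put
    dist = [1.0 / n] * n
    for _ in range(iterations):
        dist = [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]
    return dist


def variance(grid, dist):
    """Variance of a discrete distribution over grid values."""
    mean = sum(x * p for x, p in zip(grid, dist))
    return sum((x - mean) ** 2 * p for x, p in zip(grid, dist))


GRID = [0.5 * k for k in range(21)]  # stimulus values from 0 to 10
F = [math.exp(-0.5 * ((x - 6.0) / 1.5) ** 2) for x in GRID]  # hypothetical f(x)

for gamma in (1.0, 3.0):
    dist = stationary_distribution(F, gamma)
    peak = GRID[dist.index(max(dist))]
    print(gamma, peak, variance(GRID, dist))
```

In this example the peak stays at the same stimulus value for both settings of γ, while the variance shrinks as γ grows, so the shape of f(x) is recovered only up to the unknown exponent.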

Categories

The results in the previous section provide a general method for exploring mental

representations that can be expressed in terms of a non-negative function over a set of

objects. In this section, we consider how this approach can be applied to the case of

categories, providing a concrete example of a context in which this method can be used and

an illustration of how tasks can be constructed to meet the criteria for defining an MCMC

algorithm. We begin with a brief review of the methods that have been used to study

mental representations of category structure. In the following discussion it is important

to keep in mind that performance in any task used to study categories involves both the

mental representations with which the category members, or category abstractions, are held

in memory, and the processes that operate on those representations to produce the observed

data. In this article our aim is to map the category structure that results from both the

representations and processes acting together. Much research in the field has of course been

aimed at specifying the representations, the processes, or both, as described briefly in the

review that follows.

Methods for studying categories

Cognitive psychologists have extensively studied the ways in which people group objects into categories, investigating issues such as whether people represent categories in

terms of exemplars or prototypes (J. D. Smith & Minda, 1998; Nosofsky & Zaki, 2002;

Minda & Smith, 2002; Storms et al., 2000; Nosofsky, 1988; Nosofsky & Johansen, 2000;

Zaki et al., 2003; E. E. Smith et al., 1998; Heit & Barsalou, 1996). Many different types

of methodologies have been developed in an effort to distinguish the effects of prototypes

and exemplars, and to test other theories of categorization. Two of the most commonly

used methods are multidimensional scaling (MDS; Shepard, 1962; Torgerson, 1958) and

additive clustering (Shepard & Arabie, 1979). These methods each take a set of similarity

judgments and find the representation that best respects these judgments. MDS uses similarity judgments to constrain the placement of objects within a similarity space – objects

judged most similar are placed close together and those judged dissimilar are placed far apart.

Additive clustering works in a similar manner, except that the resulting representation is


based on discrete features that are either shared or not shared between objects. These two

methodologies are used in order to establish the psychological space for the stimuli in an

experiment, assisting in the evaluation of categorization models. MDS has been applied

to many natural categories, including Morse code (Rothkopf, 1957) and colors (Nosofsky,

1987). Traditional MDS does not scale well to a large number of objects because all pairwise

judgments must be collected, but alternative formulations have been developed to deal with

large numbers of stimuli (Hadsell, Chopra, & LeCun, 2006; de Silva & Tenenbaum, 2003;

Goldstone, 1994).

Discriminative tests are a second avenue for comparing theories about categorization.

In these tests, participants are shown a single object and asked to pick the category to

which it belongs. The choices that are made reflect the structures of all the categories under

consideration and can be analyzed to determine the decision boundaries between categories.

This is perhaps the most commonly used method for distinguishing between many different

types of models (e.g., Nosofsky, Gluck, Palmeri, McKinley, & Glauthier, 1994; Ashby &

Gott, 1988), and it has been combined with MDS to provide a more diagnostic test (e.g.,

Nosofsky, 1986, 1987). In addition, discriminative tests can be applied to naturalistic stimuli

with large numbers of parameters. In psychophysics, the response classification technique

is a discriminative test that has been used to infer the decision boundary that guides

perceptual decisions between two stimuli (Ahumada & Lovell, 1971). This methodology

has been applied to visualize the decision boundary between images with as many as 10,000

pixels (Gold, Murray, Bennett, & Sekuler, 2000).

A third method for exploring category structure is to evaluate the strength of category

membership for a set of objects. In categorization studies, this goal is generally accomplished by asking participants for typicality ratings of stimuli (e.g., Storms et al., 2000;

Bourne, 1982). This procedure was originally used to justify the existence of a category

prototype (Rosch & Mervis, 1975; Rosch, Simpson, & Miller, 1976), but typicality ratings

have also been used to support exemplar models (Nosofsky, 1988). Typicality ratings are

difficult to collect for a large set of stimuli, because a judgment must be made for every

object. In situations in which there are a large number of objects and few belong to a

category, this method will be very inefficient. The key claim of this paper is the idea that

the MCMC method can provide the same insight into categorization as given by typicality

judgments, but in a form that scales well to the large numbers of stimuli needed to explore

natural categories.

Representing categories

Computational models of categorization quantify different hypotheses about how categories are represented. These models were originally developed as accounts of the cognitive

processes involved in categorization, and specify the structure of categories in terms of a

similarity function over objects. Subsequent work identified these models as being equivalent to schemes for estimating a probability distribution (a problem known as density

estimation), and showed that the underlying representations could be expressed as probability distributions over objects (Ashby & Alfonso-Reese, 1995). We will outline the basic

ideas behind two classic models – exemplar and prototype models – from both of these

perspectives. While we will use the probabilistic perspective throughout the rest of the

paper, the equivalence between these two perspectives means that our analyses carry over


equally well to the representation of categories as a similarity function over objects.

Exemplar and prototype models share the basic assumption that people assign stimuli

to categories based on similarity. Given a set of N − 1 stimuli x1 , . . . , xN −1 with category

labels c1 , . . . , cN −1 , these models express the probability that stimulus N is assigned to

category c as

$$p(c_N = c \mid x_N) = \frac{\eta_{N,c}\,\beta_c}{\sum_{c'} \eta_{N,c'}\,\beta_{c'}} \tag{11}$$

where ηN,c is the similarity of the stimulus xN to category c and βc is the response bias

for category c. The key difference between the models is in how ηN,c , the similarity of a

stimulus to a category, is computed.

In an exemplar model (e.g., Medin & Schaffer, 1978; Nosofsky, 1986), a category is

represented by all of the stored instances of that category. The similarity of stimulus N to

category c is calculated by summing the similarity of the stimulus to all stored instances of

the category. That is,

$$\eta_{N,c} = \sum_{i \mid c_i = c} \eta_{N,i} \tag{12}$$

where ηN,i is a symmetric measure of the similarity between the two stimuli xN and xi . The

similarity measure is typically defined as a decaying exponential function of the distance

between the two stimuli, following Shepard (1987). In a prototype model (e.g., Reed,

1972), a category c is represented by a single prototypical instance. In this formulation, the

similarity of a stimulus N to category c is defined to be

$$\eta_{N,c} = \eta_{N,p_c} \tag{13}$$

where pc is the prototypical instance of the category and ηN,pc is a measure of the similarity

between stimulus N and the prototype pc , as used in the exemplar model. One common

way of defining the prototype is as the centroid of all instances of the category in some

psychological space, i.e.,

$$p_c = \frac{1}{N_c} \sum_{i \mid c_i = c} x_i \tag{14}$$

where Nc is the number of instances of the category (i.e. the number of stimuli for which

ci = c). There are obviously a variety of possibilities within these extremes, with similarity

being computed to a subset of the exemplars within a category, and these have been explored

in more recent models of categorization (e.g., Love, Medin, & Gureckis, 2004; Vanpaemel,

Storms, & Ons, 2005).
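The contrast between Equations 12 and 13 can be made concrete with a short sketch. It assumes a Euclidean distance and an exponentially decaying similarity with a hypothetical `scale` parameter; the function names and the toy one-dimensional categories are illustrative only and are not taken from any of the models cited above.

```python
import math

def similarity(x, y, scale=1.0):
    """Exponentially decaying similarity between two points (assumed Euclidean distance)."""
    distance = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))
    return math.exp(-distance / scale)

def exemplar_similarity(x, exemplars, scale=1.0):
    """Equation 12: sum the similarity of x to every stored instance."""
    return sum(similarity(x, e, scale) for e in exemplars)

def prototype_similarity(x, exemplars, scale=1.0):
    """Equations 13 and 14: similarity of x to the centroid of the instances."""
    n = len(exemplars)
    centroid = [sum(e[d] for e in exemplars) / n for d in range(len(x))]
    return similarity(x, centroid, scale)

def choice_probabilities(x, categories, eta, bias=None):
    """Equation 11: ratio rule over categories.

    categories maps a label to its list of instances; bias maps a label to beta_c
    (equal biases are assumed when none are given).
    """
    bias = bias or {c: 1.0 for c in categories}
    weighted = {c: eta(x, inst) * bias[c] for c, inst in categories.items()}
    total = sum(weighted.values())
    return {c: w / total for c, w in weighted.items()}

# Toy one-dimensional categories (hypothetical values).
categories = {"A": [[0.0], [1.0]], "B": [[4.0], [5.0]]}
p_exemplar = choice_probabilities([1.5], categories, exemplar_similarity)
p_prototype = choice_probabilities([1.5], categories, prototype_similarity)
```

Both versions share the choice rule and differ only in the function passed as `eta`, which mirrors the point that the models differ in how similarity to a category is computed.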

Taking a probabilistic perspective on the problem of categorization, a learner should

believe stimulus N belongs to category c with probability

$$p(c_N = c \mid x_N) = \frac{p(x_N \mid c_N = c)\,p(c_N = c)}{\sum_{c'} p(x_N \mid c_N = c')\,p(c_N = c')} \tag{15}$$

with the posterior probability of category c being proportional to the product of the probability of an object with features xN being produced from that category and the prior

probability of choosing that category. The distribution p(x|c), which we use as shorthand

for p(xN |cN = c), reflects the learner’s knowledge of the structure of the category, taking


into account the features and labels of the previous N −1 objects. Ashby and Alfonso-Reese

(1995) observed a connection between this Bayesian solution to the problem of categorization and the way that choice probabilities are computed in exemplar and prototype models

(i.e. Equation 11). Specifically, ηN,c can be identified with p(xN |cN = c), while βc corresponds to the prior probability of category c, p(cN = c). The difference between exemplar

and prototype models thus comes down to different ways of estimating p(x|c).

The definition of ηN,c used in an exemplar model (Equation 12) corresponds to estimating p(x|c) as the sum of a set of functions (known as “kernels”) centered on the xi

already labeled as belonging to category c, with

$$p(x_N \mid c_N = c) \propto \sum_{i \mid c_i = c} k(x_N, x_i) \tag{16}$$

where k(x, xi ) is a probability distribution centered on xi .1 This is a method that is widely

used for approximating distributions in statistics, being a simple form of nonparametric

density estimation (meaning that it can be used to identify distributions without assuming that they come from an underlying parametric family) called kernel density estimation

(e.g., Silverman, 1986). The definition of ηN,c used in a prototype model (Equation 13)

corresponds to estimating p(x|c) by assuming that the distribution associated with each

category comes from an underlying parametric family, and then finding the parameters

that best characterize the instances labeled as belonging to that category. The prototype

corresponds to these parameters, with the centroid being an appropriate estimate for distributions whose parameters characterize their mean. Again, this is a common method for

estimating a probability distribution, known as parametric density estimation, in which the

distribution is assumed to be of a known form but with unknown parameters (e.g., Rice,

1995). As with the similarity-based perspective, there are also probabilistic models that

interpolate between exemplars and prototypes (e.g., Rosseel, 2002).
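The two density-estimation views can be compared in one dimension with a small sketch. It assumes Gaussian kernels with a hand-picked `bandwidth` for the exemplar-style estimate and a single fitted Gaussian for the prototype-style estimate; the instance values are hypothetical.

```python
import math

def gaussian_pdf(x, mean, sd):
    """Density of a Gaussian with the given mean and standard deviation."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def kernel_density(x, instances, bandwidth):
    """Exemplar-style estimate: average of Gaussian kernels centred on each instance."""
    return sum(gaussian_pdf(x, xi, bandwidth) for xi in instances) / len(instances)

def parametric_density(x, instances):
    """Prototype-style estimate: fit one Gaussian (mean and standard deviation)."""
    n = len(instances)
    mean = sum(instances) / n
    sd = math.sqrt(sum((xi - mean) ** 2 for xi in instances) / (n - 1))
    return gaussian_pdf(x, mean, sd)

# Hypothetical instances already labeled as belonging to one category.
instances = [3.8, 4.0, 4.1, 4.3, 4.6]
kde_at_4 = kernel_density(4.0, instances, bandwidth=0.3)
fit_at_4 = parametric_density(4.0, instances)
```

Dividing the kernel sum by the number of instances supplies the constant of proportionality in Equation 16 when each kernel integrates to one.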

Tasks resulting in valid MCMC acceptance rules

Whether they are represented as similarity functions (being non-negative functions

over objects), or probability distributions, it should be possible to use our MCMC method

to investigate the structure of categories. The critical step is finding a question that we can

ask people that leads them to respond in the way identified by Equation 8 when presented

with two alternatives. That is, we need a question that leads people to choose alternatives

with probability proportional to their similarity to the category or probability under that

category. Fortunately, it is possible to argue that several kinds of questions should have

this property. We consider three candidates.

Which stimulus belongs to the category?. The simplest question we can ask people is

to identify which of the two stimuli belongs to the category, on the assumption that this is

true for only one of the stimuli. We give a Bayesian analysis of this question in Appendix

A, showing that stimuli should be selected in proportion to their probability under the

category distribution p(x|c) under some minimal assumptions. It is also possible to argue

for this claim via an analogy to the standard categorization task, in which Equation 8 is

widely used to describe choice probabilities. In the standard categorization task, people

are shown a single stimulus and asked to select one of two categories. In our task, people

are shown two stimuli and asked to identify which belongs to a single category. Just as

people make choices in proportion to the similarity between the stimulus and the category

in categorization, we expect them to do likewise here.

1

The constant of proportionality is determined by ∫ k(x, xi) dx, being 1/Nc if ∫ k(x, xi) dx = 1 for all i,

and is absorbed into βc to produce direct equivalence to Equation 12.

Which stimulus is a better example of the category?. The question of which stimulus

belongs to the category is straightforward for participants to interpret when it is clear that

one stimulus belongs to the category and the other does not. However, if both stimuli

have very high or very low values under the probability distribution, then the question

can be confusing. An alternative is to ask people which stimulus is a better example of

the category – asking for a typicality rating rather than a categorization judgment. This

question is about the representativeness of an example for a category. Tenenbaum and

Griffiths (2001) provide evidence for a representativeness measure that is the ratio of the

posterior probabilities of the alternatives. In Appendix A, we show that under the same

assumptions as those for the previous question, answers to this question should also follow

the Barker acceptance function. As above, this question can also be justified by appealing to

similarity-based models: Nosofsky (1988) found that a ratio rule combined with an exemplar

model predicted response probabilities when participants were asked to choose the better

example of a category from a pair of stimuli.

Which stimulus is more likely to have come from the category?. An alternative means

of relaxing the assumption that only one of the two stimuli came from the category is

to ask people for a judgment of relative probability rather than a forced choice. This

question directly measures p(x|c) or the corresponding similarity function. Assuming that

participants apply the ratio rule to these quantities, their responses should yield the Barker

acceptance function for the appropriate stationary distribution.

Summary

Based on the results in this section, we can define a simple method for drawing samples from the probability distribution (or normalized similarity function) associated with a

category using MCMC. Begin with a parameterized set of objects and choose an arbitrary

member of that set as the initial state. On each trial, a proposed object is drawn from a symmetric distribution

around the original object. A person chooses between the current object and the proposed

new object, responding to a question about which object is more consistent with the category. Assuming that people’s choice behavior follows the ratio rule given in Equation 8, the

stationary distribution of the Markov chain is the probability distribution p(x|c) associated

with the category, and samples from the chain provide information about the mental representation of that category. As outlined above, this procedure can also provide information

about the relative probabilities of different objects when people’s behavior is more deterministic than probability matching. To explore the value of this method for investigating

category structures we conducted a series of four experiments. The first two experiments

examine whether we can recover artificial category structures produced through training,

while the other two experiments apply it to estimation of the distributions associated with

natural categories.
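The procedure summarized above can be illustrated with a simulation in which a program stands in for the participant. This is only a sketch: it assumes the simulated chooser follows the ratio (Barker) rule exactly, uses a Gaussian as a hypothetical category distribution, and draws proposals from a continuous Gaussian. All names and parameter values are illustrative.

```python
import math
import random

def target(x, mean=4.19, sd=0.39):
    """Unnormalized Gaussian standing in for a participant's p(x|c) (assumed)."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2)

def simulated_choice(current, proposal, rng):
    """Ratio rule: choose the proposal with probability p(x*) / (p(x*) + p(x))."""
    p_new, p_old = target(proposal), target(current)
    return rng.random() < p_new / (p_new + p_old)

def run_chain(start, n_trials, proposal_sd, rng):
    """Run one chain; each trial is a choice between the state and a proposal."""
    state, states = start, []
    for _ in range(n_trials):
        proposal = rng.gauss(state, proposal_sd)  # symmetric proposal
        if simulated_choice(state, proposal, rng):
            state = proposal
        states.append(state)
    return states

rng = random.Random(0)
# Discard an initial stretch of trials before summarizing the samples.
samples = run_chain(start=2.63, n_trials=20000, proposal_sd=0.39, rng=rng)[1000:]
sample_mean = sum(samples) / len(samples)
```

With a symmetric proposal and this choice rule, the retained states should be distributed approximately according to the target, so `sample_mean` should lie close to the target mean.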


Figure 1. Examples of the largest and smallest fish stimuli presented to participants during training

in Experiments 1 and 2. The relative sizes of the fish stimuli are shown here; true display sizes are

given in the text.

Experiment 1

To test whether our assumptions about human decision making were accurate enough

to use MCMC to sample from people’s mental representations, we trained people on a

category structure and attempted to recover the resulting distribution. A simple one-dimensional categorization task was used, with the height of schematic fish (see Figure 1)

being the dimension along which category structures were defined. Participants were trained

on two categories of fish height – a uniform distribution and a Gaussian distribution – being

told that they were learning to judge whether a fish came from the ocean (the uniform

distribution) or a fish farm (the Gaussian distribution). Once participants were trained,

we collected MCMC samples for the Gaussian distribution by asking participants to judge

which of two fish came from the fish farm using one of the three questions introduced

above. The three question conditions were used to find empirical support for the theoretical

conclusion that all three questions would allow samples to be drawn from a probability

distribution. Assuming participants accurately represent the training structure and use a

ratio decision rule, the samples drawn will reflect the structure on which participants were

trained for each question condition.

Method

Participants. Forty participants were recruited from the Brown University community. The data from four participants were discarded due to computer error in the presentation. Two additional participants were discarded for reaching the upper or lower bounds

of the parameter range. These participants were replaced to give a total of 12 participants

using each of the three MCMC questions. Each participant was paid $4 for a 35 minute

session.

Stimuli. The experiment was presented on an Apple iMac G5 controlled by a script

running in Matlab using PsychToolbox extensions (Brainard, 1997; Pelli, 1997). Participants were seated approximately 44 cm away from the display. The stimuli were a modified


version of the fish stimuli used in Huttenlocher et al. (2000). The fish were constructed from

three ovals, two gray and one black, and a gray circle on a black background. Fish were all

9.1 cm long with heights drawn from the Gaussian and uniform distributions in training.

Because the display did not allow a continuous range of fish heights to be displayed, the

heights varied in steps of 0.13 cm. Examples of the smallest and largest fish are shown in

Figure 1. During the MCMC trials, fish were allowed to range in height from 0.03 cm

to 8.35 cm.

Procedure.

Each participant was trained to discriminate between two categories of fish: ocean

fish and fish farm fish. Participants were instructed, “Fish from the ocean have to fend for

themselves and as a result they have an equal probability of being any size. In contrast,

fish from the fish farm are all fed the same amount of food, so their sizes are similar and

only determined by genetics.” These instructions were meant to suggest that the ocean fish

were drawn from a uniform distribution and the fish farm fish were drawn from a Gaussian

distribution, and that the variance of the ocean fish was greater than the variance of the

fish farm fish.

Participants saw two types of trials. In a training trial, either the uniform or Gaussian

distribution was selected with equal probability, and a single sample (with replacement) was

drawn from the selected distribution. The uniform distribution had a lower bound of 2.63

cm and an upper bound of 5.75 cm, while the Gaussian distribution had a mean of 4.19 cm

and a standard deviation of 0.39 cm. The sampled fish was shown to the participant, who

chose which distribution produced the fish. Feedback was then provided on the accuracy

of this choice. In an MCMC trial, two fish were presented on the screen. Participants

chose which of the two fish came from the Gaussian distribution. Neither fish had been

sampled from the Gaussian distribution. Instead, one fish was the state of a Markov chain

and the other fish was the proposal. The state and proposal were unlabeled and they were

randomly assigned to either the left or right side of the screen. Three MCMC chains were

interleaved during the MCMC trials. The start states of the chains were chosen to be 2.63

cm, 4.20 cm, and 5.75 cm. Relative to the training distributions, the start states were

over-dispersed, facilitating assessment of convergence. The proposal was chosen from a

symmetric discretized pseudo-Gaussian distribution with a mean equal to the current state

and standard deviation equal to the training Gaussian standard deviation. The probability

of proposing the current state was set to zero.
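One way to picture the proposal step is the sketch below. The text does not say exactly how the pseudo-Gaussian was discretized, so the construction here is an assumption: Gaussian weights are evaluated on the grid of displayable heights (0.03 cm to 8.35 cm in steps of 0.13 cm), the weight of the current state is set to zero, and a grid point is drawn in proportion to the remaining weights. The names `HEIGHTS`, `proposal_weights`, and `propose` are hypothetical.

```python
import math
import random

STEP = 0.13  # cm, height resolution of the display
HEIGHTS = [0.03 + STEP * i for i in range(65)]  # 0.03 cm up to 8.35 cm

def proposal_weights(current_index, sd=0.39):
    """Gaussian weights over the grid, with zero weight on the current state."""
    centre = HEIGHTS[current_index]
    weights = [math.exp(-0.5 * ((h - centre) / sd) ** 2) for h in HEIGHTS]
    weights[current_index] = 0.0
    return weights

def propose(current_index, rng, sd=0.39):
    """Draw the index of a proposed height in proportion to the weights."""
    weights = proposal_weights(current_index, sd)
    return rng.choices(range(len(HEIGHTS)), weights=weights, k=1)[0]

rng = random.Random(1)
proposed_index = propose(32, rng)
```

Because the weights are renormalized over a finite grid, this construction is symmetric in the interior of the range but only approximately so near the edges.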

The question asked on the MCMC trials was varied between participants. During the

test phase, participants were asked, “Which fish came from the fish farm?”, “Which fish

is a better example of a fish from the fish farm?”, or “Which fish was more likely to have

come from the fish farm?”. Twelve participants were assigned to each question condition.

All participants were trained on the same Gaussian distribution, with mean µ = 4.19 cm

and standard deviation σ = 3.9 mm.

The experiment was broken up into blocks of training and MCMC trials. The experiment began with 120 training trials, followed by alternating blocks of 60 MCMC trials

and 60 training trials. Training and MCMC trials were interleaved to keep participants

from forgetting the training distributions. After a total of 240 MCMC trials, there was one

final block of 60 test trials. These were identical to the training trials, except participants


were not given feedback. The final block provided an opportunity to test the discriminative

predictions of the MCMC method.

Results and Discussion

Participants were excluded if the state of any chain reached the edge of the parameter

range or if their chains did not converge to the stationary distribution. We used a heuristic

for determining convergence to the stationary distribution: every chain had to cross another

chain.2 For the participants that were retained, the first 20 trials were removed from each

chain. Figure 2 provides justification for removing the first 20 trials, as each example

participant (one from each question condition) required about this many trials for the

chains to converge. The remaining 60 trials from each chain were pooled and used in

further analyses.
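The crossing heuristic and the removal of early trials can be expressed in a few lines. This sketch assumes that chains are stored as equal-length lists of states and that two chains count as crossing if their order reverses, or they coincide, at some trial. The function names are illustrative.

```python
def chains_cross(a, b):
    """True if chain a is at or above chain b on one trial and at or below it on another."""
    diffs = [x - y for x, y in zip(a, b)]
    return min(diffs) <= 0 <= max(diffs)

def all_chains_cross(chains):
    """Heuristic from the text: every chain must cross at least one other chain."""
    return all(
        any(chains_cross(c, other) for j, other in enumerate(chains) if j != i)
        for i, c in enumerate(chains)
    )

def pooled_samples(chains, burn_in=20):
    """Drop the first burn_in trials of each chain and pool the rest."""
    return [state for chain in chains for state in chain[burn_in:]]
```

With three chains of 80 trials each and a burn-in of 20, `pooled_samples` returns 180 states, 60 per chain.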

The acceptance rates of the proposals should be reasonably low in MCMC applications. A high acceptance rate generally results from proposal distributions that are narrow

compared to the category distribution and thus do not explore the space well. Very low

acceptance rates occur if the proposals are too extreme and the low acceptance rate also

causes the algorithm to explore the space more slowly. A rate of 20% to 40% is often considered a good value (Roberts, Gelman, & Gilks, 1997). In this experiment, participants

accepted between 33% and 35% of proposals in the three conditions. A more extensive

exploration of the effects of acceptance rates is presented in the General Discussion.

The chains for one example participant per condition are shown in Figure 2. The

right side of the figure compares the training distribution to two estimates of the stationary distribution formed from the samples: a nonparametric kernel density estimate, and a

parametric estimate made by assuming that the stationary distribution was Gaussian. The

nonparametric kernel density estimator used a Gaussian kernel with width optimal for producing Gaussian distributions (Bowman & Azzalini, 1997), although we should note that

this kernel does not necessarily produce Gaussian distributions. The parametric density

estimator assumed that the samples come from a Gaussian distribution and estimates the

parameters of the Gaussian distribution that generated the samples. Both density estimation methods show that the samples do a good job of reproducing the Gaussian training

distributions. A more quantitative measure of how well the participants reproduced the

training distribution is to evaluate the means and standard deviations of the samples relative to the training mean and standard deviation. The averages over participants are shown

in Figure 3. Over all participants, the mean of the samples is not significantly different from

the training mean and the standard deviation of the samples is not significantly different

from the standard deviation of the training distribution for any of the questions. The t-tests

for the mean and standard deviation for each question are shown in Table 1.

The final testing block provided an opportunity to test the predictions of samples

collected by the MCMC method for a separate set of discriminative judgments. The fish

farm distribution was assumed to be Gaussian with the parameters determined by the

mean and standard deviation of the MCMC samples. The uniform distribution on which

participants were trained was assumed for the ocean fish category. The category with the

higher probability for each fish size was used as the prediction, consistent with assuming that

participants make optimal decisions. The discriminative responses matched the prediction

on 69% of trials, which was significantly greater than the chance value of 50%, z = 17.7, p <

0.001.

2

Many heuristics have been proposed for assessing convergence (Brooks & Roberts, 1998; Mengersen,

Robert, & Guihenneuc-Jouyaux, 1999; Cowles & Carlin, 1996). The heuristic we used is simple to apply in

a one-dimensional state space.

Figure 2. Markov chains produced by participants in Experiment 1. The three rows are participants

from the three question conditions. The panels in the first column show the behavior of the three

Markov chains per participant. The black lines represent the states of the Markov chains, the dashed

line is the mean of the Gaussian training distribution, and the dot-dashed lines are two standard

deviations from the mean. The second column shows the densities of the training distributions.

These training densities can be compared to the MCMC samples, which are described by their

kernel density estimates and Gaussian fits in the last two columns.

Figure 3. Results of Experiment 1. The bar plots show the mean of µ and σ across the MCMC

samples produced by participants in all three question conditions. Error bars are one standard error.

The black dot indicates the actual value of µ and σ for each condition, which corresponds closely

with the MCMC samples.

Table 1: Statistical Significance Tests for Question Conditions in Experiment 1

Question          Statistic   df       t      p

Which?            Mean        11    0.41   0.69

Which?            Std Dev     11   -1.46   0.17

Better Example?   Mean        11    0.00   1.00

Better Example?   Std Dev     11    0.72   0.48

More Likely?      Mean         9   -0.95   0.37

More Likely?      Std Dev      9   -0.22   0.83

Experiment 2

The results of Experiment 1 indicate that the MCMC method can accurately produce

samples from a trained structure for a variety of questions. In the second experiment, the

design was extended to test whether participants trained on different structures would show

clear differences in the samples that they produced. Specifically, participants were trained

on four distributions differing in their mean and standard deviation to observe the effect on

the MCMC samples.

Method

Participants. Fifty participants were recruited from the Brown University community.

Data from one participant was discarded for not finishing the experiment, data from another

was discarded because the chains reached a boundary, and the data of eight others were

discarded because their chains did not cross. After discarding participants, there were ten

participants in the low mean and low standard deviation condition, ten participants in the

low mean and high standard deviation condition, nine participants in the high mean and low

standard deviation condition, and eleven participants in the high mean and high standard

deviation condition.

Stimuli. Stimuli were the same as Experiment 1.

Procedure. The procedure for this experiment is identical to that of Experiment 1,

except for three changes. The first change is that participants were all asked the same question, “Which fish came from the fish farm?” The second difference is that the mean and

the standard deviation of the Gaussian were varied in four between-participant conditions.

Two levels of the mean, µ = 3.66 cm and µ = 4.72 cm, and two levels of the standard deviation, σ = 3.1 mm and σ = 1.3 mm, were crossed to produce the four between-participant

conditions. The uniform distribution was the same across training distributions and was

bounded at 2.63 cm and 5.76 cm. The final change was that the standard deviation of the

proposal distribution was set to 2.2 mm in this experiment.


Results and Discussion

As in the previous experiment, it took approximately 20 trials for the chains to

converge, so only the remaining 60 trials per chain were analyzed. The acceptance rates in

the four conditions ranged from 38% to 45%. The distributions on the right hand side of

Figure 4 show the training distribution and the nonparametric and parametric estimates of

the stationary distribution produced from the MCMC samples. The distributions estimated

for the participants shown in this figure match well with the training distributions. The

mean, µ, and standard deviation, σ, were computed from the MCMC samples produced by

each participant. The average of these estimates for each condition is shown in Figure 5.

As predicted, µ was higher for participants trained on Gaussians with higher means, and

σ was higher for participants trained on Gaussians with higher standard deviations. These

differences were statistically significant, with a one-tailed Student’s t-test for independent

samples giving t(38) = 7.36, p < 0.001 and t(38) = 2.01, p < 0.05 for µ and σ respectively.

The figure also shows that the means of the MCMC samples corresponded well with the

actual means of the training distributions. The standard deviations of the samples tended to

be higher than the training distributions, which could be a consequence of either perceptual

noise (increasing the effective variation in stimuli associated with a category) or choices

being made in a way consistent with the exponentiated choice rule with γ < 1. Perceptual

noise seems to be implicated because both standard deviations in this experiment (which

were overestimated) were less than the standard deviation in the previous experiment (which

was correctly estimated). The prediction of the MCMC samples for discriminative trials

was determined by the same method used in Experiment 1. The discriminative responses

matched the prediction on 75% of trials, significantly greater than the chance value of 50%,

z = 24.5, p < 0.001.
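The discriminative prediction can be sketched as follows. The code assumes the fish farm category is a Gaussian with the mean and standard deviation of a participant's MCMC samples and the ocean category is the training uniform distribution, and it predicts whichever category has the higher density. The z statistic shown is a standard one-sample test of a proportion against chance; the text does not state which test was used, so that choice is an assumption, as are the function names.

```python
import math

def gaussian_pdf(x, mean, sd):
    """Density of the Gaussian fish farm category."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def uniform_pdf(x, lower=2.63, upper=5.76):
    """Density of the uniform ocean category (bounds in cm, as given for Experiment 2)."""
    return 1.0 / (upper - lower) if lower <= x <= upper else 0.0

def predict_category(height, mean, sd):
    """Predict the category with the higher density at this fish height."""
    return "farm" if gaussian_pdf(height, mean, sd) > uniform_pdf(height) else "ocean"

def z_against_chance(n_matches, n_trials, chance=0.5):
    """One-sample z statistic for the proportion of matched predictions."""
    p_hat = n_matches / n_trials
    return (p_hat - chance) / math.sqrt(chance * (1 - chance) / n_trials)
```

For example, `predict_category(4.19, mean=4.19, sd=0.39)` returns `"farm"`, because the Gaussian density at its mean exceeds the uniform density.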

Experiment 3

The results of Experiments 1 and 2 suggest that the MCMC method can accurately

reproduce a trained structure. Experiment 3 used this method to gather samples from the

natural (i.e., untrained) categories of giraffes, horses, cats, and dogs, representing these

animals using nine-parameter stick figures (Olman & Kersten, 2004). These stimuli are less

realistic than full photographs of animals, but have a representation that is tuned to the

category, so that perturbations of the parameters will have a better chance of producing a

similar animal than pixel perturbations. The complexity of these stimuli is still fairly

high, hopefully allowing the capture of a large number of interesting aspects of natural

categories. The samples produced by the MCMC method were used to examine the mean

animals in each category, what variables are important in defining membership of a category,

and how the distributions of the different animals are related.

The use of natural categories also provided the opportunity to compare the results

of the MCMC method with other approaches to studying category representations. These

stick figure stimuli were developed by Olman and Kersten (2004) to illustrate that classification images, one of the discriminative approaches introduced earlier in the paper, could

be applied to parameter spaces as well as to pixel spaces. Discriminative methods like classification images can be used to determine the decision bound between categories, but the

results of discrimination judgments should not be used to estimate the parameters characterizing

the mean of a category. The experiment empirically highlights why discriminative

judgments should not be used for this purpose, and serves as a complement to the theoretical argument given in the discussion. In addition, we analyzed the results to determine

whether it would have been feasible to use typicality judgments on a random subset of

examples to identify the underlying category structure. Collecting typicality judgments is

efficient when most stimuli belong to a category, but can be inefficient when a category

distribution is non-zero in only a small portion of a large parameter space. The MCMC

method excels in the latter situation, as it uses a participant’s previous responses to choose

new trials.

Figure 4. Markov chains produced by participants in Experiment 2. The four rows are participants

from each of the four conditions. The panels in the first column show the behavior of the three

Markov chains per participant. The black lines represent the states of the Markov chains, the dashed

line is the mean of the Gaussian training distribution, and the dot-dashed lines are two standard

deviations from the mean. The second column shows the densities of the training distributions.

These training densities can be compared to the MCMC samples, which are described by their

kernel density estimates and Gaussian fits in the last two columns.

Figure 5. Results of Experiment 2. The bar plots show the mean of µ and σ across the MCMC

samples produced by participants in all four training conditions. Error bars are one standard error.

The black dot indicates the actual value of µ and σ for each condition, which corresponds with the

MCMC samples.

Method

Participants. Eight participants were recruited from the Brown University community. Each participant was tested in five sessions, each of which could be split over multiple

days. Each session lasted one to one-and-a-half hours, for a total time of five to

seven-and-a-half hours. Participants were compensated with $10 per hour of testing.

Stimuli. The experiment was presented on an Apple iMac G5 controlled by a script

running in Matlab using PsychToolbox extensions (Brainard, 1997; Pelli, 1997). Participants were seated approximately 44 cm away from the display. The nine-parameter stick

figure stimuli that we used were developed by Olman and Kersten (2004). The parameter

names and ranges are shown in Figure 6. From the side view, the stick figures had a minimum possible width of 1.8 cm and height of 2.5 mm. The maximum possible width and

height were 10.9 cm and 11.4 cm respectively. Each stick figure was plotted on the screen

by projecting the three dimensional description of the lines onto a two dimensional plane

from a particular viewpoint. To prevent viewpoint biases, the stimuli were rotated on the

screen by incrementally changing the azimuth of the viewpoint, while keeping the elevation

constant at zero. The starting azimuth of the pair of stick figures on a trial was drawn from

a uniform distribution over all possible values and the period of rotation was fixed at six

seconds. The perceived direction of rotation is ambiguous in this experiment.

Procedure. The first four sessions of the experiment were used to sample from a

participant’s category distribution for four animals: giraffes, horses, cats, and dogs. Each

session was devoted to a single animal and the order in which the animals were tested was

counterbalanced across participants. On each trial, a pair of stick figures was presented,

with one stick figure being the current state of the Markov chain and the other the proposed

state of the chain. Participants were asked on each trial to pick “Which animal is the ___”,

where the blank was filled by the animal tested during that session. Participants made their

responses via keypress. The proposed states were drawn from a multivariate discretized

pseudo-Gaussian with a mean equal to the current state and a diagonal covariance matrix.

Informal pilot testing showed good results were obtained by setting the standard deviation

of each variable to 7% of the range of the variable. Proposals that went beyond the range

of the parameters were automatically rejected and were not shown to the participant.
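
The trial loop described above can be sketched in code. The following is a minimal illustration rather than the script used in the experiment: the grid resolution (N_LEVELS), the function names, and the simulated chooser are all hypothetical, and the ratio-rule chooser with a Gaussian category merely stands in for a participant's keypress. Each parameter is represented as an integer grid index so that the range check is exact.

```python
import numpy as np

N_LEVELS = 101  # hypothetical number of grid levels per parameter


def propose(state, rng, sd_fraction=0.07, n_levels=N_LEVELS):
    """Discretized Gaussian proposal centred on the current grid state."""
    sd = sd_fraction * (n_levels - 1)  # 7% of the range, in grid steps
    jump = np.rint(rng.normal(0.0, sd, size=state.shape)).astype(int)
    return state + jump


def in_range(state, n_levels=N_LEVELS):
    """True if every parameter lies inside its allowed range."""
    return bool(np.all(state >= 0) and np.all(state <= n_levels - 1))


def run_chain(start, choose, n_presented, rng):
    """Run one chain until n_presented trials have been shown to the chooser."""
    state = np.asarray(start, dtype=int)
    samples, shown = [], 0
    while shown < n_presented:
        proposal = propose(state, rng)
        if in_range(proposal):
            shown += 1
            if choose(state, proposal):  # True means the proposal was picked
                state = proposal
        # Out-of-range proposals are rejected without being shown, but the
        # current state is still recorded as a sample.
        samples.append(state.copy())
    return np.array(samples), shown


def simulated_chooser(target, scale, rng):
    """Stand-in for a participant: ratio-rule choice under a Gaussian category."""
    def log_density(x):
        return -0.5 * np.sum(((x - target) / scale) ** 2)

    def choose(current, proposal):
        log_ratio = log_density(current) - log_density(proposal)
        return rng.random() < 1.0 / (1.0 + np.exp(log_ratio))

    return choose
```

Because automatically rejected proposals still add the current state to the sample list, the number of collected samples can exceed the number of presented trials.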

To assess convergence, three independent Markov chains were run during each session.

The starting states of the Markov chains were the same across sessions. These starting states

were at the vertices of an equilateral triangle embedded in the nine-dimensional parameter

space. The coordinates of each vertex were either 20% of the range away from the minimum

Figure 6. Range of stick figures shown in Experiment 3 by parameter. The top line for each

parameter is the range in the discrimination session, while the bottom line is the range in the

MCMC sessions. Lengths are relative to the fixed body length of one.

value or 20% of the range away from the maximum value for each parameter. Participants

responded to 999 trials in each of the first four sessions, with 333 trials presented from each

chain. The automatically rejected out-of-range trials did not count against the number

of trials in a chain, so more samples were collected than were presented. The number of

collected trials ranged from 1252 to 2179 trials with an average of 1553 trials.
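
One way to construct starting states of this kind is sketched below. The specific pattern of low and high coordinates is an assumption made for illustration, since the text gives only the 20% rule; any three patterns that differ pairwise in the same number of coordinates would serve. The triangle is equilateral in range-normalized coordinates, which is also an assumption about how distance was measured.

```python
import numpy as np

# Hypothetical low/high patterns: every pair of rows differs in exactly six
# of the nine coordinates, so the three points are mutually equidistant.
PATTERN = np.array([
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0, 0, 1, 1, 1],
])


def triangle_starts(lower, upper, frac=0.2):
    """Return three starting states, one per row, in parameter units."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # 0 -> frac of the range above the minimum, 1 -> frac below the maximum
    unit = np.where(PATTERN == 1, 1.0 - frac, frac)
    return lower + unit * (upper - lower)
```

With all ranges set to [0, 1], each side of the triangle has length 0.6 times the square root of 6.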

The fifth and final session was a 1000-trial discrimination task, reproducing the procedure that Olman and Kersten (2004) used to estimate the structure of visual categories

of animals. On each trial, participants saw a single stick figure and made a forced response

of giraffe, horse, dog, or cat. Stimuli were drawn from a uniform distribution over the

restricted parameter range shown in Figure 6. This range was used in Olman and Kersten

(2004), and provided a higher proportion of animal-like stick figures at the expense of not

testing portions of the animal distributions. Unlike in the MCMC sessions, the stimuli

presented were independent across trials.

Results and Discussion

In order to determine the number of trials that were required for the Markov chains to

converge to the stationary distribution, the samples were projected onto a plane. The plane

used was the best possible plane for discriminating the different animal distributions for a

particular participant, as calculated through linear discriminant analysis (Duda, Hart, &

Stork, 2000). Trials were removed from the beginning of each chain until the distributions

looked as if they had converged. Removal of 250 trials appeared to be sufficient for most

chains, and this criterion was used for all chains and all participants. The acceptance rate of

the proposals across subjects and conditions ranged between 14% and 31%. Averaged across

individuals, it ranged from 21% to 26%, while averaged across categories, acceptance rates

ranged from 19% to 27%. Figure 7 shows the chains of the giraffe condition of Participant 3

projected onto the plane that best discriminates the categories. The largest factor in the

horizontal component was neck length, while the largest factor in the vertical component

was head length. The left panel shows the starting points and all of the samples for each

Figure 7. Markov chains and samples from Participant 3 in Experiment 3. The left panel displays

the three chains from the giraffe condition. The discs represent starting points, the dotted lines are

discarded samples, and the solid lines are retained samples. The right panel shows the samples from

all of the animal conditions for Participant 3 projected onto the plane that best discriminates the

classes. The quadrupeds in the bubbles are examples of MCMC samples.

chain. The right panel shows the remaining samples after the burn-in was removed. From

this figure, we can see that the giraffe and horse distributions are relatively distinct, while

the cat and dog distributions show more overlap.
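
The burn-in removal and projection can be illustrated with a short sketch. This is one standard construction of a linear discriminant plane (whitening the within-class scatter, then taking the leading eigenvectors of the between-class scatter), not necessarily the exact computation used here; the function names are hypothetical, and the within-class scatter is assumed to be full rank.

```python
import numpy as np


def discard_burn_in(chain, n_burn=250):
    """Drop the first n_burn samples of a chain (rows are samples)."""
    return np.asarray(chain)[n_burn:]


def lda_plane(samples_by_class):
    """Return a (d, 2) matrix whose columns span the discriminant plane."""
    all_x = np.vstack(samples_by_class)
    grand_mean = all_x.mean(axis=0)
    d = all_x.shape[1]
    s_w = np.zeros((d, d))  # within-class scatter
    s_b = np.zeros((d, d))  # between-class scatter
    for x in samples_by_class:
        mu = x.mean(axis=0)
        centred = x - mu
        s_w += centred.T @ centred
        diff = (mu - grand_mean)[:, None]
        s_b += len(x) * (diff @ diff.T)
    # Whiten the within-class scatter (assumed positive definite).
    evals, evecs = np.linalg.eigh(s_w)
    whiten = evecs @ np.diag(1.0 / np.sqrt(evals)) @ evecs.T
    m = whiten @ s_b @ whiten
    m = (m + m.T) / 2.0  # remove round-off asymmetry before eigh
    b_evals, b_evecs = np.linalg.eigh(m)
    top = b_evecs[:, np.argsort(b_evals)[::-1][:2]]
    return whiten @ top
```

Samples are projected by multiplying the retained samples by the returned matrix, for example `kept @ lda_plane(list_of_kept_samples)`.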

Displaying a nine-dimensional joint distribution is impossible, so we must look at

summaries of the distribution. A basic summary of the animal distributions that were

sampled is the mean over samples. The means for all combinations of participants and

quadrupeds are shown in Figure 8. As support for the MCMC method, these means tend

to resemble the quadrupeds that participants were questioned about. While the means

provide face validity for the MCMC method, the distributions associated with the different

categories contain much more interesting information. Samples from each animal category

are shown in Figure 7, giving a sense of the variation within a category. Also, the marginal

distributions shown in Figure 9 give a more informative picture of the joint distribution than

the means. The marginal distributions were estimated by taking a kernel density estimate

along each parameter for each of the quadrupeds on the data pooled over participants.

The Gaussian kernel used had its width optimized for estimating Gaussian distributions

(Bowman & Azzalini, 1997). In Figure 9 we can see that neck length and neck angle

distinguish giraffes from the other quadrupeds. Horses generally have a longer head length

than the other quadrupeds. Dogs have a somewhat distinct head length and head angle.

Cats have the longest tails, shortest head length, and the narrowest foot spread.
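
A marginal density estimate of this kind can be sketched as follows. The bandwidth shown is the common normal-reference rule, h = s (4 / 3n)^(1/5), which is the width that is optimal when the underlying distribution is Gaussian; we assume, without confirmation from the text, that this matches the rule taken from Bowman and Azzalini (1997). The function names are hypothetical.

```python
import numpy as np


def normal_reference_bandwidth(x):
    """Kernel width that is optimal if the data are Gaussian (assumed rule)."""
    x = np.asarray(x, dtype=float)
    return np.std(x, ddof=1) * (4.0 / (3.0 * len(x))) ** 0.2


def gaussian_kde_1d(x, grid, bandwidth=None):
    """Evaluate a Gaussian kernel density estimate of x at the grid points."""
    x = np.asarray(x, dtype=float)
    grid = np.asarray(grid, dtype=float)
    h = normal_reference_bandwidth(x) if bandwidth is None else bandwidth
    z = (grid[:, None] - x[None, :]) / h
    kernel_sum = np.exp(-0.5 * z ** 2).sum(axis=1)
    return kernel_sum / (len(x) * h * np.sqrt(2.0 * np.pi))
```

Applying `gaussian_kde_1d` to one column of the pooled samples at a time gives one marginal curve per parameter and quadruped.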

The data summaries along one or two dimensions may exaggerate the overlap between

categories. It is possible that the overlap differs between participants or the distributions

Figure 8. Means across samples for all participants and all quadrupeds in Experiment 3. The rows

of the figure correspond to giraffes, horses, cats, and dogs respectively. The columns correspond to

the eight participants.

are even more separated in the full parameter space. In order to examine how much the joint

distributions overlap, a multivariate Gaussian distribution was fit to each set of animal samples. Using these distributions for each quadruped, we calculated how many samples from

each quadruped fell within the 95% confidence region of each animal distribution. Table 2

shows the proportion of samples that fall within each distribution, averaged over participants.

The diagonal values are close to the anticipated value of 95%. Between quadrupeds, horses

and giraffes share a fair amount of overlap and dogs and cats share a fair amount of overlap.

Between these two groups, cats and dogs overlap a bit less with horses than they do with

each other. Also, cats and dogs overlap very little with giraffes. There is an interesting

asymmetry between the cat and dog distributions. The cat distribution contains 14% of

the dog samples, but the dog distribution only contains 3.7% of the cat samples.
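
The overlap calculation can be sketched as below, under the assumption that the 95% region of a fitted Gaussian is the ellipsoid of points whose squared Mahalanobis distance from the mean does not exceed the 0.95 quantile of a chi-square distribution with nine degrees of freedom (16.919). The text does not state how the region was computed, and the function names are hypothetical.

```python
import numpy as np

CHI2_95_DF9 = 16.919  # 0.95 quantile of chi-square with 9 degrees of freedom


def fit_gaussian(samples):
    """Return the sample mean and covariance (rows are samples)."""
    samples = np.asarray(samples, dtype=float)
    return samples.mean(axis=0), np.cov(samples, rowvar=False)


def proportion_inside(samples, mean, cov, threshold=CHI2_95_DF9):
    """Proportion of samples inside the Gaussian's 95% ellipsoid."""
    diff = np.asarray(samples, dtype=float) - mean
    d2 = np.einsum("ij,ij->i", diff @ np.linalg.inv(cov), diff)
    return float(np.mean(d2 <= threshold))


def overlap_table(samples_by_category):
    """table[distribution][source] = share of source samples in distribution."""
    fits = {k: fit_gaussian(v) for k, v in samples_by_category.items()}
    return {
        dist: {
            src: proportion_inside(x, *fits[dist])
            for src, x in samples_by_category.items()
        }
        for dist in samples_by_category
    }
```

Nothing in this calculation forces the table to be symmetric: a broad distribution can contain many samples from a narrow one while the narrow distribution contains few samples from the broad one, which is one way an asymmetry like that between cats and dogs can arise.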

The discrimination session allows us to compare the outcome of the MCMC method

with that of a more standard experimental task. The mean animals from all of the MCMC

trials and from all of the discrimination trials (both pooled over participants) are shown

in Figure 10. The discrimination judgments were made on stimuli drawn randomly from

the parameter range used by Olman and Kersten (2004). The mean stick figures that

Figure 9. Marginal kernel density estimates for each of the nine stimulus parameters, aggregating

over all participants in Experiment 3. Examples of a parameter’s effect are shown on the horizontal

axis. The middle stick figure is given the central value in the parameter space. The two flanking

stick figures show the extremes of that particular parameter with all other parameters held constant.

Table 2: Proportion of Samples that Fall Within Quadruped 95% Confidence Regions (Averaged Over Participants) in Experiment 3

                          Samples
Distribution    Giraffe   Horse     Cat       Dog
Giraffe         0.954     0.048     0.006     0.000
Horse           0.097     0.960     0.046     0.058
Cat             0.000     0.044     0.936     0.143
Dog             0.021     0.044     0.037     0.954

Figure 10. Mean quadrupeds across participants for the MCMC and discrimination sessions in

Experiment 3. The rows of the figure correspond to the data collection method and the columns to

giraffes, horses, cats, and dogs respectively.

result from these discrimination trials look reasonable, but MCMC pilot results showed

that the marginal distributions were often pushed against the edges of the parameter ranges.

The parameter ranges were greatly expanded for the MCMC trials as shown in Figure 6,

resulting in a space that was approximately 3,800 times the size of the discrimination

space. Over all participants and animals, only 0.4% of the MCMC samples fell within the

restricted parameter range used in the discrimination session. This indicates that the true

category distributions lie outside this restricted range, and that the mean animals from

the discrimination session are actually poor examples of the categories – something that

would have been hard to detect on the basis of the discrimination judgments alone. This

result was borne out by asking people whether they thought the mean MCMC animals

or the corresponding mean discrimination animals better represented the target category.

A separate group of 80 naive participants preferred most mean MCMC animals over the

corresponding mean discrimination animals. Given a forced choice between the MCMC and

discrimination mean animals, the MCMC version was chosen by 99% of raters for giraffes,