1 Solutions to selected problems


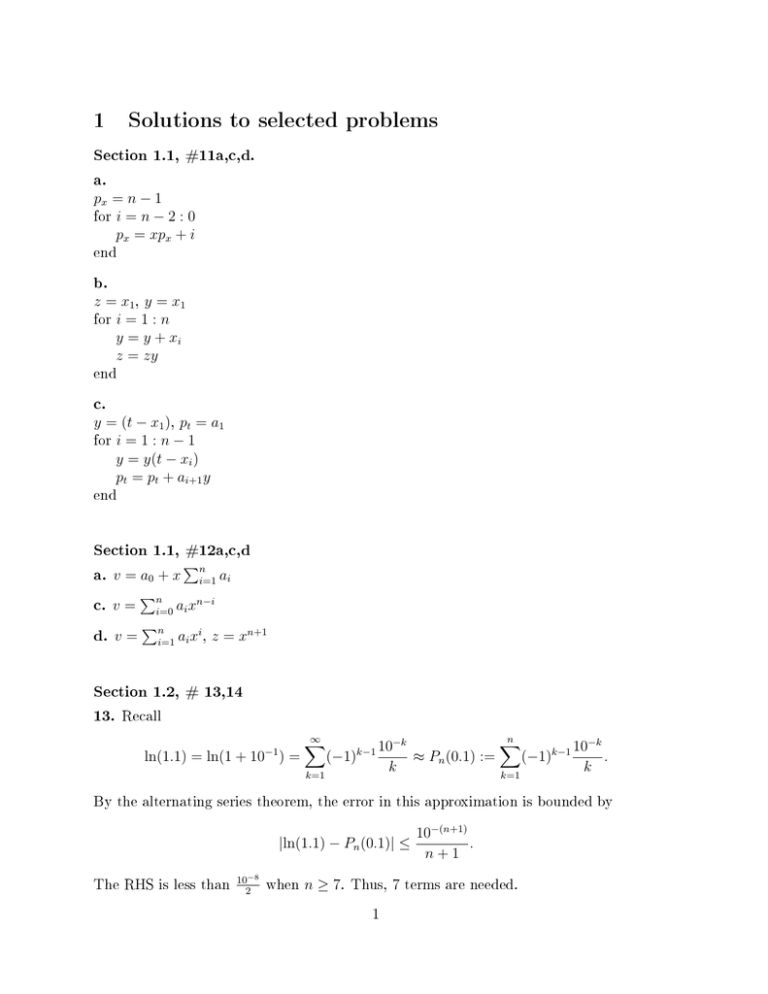

Section 1.1, #11a,c,d

a.

    px = n - 1
    for i = n - 2 : -1 : 0
        px = x*px + i
    end

b.

    z = x_1, y = x_1
    for i = 1 : n
        y = y + x_i
        z = z*y
    end

c.

    y = (t - x_1), pt = a_1
    for i = 1 : n - 1
        y = y*(t - x_i)
        pt = pt + a_{i+1}*y
    end

Section 1.1, #12a,c,d

a. $v = a_0 + x \sum_{i=1}^{n} a_i$

c. $v = \sum_{i=0}^{n} a_i x^{n-i}$

d. $v = \sum_{i=1}^{n} a_i x^{i}$, $z = x^{n+1}$

Section 1.2, #13,14

13. Recall

$$\ln(1.1) = \ln(1 + 10^{-1}) = \sum_{k=1}^{\infty} (-1)^{k-1} \frac{10^{-k}}{k} \approx P_n(0.1) := \sum_{k=1}^{n} (-1)^{k-1} \frac{10^{-k}}{k}.$$

By the alternating series theorem, the error in this approximation is bounded by

$$|\ln(1.1) - P_n(0.1)| \le \frac{10^{-(n+1)}}{n+1}.$$

The right-hand side is less than $10^{-8}$ when $n \ge 7$. Thus, 7 terms are needed.

14. We compute $f(2) = 7$, $f'(2) = 8$, $f''(2) = 8$ and $f'''(2) = 6$. Thus,

$$f(2 + h) = 7h^0/0! + 8h^1/1! + 8h^2/2! + 6h^3/3! = 7 + 8h + 4h^2 + h^3,$$

or equivalently,

$$f(x) = 7 + 8(x - 2) + 4(x - 2)^2 + (x - 2)^3.$$

Section 2.1, #1a,b

a. Since $2^{-30} = (-1)^0\, 2^{97-127}\, (1.00\ldots)_2$ and $97 = 2^6 + 2^5 + 2^0 = (01100001)_2$,

    0|01100001|00000000000000000000000

is the 32-bit machine representation.

b. Since $64.015625 = 2^6 (1 + 2^{-12}) = (-1)^0\, 2^{133-127}\, (1.000000000001)_2$ and $133 = 2^7 + 2^2 + 2^0 = (10000101)_2$,

    0|10000101|00000000000100000000000

is the 32-bit machine representation.

Section 2.1, #3a-e

32-bit machine numbers must be in the range of the machine (roughly $2^{-126}$ to $2^{128}$), and they must have at most 24 significant digits.

3a. Not a machine number: too large to be in machine range.

3b. Not a machine number: binary expansion has more than 24 significant digits.

3c-d. Not machine numbers: their binary expansions are nonterminating.

3e. $\frac{1}{256} = 2^{-8}$ is a machine number: it is in the range of the machine and its binary expansion has 1 significant digit.

Section 2.1, #5a-c, e-h

Conversions can be done as in Section 2.1 #1a,b above.

a. $+0$  b. $-0$  c. $\infty$  d. $-\infty$  e. $2^{-126}$  f. $5.5$  g. $1$  h. $\frac{1}{10}$

Section 2.1, #24

WLOG assume $x$ is positive and write $x = 10^m \times 1.a_1 a_2 \ldots$. After chopping to keep only 15 significant digits we get the machine representation $\hat{x} = 10^m \times 1.a_1 a_2 \ldots a_{14}$. Observe that

$$|x - \hat{x}| = 10^m \times 0.00\ldots a_{15} a_{16} \ldots \le 10^m\, 10^{-14}.$$

Thus,

$$\frac{|x - \hat{x}|}{|x|} \le \frac{10^m\, 10^{-14}}{10^m \times 1.a_1 a_2 \ldots} = \frac{10^{-14}}{1.a_1 a_2 \ldots} \le 10^{-14}.$$

Section 2.1, #29

Write $x = 2^n (1.a_1 a_2 \ldots a_{23})_2$ and $y = 2^m (1.b_1 b_2 \ldots b_{23})_2$. Write

$$x = 2^m \left(2^{n-m} (1.a_1 a_2 \ldots a_{23})_2\right) = 2^m (0.00\ldots 1 a_1 a_2 \ldots)_2.$$

Since $x < 2^{-25} y$, we have $n - m \le -25$, so in the last display the leading 1 of $x$ appears in a spot which is at least 25 places to the right of the binary point. Thus,

$$x + y = 2^m (1.b_1 b_2 \ldots b_{23}\, 0 \ldots 0\, 1 a_1 a_2 \ldots a_{23})_2,$$

where the leading 1 of $x$ is 25 or more spots to the right of the binary point. After chopping (or rounding), we get

$$\mathrm{fl}(x + y) = 2^m (1.b_1 b_2 \ldots b_{23})_2 = y.$$

Section 2.2, #2

Using $e^x = 1 + x + x^2/2! + x^3/3! + \ldots$, write $f(x) = e^x - x - 1$ as

$$f(x) = \frac{x^2}{2} + \frac{x^3}{6} + \frac{x^4}{24} + \ldots$$

It's easy to check that the first two terms above are enough for 5 significant digits:

$$f(0.01) \approx 0.000050167.$$

Using $e^{0.01} \approx 1.0101$ and direct calculation gives

$$f(0.01) \approx 1.0101 - 0.0100 - 1.0000 = 1.0001 - 1.0000 = 0.0001.$$

Section 2.2, #3

One option is to write $f(x) = (1 - e) + x + x^2/2! + x^3/3! + \ldots$.

Section 2.2, #7

Note that if $x \ne 0$,

$$\frac{1}{x}(\sinh x - \tanh x) = \frac{\sinh^2 x - \tanh^2 x}{x(\sinh x + \tanh x)} = \frac{\tanh^2 x \, \sinh^2 x}{x(\sinh x + \tanh x)}, \qquad (1)$$

where the last equality comes from the hyperbolic trig identity $\cosh^2 x - 1 = \sinh^2 x$ and

$$\sinh^2 x - \tanh^2 x = \frac{\sinh^2 x (\cosh^2 x - 1)}{\cosh^2 x} = \tanh^2 x \, \sinh^2 x.$$

The last expression in (1) avoids loss of significance near $x = 0$.

Section 2.2, #14

Rewrite $f(x) = \sqrt{x + 2} - \sqrt{x}$ as

$$f(x) = \frac{2}{\sqrt{x + 2} + \sqrt{x}}.$$

Section 2.2 #21

Using

$$\sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \ldots$$

and the alternating series theorem, we get

$$|\sin x - x| \le \frac{|x|^3}{3!}.$$

For $|x| < 1/10$ this becomes

$$|\sin x - x| \le \frac{10^{-3}}{6} = 0.0001666\ldots$$

Section 2.2 Computer Problems, #8

See the script section 2.2 #8.

Section 3.1 Computer Problems, #1

See the script section 3.1 #1.
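The loss of significance in Section 2.2, #2 can be reproduced with a small sketch. It assumes Python's `decimal` module with the working precision set to 5 significant digits as a stand-in for five-digit arithmetic; the function names `f_direct` and `f_series` are illustrative and do not come from the course scripts.

```python
from decimal import Decimal, localcontext


def f_direct(x, digits=5):
    """Evaluate e^x - x - 1 directly, rounding each operation to `digits` digits."""
    with localcontext() as ctx:
        ctx.prec = digits
        # Left to right: (e^x - x) - 1, as in the hand calculation.
        return x.exp() - x - Decimal(1)


def f_series(x, digits=5):
    """Evaluate the first two series terms x^2/2 + x^3/6 in the same arithmetic."""
    with localcontext() as ctx:
        ctx.prec = digits
        return x * x / 2 + x * x * x / 6


x = Decimal("0.01")
direct = f_direct(x)   # subtraction of nearly equal numbers: one correct digit at best
series = f_series(x)   # no cancellation: all five digits are meaningful
print("direct:", direct)
print("series:", series)
```

Under this five-digit assumption the direct formula returns 0.0001, while the two-term series returns 0.000050167, matching the values quoted in the solution.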
Section 3.2 #1

Newton's method for finding roots of $f(x) = x^2 - R$ is

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)} = x_n - \frac{x_n^2 - R}{2x_n} = \frac{2x_n^2 - x_n^2 + R}{2x_n} = \frac{1}{2}\left(x_n + \frac{R}{x_n}\right).$$

Section 3.2 #2

Using #1 above,

$$x_{n+1}^2 - R = \frac{1}{4}\left(x_n + \frac{R}{x_n}\right)^2 - R = \frac{1}{4}\left(x_n^2 + 2R + \frac{R^2}{x_n^2}\right) - R = \frac{1}{4}\left(x_n^2 - 2R + \frac{R^2}{x_n^2}\right) = \frac{1}{4}\left(x_n - \frac{R}{x_n}\right)^2 = \left(\frac{x_n^2 - R}{2x_n}\right)^2.$$

Letting $e_n = x_n^2 - R$ be the error in the $n$th approximation,

$$e_{n+1} = \frac{1}{4x_n^2}\, e_n^2.$$

If $|e_n| < R$ this means

$$|e_{n+1}| \le \frac{1}{4(R - |e_n|)}\, |e_n|^2.$$

In particular if $|e_0| \le R/2$, an induction argument shows that

$$|e_{n+1}| \le \frac{1}{2R}\, |e_n|^2.$$

Section 3.2 #6

When $m = 8$ and $x_0 = 1.1$, we have $x_1 = 1.0875$ and $x_2 = 1.0765625$. When $m = 12$ and $x_0 = 1.1$, we have $x_1 = 1.091666\ldots$ and $x_2 = 1.08402777\ldots$ Note the slower convergence to $r = 1$ when $m = 12$ compared to $m = 8$.

Section 3.2 #10

For $f(x) = \tan x - R$ we have

$$\frac{f(x)}{f'(x)} = \frac{\tan x - R}{\sec^2 x} = \cos x \sin x - R \cos^2 x.$$

Thus, the iteration formula

$$x_{n+1} = x_n - \cos x_n \sin x_n + R \cos^2 x_n$$

can be used for finding solutions to $\tan x = R$.

Section 3.2 Computer Problems, #7,19

See the scripts section 3.2 #7 and section 3.2 #19.

Section 4.1 #6

The polynomial, in Newton form, is

$$p(x) = 2 + x - 3x(x - 2) + 4x(x - 2)(x - 3).$$

Section 4.1 #7b

The columns can be filled top to bottom with third column: $-3$, $25$; fourth column: $7$, $9.5$; fifth column: $0.5$. Thus, the interpolating polynomial is

$$p(x) = 2 - 3(x + 1) + 7(x + 1)(x - 1) + \frac{1}{2}(x + 1)(x - 1)(x - 3).$$

Section 4.1 #10

Newton's interpolation polynomial is

$$p(x) = 7 + 2x + 5x(x - 2) + x(x - 2)(x - 3).$$

A nested form for efficient computation is

$$p(x) = 7 + x(2 + (x - 2)(5 + (x - 3))).$$

Section 4.1 #20

With $x = (-2, -1, 0, 1, 2)$, $y = (2, 14, 4, 2, 2)$ we have

$$\begin{aligned}
P_0(x) &= a_0 \\
P_1(x) &= a_0 + a_1(x + 2) \\
P_2(x) &= a_0 + a_1(x + 2) + a_2(x + 2)(x + 1) \\
P_3(x) &= a_0 + a_1(x + 2) + a_2(x + 2)(x + 1) + a_3(x + 2)(x + 1)x \\
P_4(x) &= a_0 + a_1(x + 2) + a_2(x + 2)(x + 1) + a_3(x + 2)(x + 1)x + a_4(x + 2)(x + 1)x(x - 1)
\end{aligned}$$

for our Newton polynomials.
Using $P_i(x_i) = y_i$, $i = 0, 1, 2, 3, 4$, we can solve to get $a_0 = 2$, $a_1 = 12$, $a_2 = -11$, $a_3 = 5$, $a_4 = -1.5$.

Alternatively, we may write

$$Q(x) = b_0 + b_1 x + b_2 x^2 + b_3 x^3 + b_4 x^4$$

and solve

$$\begin{pmatrix} 1 & -2 & 4 & -8 & 16 \\ 1 & -1 & 1 & -1 & 1 \\ 1 & 0 & 0 & 0 & 0 \\ 1 & 1 & 1 & 1 & 1 \\ 1 & 2 & 4 & 8 & 16 \end{pmatrix} \begin{pmatrix} b_0 \\ b_1 \\ b_2 \\ b_3 \\ b_4 \end{pmatrix} = \begin{pmatrix} 2 \\ 14 \\ 4 \\ 2 \\ 2 \end{pmatrix}$$

to get $b_0 = 4$, $b_1 = -8$, $b_2 = 5.5$, $b_3 = 2$, $b_4 = -1.5$. Of course, $P_4(x) = Q(x)$.

Section 4.1 #21

To interpolate the additional point $(3, 10)$, we look at

$$R(x) = Q(x) + c(x + 2)(x + 1)x(x - 1)(x - 2),$$

and plug in $10 = R(3)$ to get $c = 2/5$. Now $R(x)$ interpolates the points from #20, along with the extra point $(3, 10)$.

Section 4.1 Computer Problems #1,2,9

See scripts online.

Section 4.1 #43

The Newton form of the interpolating polynomial at $x_0, x_1$ is

$$P(x) = f[x_0] + f[x_0, x_1](x - x_0).$$

Plugging in $x = x_1$ and solving for $f[x_0, x_1]$, we find

$$f[x_0, x_1] = \frac{P(x_1) - f[x_0]}{x_1 - x_0} = \frac{f(x_1) - f(x_0)}{x_1 - x_0}.$$

By the mean value theorem, there is $\xi \in (x_0, x_1)$ such that

$$f'(\xi) = \frac{f(x_1) - f(x_0)}{x_1 - x_0} = f[x_0, x_1].$$

Section 4.1 #45

Let

$$Q(x) = g(x) + \frac{x_0 - x}{x_n - x_0}\,[g(x) - h(x)].$$

Since both $g$ and $h$ interpolate $f$ at $x_i$, $i = 1, \ldots, n - 1$,

$$Q(x_i) = g(x_i) + \frac{x_0 - x_i}{x_n - x_0}\,[g(x_i) - h(x_i)] = f(x_i) + \frac{x_0 - x_i}{x_n - x_0}\,[f(x_i) - f(x_i)] = f(x_i)$$

for $i = 1, \ldots, n - 1$. Since $g$ interpolates $f$ at $x_0$,

$$Q(x_0) = g(x_0) + \frac{x_0 - x_0}{x_n - x_0}\,[g(x_0) - h(x_0)] = f(x_0) + 0 = f(x_0),$$

and since $h$ interpolates $f$ at $x_n$,

$$Q(x_n) = g(x_n) + \frac{x_0 - x_n}{x_n - x_0}\,[g(x_n) - h(x_n)] = g(x_n) - [g(x_n) - f(x_n)] = f(x_n).$$

Note that this is the main step in proving Theorem 2 in Section 4.1.

Section 4.2 #2

Without loss of generality we can assume $x_j = 0$ and $x_{j+1} = h$. We must show that

$$|(x - 0)(x - h)| \le \frac{h^2}{4} \quad \text{for all } x \in [0, h].$$

To do this, consider $Q(x) := (x - 0)(x - h) = x^2 - hx$. Since $Q'(x) = 2x - h = 0$ when $x = h/2$, the function $Q$ has its smallest and largest values on $[0, h]$ at $h/2$ or at the endpoints $0, h$. Since $Q(0) = Q(h) = 0$ we conclude $\max_{0 \le x \le h} |Q(x)| = |Q(h/2)| = h^2/4$.

Section 4.2 #3

Solution.
We must show that

$$\frac{n!}{(j + 1)!\,(n - j)!} = \frac{1}{j + 1}\binom{n}{j} \ge 1, \qquad j = 0, \ldots, n - 1.$$

When $j = 1, \ldots, n - 1$, we have $\frac{1}{j+1} \ge \frac{1}{n}$ and $\binom{n}{j} \ge n$ so that

$$\frac{1}{j + 1}\binom{n}{j} \ge \frac{1}{n}\binom{n}{j} \ge \frac{n}{n} = 1.$$

And when $j = 0$, we get $\frac{1}{j+1}\binom{n}{j} = 1$.

Section 4.2 #4

Let $x \in [a, b]$ and $a = x_0 < x_1 < \ldots < x_n = b$ be any choice of nodes. We must show

$$\prod_{i=0}^{n} |x - x_i| \le h^{n+1}\, n!, \qquad h := \max_{1 \le i \le n} (x_i - x_{i-1}). \qquad (2)$$

Notice $h$ is the largest spacing between adjacent nodes. For $x_j \le x \le x_{j+1}$,

$$|x - x_j|\,|x - x_{j+1}| \le \frac{1}{4}|x_{j+1} - x_j|^2 \le \frac{1}{4}h^2,$$

where the first inequality comes from Section 4.2, #2. Since $x \le x_{j+1}$,

$$\prod_{i=0}^{j-1} |x - x_i| = \prod_{i=0}^{j-1} (x - x_i) \le \prod_{i=0}^{j-1} (x_{j+1} - x_i) \le \prod_{i=0}^{j-1} (j - i + 1)h = (j + 1)!\, h^j.$$

Similarly, since $x \ge x_j$,

$$\prod_{i=j+2}^{n} |x - x_i| = \prod_{i=j+2}^{n} (x_i - x) \le \prod_{i=j+2}^{n} (x_i - x_j) \le \prod_{i=j+2}^{n} (i - j)h = (n - j)!\, h^{n-j-1}.$$

Combining the last three inequalities, we get

$$\prod_{i=0}^{n} |x - x_i| \le \frac{1}{4}h^2\,(j + 1)!\,h^j\,(n - j)!\,h^{n-j-1} \le \frac{1}{4}h^{n+1}\, n!,$$

where the last step uses the inequality $(j + 1)!\,(n - j)! \le n!$ shown above.

Section 4.2 #18

Let $P_2(x)$ be the degree 2 interpolant of $f$ at $x_0, x_1, x_2$:

$$P_2(x) = f[x_0] + f[x_0, x_1](x - x_0) + f[x_0, x_1, x_2](x - x_0)(x - x_1),$$

and define $Q(x) = f(x) - P_2(x)$. Then $Q$ has at least 3 roots, namely $x_0, x_1, x_2$, so, due to Rolle's theorem, $Q'$ has at least 2 roots and $Q''$ has at least 1 root, call it $\xi$. Thus,

$$0 = Q''(\xi) = f''(\xi) - P_2''(\xi) = f''(\xi) - 2f[x_0, x_1, x_2],$$

which shows

$$f[x_0, x_1, x_2] = \frac{1}{2}f''(\xi).$$

Section 4.2 Computer Problem #9

See script online.

Section 4.3 #3

Using Taylor's theorem,

$$\begin{aligned}
f(x + h) &= f(x) + f'(x)h + \frac{1}{2}f''(x)h^2 + \frac{1}{6}f'''(x)h^3 + \ldots \\
f(x + 2h) &= f(x) + 2f'(x)h + 2f''(x)h^2 + \frac{4}{3}f'''(x)h^3 + \ldots
\end{aligned}$$

Straightforward calculations give

$$\frac{1}{2h}\left[4f(x + h) - 3f(x) - f(x + 2h)\right] = f'(x) - \frac{1}{3}f'''(x)h^2 + \ldots$$

Terminating this expansion at second order (and using Taylor's theorem), we find the error term

$$\left|\frac{1}{2h}\left[4f(x + h) - 3f(x) - f(x + 2h)\right] - f'(x)\right| = \frac{1}{3}|f'''(\xi)|h^2.$$

Section 4.3 #5

We have

$$\begin{aligned}
f(x + h) &= f(x) + f'(x)h + \frac{1}{2}f''(x)h^2 + \frac{1}{6}f'''(\alpha)h^3, \\
f(x - h) &= f(x) - f'(x)h + \frac{1}{2}f''(x)h^2 - \frac{1}{6}f'''(\beta)h^3.
\end{aligned}$$

Thus,

$$\begin{aligned}
\frac{f(x + h) - f(x)}{h} - f'(x) &= \frac{1}{2}f''(x)h + \frac{1}{6}f'''(\alpha)h^2, \\
\frac{f(x) - f(x - h)}{h} - f'(x) &= -\frac{1}{2}f''(x)h + \frac{1}{6}f'''(\beta)h^2,
\end{aligned}$$

so

$$\frac{1}{2}\left[\frac{f(x + h) - f(x)}{h} + \frac{f(x) - f(x - h)}{h}\right] - f'(x) = \frac{h^2}{12}\left[f'''(\alpha) + f'''(\beta)\right].$$

Section 4.3 #7

The problem with the analysis is that the expansions do not go to high enough order. If we include one more term in the expansions for $f(x + h) - f(x)$ and $f(x - h) - f(x)$, we find the error is in fact $O(h^2)$.

Section 4.3 #10

a) We write the interpolating polynomial in Newton form:

$$P(x) = f(x_0) + f[x_0, x_1](x - x_0) + f[x_0, x_1, x_2](x - x_0)(x - x_1)$$

and compute $P''(x) = 2f[x_0, x_1, x_2]$. By the divided difference recursion theorem, with $x_1 - x_0 = h$ and $x_2 - x_1 = \alpha h$,

$$f[x_0, x_1, x_2] = \frac{f[x_1, x_2] - f[x_0, x_1]}{x_2 - x_0} = \frac{\dfrac{f(x_2) - f(x_1)}{x_2 - x_1} - \dfrac{f(x_1) - f(x_0)}{x_1 - x_0}}{x_2 - x_0} = \frac{\dfrac{f(x_2) - f(x_1)}{\alpha h} - \dfrac{f(x_1) - f(x_0)}{h}}{h + \alpha h},$$

so that

$$P''(x) = \frac{2}{h^2}\left[\frac{f(x_0)}{1 + \alpha} - \frac{f(x_1)}{\alpha} + \frac{f(x_2)}{\alpha(\alpha + 1)}\right].$$

b) Consider $P_0(x) = 1$, $P_1(x) = x - x_1$ and $P_2(x) = (x - x_1)^2$. We will solve the equations

$$P_i''(x) = A P_i(x_0) + B P_i(x_1) + C P_i(x_2), \qquad i = 0, 1, 2,$$

for $A, B, C$. The equations are

$$\begin{aligned}
0 &= A + B + C \\
0 &= -hA + \alpha h C \\
2 &= h^2 A + \alpha^2 h^2 C.
\end{aligned}$$

It is straightforward to verify that $A = \frac{2}{h^2(1 + \alpha)}$, $B = -\frac{2}{h^2 \alpha}$, and $C = \frac{2}{h^2 \alpha(1 + \alpha)}$.

Section 4.3 #14

Write

$$\begin{aligned}
f(x + h) &= f(x) + f'(x)h + \frac{1}{2}f''(x)h^2 + \frac{1}{6}f'''(x)h^3 + \frac{1}{24}f^{(4)}(x)h^4 + \frac{1}{120}f^{(5)}(x)h^5 + \ldots \\
f(x - h) &= f(x) - f'(x)h + \frac{1}{2}f''(x)h^2 - \frac{1}{6}f'''(x)h^3 + \frac{1}{24}f^{(4)}(x)h^4 - \frac{1}{120}f^{(5)}(x)h^5 + \ldots
\end{aligned}$$

and similarly

$$\begin{aligned}
f(x + 2h) &= f(x) + f'(x)2h + 2f''(x)h^2 + \frac{4}{3}f'''(x)h^3 + \frac{2}{3}f^{(4)}(x)h^4 + \frac{4}{15}f^{(5)}(x)h^5 + \ldots \\
f(x - 2h) &= f(x) - f'(x)2h + 2f''(x)h^2 - \frac{4}{3}f'''(x)h^3 + \frac{2}{3}f^{(4)}(x)h^4 - \frac{4}{15}f^{(5)}(x)h^5 + \ldots
\end{aligned}$$

Thus,

$$\frac{1}{2h}\left[f(x + h) - f(x - h)\right] = f'(x) + \frac{1}{6}f'''(x)h^2 + \frac{1}{120}f^{(5)}(x)h^4 + \ldots \qquad (3)$$

and

$$\frac{1}{12h}\left[f(x + 2h) - f(x - 2h)\right] = \frac{1}{3}f'(x) + \frac{2}{9}f'''(x)h^2 + \frac{2}{45}f^{(5)}(x)h^4 + \ldots \qquad (4)$$

Notice that multiplying equation (3) by 4/3 and subtracting (4) gives

$$\frac{2}{3h}\left[f(x + h) - f(x - h)\right] - \frac{1}{12h}\left[f(x + 2h) - f(x - 2h)\right] = f'(x) - \frac{1}{30}f^{(5)}(x)h^4 + \ldots$$

Terminating this expansion at fourth order, we find

$$\left|\frac{2}{3h}\left[f(x + h) - f(x - h)\right] - \frac{1}{12h}\left[f(x + 2h) - f(x - 2h)\right] - f'(x)\right| = \frac{1}{30}|f^{(5)}(\xi)|h^4.$$

Section 4.3 Computer problems #4

See script online.

Section 7.1 #3 c,d

c. In the first step of naive Gaussian elimination, we divide 1 by 0, so the algorithm fails. However, if we interchange rows 1 and 2, then we can do naive elimination to obtain $x_2 = 2$, $x_1 = 7$.

d. After doing naive elimination on the first column, we find a 0 in the $(2, 2)$ spot and a 1 in the $(3, 2)$ spot. Thus, continuing with naive elimination on the second column, we divide 1 by 0, and the algorithm fails.

Section 7.1 #7 d,e

d. The Gaussian elimination sequence is, using gauss_naive.m,

$$\left(\begin{array}{rrr|r} 3 & 2 & -1 & 7 \\ 5 & 3 & 2 & 4 \\ -1 & 1 & -3 & -1 \end{array}\right) \sim \left(\begin{array}{rrr|r} 3 & 2 & -1 & 7 \\ 0 & -1/3 & 11/3 & -23/3 \\ 0 & 5/3 & -10/3 & 4/3 \end{array}\right) \sim \left(\begin{array}{rrr|r} 3 & 2 & -1 & 7 \\ 0 & -1/3 & 11/3 & -23/3 \\ 0 & 0 & 15 & -37 \end{array}\right)$$

$$\sim \left(\begin{array}{rrr|r} 3 & 2 & 0 & 68/15 \\ 0 & -1/3 & 0 & 62/45 \\ 0 & 0 & 15 & -37 \end{array}\right) \sim \left(\begin{array}{rrr|r} 3 & 0 & 0 & 64/5 \\ 0 & -1/3 & 0 & 62/45 \\ 0 & 0 & 15 & -37 \end{array}\right) \sim \left(\begin{array}{rrr|r} 1 & 0 & 0 & 64/15 \\ 0 & 1 & 0 & -62/15 \\ 0 & 0 & 1 & -37/15 \end{array}\right)$$

Thus, to four significant digits, $x_1 = 4.267$, $x_2 = -4.133$ and $x_3 = -2.467$.

e. Using gauss_naive.m again, we find $x_1 = 1$, $x_2 = -1$, and $x_3 = x_4 = 0$.

Section 7.1 Computer problem #3

See script online.

Section 7.3 #4

One example is

$$\left(\begin{array}{rrrr|r} 0 & 1 & 0 & 0 & 0 \\ 1 & 0 & 1 & 0 & 1 \\ 0 & 1 & 0 & 1 & 0 \\ 0 & 0 & 1 & 0 & 0 \end{array}\right).$$

The system has a unique solution, but naive elimination fails at the very first step.

Section 7.3 #6

Let $A$ be a diagonally dominant matrix such that the $(i, j)$th entry of $A$ is 0 for $i > j + 1$. Suppose naive elimination has been applied to $A$ up to and including row $i$. Inductively, assume that after these eliminations row $i$ is still diagonally dominant. Let $a_{i,j}$ be the $(i, j)$th entry of $A$ after these row operations.
The next row operation is

$$a_{i+1,j} = a_{i+1,j} - \frac{a_{i+1,i}}{a_{i,i}}\, a_{i,j}, \qquad j = i, \ldots, n. \qquad (5)$$

We check if the $(i + 1)$st row of $A$ is still diagonally dominant after this row operation. Notice that the row operation makes the $(i + 1, j)$ entries all 0 except for $j \ge i + 1$. By the induction assumption that the $i$th row of $A$ is diagonally dominant,

$$\sum_{j=i+2}^{n} |a_{i,j}| \le |a_{i,i}| - |a_{i,i+1}|.$$

Moreover, the $(i + 1)$th row of $A$ is diagonally dominant to begin with, since $A$ was diagonally dominant and the row operations up to row $i$ do not touch row $(i + 1)$. Thus,

$$\sum_{j=i+2}^{n} |a_{i+1,j}| \le |a_{i+1,i+1}| - |a_{i+1,i}|.$$

Combining the above displays,

$$\begin{aligned}
\sum_{j=i+2}^{n} \left|a_{i+1,j} - \frac{a_{i+1,i}}{a_{i,i}}\, a_{i,j}\right| &\le \sum_{j=i+2}^{n} |a_{i+1,j}| + \left|\frac{a_{i+1,i}}{a_{i,i}}\right| \sum_{j=i+2}^{n} |a_{i,j}| \\
&\le (|a_{i+1,i+1}| - |a_{i+1,i}|) + |a_{i+1,i}| - \left|\frac{a_{i+1,i}}{a_{i,i}}\, a_{i,i+1}\right| \\
&\le \left|a_{i+1,i+1} - \frac{a_{i+1,i}}{a_{i,i}}\, a_{i,i+1}\right|.
\end{aligned}$$

Thus, after the row operation (5), the $(i + 1)$th row of $A$ is still diagonally dominant.

Section 8.1 #1b,c

Using LU_fact.m, the LU factorizations are

a)
$$L = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 3 & -3 & 1 & 0 \\ 0 & 2 & -1/4 & 1 \end{pmatrix}, \qquad U = \begin{pmatrix} 1 & 0 & 1/3 & 0 \\ 0 & 1 & 3 & -1 \\ 0 & 0 & 8 & 3 \\ 0 & 0 & 0 & -13/4 \end{pmatrix}$$

b)
$$L = \begin{pmatrix} 1 & 0 & 0 & 0 \\ -1/20 & 1 & 0 & 0 \\ 0 & -4/3 & 1 & 0 \\ 0 & 0 & -3/2 & 1 \end{pmatrix}, \qquad U = \begin{pmatrix} -20 & -15 & -10 & -5 \\ 0 & -3/4 & -1/2 & -1/4 \\ 0 & 0 & -2/3 & -1/3 \\ 0 & 0 & 0 & -1/2 \end{pmatrix}.$$

Section 8.1 #2b

The factorization is

$$L = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 3 & 1 & 0 \\ 5 & 0 & 2 & 1 \end{pmatrix}, \qquad U = \begin{pmatrix} 1 & 0 & 0 & 2 \\ 0 & 3 & 0 & 0 \\ 0 & 0 & 4 & 0 \\ 0 & 0 & 0 & 0 \end{pmatrix}.$$

The $M$ from part a) is

$$M = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & -3 & 1 & 0 \\ -5 & 6 & -2 & 1 \end{pmatrix}.$$

It can be calculated by inverting the individual row operation matrices used to build $L$, then multiplying these matrices together.

Section 8.1 #4a

After the first row operation, a zero appears in the $(2, 2)$ spot and so naive elimination fails. Thus, the matrix has no LU factorization.

Section 8.1 #11

a) The solution is $x_1 = 2$, $x_2 = -3$, $x_3 = 0$ and $x_4 = -1$.

b) In matrix notation the system is

$$\left(\begin{array}{rrrr|r} 6 & 0 & 0 & 0 & 12 \\ 3 & 6 & 0 & 0 & -12 \\ 4 & -2 & 7 & 0 & 14 \\ 5 & -3 & 9 & 21 & -2 \end{array}\right).$$

Since the system is already in lower triangular form, one only needs to scale the columns to get $L$ (which must be unit lower triangular) and adjust $U$ accordingly. Thus,

$$L = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 1/2 & 1 & 0 & 0 \\ 2/3 & -1/3 & 1 & 0 \\ 5/6 & -1/2 & 9/7 & 1 \end{pmatrix}, \qquad U = \begin{pmatrix} 6 & 0 & 0 & 0 \\ 0 & 6 & 0 & 0 \\ 0 & 0 & 7 & 0 \\ 0 & 0 & 0 & 21 \end{pmatrix}.$$

Section 8.1 Computer problems #11

See script online.

Section 8.2 #6,7,8

#6: c (Theorem 1, p. 329), #7: b (Theorem 2, p. 330), #8: a (Theorem 2, p. 330)

Section 8.2 #11

a) Since $A$ is symmetric, its condition number can be computed from its eigenvalues. Note that

$$\det(A - \lambda I) = \begin{vmatrix} -2 - \lambda & 1 & 0 \\ 1 & -2 - \lambda & 1 \\ 0 & 1 & -2 - \lambda \end{vmatrix} = (-2 - \lambda)((2 + \lambda)^2 - 1) + (2 + \lambda) = (2 + \lambda)(2 - (2 + \lambda)^2).$$

Thus, the eigenvalues of $A$ in order of decreasing magnitude are

$$\lambda_1 = -\sqrt{2} - 2, \qquad \lambda_2 = -2, \qquad \lambda_3 = \sqrt{2} - 2,$$

and so

$$\kappa(A) = \frac{|\lambda_1|}{|\lambda_3|} = \frac{\sqrt{2} + 2}{2 - \sqrt{2}} \approx 5.828.$$

b) Since $A$ is not symmetric, its condition number must be calculated from the eigenvalues of $A^T A$ (or singular values of $A$). Note that

$$\det(A^T A - \lambda I) = \begin{vmatrix} 1 - \lambda & 1 & 1 \\ 1 & 2 - \lambda & 1 \\ 1 & 1 & 2 - \lambda \end{vmatrix} = (1 - \lambda)((2 - \lambda)^2 - 1) - [(2 - \lambda) - 1] + [1 - (2 - \lambda)] = (1 - \lambda)[(2 - \lambda)^2 - 3].$$

Thus, the eigenvalues of $A^T A$ in order of decreasing magnitude are

$$\lambda_1 = 2 + \sqrt{3}, \qquad \lambda_2 = 1, \qquad \lambda_3 = 2 - \sqrt{3}.$$

The singular values of $A$ in decreasing order are $\sigma_i = \sqrt{\lambda_i}$, $i = 1, 2, 3$. Thus,

$$\kappa(A) = \frac{\sigma_1}{\sigma_3} = \frac{\sqrt{2 + \sqrt{3}}}{\sqrt{2 - \sqrt{3}}} \approx 3.732.$$

Section 8.2 Computer problems #2,7

See scripts online.

Section 8.3 #8,12,13

#8: d (Theorem 1, p. 345), #12: e (Gershgorin's theorem states that all eigenvalues must be in the union of the intervals listed), #13: True (the $i$th inequality is equivalent to $\lambda \in C_i(a_{ii}, r_i)$, and every eigenvalue must be in at least one such disk).

Section 8.4 #2

Under appropriate assumptions on the inputs (including that $A$ does not have the eigenvalue zero), the output will be $r \approx 1/\lambda_n$, with $\lambda_n$ the smallest (in magnitude) eigenvalue of $A$, and $x$ will be an approximation of the eigenvector corresponding to $\lambda_n$.

Section 8.4 #4,5,6

We use the power method with $\varphi(x) = u^T x$, $u = (1, 0, 0)$.

In #4, five iterations give

$$\lambda^{(5)} = 17/5, \qquad x^{(5)} = \begin{pmatrix} 17/24 \\ -1 \\ 17/24 \end{pmatrix}.$$

The aim is to estimate the dominant eigenvalue of $A$ and the corresponding eigenvector,

$$\lambda^{(5)} \approx \lambda = 2 + \sqrt{2}, \qquad x^{(5)} \approx x = \begin{pmatrix} 1/\sqrt{2} \\ -1 \\ 1/\sqrt{2} \end{pmatrix}.$$

In #5, five iterations give

$$\lambda^{(5)} = -99/29, \qquad x^{(5)} = \begin{pmatrix} 99/140 \\ 1 \\ 99/140 \end{pmatrix}.$$

The aim is to estimate the eigenvalue of $A$ furthest from 4 and the corresponding eigenvector,

$$\lambda^{(5)} + 4 \approx \lambda = 2 - \sqrt{2}, \qquad x^{(5)} \approx x = \begin{pmatrix} 1/\sqrt{2} \\ 1 \\ 1/\sqrt{2} \end{pmatrix}.$$

In #6, five iterations give

$$\lambda^{(5)} = 99/58, \qquad x^{(5)} = \begin{pmatrix} 99/140 \\ 1 \\ 99/140 \end{pmatrix}.$$

The aim is to estimate the smallest eigenvalue of $A$ and the corresponding eigenvector,

$$1/\lambda^{(5)} \approx \lambda = 2 - \sqrt{2}, \qquad x^{(5)} \approx x = \begin{pmatrix} 1/\sqrt{2} \\ 1 \\ 1/\sqrt{2} \end{pmatrix}.$$

Section 8.4 Computer problems #4

See script online.
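The power iteration in Section 8.4 #4 can be sketched in exact rational arithmetic. The matrix `A` and the starting vector `x0` below are assumptions: the solutions above do not restate them, and they were chosen because this tridiagonal matrix has eigenvalues $2 \pm \sqrt{2}$ and $2$, consistent with the limits quoted. The function name `power_method` is illustrative and is not one of the course scripts.

```python
from fractions import Fraction


def power_method(A, x, steps):
    """Power method with phi(x) = u^T x, u = (1, 0, 0), and max-norm scaling.

    Returns the last eigenvalue estimate r and the last normalized vector x.
    """
    r = None
    for _ in range(steps):
        # y = A x
        y = [sum(a * xj for a, xj in zip(row, x)) for row in A]
        # r = phi(y) / phi(x), where phi picks out the first component
        r = y[0] / x[0]
        # Scale so that the largest entry has magnitude 1
        norm = max(abs(c) for c in y)
        x = [c / norm for c in y]
    return r, x


# Assumed data (not stated in the solutions): a symmetric tridiagonal matrix
# with eigenvalues 2 - sqrt(2), 2, 2 + sqrt(2), and the all-ones start vector.
A = [[Fraction(v) for v in row] for row in [[2, -1, 0], [-1, 2, -1], [0, -1, 2]]]
x0 = [Fraction(1), Fraction(1), Fraction(1)]

r, x = power_method(A, x0, 5)
print("lambda(5) =", r)
print("x(5) =", x)
```

With these assumed inputs, five iterations give $\lambda^{(5)} = 17/5$ and $x^{(5)} = (17/24, -1, 17/24)$, the values reported for #4. Applying the same routine to $A - 4I$, or replacing the product $Ax$ by a linear solve, would correspond to the shifted and inverse variants used in #5 and #6.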