Math 151 Homework Solutions: Probability & Statistics


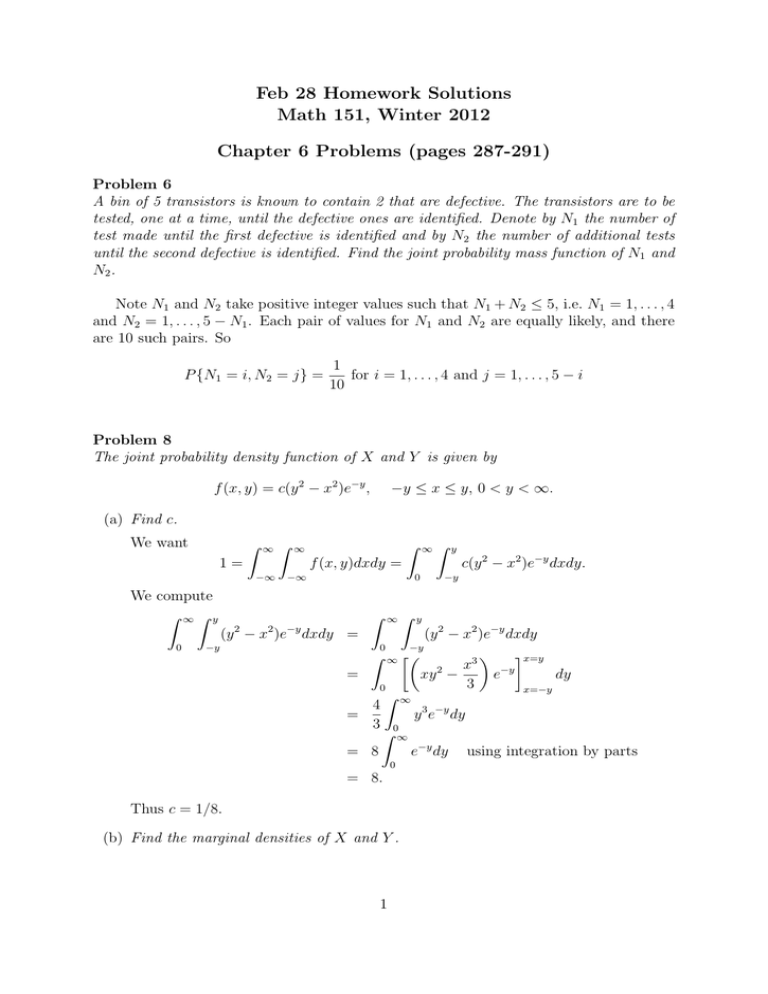

Feb 28 Homework Solutions

Math 151, Winter 2012

Chapter 6 Problems (pages 287-291)

Problem 6

A bin of 5 transistors is known to contain 2 that are defective. The transistors are to be tested, one at a time, until the defective ones are identified. Denote by $N_1$ the number of tests made until the first defective is identified and by $N_2$ the number of additional tests until the second defective is identified. Find the joint probability mass function of $N_1$ and $N_2$.

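Note that $N_1$ and $N_2$ take positive integer values such that $N_1 + N_2 \le 5$, i.e. $N_1 = 1, \dots, 4$ and $N_2 = 1, \dots, 5 - N_1$. Each pair of values for $(N_1, N_2)$ is equally likely, because the two defectives are equally likely to occupy any of the $\binom{5}{2} = 10$ pairs of positions in the testing order, and there are 10 such pairs. So

$$P\{N_1 = i, N_2 = j\} = \frac{1}{10} \quad \text{for } i = 1, \dots, 4 \text{ and } j = 1, \dots, 5 - i.$$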
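As a sanity check on the counting argument, the joint pmf can be enumerated directly. This is a minimal sketch, assuming the two defectives are equally likely to sit at any pair of positions in the testing order; the function name `joint_pmf` is illustrative and not part of the original solution.

```python
from fractions import Fraction
from itertools import combinations

def joint_pmf(total=5):
    """Enumerate the equally likely positions of the two defectives in the test order."""
    placements = list(combinations(range(1, total + 1), 2))
    pmf = {}
    for first, second in placements:
        # N1 = position of the first defective, N2 = additional tests until the second.
        key = (first, second - first)
        pmf[key] = pmf.get(key, 0) + Fraction(1, len(placements))
    return pmf

pmf = joint_pmf()
for (i, j), p in sorted(pmf.items()):
    print(f"P(N1={i}, N2={j}) = {p}")
```

Each of the 10 admissible pairs $(i, j)$ with $i + j \le 5$ appears exactly once in the enumeration.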

Problem 8

The joint probability density function of X and Y is given by

$$f(x, y) = c\,(y^2 - x^2)\,e^{-y}, \qquad -y \le x \le y,\ 0 < y < \infty.$$

(a) Find c.

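We want

$$1 = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x, y)\,dx\,dy = \int_{0}^{\infty}\int_{-y}^{y} c\,(y^2 - x^2)\,e^{-y}\,dx\,dy.$$

We compute

$$\begin{aligned}
\int_{0}^{\infty}\int_{-y}^{y} (y^2 - x^2)\,e^{-y}\,dx\,dy
&= \int_{0}^{\infty} \left[ x y^2 - \frac{x^3}{3} \right]_{x=-y}^{x=y} e^{-y}\,dy \\
&= \frac{4}{3}\int_{0}^{\infty} y^3 e^{-y}\,dy \\
&= 8 \int_{0}^{\infty} e^{-y}\,dy \quad \text{using integration by parts (three times)} \\
&= 8.
\end{aligned}$$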

Thus c = 1/8.

(b) Find the marginal densities of X and Y .


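The marginal probability density function of $X$ is

$$\begin{aligned}
f_X(x) &= \int_{-\infty}^{\infty} f(x, y)\,dy \\
&= \int_{|x|}^{\infty} \frac{1}{8}(y^2 - x^2)\,e^{-y}\,dy \\
&= \int_{|x|}^{\infty} \frac{1}{4}\,y\,e^{-y}\,dy \quad \text{using integration by parts} \\
&= \frac{1}{4}|x|e^{-|x|} + \int_{|x|}^{\infty} \frac{1}{4}\,e^{-y}\,dy \quad \text{using integration by parts} \\
&= \frac{1}{4}|x|e^{-|x|} + \frac{1}{4}e^{-|x|} \\
&= \frac{1}{4}e^{-|x|}\left(|x| + 1\right).
\end{aligned}$$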

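Let $f_Y$ be the marginal probability density function of $Y$. For $y < 0$ we have $f_Y(y) = 0$, and for $y \ge 0$ we have

$$\begin{aligned}
f_Y(y) &= \int_{-\infty}^{\infty} f(x, y)\,dx \\
&= \frac{1}{8}\int_{-y}^{y} (y^2 - x^2)\,e^{-y}\,dx \\
&= \frac{1}{8}\left[ x y^2 - \frac{x^3}{3} \right]_{x=-y}^{x=y} e^{-y} \\
&= \frac{1}{6}\,y^3 e^{-y}.
\end{aligned}$$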

(c) Find E[X].

$$E[X] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x f(x, y)\,dx\,dy = \frac{1}{8}\int_{0}^{\infty}\int_{-y}^{y} x\,(y^2 - x^2)\,e^{-y}\,dx\,dy = 0,$$

since $x(y^2 - x^2)e^{-y}$ is an odd function of $x$.
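The closed forms in Problem 8 can be checked numerically. The sketch below is only an illustration: the helper `integrate` is a plain midpoint rule, the infinite upper limit is truncated at an assumed cutoff of 60, and all function names are hypothetical.

```python
import math

def integrate(g, lo, hi, steps=20000):
    """Midpoint-rule approximation of the integral of g over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(g(lo + (k + 0.5) * h) for k in range(steps))

# (a) The normalizing integral reduces to (4/3) * integral of y^3 e^{-y} = (4/3) * Gamma(4).
normalizer = (4 / 3) * math.gamma(4)
c = 1 / normalizer

def f_X_closed(x):
    return 0.25 * math.exp(-abs(x)) * (abs(x) + 1)

def f_X_numeric(x, upper=60.0):
    # Truncate the infinite upper limit at `upper`; the tail beyond it is negligible.
    return integrate(lambda y: c * (y * y - x * x) * math.exp(-y), abs(x), upper)

def f_Y_closed(y):
    return y ** 3 * math.exp(-y) / 6 if y >= 0 else 0.0

def f_Y_numeric(y):
    return integrate(lambda x: c * (y * y - x * x) * math.exp(-y), -y, y)

print(normalizer, f_X_numeric(1.5), f_X_closed(1.5), f_Y_numeric(2.0), f_Y_closed(2.0))
```

Agreement between the numeric and closed-form values at a few test points is consistent with $c = 1/8$ and with both marginal densities.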

Problem 10

The joint probability density function of X and Y is given by

$$f(x, y) = e^{-x-y}, \qquad 0 \le x < \infty,\ 0 \le y < \infty.$$

(a) Find P {X < Y }.

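$$\begin{aligned}
P\{X < Y\} &= \int_{0}^{\infty}\int_{x}^{\infty} f(x, y)\,dy\,dx = \int_{0}^{\infty}\int_{x}^{\infty} e^{-x-y}\,dy\,dx = \int_{0}^{\infty} \left[ -e^{-x-y} \right]_{y=x}^{y=\infty} dx \\
&= \int_{0}^{\infty} e^{-2x}\,dx = \left[ -\frac{1}{2} e^{-2x} \right]_{x=0}^{x=\infty} = \frac{1}{2}.
\end{aligned}$$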

(b) Find P {X < a}.

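$$\begin{aligned}
P\{X < a\} &= \int_{0}^{a}\int_{0}^{\infty} f(x, y)\,dy\,dx = \int_{0}^{a}\int_{0}^{\infty} e^{-x-y}\,dy\,dx = \int_{0}^{a} \left[ -e^{-x-y} \right]_{y=0}^{y=\infty} dx \\
&= \int_{0}^{a} e^{-x}\,dx = \left[ -e^{-x} \right]_{x=0}^{x=a} = 1 - e^{-a}.
\end{aligned}$$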
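Because the density $e^{-x-y}$ factors as $e^{-x} \cdot e^{-y}$, $X$ and $Y$ behave as independent rate-1 exponential variables, so both answers can be checked by simulation. This is a rough sketch; the function name `simulate`, the seed, and the trial count are arbitrary choices made for illustration.

```python
import math
import random

def simulate(a=1.0, trials=200_000, seed=0):
    """Estimate P{X < Y} and P{X < a} for independent Exp(1) variables X and Y."""
    rng = random.Random(seed)
    less_than_y = 0
    less_than_a = 0
    for _ in range(trials):
        x = rng.expovariate(1.0)
        y = rng.expovariate(1.0)
        less_than_y += x < y
        less_than_a += x < a
    return less_than_y / trials, less_than_a / trials

p_xy, p_xa = simulate()
print("P{X < Y}: simulated", p_xy, "exact", 0.5)
print("P{X < 1}: simulated", p_xa, "exact", 1 - math.exp(-1.0))
```

The estimates should land within sampling error of $1/2$ and $1 - e^{-a}$.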

Problem 15

The random vector (X, Y ) is said to be uniformly distributed over a region R in the plane

if, for some constant c, its joint density is

$$f(x, y) = \begin{cases} c & \text{if } (x, y) \in R, \\ 0 & \text{otherwise.} \end{cases}$$

(a) Show that 1/c = area of region R.

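Let $\mathbb{R}^2$ be the 2-dimensional plane. Observe that for $E \subseteq \mathbb{R}^2$,

$$P\{(X, Y) \in E\} = \iint_{E} f(x, y)\,dx\,dy = \iint_{E \cap R} c\,dx\,dy = c \cdot \operatorname{area}(E \cap R).$$

Since $f(x, y)$ is a joint density function, we have

$$1 = P\{(X, Y) \in \mathbb{R}^2\} = c \cdot \operatorname{area}(\mathbb{R}^2 \cap R) = c \cdot \operatorname{area}(R).$$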

So the area of R is 1/c.

(b) Suppose that (X, Y ) is uniformly distributed over the square centered at (0, 0) and

with sides of length 2. Show that X and Y are independent, with each being distributed uniformly over (−1, 1).

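Let $\mathbb{R}$ be the real line. For sets $A, B \subseteq \mathbb{R}$, we have

$$\begin{aligned}
P\{X \in A, Y \in B\} &= \int_{B}\int_{A} f(x, y)\,dx\,dy = \int_{B \cap [-1,1]}\int_{A \cap [-1,1]} \frac{1}{4}\,dx\,dy \\
&= \frac{1}{4}\operatorname{length}(A \cap [-1, 1])\,\operatorname{length}(B \cap [-1, 1])
\end{aligned}$$

and

$$P\{X \in A\} = \int_{-\infty}^{\infty}\int_{A} f(x, y)\,dx\,dy = \int_{-1}^{1}\int_{A \cap [-1,1]} \frac{1}{4}\,dx\,dy = \frac{1}{2}\operatorname{length}(A \cap [-1, 1])$$

and

$$P\{Y \in B\} = \int_{B}\int_{-\infty}^{\infty} f(x, y)\,dx\,dy = \int_{B \cap [-1,1]}\int_{-1}^{1} \frac{1}{4}\,dx\,dy = \frac{1}{2}\operatorname{length}(B \cap [-1, 1]).$$

Thus

$$P\{X \in A, Y \in B\} = \frac{1}{4}\operatorname{length}(A \cap [-1, 1])\,\operatorname{length}(B \cap [-1, 1]) = P\{X \in A\}\,P\{Y \in B\},$$

so $X$ and $Y$ are independent. Also, our formulas for $P\{X \in A\}$ and $P\{Y \in B\}$ show that each of $X$ and $Y$ is uniformly distributed over $(-1, 1)$.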


(c) What is the probability that (X, Y ) lies in the circle of radius 1 centered at the

origin? That is, find $P\{X^2 + Y^2 \le 1\}$.

Let C denote the interior of the circle of radius 1 centered at the origin. We want

to compute P {(X, Y ) ∈ C}. We have

$$P\{(X, Y) \in C\} = \frac{1}{4} \cdot \operatorname{area}(C \cap R) = \frac{1}{4} \cdot \operatorname{area}(C) = \frac{1}{4} \cdot \pi = \frac{\pi}{4}.$$
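The area argument can be illustrated by sampling points uniformly from the square and counting how many fall in the unit disc. This is only a sketch; `estimate_disc_probability`, the seed, and the trial count are illustrative choices.

```python
import math
import random

def estimate_disc_probability(trials=200_000, seed=1):
    """Fraction of uniform points in [-1, 1] x [-1, 1] that satisfy x^2 + y^2 <= 1."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        hits += x * x + y * y <= 1.0
    return hits / trials

estimate = estimate_disc_probability()
print("simulated", estimate, "exact", math.pi / 4)
```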

Problem 16

Suppose that n points are independently chosen at random on the circumference of a

circle, and we want the probability that they all lie in some semicircle. That is, we want

the probability that there is a line passing through the center of the circle such that all the

points are on one side of that line. Let $P_1, \dots, P_n$ denote the $n$ points. Let $A$ denote the event that all the points are contained in some semicircle, and let $A_i$ be the event that all the points lie in the semicircle beginning at the point $P_i$ and going clockwise for 180°, $i = 1, \dots, n$.

(a) Express A in terms of the Ai .

It's clear that $A_i \subset A$ for each $i$. Hence $\bigcup_{i=1}^{n} A_i \subseteq A$. Now we want to show the reverse inclusion. If $A$ occurs, then we can rotate the semicircle clockwise until it starts at one of the points $P_i$, showing that $A_i$ and thus $\bigcup_{i=1}^{n} A_i$ occurs. Hence $A \subseteq \bigcup_{i=1}^{n} A_i$. So we've shown that $A = \bigcup_{i=1}^{n} A_i$.

(b) Are the Ai mutually exclusive?

Almost, but not quite. Strictly speaking, $A_i$ and $A_j$ are not mutually exclusive: if $P_i = P_j$, the other $P_k$ can lie in the semicircle beginning at $P_i = P_j$ and going clockwise for 180 degrees, in which case both $A_i$ and $A_j$ occur. However, if $P_i \ne P_j$, then $A_i$ and $A_j$ cannot both occur, since if $P_j$ is in the semicircle starting at $P_i$ and going clockwise 180 degrees, then $P_i$ is not in the semicircle starting at $P_j$ and going clockwise 180 degrees. So $A_i A_j \subseteq \{P_i = P_j\}$. Moreover $P\{P_i = P_j\} = 0$, so $P(A_i A_j) = 0$.

(c) Find P (A).

We argued in part (b) that $P(A_i A_j) = 0$. It follows that $P(A_i A_j A_k) = 0$, and the same is true for all higher order intersections.

We have

$$\begin{aligned}
P(A) &= P\Big( \bigcup_{i=1}^{n} A_i \Big) \\
&= \sum_{i=1}^{n} P(A_i) - \sum_{i<j} P(A_i A_j) + \sum_{i<j<k} P(A_i A_j A_k) - \cdots \quad \text{by the inclusion-exclusion identity} \\
&= \sum_{i=1}^{n} P(A_i) \quad \text{since all other terms are zero.}
\end{aligned}$$


Fix $i \in \{1, \dots, n\}$ and fix a randomly chosen $P_i$. For $j \ne i$, the probability that the randomly chosen point $P_j$ is in the semicircle starting at $P_i$ and going clockwise 180 degrees is $1/2$. So $P(A_i) = (1/2)^{n-1}$. Therefore

$$P(A) = \sum_{i=1}^{n} P(A_i) = n\,(1/2)^{n-1}.$$
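The formula $n(1/2)^{n-1}$ can be compared with a simulation. The sketch below uses a different but equivalent test for the event $A$: the points fit in some semicircle exactly when the largest empty arc between consecutive points is at least $\pi$. The function names, seed, and trial count are assumptions made for illustration.

```python
import math
import random

def all_in_semicircle(angles):
    """True if some half-circle contains every angle (angles in [0, 2*pi))."""
    ordered = sorted(angles)
    n = len(ordered)
    # Gaps between consecutive points going around the circle; the last entry wraps around.
    gaps = [ordered[(k + 1) % n] - ordered[k] for k in range(n)]
    gaps[-1] += 2 * math.pi
    # The points fit in a semicircle exactly when some empty arc spans at least pi.
    return max(gaps) >= math.pi

def estimate_semicircle_probability(n, trials=100_000, seed=11):
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        angles = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]
        hits += all_in_semicircle(angles)
    return hits / trials

for n in (3, 5):
    print(n, estimate_semicircle_probability(n), n * 0.5 ** (n - 1))
```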

Problem 18

Two points are selected randomly on a line of length L so as to be on opposite sides of the

midpoint of the line. [In other words, the two points X and Y are independent random

variables such that X is uniformly distributed over (0, L/2) and Y is uniformly distributed

over (L/2, L).] Find the probability that the distance between the two points is greater

than L/3.

Note that X and Y are jointly distributed with joint probability density function

given by

$$f(x, y) = \begin{cases} \dfrac{4}{L^2} & 0 \le x \le \frac{L}{2} \text{ and } \frac{L}{2} \le y \le L, \\ 0 & \text{otherwise.} \end{cases}$$

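Hence the probability that the distance between the two points is greater than $L/3$ is equal to

$$\begin{aligned}
P(Y - X > L/3) &= \iint_{y - x > L/3} f(x, y)\,dy\,dx \\
&= \int_{0}^{L/2}\int_{\max\{L/2,\,L/3 + x\}}^{L} \frac{4}{L^2}\,dy\,dx \\
&= \int_{0}^{L/6}\int_{L/2}^{L} \frac{4}{L^2}\,dy\,dx + \int_{L/6}^{L/2}\int_{L/3 + x}^{L} \frac{4}{L^2}\,dy\,dx \\
&= \int_{0}^{L/6} \frac{4}{L^2}\,(L - L/2)\,dx + \int_{L/6}^{L/2} \frac{4}{L^2}\,\big(L - (L/3 + x)\big)\,dx \\
&= \int_{0}^{L/6} \frac{2}{L}\,dx + \int_{L/6}^{L/2} \left( \frac{8}{3L} - \frac{4}{L^2}x \right) dx \\
&= \frac{2}{L}(L/6 - 0) + \left[ \frac{8}{3L}x - \frac{2}{L^2}x^2 \right]_{L/6}^{L/2} \\
&= \frac{1}{3} + \left( \frac{4}{3} - \frac{1}{2} \right) - \left( \frac{4}{9} - \frac{1}{18} \right) \\
&= \frac{7}{9}.
\end{aligned}$$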

Note: it is much easier to find the limits for the integrals above if you first draw a picture of the region in the plane where $0 \le x \le L/2$, where $L/2 \le y \le L$, and where $y - x > L/3$. Alternatively, after drawing this region in the plane, you can compare the area of this region to the area of the larger rectangle given by $0 \le x \le L/2$ and $L/2 \le y \le L$ to see that the desired probability is $7/9$.
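The area comparison mentioned in the note can be made concrete. The sketch below assumes $L = 1$ (the probability does not depend on $L$) and uses hypothetical helper names; the first function compares areas exactly, and the second approximates the same ratio on a midpoint grid.

```python
from fractions import Fraction

def probability_by_area():
    """Exact area ratio for L = 1."""
    L = Fraction(1)
    rectangle = (L / 2) * (L / 2)  # 0 <= x <= L/2, L/2 <= y <= L
    # The excluded region y - x <= L/3 is a right triangle with vertices
    # (L/6, L/2), (L/2, L/2), (L/2, 5L/6), so both legs have length L/3.
    triangle = Fraction(1, 2) * (L / 3) * (L / 3)
    return (rectangle - triangle) / rectangle

def probability_by_grid(n=400):
    """Fraction of midpoint-grid cells of the rectangle (L = 1) where y - x > 1/3."""
    count = 0
    for i in range(n):
        x = (i + 0.5) / (2 * n)  # midpoints in (0, 1/2)
        for j in range(n):
            y = 0.5 + (j + 0.5) / (2 * n)  # midpoints in (1/2, 1)
            count += (y - x) > 1 / 3
    return count / (n * n)

print(probability_by_area(), probability_by_grid())
```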


Problem 23

The random variables X and Y have joint density function

$$f(x, y) = 12xy(1 - x), \qquad 0 < x < 1,\ 0 < y < 1,$$

and equal to zero otherwise.

(a) Are X and Y independent?

Let R be the real line. Let A, B ⊆ R. Without loss of generality, we may assume

that A, B ⊆ (0, 1) since f is zero outside this range. We have

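$$\begin{aligned}
P\{X \in A\} &= \int_{-\infty}^{\infty}\int_{A} f(x, y)\,dx\,dy = \int_{0}^{1}\int_{A} 12xy(1 - x)\,dx\,dy \\
&= 12 \int_{A} x(1 - x)\,dx \cdot \int_{0}^{1} y\,dy = 12 \int_{A} x(1 - x)\,dx \cdot \frac{1}{2} \\
&= 6 \int_{A} x(1 - x)\,dx
\end{aligned}$$

and

$$\begin{aligned}
P\{Y \in B\} &= \int_{B}\int_{-\infty}^{\infty} f(x, y)\,dx\,dy = \int_{B}\int_{0}^{1} 12xy(1 - x)\,dx\,dy \\
&= 12 \int_{0}^{1} x(1 - x)\,dx \cdot \int_{B} y\,dy = 12 \cdot \frac{1}{6} \cdot \int_{B} y\,dy = 2 \int_{B} y\,dy
\end{aligned}$$

and

$$\begin{aligned}
P\{X \in A, Y \in B\} &= \int_{B}\int_{A} f(x, y)\,dx\,dy = \int_{B}\int_{A} 12xy(1 - x)\,dx\,dy \\
&= 6 \int_{A} x(1 - x)\,dx \cdot 2 \int_{B} y\,dy = P\{X \in A\} \cdot P\{Y \in B\}.
\end{aligned}$$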

Since $P\{X \in A, Y \in B\} = P\{X \in A\} \cdot P\{Y \in B\}$, this means that $X$ and $Y$ are independent. Note that we've also shown that $f_X(x) = 6x(1 - x)$ and $f_Y(y) = 2y$ on $(0, 1)$, and we will use this information in the remaining parts of this problem.

(b) Find E[X].

$$E[X] = \int_{-\infty}^{\infty} x f_X(x)\,dx = \int_{0}^{1} 6x^2(1 - x)\,dx = \left[ 2x^3 - \frac{3x^4}{2} \right]_{0}^{1} = \frac{1}{2}.$$

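(c) Find E[Y].

$$E[Y] = \int_{-\infty}^{\infty} y f_Y(y)\,dy = \int_{0}^{1} 2y^2\,dy = \left[ \frac{2y^3}{3} \right]_{0}^{1} = \frac{2}{3}.$$

(d) Find Var(X).

$$E[X^2] = \int_{-\infty}^{\infty} x^2 f_X(x)\,dx = \int_{0}^{1} 6x^3(1 - x)\,dx = \left[ \frac{3x^4}{2} - \frac{6x^5}{5} \right]_{0}^{1} = \frac{3}{10},$$

so

$$\operatorname{Var}(X) = E[X^2] - E[X]^2 = \frac{3}{10} - \left( \frac{1}{2} \right)^2 = \frac{1}{20}.$$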


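(e) Find Var(Y).

$$E[Y^2] = \int_{-\infty}^{\infty} y^2 f_Y(y)\,dy = \int_{0}^{1} 2y^3\,dy = \left[ \frac{y^4}{2} \right]_{0}^{1} = \frac{1}{2},$$

so

$$\operatorname{Var}(Y) = E[Y^2] - E[Y]^2 = \frac{1}{2} - \left( \frac{2}{3} \right)^2 = \frac{1}{18}.$$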
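The moments in parts (b) through (e) are integrals of polynomials over $(0, 1)$, so they can be reproduced with exact rational arithmetic. This is a small sketch; the coefficient-list representation and the helper names `integrate_poly` and `moment` are illustrative choices.

```python
from fractions import Fraction

def integrate_poly(coeffs):
    """Integrate sum(coeffs[k] * t**k) over [0, 1] exactly."""
    return sum(Fraction(c, k + 1) for k, c in enumerate(coeffs))

def moment(density, order):
    """E[T**order] for a density given by polynomial coefficients on (0, 1)."""
    shifted = [0] * order + list(density)  # multiply the density by t**order
    return integrate_poly(shifted)

f_X = [0, 6, -6]  # 6x(1 - x) = 6x - 6x^2
f_Y = [0, 2]      # 2y

mean_X, mean_Y = moment(f_X, 1), moment(f_Y, 1)
var_X = moment(f_X, 2) - mean_X ** 2
var_Y = moment(f_Y, 2) - mean_Y ** 2
print(mean_X, mean_Y, var_X, var_Y)
```

The zeroth moment of each coefficient list equals 1, which confirms that both marginals are valid densities.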

Problem 26

Suppose that A, B, C are independent random variables, each being uniformly distributed

over (0, 1).

(a) What is the joint cumulative distribution function of A, B, C?

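By independence, the joint probability density function is

$$f(a, b, c) = f_A(a) f_B(b) f_C(c) = \begin{cases} 1 & \text{if } 0 < a, b, c < 1, \\ 0 & \text{otherwise.} \end{cases}$$

Integrating this density, the joint cumulative distribution function is, for $0 \le a, b, c \le 1$,

$$F(a, b, c) = P\{A \le a, B \le b, C \le c\} = abc.$$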

(b) What is the probability that all the roots of the equation $Ax^2 + Bx + C = 0$ are real?

Recall that the roots of $Ax^2 + Bx + C = 0$ are real if $B^2 - 4AC \ge 0$, which occurs with probability

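$$\begin{aligned}
\int_{0}^{1}\int_{0}^{1}\int_{\min\{1,\sqrt{4ac}\}}^{1} 1\,db\,dc\,da
&= \int_{0}^{1}\int_{0}^{\min\{1,\,1/(4a)\}}\int_{\sqrt{4ac}}^{1} 1\,db\,dc\,da \\
&= \int_{0}^{1/4}\int_{0}^{1}\int_{\sqrt{4ac}}^{1} 1\,db\,dc\,da + \int_{1/4}^{1}\int_{0}^{1/(4a)}\int_{\sqrt{4ac}}^{1} 1\,db\,dc\,da \\
&= \int_{0}^{1/4}\int_{0}^{1} \left( 1 - 2a^{1/2}c^{1/2} \right) dc\,da + \int_{1/4}^{1}\int_{0}^{1/(4a)} \left( 1 - 2a^{1/2}c^{1/2} \right) dc\,da \\
&= \int_{0}^{1/4} \left[ c - \tfrac{4}{3}a^{1/2}c^{3/2} \right]_{c=0}^{c=1} da + \int_{1/4}^{1} \left[ c - \tfrac{4}{3}a^{1/2}c^{3/2} \right]_{c=0}^{c=1/(4a)} da \\
&= \int_{0}^{1/4} \left( 1 - \tfrac{4}{3}a^{1/2} \right) da + \int_{1/4}^{1} \tfrac{1}{12}a^{-1}\,da \\
&= \left[ a - \tfrac{8}{9}a^{3/2} \right]_{a=0}^{a=1/4} + \left[ \tfrac{1}{12}\log(a) \right]_{a=1/4}^{a=1} \\
&= \frac{5}{36} + \frac{1}{12}\log(4).
\end{aligned}$$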
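The value $5/36 + \frac{1}{12}\log 4 \approx 0.2544$ can be compared with a direct simulation of the discriminant condition. This is a rough sketch with an arbitrary seed and trial count; `estimate_real_roots` is a hypothetical name.

```python
import math
import random

def estimate_real_roots(trials=200_000, seed=2):
    """Estimate P{B^2 - 4AC >= 0} for independent Uniform(0, 1) coefficients."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        a, b, c = rng.random(), rng.random(), rng.random()
        hits += b * b - 4 * a * c >= 0
    return hits / trials

exact = 5 / 36 + math.log(4) / 12
estimate = estimate_real_roots()
print("simulated", estimate, "exact", exact)
```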

Section 6 Theoretical Exercises (pages 291-293)

Problem 4

Solve Buffon’s needle problem when L > D.

See page 243 for the version of the problem where $L \le D$. What is new for $L > D$ is that now $X < \min\{D/2, (L/2)\cos\theta\}$. This does not reduce to $X < (L/2)\cos\theta$ as it did before. Let $\phi$ be the angle such that $D/2 = (L/2)\cos\phi$, that is, $\cos\phi = D/L$ (note we are using slightly different notation from the text). Then

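$$\begin{aligned}
P\left\{ X < \frac{L}{2}\cos\theta \right\} &= \iint_{x < (L/2)\cos y} f_X(x) f_\theta(y)\,dx\,dy \\
&= \frac{4}{\pi D}\int_{0}^{\pi/2}\int_{0}^{\min\{D/2,\,(L/2)\cos y\}} dx\,dy \\
&= \frac{4}{\pi D}\int_{0}^{\phi}\int_{0}^{D/2} dx\,dy + \frac{4}{\pi D}\int_{\phi}^{\pi/2}\int_{0}^{(L/2)\cos y} dx\,dy \\
&= \frac{4}{\pi D} \cdot \frac{\phi D}{2} + \frac{4}{\pi D}\int_{\phi}^{\pi/2} \frac{L}{2}\cos y\,dy \\
&= \frac{2\phi}{\pi} + \frac{2L}{\pi D}(1 - \sin\phi),
\end{aligned}$$

where $\phi$ is the angle such that $\cos\phi = D/L$.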
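The closed form can be checked against a simulation of the setup used in the integral: $X$ uniform on $(0, D/2)$ and $\theta$ uniform on $(0, \pi/2)$, independent, with a crossing when $X < (L/2)\cos\theta$. The sketch below rests on that assumed setup, and its function names, seed, and trial count are illustrative.

```python
import math
import random

def crossing_probability(L, D):
    """Closed form from the derivation above, for L >= D."""
    phi = math.acos(D / L)
    return 2 * phi / math.pi + (2 * L / (math.pi * D)) * (1 - math.sin(phi))

def simulate_crossing(L, D, trials=200_000, seed=3):
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x = rng.uniform(0.0, D / 2)            # distance from needle midpoint to nearest line
        theta = rng.uniform(0.0, math.pi / 2)  # angle appearing in the crossing condition
        hits += x < (L / 2) * math.cos(theta)
    return hits / trials

print(simulate_crossing(2.0, 1.0), crossing_probability(2.0, 1.0))
```

At $L = D$ the angle $\phi$ is 0 and the expression reduces to $2L/(\pi D) = 2/\pi$, the value of the $L \le D$ formula at $L = D$.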

Problem 5

If X and Y are independent continuous positive random variables, express the density

function of (a) Z = X/Y and (b) Z = XY in terms of the density functions of X and

Y . Evaluate the density functions in the special case where X and Y are both exponential

random variables.

(a) Z = X/Y .

Note $f_Z(z) = 0$ for $z \le 0$, since $X$ and $Y$ are both positive. For $z > 0$, we compute

$$P\{Z < z\} = P\{X/Y < z\} = P\{X < zY\} = \int_{0}^{\infty}\int_{-\infty}^{zy} f_X(x) f_Y(y)\,dx\,dy,$$

so

$$f_Z(z) = \frac{d}{dz}P\{Z < z\} = \int_{0}^{\infty} \frac{d}{dz}\int_{-\infty}^{zy} f_X(x) f_Y(y)\,dx\,dy = \int_{0}^{\infty} y f_X(zy) f_Y(y)\,dy.$$

(b) Z = XY .

Note $f_Z(z) = 0$ for $z \le 0$, since $X$ and $Y$ are both positive. For $z > 0$, we compute

$$P\{Z < z\} = P\{XY < z\} = P\{X < z/Y\} = \int_{0}^{\infty}\int_{-\infty}^{z/y} f_X(x) f_Y(y)\,dx\,dy,$$

so

$$f_Z(z) = \frac{d}{dz}P\{Z < z\} = \int_{0}^{\infty} \frac{d}{dz}\int_{-\infty}^{z/y} f_X(x) f_Y(y)\,dx\,dy = \int_{0}^{\infty} \frac{1}{y} f_X(z/y) f_Y(y)\,dy.$$

Now, let X and Y be exponential random variables with parameters λ and µ,

respectively. We can now evaluate the density functions a bit more explicitly.


(a) Z = X/Y .

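$$\begin{aligned}
f_Z(z) &= \int_{0}^{\infty} y f_X(zy) f_Y(y)\,dy = \int_{0}^{\infty} \lambda\mu\,y\,e^{-\lambda z y} e^{-\mu y}\,dy = \int_{0}^{\infty} \lambda\mu\,y\,e^{-(\lambda z + \mu)y}\,dy \\
&= \frac{\lambda\mu}{\lambda z + \mu}\int_{0}^{\infty} e^{-(\lambda z + \mu)y}\,dy \quad \text{after integrating by parts} \\
&= \frac{\lambda\mu}{(\lambda z + \mu)^2}.
\end{aligned}$$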
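Integrating the density $\lambda\mu/(\lambda t + \mu)^2$ over $0 < t < z$ gives $P\{X/Y < z\} = \lambda z/(\lambda z + \mu)$, which is easy to compare with a simulation. This is only a sketch: the function names, the parameter values $\lambda = 2$, $\mu = 3$, the seed, and the trial count are all arbitrary choices for illustration.

```python
import random

def ratio_cdf(z, lam, mu):
    """P{X/Y < z}, obtained by integrating lam*mu/(lam*t + mu)**2 over 0 < t < z."""
    return lam * z / (lam * z + mu)

def simulate_ratio_cdf(z, lam, mu, trials=200_000, seed=4):
    """Estimate P{X < z*Y} for independent X ~ Exp(lam) and Y ~ Exp(mu)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        x = rng.expovariate(lam)
        y = rng.expovariate(mu)
        hits += x < z * y
    return hits / trials

print(simulate_ratio_cdf(1.5, 2.0, 3.0), ratio_cdf(1.5, 2.0, 3.0))
```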

(b) Z = XY .

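$$f_Z(z) = \int_{0}^{\infty} \frac{1}{y} f_X(z/y) f_Y(y)\,dy = \int_{0}^{\infty} \frac{\lambda\mu}{y}\,e^{-\lambda z/y} e^{-\mu y}\,dy = \int_{0}^{\infty} \frac{\lambda\mu}{y}\,e^{-\lambda z/y - \mu y}\,dy.$$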