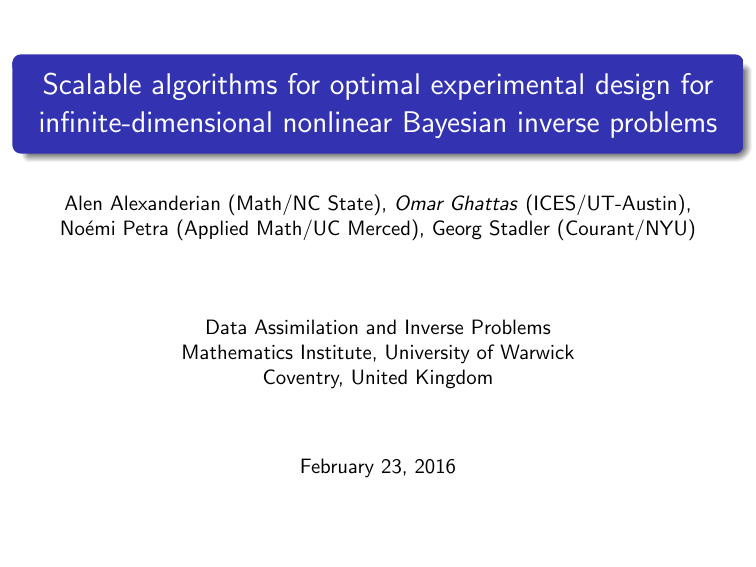

Scalable algorithms for optimal experimental design for infinite-dimensional nonlinear Bayesian inverse problems

Alen Alexanderian (Math/NC State), Omar Ghattas (ICES/UT-Austin),

Noémi Petra (Applied Math/UC Merced), Georg Stadler (Courant/NYU)

Data Assimilation and Inverse Problems

Mathematics Institute, University of Warwick

Coventry, United Kingdom

February 23, 2016

The conceptual optimal experimental design problem

Data: $d \in \mathbb{R}^q$

Model parameter field: $m = \{m(x)\}_{x \in D}$

Bayesian inverse problem:

Use data $d$ to infer the model parameter field $m$ in the Bayesian sense, i.e., update the prior state of knowledge on $m$

Optimal experimental design:

How to design the observation system for $d$ to “optimally” infer $m$?

Omar Ghattas (UT Austin)

Large-scale OED

February 23, 2016

2 / 32

Some relevant references on OED for PDEs

E. Haber, L. Horesh, L. Tenorio, Numerical methods for experimental design of large-scale linear ill-posed inverse problems, Inverse Problems, 24:125-137, 2008.

E. Haber, L. Horesh, L. Tenorio, Numerical methods for the design of large-scale nonlinear discrete ill-posed inverse problems, Inverse Problems, 26, 025002, 2010.

E. Haber, Z. Magnant, C. Lucero, L. Tenorio, Numerical methods for A-optimal designs with a sparsity constraint for ill-posed inverse problems, Computational Optimization and Applications, 1-22, 2012.

Q. Long, M. Scavino, R. Tempone, S. Wang, Fast estimation of expected information gains for Bayesian experimental designs based on Laplace approximations, Comp. Meth. in Appl. Mech. and Eng., 259:24–39, 2013.

Q. Long, M. Scavino, R. Tempone, S. Wang, A Laplace method for under-determined Bayesian optimal experimental designs, Comp. Meth. in Appl. Mech. and Eng., 285:849–876, 2015.

F. Bisetti, D. Kim, O. Knio, Q. Long, R. Tempone, Optimal Bayesian experimental design for priors of compact support with application to shocktube experiments for combustion kinetics, Intl. Journal for Num. Methods in Engineering, 2016.

X. Huan, Y. M. Marzouk, Simulation-based optimal Bayesian experimental design for nonlinear systems, Journal of Computational Physics, 232:288-317, 2013.

X. Huan, Y. M. Marzouk, Gradient-based stochastic optimization methods in Bayesian experimental design, Intl. Journal for Uncertainty Quantification, 4(6), 2014.

How we define an optimal design

[Figure panels: ground truth + sensor locations; MAP solution; variance]

Given locations $x_i$ of possible sensor sites, choose optimal weights $w_i$ for a subset (using sparsity constraints):

$$\text{design} := \begin{Bmatrix} x_1, \dots, x_{n_s} \\ w_1, \dots, w_{n_s} \end{Bmatrix}$$

Obtain data, solve Bayesian inverse problem:

data + likelihood, prior $\Longrightarrow$ posterior

We seek an A-optimal design:

minimize average posterior variance

Bayesian inverse problem in Hilbert space (A. Stuart, 2010)

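$D$: bounded domain

$\mathcal{H} = L^2(D)$

$m \in \mathcal{H}$: parameter

Parameter-to-observable map: $f : \mathcal{H} \to \mathbb{R}^q$

Additive Gaussian noise:

$$d = f(m) + \eta, \qquad \eta \sim N(0, \Gamma_{\text{noise}})$$

Likelihood:

$$\pi_{\text{like}}(d \mid m) \propto \exp\Big\{ -\frac{1}{2} (f(m) - d)^T \Gamma_{\text{noise}}^{-1} (f(m) - d) \Big\}$$

Gaussian prior measure: $\mu_{\text{prior}} = N(m_{\text{pr}}, C_{\text{prior}})$

Bayes Theorem (in infinite dimensions):

$$\frac{d\mu_{\text{post}}}{d\mu_{\text{prior}}} \propto \pi_{\text{like}}(d \mid m)$$

“$d\mu_{\text{post}} \propto \pi_{\text{like}}(d \mid m) \, d\mu_{\text{prior}}$”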

A.M. Stuart, Inverse problems: A Bayesian perspective, Acta Numerica, 2010.

The experimental design and a weighted inference problem

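$$\text{design} := \begin{Bmatrix} x_1, \dots, x_{n_s} \\ w_1, \dots, w_{n_s} \end{Bmatrix}$$

Want $w_i \in \{0, 1\}$ $\overset{\text{relax}}{\Longrightarrow}$ $0 \le w_i \le 1$ $\overset{\text{threshold}}{\Longrightarrow}$ $w_i \in \{0, 1\}$

$w$-weighted data-likelihood:

$$\pi_{\text{like}}(d \mid m; w) \propto \exp\Big\{ -\frac{1}{2} (f(m) - d)^T W_\sigma (f(m) - d) \Big\}$$

$f$: parameter-to-observable map

$W := \operatorname{diag}(w_i)$

$W_\sigma := \frac{1}{\sigma_{\text{noise}}^2} W$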
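The relax-and-threshold idea and the $w$-weighted misfit can be sketched in a few lines of NumPy. This is only an illustration: the function names, the toy residual, and the 0.5 cutoff are assumptions and do not come from the talk.

```python
import numpy as np

def weighted_misfit(residual, w, sigma_noise):
    """Return 0.5 * r^T W_sigma r with W_sigma = diag(w) / sigma_noise**2."""
    W_sigma = np.diag(w) / sigma_noise**2
    return 0.5 * residual @ W_sigma @ residual

def threshold(w, cutoff=0.5):
    """Round relaxed weights in [0, 1] to a 0/1 design (the cutoff is an assumption)."""
    return (np.asarray(w) >= cutoff).astype(float)

residual = np.array([1.0, -2.0, 0.5])   # hypothetical f(m) - d at three candidate sensors
w_relaxed = np.array([0.9, 0.1, 0.6])   # hypothetical relaxed weights, 0 <= w_i <= 1
w_binary = threshold(w_relaxed)         # sensors 1 and 3 are kept, sensor 2 is dropped
misfit = weighted_misfit(residual, w_binary, sigma_noise=0.5)
```

A sensor with $w_i = 0$ contributes nothing to the misfit, which is how the weights switch observations on and off inside the likelihood.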

A-optimal design in Hilbert space

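Covariance function: $c_{\text{post}}(x, y) = \operatorname{Cov}\{m(x), m(y)\}$

$$\text{average posterior variance} = \frac{1}{|D|} \int_D c_{\text{post}}(x, x) \, dx$$

Covariance operator:

$$[C_{\text{post}} u](x) = \int_D c_{\text{post}}(x, y) \, u(y) \, dy$$

Mercer’s Theorem:

$$\int_D c_{\text{post}}(x, x) \, dx = \operatorname{tr}(C_{\text{post}})$$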

A-optimal design criterion:

Choose a sensor configuration to minimize tr(Cpost )
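A small numerical check of the identity between the integrated pointwise variance and the operator trace may help. The sketch below assumes a one-dimensional domain $D = [0, 1]$, a hypothetical exponential covariance function, and a midpoint-rule discretization; none of these choices come from the talk.

```python
import numpy as np

n = 200
h = 1.0 / n
x = (np.arange(n) + 0.5) * h                       # midpoint grid on D = [0, 1]
c = np.exp(-np.abs(x[:, None] - x[None, :]))       # hypothetical covariance function c(x, y)

# Midpoint-rule discretization of [C u](x) = \int_D c(x, y) u(y) dy
C = c * h

integral_of_variance = np.sum(np.diag(c)) * h          # approximates \int_D c(x, x) dx
trace_from_spectrum = np.sum(np.linalg.eigvalsh(C))    # sum of eigenvalues of the discretized operator
average_variance = integral_of_variance / 1.0          # divide by |D| = 1
```

Both quantities agree up to rounding, which is the discrete counterpart of minimizing $\operatorname{tr}(C_{\text{post}})$ in place of the average posterior variance.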

Relationship to some other OED criteria

For Gaussian prior and noise, and linear parameter-to-observable map:

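Maximizing the expected information gain is equivalent to D-optimal design:

$$E_{\mu_{\text{prior}}} E_{d \mid m} \{ D_{\text{kl}}(\mu_{\text{post}}, \mu_{\text{prior}}) \} = -\frac{1}{2} \log\det \Gamma_{\text{post}} + \frac{1}{2} \log\det \Gamma_{\text{prior}}$$

Minimizing Bayes risk of posterior mean is equivalent to A-optimal design:

$$E_{\mu_{\text{prior}}} E_{d \mid m} \big\{ \| m - m_{\text{post}}(d) \|^2 \big\} = \int_{\mathcal{H}} \int_{Y} \| m - m_{\text{post}}(d) \|^2 \, \pi_{\text{like}}(d \mid m) \, dd \, \mu_{\text{prior}}(dm) = \operatorname{tr}(\Gamma_{\text{post}})$$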

For infinite dimensions, see:

A. Alexanderian, P. Gloor, and O. Ghattas, On Bayesian A- and D-optimal experimental designs in infinite dimensions, Bayesian Analysis, to appear, 2016. http://dx.doi.org/10.1214/15-BA969

Challenges

The infinite-dimensional Bayesian inverse problem is “merely” an inner problem within OED

Need trace of posterior covariance operator, which would be intractable in the large-scale setting

We approximate the posterior covariance by the inverse of the Hessian at the maximum a posteriori (MAP) point, but explicit construction of the Hessian is still very expensive

For nonlinear inverse problem, Hessian depends on data, which are not available a priori

For efficient optimization, we need derivatives of the trace of the inverse Hessian with respect to the sensor weights

We seek scalable algorithms, i.e., those whose cost (in terms of forward PDE solves) is independent of the data dimension and the parameter dimension

A-optimal design problem: Linear case

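Linear parameter-to-observable map: $f(m) := Fm$

Gaussian prior measure: $\mu_{\text{prior}} = N(m_{\text{prior}}, C_{\text{prior}})$

Gaussian prior and noise $\Longrightarrow$ Gaussian posterior

$$m_{\text{post}} = \arg\min_m \underbrace{\frac{1}{2} \big\| W^{1/2} (Fm - d) \big\|^2_{\Gamma_{\text{noise}}^{-1}} + \frac{1}{2} \big\langle C_{\text{prior}}^{-1} (m - m_{\text{prior}}), \, m - m_{\text{prior}} \big\rangle}_{J(m) \,:=\, \text{negative log-posterior}}$$

$$C_{\text{post}}(w) = H^{-1}(w), \qquad H(w) = F^* W_\sigma F + C_{\text{prior}}^{-1}$$

OED problem:

$$\underset{w}{\text{minimize}} \;\; \operatorname{tr}\Big[ \big( F^* W_\sigma F + C_{\text{prior}}^{-1} \big)^{-1} \Big] + \text{penalty}$$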

A. Alexanderian, N. Petra, G. Stadler, O. Ghattas, A-optimal design of experiments for infinite-dimensional Bayesian linear inverse problems with regularized $\ell_0$-sparsification, SIAM Journal on Scientific Computing, 36(5):A2122–A2148, 2014.
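For a small discretized problem, the linear A-optimal objective can be evaluated directly with dense linear algebra. The sketch below assumes a random matrix as a stand-in for $F$, an identity prior covariance, and $\Gamma_{\text{noise}} = \sigma_{\text{noise}}^2 I$; the function name is hypothetical, and explicit inverses like this are only feasible at toy scale.

```python
import numpy as np

def posterior_trace(F, w, sigma_noise, C_prior):
    """tr[(F^T W_sigma F + C_prior^{-1})^{-1}] for a discretized linear problem."""
    W_sigma = np.diag(w) / sigma_noise**2
    H = F.T @ W_sigma @ F + np.linalg.inv(C_prior)
    return np.trace(np.linalg.inv(H))

rng = np.random.default_rng(0)
n_param, n_sensors = 6, 4
F = rng.standard_normal((n_sensors, n_param))   # hypothetical forward map
C_prior = np.eye(n_param)                       # hypothetical prior covariance

no_sensors = posterior_trace(F, np.zeros(n_sensors), 0.1, C_prior)
one_sensor = posterior_trace(F, np.array([1.0, 0.0, 0.0, 0.0]), 0.1, C_prior)
all_sensors = posterior_trace(F, np.ones(n_sensors), 0.1, C_prior)
```

With all weights zero the objective equals the prior trace, and switching sensors on can only reduce it, so the penalty term is what keeps the optimizer from activating every sensor.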

A-optimal design problem: Nonlinear case

Nonlinear case: Gaussian prior and noise $\not\Longrightarrow$ Gaussian posterior

Use Gaussian approximation to posterior: for given $w$ and $d$

Compute the maximum a posteriori probability (MAP) estimate $m_{\text{MAP}}(w; d)$

$$m_{\text{MAP}}(w; d) := \arg\min_m J(m, w; d)$$

Gaussian approximation to posterior at MAP point

$$N\Big( m_{\text{MAP}}(w; d), \; H^{-1}\big( m_{\text{MAP}}(w; d), w; d \big) \Big)$$

Problem: data d not available a priori

A-optimal designs for nonlinear inverse problems

General formulation: minimize expected average posterior variance:

$$\underset{w}{\text{minimize}} \;\; E_{\mu_{\text{prior}}} E_{d \mid m} \Big\{ \operatorname{tr}\Big[ H^{-1}\big( m_{\text{MAP}}(w; d), w; d \big) \Big] \Big\} + \gamma P(w)$$

$P(w)$: sparsifying penalty function

In practice: get data from a few training models $m_1, \dots, m_{n_d}$

$m_i$: draws from prior

Training data:

$$d_i = f(m_i) + \eta_i, \qquad i = 1, \dots, n_d$$

The problem to solve in practice:

$$\underset{w}{\text{minimize}} \;\; \frac{1}{n_d} \sum_{i=1}^{n_d} \operatorname{tr}\Big[ H^{-1}\big( m_{\text{MAP}}(w; d_i), w; d_i \big) \Big] + \gamma P(w)$$

Randomized trace estimation

$A \in \mathbb{R}^{n \times n}$, symmetric

Trace estimator:

$$\operatorname{tr}(A) \approx \frac{1}{n_{tr}} \sum_{i=1}^{n_{tr}} z_i^T A z_i, \qquad z_i \text{: random vectors}$$

Gaussian trace estimator: $z_i$ independent draws from $N(0, I)$

For $z \sim N(0, I)$:

$$E\{ z^T A z \} = \operatorname{tr}(A), \qquad \operatorname{Var}\{ z^T A z \} = 2 \| A \|_F^2$$

Clustered eigenvalues $\Longrightarrow$ Good approximation with small $n_{tr}$

Efficient means of approximating trace of inverse Hessian

M. Hutchinson, A stochastic estimator of the trace of the influence matrix for Laplacian smoothing splines (1990).

H. Avron and S. Toledo, Randomized algorithms for estimating the trace of an implicit symmetric positive semi-definite matrix (2011).
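The Gaussian trace estimator only needs matrix-vector products, which is what makes it usable when $A$ is available only through its action. A minimal sketch follows; the test matrix with eigenvalues clustered near 1 and the function name are assumptions made for illustration.

```python
import numpy as np

def gaussian_trace_estimate(apply_A, n, n_tr, rng):
    """Average z^T A z over n_tr Gaussian probes; apply_A(z) must return A @ z."""
    total = 0.0
    for _ in range(n_tr):
        z = rng.standard_normal(n)
        total += z @ apply_A(z)
    return total / n_tr

rng = np.random.default_rng(0)
n, n_tr = 50, 2000
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))   # random orthogonal basis
eigs = 1.0 + 0.01 * rng.standard_normal(n)         # hypothetical clustered spectrum
A = (Q * eigs) @ Q.T                               # symmetric test matrix Q diag(eigs) Q^T

estimate = gaussian_trace_estimate(lambda z: A @ z, n, n_tr, rng)
std_error = np.sqrt(2.0 / n_tr) * np.linalg.norm(A, "fro")   # from Var{z^T A z} = 2 ||A||_F^2
```

The standard error shrinks like $1/\sqrt{n_{tr}}$, so the number of probes trades accuracy against the number of applications of $A$.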

Optimization problem for computing A-optimal design

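OED objective function:

$$\Psi(w) = \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \big\langle z_k, \underbrace{H^{-1}(w; d_i) \, z_k}_{y_{ik}} \big\rangle$$

Auxiliary variable: $y_{ik} = H^{-1}(w; d_i) \, z_k$

OED optimization problem

$$\underset{w}{\text{minimize}} \;\; \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \langle z_k, y_{ik} \rangle + \gamma P(w)$$

where

$$m_{\text{MAP}}(w; d_i) = \arg\min_m J(m, w; d_i), \qquad i = 1, \dots, n_d$$

$$H\big( m_{\text{MAP}}(w; d_i), w; d_i \big) \, y_{ik} = z_k, \qquad i = 1, \dots, n_d, \quad k = 1, \dots, n_{tr}$$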
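The structure of this objective, one Hessian solve per training datum and per probe vector, can be sketched with a hand-written conjugate gradient loop that only uses Hessian actions. The dense SPD matrices standing in for the Hessians $H(m_{\text{MAP}}(w; d_i), w; d_i)$ and all function names are assumptions; in the PDE setting each Hessian action would itself require PDE solves.

```python
import numpy as np

def conjugate_gradient(apply_H, b, tol=1e-10, max_iter=500):
    """Solve H y = b for symmetric positive definite H given only its action."""
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rs = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * np.linalg.norm(b):
            break
        Hp = apply_H(p)
        alpha = rs / (p @ Hp)
        x += alpha * p
        r -= alpha * Hp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

def oed_objective(hessian_actions, probes):
    """Psi = 1/(n_d n_tr) * sum_i sum_k <z_k, y_ik>, where H_i y_ik = z_k."""
    total = 0.0
    for apply_H in hessian_actions:        # one Hessian per training datum d_i
        for z in probes:                   # the same probes z_k are reused for every i
            total += z @ conjugate_gradient(apply_H, z)
    return total / (len(hessian_actions) * len(probes))

rng = np.random.default_rng(1)
n = 8
H_list = []
for _ in range(2):                         # n_d = 2 hypothetical Hessians
    M = rng.standard_normal((n, n))
    H_list.append(M @ M.T + n * np.eye(n))
probes = [rng.standard_normal(n) for _ in range(5)]   # n_tr = 5
psi = oed_objective([lambda v, H=H: H @ v for H in H_list], probes)
```

Reusing the same probes $z_k$ for every $i$ keeps $\Psi(w)$ a deterministic function of $w$, which is what a gradient-based optimizer needs.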

Application: subsurface flow

Forward problem

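$$\begin{aligned} -\nabla \cdot (e^m \nabla u) &= f && \text{in } D \\ u &= g && \text{on } \Gamma_D \\ e^m \nabla u \cdot n &= h && \text{on } \Gamma_N \end{aligned}$$

$u$: pressure field

$m$: log-permeability field (inversion parameter)

[Figure: left, true parameter; right, pressure field]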
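To make the forward map concrete, here is a one-dimensional finite-difference analogue of the pressure equation. It is a simplified sketch, not the discretization used in the talk: it assumes $D = [0, 1]$, Dirichlet data at both ends (no Neumann boundary), a uniform grid, and face permeabilities taken as the exponential of the averaged log-permeability. The function name is hypothetical.

```python
import numpy as np

def solve_darcy_1d(m, f, g_left=0.0, g_right=0.0):
    """Solve -(e^m u')' = f on [0, 1] with u(0) = g_left, u(1) = g_right.

    m: log-permeability at the n + 1 grid nodes; f: source at the n - 1 interior nodes.
    """
    n = len(m) - 1
    h = 1.0 / n
    k_face = np.exp(0.5 * (m[:-1] + m[1:]))          # permeability on the n cell faces
    main = (k_face[:-1] + k_face[1:]) / h**2         # diagonal for interior nodes
    off = -k_face[1:-1] / h**2                       # coupling between neighboring interior nodes
    A = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    rhs = np.asarray(f, dtype=float).copy()
    rhs[0] += k_face[0] * g_left / h**2              # move known boundary values to the right-hand side
    rhs[-1] += k_face[-1] * g_right / h**2
    u = np.empty(n + 1)
    u[0], u[-1] = g_left, g_right
    u[1:-1] = np.linalg.solve(A, rhs)
    return u

n = 20
x = np.linspace(0.0, 1.0, n + 1)
u = solve_darcy_1d(m=np.zeros(n + 1), f=np.ones(n - 1))   # m = 0 gives -u'' = 1
```

For $m = 0$ and $f = 1$ the exact solution is $u(x) = x(1 - x)/2$, which this scheme reproduces at the grid nodes; observations at sensor locations would then be point evaluations of $u$.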

Bayesian inverse problem: Gaussian approximation

MAP point is solution to

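$$\underset{m}{\text{minimize}} \;\; J(m, w; d) := \frac{1}{2} (Bu - d)^T W_\sigma (Bu - d) + \frac{1}{2} \langle m - m_{\text{prior}}, \, m - m_{\text{prior}} \rangle_E$$

where

$$\begin{aligned} -\nabla \cdot (e^m \nabla u) &= f && \text{in } D \\ u &= g && \text{on } \Gamma_D \\ e^m \nabla u \cdot n &= 0 && \text{on } \Gamma_N \end{aligned}$$

$B$ is observation operator

Cameron-Martin inner-product:

$$\langle m_1, m_2 \rangle_E = \big\langle C_{\text{prior}}^{-1/2} m_1, \, C_{\text{prior}}^{-1/2} m_2 \big\rangle, \qquad m_1, m_2 \in \operatorname{range}(C_{\text{prior}}^{1/2})$$

Covariance of Gaussian approximation to posterior:

$$C = H(m, w; d)^{-1} = \big[ \nabla_m^2 J(m, w; d) \big]^{-1} \quad \text{with } m = m_{\text{MAP}}(w; d)$$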

Optimization problem for A-optimal design

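$$\underset{w \in [0,1]^{n_s}}{\text{minimize}} \;\; \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \langle z_k, y_{ik} \rangle + \gamma P(w)$$

where for $i = 1, \dots, n_d$, $k = 1, \dots, n_{tr}$

$$\begin{aligned} -\nabla \cdot (e^{m_i} \nabla u_i) &= f \\ -\nabla \cdot (e^{m_i} \nabla p_i) &= -B^* W_\sigma (B u_i - d_i) \\ \underbrace{C_{\text{prior}}^{-1} (m_i - m_{\text{pr}}) + e^{m_i} \nabla u_i \cdot \nabla p_i}_{\nabla_m J(m_i)} &= 0 \\ -\nabla \cdot (e^{m_i} \nabla v_{ik}) &= \nabla \cdot (y_{ik} e^{m_i} \nabla u_i) \\ -\nabla \cdot (e^{m_i} \nabla q_{ik}) &= \nabla \cdot (y_{ik} e^{m_i} \nabla p_i) - B^* W_\sigma B v_{ik} \\ \underbrace{C_{\text{prior}}^{-1} y_{ik} + y_{ik} e^{m_i} \nabla u_i \cdot \nabla p_i + e^{m_i} \big( \nabla v_{ik} \cdot \nabla p_i + \nabla u_i \cdot \nabla q_{ik} \big)}_{H(m_i) \, y_{ik}} &= z_k \end{aligned}$$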

The Lagrangian for the OED problem

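$$\begin{aligned} \mathcal{L}^{\text{OED}} & \big( w, \{u_i\}, \{m_i\}, \{p_i\}, \{v_{ik}\}, \{q_{ik}\}, \{y_{ik}\}, \{u_i^*\}, \{m_i^*\}, \{p_i^*\}, \{v_{ik}^*\}, \{q_{ik}^*\}, \{y_{ik}^*\} \big) \\ := {} & \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \langle z_k, y_{ik} \rangle + \gamma P(w) \\ & + \sum_{i=1}^{n_d} \Big[ \langle e^{m_i} \nabla u_i, \nabla u_i^* \rangle - \langle f, u_i^* \rangle - \langle h, u_i^* \rangle_{\Gamma_N} \Big] \\ & + \sum_{i=1}^{n_d} \Big[ \langle e^{m_i} \nabla p_i, \nabla p_i^* \rangle + \langle B^* W_\sigma (B u_i - d_i), p_i^* \rangle \Big] \\ & + \sum_{i=1}^{n_d} \Big[ \langle m_i - m_{\text{pr}}, m_i^* \rangle_E + \langle m_i^* e^{m_i} \nabla u_i, \nabla p_i \rangle \Big] \\ & + \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \Big[ \langle e^{m_i} \nabla v_{ik}, \nabla v_{ik}^* \rangle + \langle y_{ik} e^{m_i} \nabla u_i, \nabla v_{ik}^* \rangle \Big] \\ & + \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \Big[ \langle e^{m_i} \nabla q_{ik}, \nabla q_{ik}^* \rangle + \langle y_{ik} e^{m_i} \nabla p_i, \nabla q_{ik}^* \rangle + \langle B^* W_\sigma B v_{ik}, q_{ik}^* \rangle \Big] \\ & + \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \Big[ \langle y_{ik}^* e^{m_i} \nabla v_{ik}, \nabla p_i \rangle + \langle y_{ik}, y_{ik}^* \rangle_E + \langle y_{ik}^* e^{m_i} \nabla u_i, \nabla q_{ik} \rangle + \langle y_{ik}^* y_{ik} e^{m_i} \nabla u_i, \nabla p_i \rangle - \langle z_k, y_{ik}^* \rangle \Big] \end{aligned}$$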

The adjoint OED problem and the gradient

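The adjoint OED problem for $\{u_i^*\}, \{m_i^*\}, \{p_i^*\}, \{v_{ik}^*\}, \{q_{ik}^*\}, \{y_{ik}^*\}$:

$$\begin{aligned} B^* W_\sigma B q_{ik}^* - \nabla \cdot (y_{ik}^* e^{m_i} \nabla p_i) - \nabla \cdot (e^{m_i} \nabla v_{ik}^*) &= 0 \\ e^{m_i} \nabla q_{ik}^* \cdot \nabla p_i + C_{\text{prior}}^{-1} y_{ik}^* + y_{ik}^* e^{m_i} \nabla u_i \cdot \nabla p_i + e^{m_i} \nabla u_i \cdot \nabla v_{ik}^* &= -\frac{1}{n_d n_{tr}} z_k \\ -\nabla \cdot (e^{m_i} \nabla q_{ik}^*) - \nabla \cdot (y_{ik}^* e^{m_i} \nabla u_i) &= 0 \\ B^* W_\sigma B p_i^* - \nabla \cdot (m_i^* e^{m_i} \nabla p_i) - \nabla \cdot (e^{m_i} \nabla u_i^*) &= b_{1i} \\ e^{m_i} \nabla p_i^* \cdot \nabla p_i + C_{\text{prior}}^{-1} m_i^* + m_i^* e^{m_i} \nabla u_i \cdot \nabla p_i + e^{m_i} \nabla u_i \cdot \nabla u_i^* &= b_{2i} \\ -\nabla \cdot (e^{m_i} \nabla p_i^*) - \nabla \cdot (m_i^* e^{m_i} \nabla u_i) &= b_{3i} \end{aligned}$$

Gradient for the OED problem (with $\odot$ the elementwise product over sensors):

$$g(w) = \sum_{i=1}^{n_d} \Gamma_{\text{noise}}^{-1} (B u_i - d_i) \odot B p_i^* + \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \Gamma_{\text{noise}}^{-1} B v_{ik} \odot B q_{ik}^*$$

$q_{ik}^*$, $y_{ik}^*$, and $v_{ik}^*$ can be eliminated. Comparing the first three adjoint equations with the equations for $(v_{ik}, q_{ik}, y_{ik})$ gives, up to the scaling $\frac{1}{n_d n_{tr}}$ inherited from the right-hand side $-\frac{1}{n_d n_{tr}} z_k$:

$$q_{ik}^* = -\frac{1}{n_d n_{tr}} v_{ik}, \qquad y_{ik}^* = -\frac{1}{n_d n_{tr}} y_{ik}, \qquad v_{ik}^* = -\frac{1}{n_d n_{tr}} q_{ik}$$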

Computing the OED objective function and its gradient

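Solve inverse problems: $m_i(w)$, $u_i(m_i(w))$ and $p_i(m_i(w))$, $i = 1, \dots, n_d$

Solve for $(v_{ik}, y_{ik}, q_{ik})$, $i = 1, \dots, n_d$, $k = 1, \dots, n_{tr}$:

$$\begin{aligned} -\nabla \cdot (e^{m_i} \nabla v_{ik}) &= \nabla \cdot (y_{ik} e^{m_i} \nabla u_i) \\ -\nabla \cdot (e^{m_i} \nabla q_{ik}) &= \nabla \cdot (y_{ik} e^{m_i} \nabla p_i) - B^* W_\sigma B v_{ik} \\ C_{\text{prior}}^{-1} y_{ik} + y_{ik} e^{m_i} \nabla u_i \cdot \nabla p_i + e^{m_i} \big( \nabla v_{ik} \cdot \nabla p_i + \nabla u_i \cdot \nabla q_{ik} \big) &= z_k \end{aligned}$$

Objective function evaluation:

$$\Psi(w) = \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \langle z_k, y_{ik} \rangle$$

Solve for $(p_i^*, m_i^*, u_i^*)$, $i = 1, \dots, n_d$:

$$\begin{aligned} B^* W_\sigma B p_i^* - \nabla \cdot (m_i^* e^{m_i} \nabla p_i) - \nabla \cdot (e^{m_i} \nabla u_i^*) &= b_{1i} \\ e^{m_i} \nabla p_i^* \cdot \nabla p_i + C_{\text{prior}}^{-1} m_i^* + m_i^* e^{m_i} \nabla u_i \cdot \nabla p_i + e^{m_i} \nabla u_i \cdot \nabla u_i^* &= b_{2i} \\ -\nabla \cdot (e^{m_i} \nabla p_i^*) - \nabla \cdot (m_i^* e^{m_i} \nabla u_i) &= b_{3i} \end{aligned}$$

Gradient (with $\odot$ the elementwise product over sensors):

$$g(w) = \sum_{i=1}^{n_d} \Gamma_{\text{noise}}^{-1} (B u_i - d_i) \odot B p_i^* - \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \Gamma_{\text{noise}}^{-1} B v_{ik} \odot B v_{ik}$$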

Computational cost: the number of PDE solves

r: rank of Hessian of the data misfit

Independent of mesh (beyond a certain resolution)

Independent of sensor dimension (beyond a certain dimension)

Cost (in PDE solves) of evaluating OED objective function & gradient

1. Cost of solving inverse problems $\sim 2 \times r \times n_d \times N_{\text{newton}}$

2. Cost of computing OED objective $\sim 2 \times r \times n_{tr} \times n_d$

3. Cost of computing OED gradient $\sim 2 \times n_{tr} \times n_d + 2 \times r \times n_d$

Low-rank approx to misfit Hessian $\Longrightarrow$ Cost in (2) and (3) $\sim 2 \times r \times n_d$

OED optimization problem:

Solved via quasi-Newton interior point

Number of quasi-Newton iterations insensitive to parameter/sensor dimension
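The leading-order counts above are easy to tabulate for a given configuration. In the sketch below the function name and the values $r = 30$ and $N_{\text{newton}} = 10$ are purely hypothetical, chosen only to show the arithmetic; $n_d = 5$ and $n_{tr} = 20$ match the values quoted later in the talk.

```python
def pde_solve_counts(r, n_d, n_tr, n_newton):
    """Leading-order PDE-solve counts, copied from the three estimates above."""
    return {
        "inverse_problems": 2 * r * n_d * n_newton,
        "objective": 2 * r * n_tr * n_d,
        "gradient": 2 * n_tr * n_d + 2 * r * n_d,
        # with a low-rank approximation of the misfit Hessian, steps (2) and (3) scale like:
        "low_rank_steps_2_and_3": 2 * r * n_d,
    }

# r and n_newton are hypothetical values, not figures reported in the talk
counts = pde_solve_counts(r=30, n_d=5, n_tr=20, n_newton=10)
```

None of the counts involves the mesh size or the number of candidate sensors directly; they enter only through $r$, which the talk states is independent of both beyond a certain resolution.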

The prior and training models

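Prior knowledge: parameter value at few points, correlation

[Figure panels: truth; prior mean; prior variance]

Prior mean: “smooth” least-squares fit to point measurements

$$m_{\text{pr}} = \arg\min_m \; \frac{1}{2} \langle m, Am \rangle + \frac{\alpha}{2} \sum_{i=1}^{N} \int_D \delta_i(x) \big( m(x) - m_{\text{true}}(x) \big)^2 \, dx$$

Prior covariance: $C_{\text{prior}} = \big( A + \alpha \sum_{i=1}^{N} \delta_i \big)^{-2}$, $\quad Am = -\nabla \cdot (D \nabla m)$

Draws from prior used to generate training data for OED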

Computing an optimal design with sparsification

Optimization problem

$$\underset{w}{\text{minimize}} \;\; \frac{1}{n_d n_{tr}} \sum_{i=1}^{n_d} \sum_{k=1}^{n_{tr}} \langle z_k, y_{ik} \rangle + \gamma P_\varepsilon(w)$$

where

$$m_{\text{MAP}}(w; d_i) = \arg\min_m J(m, w; d_i)$$

$$H\big( m_{\text{MAP}}(w; d_i), w; d_i \big) \, y_{ik} = z_k$$

$n_d = 5$, $n_{tr} = 20$

Continuation $\Rightarrow$ $\ell_0$-penalty

$$P_\varepsilon(w) := \sum_{i=1}^{n_s} f_\varepsilon(w_i)$$

[Figures: the functions $f_\varepsilon$; the optimal weight vector $w$]

Solve the problem with $\varepsilon \to 0$
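The continuation idea can be illustrated with a stand-in penalty family. The talk shows the functions $f_\varepsilon$ only as a figure, so the formula below, $\min(w/\varepsilon, 1)$, is a hypothetical choice and not the authors' function: it equals the $\ell_1$ penalty at $\varepsilon = 1$ and approaches the count of nonzero weights as $\varepsilon \to 0$. A practical scheme would also need a differentiable $f_\varepsilon$ for gradient-based optimization.

```python
import numpy as np

def f_eps(w, eps):
    """Hypothetical penalty function on [0, 1]: min(w / eps, 1); equals w when eps = 1."""
    return np.minimum(np.asarray(w, dtype=float) / eps, 1.0)

def penalty(w, eps):
    """P_eps(w) = sum_i f_eps(w_i)."""
    return float(np.sum(f_eps(w, eps)))

w = np.array([0.0, 0.3, 1.0, 0.05])       # hypothetical relaxed weight vector
p_l1 = penalty(w, 1.0)                    # eps = 1: plain sum of the weights
p_mid = penalty(w, 0.1)                   # intermediate eps: small weights are penalized more heavily
p_l0 = penalty(w, 1e-6)                   # eps near 0: counts the nonzero weights
```

Decreasing $\varepsilon$ in stages, and warm-starting each solve from the previous weights, is one way to read "continuation" here.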

Effectiveness of the optimal design

Numerical test: how well can we recover the “true model” from “true data”?

[Figure: designs with 10 sensors; panels Optimal, Random, Truth; comparing MAP point (top row) and posterior variance (bottom row)]

Effectiveness of the optimal design

Numerical test: how well can we recover the “true model” from “true data”?

[Figure: $\operatorname{tr} \Gamma_{\text{post}}(w)$ versus $E_{\text{rel}}(w)$ for random and optimal designs; panels: 10 sensor designs, 20 sensor designs]

$$d = f(m_{\text{true}}) + \eta, \qquad \Gamma_{\text{post}}(w) := H^{-1}\big( m_{\text{MAP}}(w; d), w; d \big)$$

$$E_{\text{rel}}(w) := \frac{\| m_{\text{MAP}}(w; d) - m_{\text{true}} \|}{\| m_{\text{true}} \|}$$

Effectiveness of the optimal design

Numerical test: how well do we do on average?

[Figure: $\Psi(w)$ versus $\overline{E}_{\text{rel}}(w)$ for random and optimal designs; panels: 10 sensor designs, 20 sensor designs]

$$\overline{E}_{\text{rel}}(w) = \frac{1}{n_d'} \sum_{i=1}^{n_d'} \frac{\| m_{\text{MAP}}(w; d_i) - m_i \|}{\| m_i \|}$$

$$\Psi(w) = \frac{1}{n_d'} \sum_{i=1}^{n_d'} \operatorname{tr}\Big[ H^{-1}\big( m_{\text{MAP}}(w; d_i), w; d_i \big) \Big]$$

$d_i$ generated from $n_d' = 50$ draws of $m_i$ from the prior (different from the $n_d = 5$ used to solve the OED problem)

Scalability with respect to parameter/sensor dimension

[Figure: two rows of plots against parameter dimension (left) and sensor dimension (right). Row "obj func/grad evaluation": inner CG iters and outer CG. Row "OED optimization": BFGS iterations]

Inversion of a realistic permeability field

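$$\begin{aligned} -\nabla \cdot (e^m \nabla u) &= f && \text{in } D \\ u &= 0 && \text{on } \Gamma_D \\ e^m \nabla u \cdot n &= 0 && \text{on } \Gamma_N \end{aligned}$$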

m

ΓD : at the corners

ΓN : the rest of boundary

Point source at center

Log-permeability field from SPE Comparative Solution Project

Injection well at the center

Production wells at four corners

Parameter (and state) dimension: $n = 10{,}202$

Potential sensor locations: $n_s = 128$

SPE model: the prior

[Figure panels: mean; standard deviation; random draw]

SPE model: inference with the optimal design

[Figure panels: posterior samples; truth; posterior mean]

SPE model: Compare optimal vs random design

[Figure: designs with 32 sensors; one optimal-design panel and two random-design panels]

Optimal: average var = 1.8824

Random: average var = 2.4722; average var = 2.7206

Observed data collected using true parameter on high resolution mesh ($n \sim 2.4 \times 10^5$)

Challenges revisited

Infinite-dimensional inference

Proper choice of prior, mass-weighted inner-product

Need trace of posterior covariance (inverse of Hessian, large, dense, expensive matvecs)

Randomized trace-estimator, Gaussian approximation in nonlinear case

In nonlinear inverse problem, Hessian depends on data (not available a priori)

Random draws from prior to generate training data

Optimal design has combinatorial complexity in general

w-weighted formulation, relax weights 0 ≤ wj ≤ 1, continuation to get 0–1

Conventional OED algorithms intractable for large-scale problems

Infinite-dimensional formulation, gradient based optimization, scalable algorithms

Gaussian assumption (on posterior of inverse problem) remains via linearized parameter-to-observable map; current work is aimed at higher-order approximation

A. Alexanderian, N. Petra, G. Stadler, and O. Ghattas, A fast and scalable method for A-optimal design of experiments for infinite-dimensional Bayesian nonlinear inverse problems, SIAM Journal on Scientific Computing, 38(1):A243–A272, 2016. http://dx.doi.org/10.1137/140992564
